KonradMolner: Difference between revisions


== Introduction ==

Immersive and experiential computing systems are entering the consumer market and have the potential to profoundly impact our society. Applications of these systems range from entertainment, education, collaborative work, simulation, and training to telesurgery, phobia treatment, and basic vision research. In every immersive experience, the primary interface between the user and the digital world is the near-eye display. Thus, developing near-eye display systems that provide a high-quality user experience is of the utmost importance. Many characteristics of near-eye displays that define the quality of an experience, such as resolution, refresh rate, contrast, and field of view, have improved significantly in recent years. However, a potentially significant source of visual discomfort remains: the vergence-accommodation conflict.

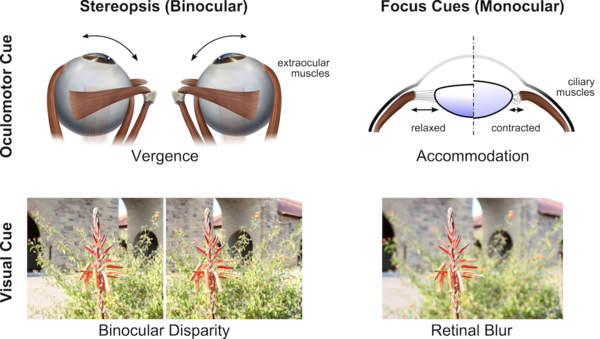

In the natural environment, the human visual system relies on a variety of cues to determine the 3D layout of a scene. Vergence and accommodation are two different oculomotor cues that the human visual system can use to estimate the absolute and relative distances of objects. Vergence refers to the relative rotation angle of the two eyeballs. When we fixate on a nearby object, our eyes rotate inwards, whereas the opposite happens when we fixate on far objects. The brain uses the relaxation or contraction of the extraocular muscles that physically rotate the eyeball as a cue for the absolute distance of the fixated object (see Fig. x). The associated visual cue is known as binocular disparity, the relative displacement between the images of a 3D scene point projected onto the two retinas. Together, vergence and disparity make up stereopsis, which is generally considered a strong depth cue, especially for objects at intermediate distances (i.e., 1-10 m).
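The dependence of vergence on fixation distance can be illustrated with a small calculation. This is only a sketch: it assumes symmetric fixation straight ahead and an interpupillary distance of 63 mm, a value chosen for illustration and not taken from this project.

```python
import math

def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when both eyes fixate a point
    straight ahead at distance_m. ipd_m is an assumed interpupillary distance."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

# Vergence angle shrinks as the fixated object moves farther away.
for d in (0.25, 1.0, 4.0, 10.0):
    print(f"{d:5.2f} m -> {vergence_angle_deg(d):.2f} deg")
```

The angle changes quickly at near distances and very little beyond a few meters, which matches the description of vergence as an absolute distance cue that weakens with distance.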

[[File:Perception.png|600px | right | thumb | caption | Overview of relevant depth cues. Vergence and accommodation are oculomotor cues whereas binocular disparity and retinal blur are visual cues. In normal viewing conditions, disparity drives vergence and blur drives accommodation.]]

Accommodation is an absolute monocular depth cue that refers to the state of the ciliary muscles, which focus the crystalline lens in each eye. As with vergence, the state of these muscles varies as a function of the distance to the point at which the eyes are focused. Accommodation and its associated visual cue, retinal blur or perceived depth of field (DOF), together make up the focus cues, which are particularly useful for depth perception of nearby objects.

The brain is “wired” to interpret all of these visual and oculomotor stimuli in a consistent manner, because that is what a person with normal vision experiences in the physical world. Unfortunately, all commercially available near-eye displays produce conflicting depth cues, referred to as the vergence-accommodation conflict or VAC. Displaying a stereoscopic image pair on a near-eye display creates binocular disparity, which drives the vergence of the eyes to a simulated object distance. However, conventional near-eye displays use static magnifying optics to create a virtual image of a physical micro-display that is perceived at a fixed optical distance that cannot be changed in software. Hence, retinal blur drives the user's accommodation to the virtual image (for reference, 1.3 m for the Oculus Rift). The discrepancy between these depth cues (vergence and accommodation) hinders visual performance [Hoffman et al. 2008], creates visual discomfort and fatigue, and compromises visual clarity [Shibata et al. 2011].

In this work, we explore a new potential way of tackling the VAC. We hypothesize that the parallax on the retina due to eye movements during fixation can be used to drive accommodation to arbitrary distances. Accommodation is driven by the retinal blur cue, which is created by objects in the scene exhibiting parallax over the pupil, and therefore over the retina. An out-of-focus object is one that exhibits a “large” amount of parallax on the retina: the images perceived by pinhole cameras on opposite sides of the pupil do not overlap perfectly on the retina, giving the impression of a blurred object. An in-focus object is one that (theoretically) exhibits no parallax, so the images from opposing sides of the pupil overlap perfectly on the retina. When one changes focus, the crystalline lens of the eye changes shape, changing which objects in the scene overlap on the retina.

We hypothesize that we can simulate the parallax exhibited in the real world by displaying different images to different points on the retina, taking advantage of eye drift during fixation. As initial steps towards this goal, we begin by understanding microsaccades in this context. Using microsaccade models from the literature, we generate our own model of the rotation of the eye and simulate the amount of parallax generated on the retina using an OpenGL and ISETBIO pipeline. Using this simulation pipeline, we explore the importance of microsaccades as depth cues, as well as the amount of parallax that the rotations generate on the retina.

== Background ==

===Near-eye Displays with Focus Cues===

Generally, existing focus-supporting displays can be divided into several classes: adaptive focus, volumetric, light field, and holographic displays.

Two-dimensional adaptive focus displays do not produce correct focus cues: the virtual image of a single display plane is presented to each eye, just as in conventional near-eye displays. However, the system is capable of dynamically adjusting the distance of the observed image, either by physically actuating the screen [Sugihara and Miyasato 1998] or by using focus-tunable optics [Konrad et al. 2016]. Because this technology only allows the distance of the entire virtual image to be adjusted at once, the correct focal distance at which to place the display depends on the point in the simulated 3D scene that the user is looking at.

Three-dimensional volumetric and multi-plane displays represent the most common approach to focus-supporting near-eye displays. Instead of using 2D display primitives at some fixed distance to the eye, volumetric displays either mechanically or optically scan out the 3D space of possible light-emitting display primitives in front of each eye [Schowengerdt and Seibel 2006]. Multi-plane displays approximate this volume using a few virtual planes [Dolgoff 1997; Akeley et al. 2004; Rolland et al. 2000; Llull et al. 2015].

Four-dimensional light field and holographic displays aim to synthesize the full 4D light field in front of each eye. Conceptually, this approach allows parallax over the entire eyebox to be accurately reproduced, including monocular occlusions, specular highlights, and other effects that cannot be reproduced by volumetric displays. However, current-generation light field displays provide limited resolution [Lanman and Luebke 2013; Hua and Javidi 2014; Huang et al. 2015], whereas holographic displays suffer from speckle and have extreme requirements on pixel size that are not met by today's near-eye displays. Our proposed display mode falls into the light field category.

===Microsaccades===

Microsaccades are a specific type of fixational eye movement, characterized by a large, high-angular-velocity rotation that follows a period of smaller, less directed random eye motion. Microsaccades exhibit a preference for horizontal and vertical movement and occur simultaneously, suggesting a common source of generation [Zuber et al.]. They are generally binocular, and the velocity of the saccade is directly proportional to the distance the eye has wandered during fixation.

While microsaccades have been documented across a wide range of research studies, there are no formal definitions or measurement thresholds for them. Definitions of microsaccades change depending on what best fits the context of the study [Martinez-Conde]. Across the literature, angular velocities vary between 8°/sec and 40°/sec, frequencies vary between 0.5 Hz and 2 Hz, and angular rotations range from 25′ to 1.5°.

== Methods ==

Our project relies heavily on simulation to better understand the depth cues and parallax afforded during a microsaccade. We use the ISETBIO Toolbox for MATLAB to model the human eye optics and to quantify the lateral disparity between objects at different depths during a microsaccade. To simulate physically accurate rotations of the eye during eye drift, we render images in OpenGL, which we then use as the stimulus in ISETBIO.

===OpenGL Pipeline===

OpenGL is a cross-platform API for rendering 2D and 3D vector graphics. Using OpenGL, we render 3D scenes with parameters similar to those of the eye. We generate a rotational model of the eye that represents what an eye might “see” during eye drift. We render the viewpoint from the center of the pupil, making the strong assumption that the pupil is infinitely small (i.e., the pinhole model of the eye). We render two line stimuli at distances of 25 cm and 4 m from the camera. The stimuli are scaled to have the same perceived size. To evaluate different amounts of drift, we re-render the scene for each eye rotation angle, using 0.2° horizontal increments up to a maximum rotation of 1°. We project the 3D scene onto a plane at a distance d = 1 m from the retina, with a 2° field of view, indicated by θ. We then use ISETBIO, as described in the next section, to measure the amount of parallax generated on the retina itself.
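The rendering parameters above imply a few simple quantities, sketched here in Python. The function names are illustrative and are not taken from the project's OpenGL code; the sketch only assumes a symmetric field of view and that perceived size scales linearly with distance.

```python
import math

def plane_width_m(distance_m, fov_deg):
    """Width of the projection plane that spans fov_deg at distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(fov_deg) / 2.0)

def rotation_steps_deg(step_deg=0.2, max_deg=1.0):
    """Eye rotation angles at which the scene is re-rendered."""
    n = round(max_deg / step_deg)
    return [round(i * step_deg, 10) for i in range(n + 1)]

def size_scale(near_m, far_m):
    """Factor by which the far stimulus must be enlarged to subtend
    the same visual angle as the near stimulus."""
    return far_m / near_m

print(plane_width_m(1.0, 2.0))   # plane width in meters for d = 1 m, theta = 2 deg
print(rotation_steps_deg())      # rotation angles in degrees
print(size_scale(0.25, 4.0))     # far line is this many times larger than the near line
```

Under these assumptions the projection plane is roughly 3.5 cm wide, and the 4 m stimulus must be 16 times the physical size of the 25 cm stimulus to appear equally large.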

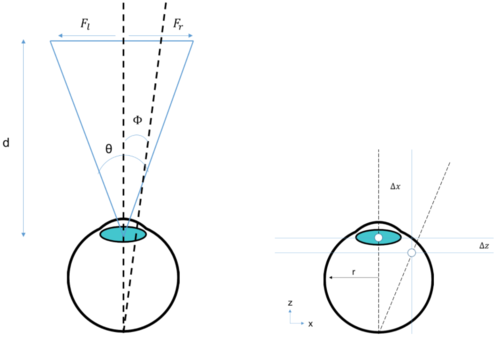

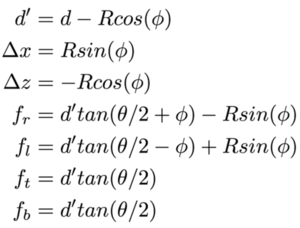

To create an accurate representation of what the eye may see when it drifts by some angle Φ, we must consider that there is both a slight shift in the pupil location, indicated by Δx and Δz, and a change in the viewing direction. Assuming an eye radius R of 12 mm, the changes in pupil position are given by equations 2 and 3. The perspective shift is accounted for in equations 4-7, expressed as a change in the viewing frustum. All of these variations are accounted for in our rendering model.

[[File:Eye model.png|500px | left | thumb | caption | Rotational model of the eye. A slight change of angle affects the viewing direction and also causes a slight shift in the position of the pupil.]]

[[File:Model equations.png|300px | center | thumb | Rendering parameters that change as a function of the rotation angle.]]
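The equations themselves appear only in the figure, so the following sketch assumes the standard geometry of a pupil sitting on a sphere of radius R that rotates about the eye's center: Δx = R sin Φ and Δz = R (1 − cos Φ). The function name and the exact form of the expressions are assumptions made for illustration.

```python
import math

def pupil_shift_mm(rotation_deg, eye_radius_mm=12.0):
    """Lateral (dx) and axial (dz) pupil displacement for a given eye rotation,
    assuming the pupil moves on a sphere of radius eye_radius_mm."""
    phi = math.radians(rotation_deg)
    dx = eye_radius_mm * math.sin(phi)
    dz = eye_radius_mm * (1.0 - math.cos(phi))
    return dx, dz

for angle in (0.2, 0.4, 0.6, 0.8, 1.0):
    dx, dz = pupil_shift_mm(angle)
    print(f"{angle:.1f} deg: dx = {dx:.4f} mm, dz = {dz:.5f} mm")
```

For a 1° rotation this gives a lateral shift of about 0.209 mm, while the axial shift is roughly a hundred times smaller, so the lateral term dominates the viewpoint change.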

=== ISETBIO Pipeline ===

Our model of the human eye was built with the human eye optics template in ISETBIO. We give the eye a horizontal field of view of 2°, enough to cover the fovea and to let the eye move by one degree to either side during a simulated microsaccade. Additionally, we focus the eye at a distance of 1 m, a typical viewing distance for head-mounted displays. Watson and Yellott show that a 4 mm pupil is an appropriate diameter to use in simulations of current-day VR headsets. We also use a focal length of 17 mm. Our model of the retina is the standard ISETBIO model, sized to cover the horizontal field of view of 2°.

In MATLAB, we feed each rotated image through our model of the human eye optics. After this step, we see chromatic aberration and blurring of our stimuli. The image obtained in this step represents the optical image projected onto the retina. We then compute the cone absorbances across the fovea for this image. Using traditional image processing techniques, we locate the horizontal centers of the line stimuli at each rotation. From these centers, we calculate the lateral shift across the fovea in units of cones.
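The centroid step can be sketched as follows. This is a minimal pure-Python illustration, not the project's MATLAB code: it assumes the cone absorbances for one stimulus are available as a 2D grid (rows by cone columns) and that a fixed fraction of the peak value is used as the binarization threshold, which is a hypothetical choice.

```python
def horizontal_centroid(absorbances, threshold_fraction=0.5):
    """Mean column index of all samples at or above a fraction of the peak value."""
    peak = max(max(row) for row in absorbances)
    cutoff = threshold_fraction * peak
    columns = [x for row in absorbances for x, value in enumerate(row) if value >= cutoff]
    if not columns:
        raise ValueError("no samples above threshold")
    return sum(columns) / len(columns)

def lateral_shift_cones(reference, rotated, threshold_fraction=0.5):
    """Horizontal displacement, in cone columns, of the stimulus relative to the reference."""
    return (horizontal_centroid(rotated, threshold_fraction)
            - horizontal_centroid(reference, threshold_fraction))

# Synthetic example: a vertical line that moves from column 3 to column 5.
reference = [[0, 0, 0, 9, 0, 0, 0, 0] for _ in range(4)]
rotated = [[0, 0, 0, 0, 0, 9, 0, 0] for _ in range(4)]
print(lateral_shift_cones(reference, rotated))
```

Averaging the column indices of the thresholded samples yields sub-cone precision when the blurred line spans several cones, which is consistent with the fractional cone values reported in the results.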

== Results ==

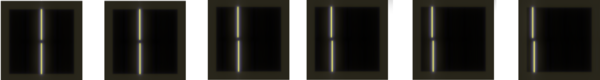

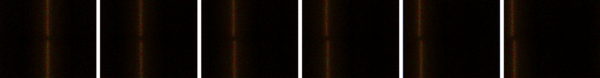

In the following image, we show the input stimuli presented to the eye for rotations from 0° to 1° in 0.2° increments, from left to right. The top stimulus is 4 m from the camera and the bottom stimulus is 25 cm from the camera. Note the increasing horizontal disparity between the two stimuli as the eye rotation increases.

[[File:InputStimuli.png|600px| center]]

After passing these stimuli through our model of the eye, we observe the following series of images.

[[File:EyeStimuli.png|600px| center]]

The following shows the observed cone absorbances on the fovea.

[[File:ConeAbsorbances.png|600px| center]]

We locate the horizontal centroid of each line stimulus, in units of cones, using the following black and white image.

[[File:BwAbsorbances.png|600px| center]]

In the table below, we calculate the horizontal displacement from the 0° stimulus in units of cones, as well as the change in disparity between the near and far stimuli.

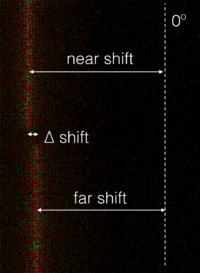

The displacement calculations are illustrated in the following graphics:

[[File:EyeDistances.png|200px|center]]

== Conclusions ==

=== In Comparison to the pupil ===

Assuming an eye radius of 12 mm and a 1° microsaccade, only 0.209 mm of parallax is generated at the pupil. This 0.209 mm of parallax corresponds to the 6.95 cone shift between the near and far stimuli in our simulation.

We hypothesize that the 4 mm of parallax generated across the pupil, assuming pinhole cameras at the limits of the pupil, serves as a much stronger depth cue than the parallax observed during a microsaccade. In future work, we plan to simulate the parallax observed across the pupil opening alone, in units of cones, for better comparison with our microsaccade simulation.
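As a rough back-of-the-envelope comparison, the relative angular parallax between the two stimuli can be estimated for both baselines with the small-angle pinhole relation α ≈ b (1/d_near − 1/d_far). This sketch ignores the eye optics and the rotation of the viewing direction, so it is not a substitute for the simulation; the function name is illustrative.

```python
import math

def relative_parallax_arcmin(baseline_m, near_m, far_m):
    """Small-angle angular parallax between a near and a far point
    for a lateral viewpoint shift of baseline_m, in arcminutes."""
    angle_rad = baseline_m * (1.0 / near_m - 1.0 / far_m)
    return math.degrees(angle_rad) * 60.0

microsaccade = relative_parallax_arcmin(0.209e-3, 0.25, 4.0)  # 1 deg rotation, R = 12 mm
pupil = relative_parallax_arcmin(4.0e-3, 0.25, 4.0)           # full 4 mm pupil diameter

print(f"microsaccade baseline: {microsaccade:.2f} arcmin")
print(f"pupil baseline:        {pupil:.2f} arcmin")
print(f"ratio:                 {pupil / microsaccade:.1f}")
```

Because the relation is linear in the baseline, the pupil-wide parallax comes out roughly 19 times larger than the microsaccade parallax under these assumptions, which is in line with the hypothesis that it is the stronger cue.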

=== Hacking the visual system ===

Our simulation of the retinal disparity due to a microsaccade was intended to evaluate the amount of parallax one experiences when examining the natural, static world with the same eye optics as when wearing a VR headset. While we certainly observed horizontal parallax and believe that it could serve as a depth cue for accommodation, the parallax across the pupil opening is much larger, suggesting that it is a stronger depth cue than parallax during a microsaccade.

In contrast to the real world, VR displays allow us to project different images onto the eye at any point in time. If a rendering engine knew when the eye was microsaccading, it could show successive video frames with greater horizontal disparity than normal, forcing stronger parallax across the retina and therefore driving accommodation more aggressively than is typically experienced. The strength of this accommodation cue could be adjusted based on the content of the scene, toward the depth that would alleviate vergence-accommodation strain.

== Future Work ==

Simulating parallax across the retina due to microsaccades allowed us to conclude that depth information could be gathered during fixational eye movements. Before we can fully understand the strength of this depth cue, we first need to better understand the retinal shift, in cone disparity, due to parallax across the pupil. We can simulate this by placing two pinhole cameras 4 mm apart and running the same optical simulations and disparity measurements.

Moving from simulation to physical measurements, we need a high-resolution, high-speed eye tracker, as well as displays with high refresh rates, so that we can display different images to the eye during fixational eye movements.

Furthermore, there is little research on how strongly accommodation is influenced by parallax across the retina. User studies that measure accommodation across a range of apertures will indicate how much parallax we need to overcome in order to drive accommodation with parallax between successive video frames.

== Citations ==

* Hoffman, D., Girshick, A., Akeley, K., AND Banks, M. 2008. Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. Journal of Vision 8, 3.

* SHIBATA, T., KIM, J., HOFFMAN, D., AND BANKS, M. 2011. The zone of comfort: Predicting visual discomfort with stereo displays. Journal of Vision 11, 8, 11.

* SUGIHARA, T., AND MIYASATO, T. 1998. 32.4: A lightweight 3-d hmd with accommodative compensation. SID Digest 29, 1, 927–930.

* SCHOWENGERDT, B., AND SEIBEL, E. 2006. True 3-d scanned voxel displays using single or multiple light sources. J. SID 14, 2, 135–143.

* DOLGOFF, E. 1997. Real-depth imaging: a new 3d imaging technology with inexpensive direct-view (no glasses) video and other applications. Proc. SPIE 3012, 282–288.

* AKELEY, K., WATT, S., GIRSHICK, A., AND BANKS, M. 2004. A stereo display prototype with multiple focal distances. ACM Trans. Graph. (SIGGRAPH) 23, 3, 804–813.

* ROLLAND, J., KRUEGER, M., AND GOON, A. 2000. Multifocal planes head-mounted displays. Applied Optics 39, 19, 3209–3215.

* LLULL, P., BEDARD, N., WU, W., TOSIC, I., BERKNER, K., AND BALRAM, N. 2015. Design and optimization of a near-eye multifocal display system for augmented reality. In OSA Imaging and Applied Optics.

* ZHAI, Z., DING, S., LV, Q., WANG, X., AND ZHONG, Y. 2009. Extended depth of field through an axicon. Journal of Modern Optics 56, 11, 1304–1308.

* LANMAN, D., AND LUEBKE, D. 2013. Near-eye light field displays. ACM Trans. Graph. (SIGGRAPH Asia) 32, 6, 220:1–220:10.

* HUA, H., AND JAVIDI, B. 2014. A 3d integral imaging optical see-through head-mounted display. Optics Express 22, 11, 13484–13491.

* HUANG, F., CHEN, K., AND WETZSTEIN, G. 2015. The light field stereoscope: Immersive computer graphics via factored near-eye light field display with focus cues. ACM Trans. Graph. (SIGGRAPH) 34, 4.

* Watson, Andrew B., and John I. Yellott. "A unified formula for light-adapted pupil size." Journal of vision 12.10 (2012): 12-12.

* Martinez-Conde, Susana, et al. "Microsaccades: a neurophysiological analysis." Trends in neurosciences 32.9 (2009): 463-475.

* Wandell, Brian. ISETBIO. Computer software. Http://isetbio.org/. Computational Eye and Brain Project, n.d. Web.

* Wandell, Brian A. Foundations of vision. Sinauer Associates, 1995.

* Engbert, Ralf. "Computational modeling of collicular integration of perceptual responses and attention in microsaccades." The Journal of Neuroscience 32.23 (2012): 8035-8039.

* Rolfs, Martin. "Microsaccades: small steps on a long way." Vision research 49.20 (2009): 2415-2441.

* Engbert, Ralf, et al. "An integrated model of fixational eye movements and microsaccades." Proceedings of the National Academy of Sciences 108.39 (2011): E765-E770.

== Appendix I ==

The code used to implement the OpenGL eye rotation model and the ISETBIO code (in MATLAB), as well as some images generated using these procedures, can be found [https://www.dropbox.com/s/y908gq1jvjaulij/Code_Data.zip?dl=0 here].

== Appendix II ==

Robert tackled the OpenGL portions of the simulation, while Keenan created the ISETBIO model and image processing pipeline to measure cone disparity. We collaborated on the presentation, future work, and write-up.

Latest revision as of 21:14, 19 December 2016

Introduction

Immersive and experiential computing systems are entering the consumer market and have the potential to profoundly impact our society. Applications of these systems range from entertainment, education, collaborative work, simulation and training to telesurgery, phobia treatment, and basic vision research. In every immersive experience, the primary interface between the user and the digital world is the near-eye display. Thus, developing near-eye display systems that provide a high-quality user experience is of the utmost importance. Many characteristics of near-eye displays that define the quality of an experience, such as resolution, refresh rate, contrast, and field of view, have been significantly improved over the last years. However, a potentially significant source of visual discomfort prevails: the vergence-accommodation conflict.

In the natural environment, the human visual system relies on a variety of cues to determine the 3D layout of the scenes. Vergence and accommodation are two different oculomotor cues that the human visual system can use for estimating absolute and relative distance of objects. Vergence refers to the relative rotation angle of the two eyeballs. When we fixate on a nearby object, our eyes rotate inwards, whereas the opposite happens when we fixate on far objects. The brain uses the relaxation or contraction of the extraocular muscles that physically rotate the eyeball as a cue for the absolute distance of the fixated object (see Fig. x). The associated visual cue is known as binocular disparity, the relative displacement between the images of a 3D scene point projected on the two retinal images. Together, vergence and disparity make up stereopsis, which is generally considered a strong depth cue, especially for objects at intermediate distances (i.e. 1-10m).

Accommodation is an absolute monocular depth cue that refers to the state of the ciliary muscles, which focus the crystalline lens in each eye. As with vergence, the state of these muscles varies as a function of the distance to the point at which the eyes are focused. Accommodation combined with the associated visual cue, retinal blur or perceived depth of field (DOF), make up the focus cues, which are particularly useful for depth perception of objects nearby.

The brain is “wired” to interpret all of these visual and oculomotor stimuli in a consistent manner, because that is what a person with normal vision experiences in the physical world. Unfortunately, all commercially-available near-eye displays produce conflicting depth cues referred to as the vergence-accommodation conflict or VAC. Displaying a stereoscopic image pair on a near-eye display creates binocular disparity, which drives the vergence of the eye to a simulated object distance. However, conventional near-eye displays use static magnifying optics to create a virtual image of a physical micro-display that is perceived at a fixed optical distance that cannot be changed in software. Hence, retinal blur drives the users’ accommodation to the virtual image (for reference, 1.3m for Oculus Rift). The discrepancy between these depth cues (vergence and accommodation), hinders visual performance [Hoffman et. al. 2008] and creates visual discomfort and fatigue, and compromised visual clarity [Shibata et al. 2011]. In this work, we explore a new potential way of tackling the VAC. By taking advantage of the parallax on the retina due to eye movements during fixation, we hypothesize the potential to drive our accommodation to arbitrary distances. Our accommodation is driven by the retinal blur cue, created by objects in the scene exhibiting parallax over the pupil, and therefore retina. An out of focus objects is one that exhibits a “large” amount of parallax on the retina, meaning that the images as perceived by pinhole cameras on opposite sides of the pupil, do not overlap perfectly on the retina, giving the impression of a blurred, or out of focus object. An in-focus object would be one that exhibits no parallax (theoretically), where the images on opposing sides of the pupil overlap perfectly on the retina. When one changes focus, the crystalline lens of the eye bends, changing the objects in the scene that overlap on the retina.

We hypothesize that we can simulate this parallax exhibited in the real world by displaying different images to different points on the retina by taking advantage of the eye drifts during fixation. As initial steps towards this goal, we begin by understanding microsaccades in this context. Using microsaccade models from literature we generate our own model of the rotation of the eye and simulate the amount of parallax generated on the retina using an OpenGL and ISETBIO pipeline. Using this simulation pipeline we explore the importance of microsaccades as depth cues, as well as the amount of parallax that the rotations generate on the retina.

Background

Near-eye Displays with Focus Cues

Generally, existing focus-supporting displays can be divided into several classes: adaptive focus, volumetric, light field, and holographic displays.

Two-dimensional adaptive focus displays do not produce correct focus cues -- the virtual image of a single display plane is presented to each eye, just as in conventional near-eye displays. However, the system is capable of dynamically adjusting the distance of the observed image, either by physically actuating the screen [Sugihara and Miyasato 1998] or using focus-tunable optics [Konrad et al. 2016]. Because this technology only enables the distance of the entire virtual image to be adjusted at once, the issue with these displays is that the correct focal distance at which to place the display depends on point in the simulated 3D scene the user is looking at.

Three-dimensional volumetric and multi-plane displays represent the most common approach to focus-supporting near-eye displays. Instead of using 2D display primitives at some fixed distance to the eye, volumetric displays either mechanically or optically scan out the 3D space of possible light emitting display primitives in front of each eye [Schowengerdt and Seibel 2006]. Multi-plane displays approximate this volume using a few virtual planes [Dolgoff 1997; Akeley et al. 2004; Rolland et al. 2000; Llull et al. 2015].

Four-Dimensional light field and holographic displays aim to synthesize the full 4D light field in front of each eye. Conceptually, this approach allows for parallax over the entire eyebox to be accurately reproduced, including monocular occlusions, specular highlights, and other effects that cannot be reproduced by volumetric displays. However, current-generation light field displays provide limited resolution [Lanman and Luebke 2013; Hua and Javidi 2014; Huang et al. 2015] whereas holographic displays suffer from speckle and have extreme requirements on pixel sizes that are not afforded by near-eye displays today. Our proposed display mode falls into this light field producing category.

Microsaccades

Microsaccades are a specific type of fixational eye movement, characterized by high angular velocity, large angular rotation motion after a period smaller, less directed random eye motion. Microsaccades exhibit a preference for horizontal and vertical movement and occur simultaneously, suggesting a common source of generation [Zuber et al]. They are generally binocular in behavior and the velocity of the saccade is directly proportional to the distance the eye has wandered during fixation .

While microsaccades have been documented across a wide range of research studies, there is no formal definitions or measurement thresholds for microsaccades. Definitions of microsaccades change depending on what best fits context of the study [Martinez-Conde]. Across literature, angular velocities vary between 8º/sec and 40º/sec. Frequencies vary between 0.5Hz and 2Hz and angular rotation ranges from 25’ to 1.5º.

Methods

Our project relies heavily on simulation to better understand the depth cues and parallax afforded during a microsaccade. The ISETBIO Toolbox for MATLAB proved to be incredibly useful for modeling the human eye optics and for quantifying the lateral disparity due to objects at different depths during a microsaccade. To simulate physically accurate rotations of the eye during eye drift we render images in OpenGL which we then use as the stimulus in ISETBIO.

OpenGL Pipeline

OpenGL is a cross-platform API for rendering 2D and 3D vector graphics. Using OpenGL we are able to render 3D scenes with parameters similar to that of the eye. We generate a rotational model of the eye that accurately represents what an eye might “see” during eye drift. Using OpenGL we render the viewpoint from the center of the pupil, making the strong assumption that the pupil is infinitely small (i.e. the pinhole model of the eye). Using OpenGL, we render two line stimuli at distances of 25 cm and 4m from the camera. The stimuli are scaled to have the same perceived size. To evaluate different amounts of drift we render the scene with varying amounts of eye rotations. We use 0.2º horizontal increments with a maximum rotation of 1º. For each rotation angle, we re-render the scene. We project the 3D scene onto a plane 1m , as indicated by d, away from the retina, with a 2º field of view as indicated by θ. We then use ISETBIO, as described in the next section, to see the amount of parallax generated on the retina itself.

To create an accurate representation of what the eye may see when it drifts by some angle Φ, we must consider that there is both a slight shift in the pupil location, indicated by Δx and Δz, and a change in the viewing direction. Assuming an eye radius R of 12mm, the change in pupil positions are indicated by equations 2 and 3. The perspective shift is accounted for in equations 4-7, and is in terms of the change in viewing frustum. All of these variations are accounted for in our rendering model.

ISETBIO Pipeline

Our model of the human eye was built with the human eye optics template in ISET BIO. We give the eye a horizontal field of view of 2º, enough to cover the fovea and for the eye to move by one degree on either side during a simulated microsaccade. Additionally, we focused our eye at a distance of 1m, a typical viewing distance for head mounted displays. Watson et al. show that a 4mm pupil is an appropriate diameter to use in simulations with current-day VR headsets. We also use a focal length of 17mm. Our model for the retina is the standard ISETBIO model, sized to cover the horizontal field of view of 2º.

In MATLAB, we feed each rotated image through our model of human eye optics. After this step, we see chromatic aberrations and blurring of our stimuli. The image achieved in this step represents the optical image projected onto the retina. We then project this image onto the retina and compute the cone absorbances across the fovea. Using traditional image processing techniques, we locate the horizontal centers of the line stimuli at each rotation. From these centers, we can calculate the lateral shift across the fovea in units of cones.

Results

The following image shows the input stimuli presented to the eye for rotations from 0º to 1º in 0.2º increments, from left to right. The top stimulus is 4 m from the camera and the bottom stimulus is 25 cm from the camera. Note the increasing horizontal disparity between the two stimuli as the eye rotation increases.

After passing these stimuli through our model for the eye, we observe the following series of images.

The following shows the observed cone absorbances on the fovea.

We locate the horizontal centroid of each line stimulus, in units of cones, using the following black-and-white image.

In the table below, we report the horizontal displacement from the 0º stimulus in units of cones, as well as the change in disparity between the near and far stimuli.

| Horizontal Eye Rotation | 0.2º | 0.4º | 0.6º | 0.8º | 1º |
|---|---|---|---|---|---|
| Near stimulus shift from 0º (cones) | 24.22 | 49.40 | 73.45 | 97.46 | 122.62 |
| Far stimulus shift from 0º (cones) | 23.08 | 46.40 | 69.45 | 92.50 | 115.67 |
| ∆ shift from near to far stimuli (cones) | 1.14 | 3.01 | 4.00 | 4.69 | 6.95 |
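The last row is the difference between the near and far shifts. A minimal sketch that recomputes it from the rounded table entries (the variable and function names are assumptions for illustration):

```python
# Shifts from the 0-degree stimulus, in cones, copied from the table.
ROTATIONS_DEG = [0.2, 0.4, 0.6, 0.8, 1.0]
NEAR_SHIFT = [24.22, 49.40, 73.45, 97.46, 122.62]
FAR_SHIFT = [23.08, 46.40, 69.45, 92.50, 115.67]

def disparity_change(near_shifts, far_shifts):
    """Per-rotation difference between near and far shifts, in cones."""
    return [round(n - f, 2) for n, f in zip(near_shifts, far_shifts)]

if __name__ == "__main__":
    for angle, delta in zip(ROTATIONS_DEG, disparity_change(NEAR_SHIFT, FAR_SHIFT)):
        print(f"{angle:.1f} deg: {delta:.2f} cones")
```

Recomputed this way, the entries at 0.2º, 0.6º and 1º match the table. The 0.4º and 0.8º entries come out as 3.00 and 4.96 rather than the tabulated 3.01 and 4.69, which may reflect rounding of unrounded intermediate values or a transcription difference.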

The displacement calculations are illustrated in the following graphics:

Conclusions

In comparison to the pupil

Assuming an eye radius of 12 mm and a 1º microsaccade, the pupil translates by only about 0.209 mm (12 mm × sin 1º). This 0.209 mm of pupil parallax corresponds to the 6.95-cone shift between the near and far stimuli in our simulation.

We hypothesize that the 4 mm of parallax available across the pupil, assuming pinhole cameras at the limits of the pupil, serves as a much stronger depth cue than the parallax observed during a microsaccade. In future work, we plan to simulate the parallax observed across the pupil opening alone, in units of cones, for better comparison with our microsaccade simulation.
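To give a sense of scale for the two baselines, the sketch below uses a small-angle approximation: for a purely lateral viewpoint translation b, a point at distance d shifts by roughly b / d radians, so the relative parallax between the near and far stimuli is b (1/d_near − 1/d_far). This is a back-of-the-envelope estimate under those assumptions, not a substitute for the ISETBIO simulation, and the function name is hypothetical.

```python
import math

def relative_parallax_arcmin(baseline_mm, d_near_m=0.25, d_far_m=4.0):
    """Small-angle relative parallax (arcmin) between near and far stimuli
    for a lateral viewpoint translation of baseline_mm."""
    baseline_m = baseline_mm / 1000.0
    angle_rad = baseline_m * (1.0 / d_near_m - 1.0 / d_far_m)
    return math.degrees(angle_rad) * 60.0

if __name__ == "__main__":
    microsaccade = relative_parallax_arcmin(0.209)  # pupil shift for a 1-degree rotation
    pupil = relative_parallax_arcmin(4.0)           # full 4 mm pupil diameter
    print(f"microsaccade: {microsaccade:.2f} arcmin")
    print(f"across pupil: {pupil:.2f} arcmin")
    print(f"ratio: {pupil / microsaccade:.1f}")
```

Under these assumptions the relative parallax is about 2.7 arcmin for the microsaccade and about 52 arcmin across the pupil. Their ratio, 4 / 0.209 ≈ 19, does not depend on the stimulus distances.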

Hacking the visual system

Our simulation of the retinal disparity due to a microsaccade aimed to evaluate the amount of parallax one experiences when examining the natural, static world, with the same eye optics as when wearing a VR headset. While we certainly observed horizontal parallax and believe that it could serve as a depth cue for accommodation, the parallax available across the pupil opening is much larger, suggesting that it is a stronger depth cue than parallax during a microsaccade.

In contrast to the real world, VR displays allow us to project different images onto the eye at any point in time. If a rendering engine knew when the eye was microsaccading, it could show successive video frames with greater horizontal disparity than normal, forcing stronger parallax across the retina and therefore driving accommodation more aggressively than is typically experienced. The strength of this accommodation cue could be adjusted based on the content of the scene, and set to the depth that would alleviate vergence-accommodation strain.

Future Work

Simulations of parallax across the retina due to microsaccades allowed us to draw our conclusion that depth information could be gathered during fixational eye movements. Before we can fully understand the strength of this depth cue, we first need to better understand the retinal shift, in cone disparity, due to parallax across the pupil. We can simulate this by placing two pinhole cameras 4mm apart from each other and running the same optical simulations and disparity measurements.

Moving from simulation to physical measurements, we need a high-resolution, high-speed eye tracker, as well as displays with high refresh rates, so that we can display different images to the eye during fixational eye movements.

Furthermore, there is little research on how strongly accommodation is influenced by parallax across the retina. User studies that measure accommodation across a range of apertures will inform how much parallax we will need to overcome to drive accommodation with parallax between successive video frames.

Citations

- HOFFMAN, D., GIRSHICK, A., AKELEY, K., AND BANKS, M. 2008. Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. Journal of Vision 8, 3.

- SHIBATA, T., KIM, J., HOFFMAN, D., AND BANKS, M. 2011. The zone of comfort: Predicting visual discomfort with stereo displays. Journal of Vision 11, 8, 11.

- SUGIHARA, T., AND MIYASATO, T. 1998. 32.4: A lightweight 3-d hmd with accommodative compensation. SID Digest 29, 1, 927–930.

- SCHOWENGERDT, B., AND SEIBEL, E. 2006. True 3-d scanned voxel displays using single or multiple light sources. J. SID 14, 2, 135–143.

- DOLGOFF, E. 1997. Real-depth imaging: a new 3d imaging technology with inexpensive direct-view (no glasses) video and other applications. Proc. SPIE 3012, 282–288.

- AKELEY, K., WATT, S., GIRSHICK, A., AND BANKS, M. 2004. A stereo display prototype with multiple focal distances. ACM Trans. Graph. (SIGGRAPH) 23, 3, 804–813.

- ROLLAND, J., KRUEGER, M., AND GOON, A. 2000. Multifocal planes head-mounted displays. Applied Optics 39, 19, 3209–3215.

- LLULL, P., BEDARD, N., WU, W., TOSIC, I., BERKNER, K., AND BALRAM, N. 2015. Design and optimization of a near-eye multifocal display system for augmented reality. In OSA Imaging and Applied Optics.

- ZHAI, Z., DING, S., LV, Q., WANG, X., AND ZHONG, Y. 2009. Extended depth of field through an axicon. Journal of Modern Optics 56, 11, 1304–1308.

- LANMAN, D., AND LUEBKE, D. 2013. Near-eye light field displays. ACM Trans. Graph. (SIGGRAPH Asia) 32, 6, 220:1–220:10.

- HUA, H., AND JAVIDI, B. 2014. A 3d integral imaging optical see-through head-mounted display. Optics Express 22, 11, 13484–13491.

- HUANG, F., CHEN, K., AND WETZSTEIN, G. 2015. The light field stereoscope: Immersive computer graphics via factored near-eye light field display with focus cues. ACM Trans. Graph. (SIGGRAPH) 34, 4.

- Watson, Andrew B., and John I. Yellott. "A unified formula for light-adapted pupil size." Journal of vision 12.10 (2012): 12-12.

- Martinez-Conde, Susana, et al. "Microsaccades: a neurophysiological analysis." Trends in neurosciences 32.9 (2009): 463-475.

- Wandell, Brian. ISETBIO. Computer software. Http://isetbio.org/. Computational Eye and Brain Project, n.d. Web.

- Wandell, Brian A. Foundations of vision. Sinauer Associates, 1995.

- Engbert, Ralf. "Computational modeling of collicular integration of perceptual responses and attention in microsaccades." The Journal of Neuroscience 32.23 (2012): 8035-8039.

- Rolfs, Martin. "Microsaccades: small steps on a long way." Vision research 49.20 (2009): 2415-2441.

- Engbert, Ralf, et al. "An integrated model of fixational eye movements and microsaccades." Proceedings of the National Academy of Sciences 108.39 (2011): E765-E770.

Appendix I

The code used to implement the OpenGL eye rotation model and the ISETBIO code (in MATLAB), along with some images generated using these procedures, can be found here.

Appendix II

Robert tackled the OpenGL portions of the simulation, while Keenan created the ISETBIO model and the image processing pipeline to measure cone disparity. We collaborated on the presentation, future work, and write-up.