RayChenPsych2012Project


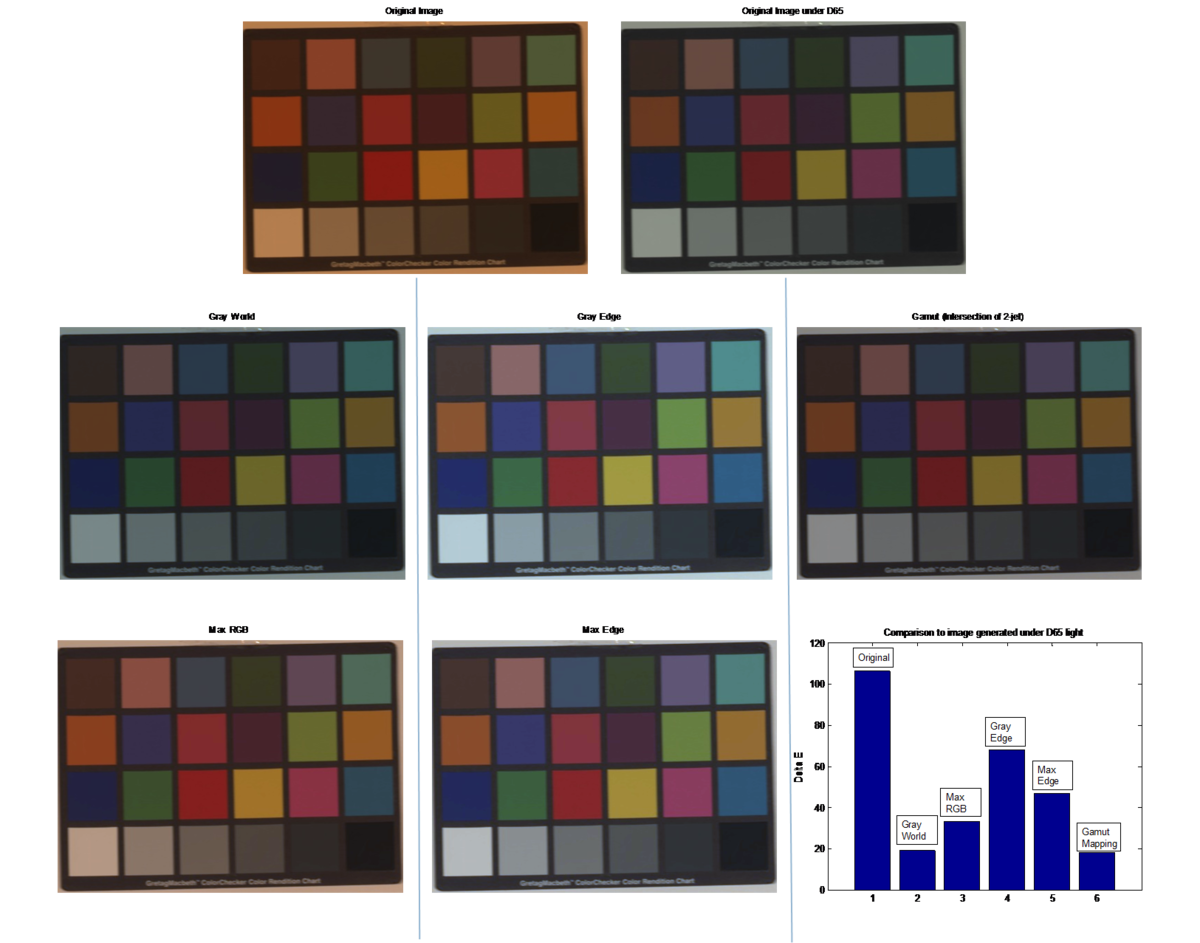

== Image set 1 == | == Image set 1 == | ||

<center>[[File:rc_macbethresults.png |1200px]]</center> | <center>[[File:rc_macbethresults.png |1200px]]</center> | ||

We see that in this case, Gray World actually performs best due to the fact that | |||

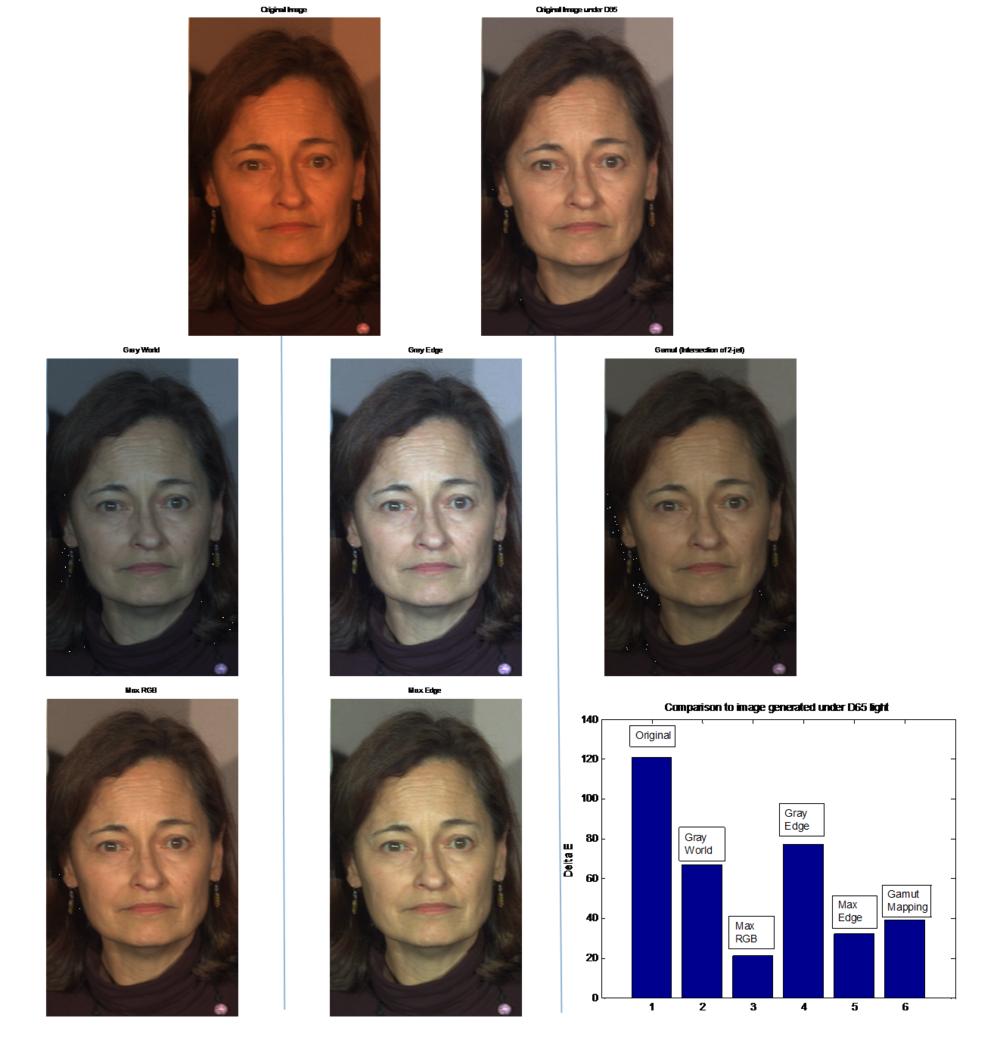

== Image set 2 == | == Image set 2 == | ||

Revision as of 21:57, 19 March 2012

Background

The human visual system exhibits color constancy: the perceived color of an object remains relatively constant under varying lighting conditions. This helps us identify objects, because our brain recognizes an object as having a consistent color regardless of the lighting environment. For example, a red shirt looks red in direct sunlight, and it also looks red indoors under fluorescent light.

However, if we were to measure the light actually reflected from the shirt under these two conditions, we would find that it differs. This is where problems arise. Think about the last time you took a picture with a digital camera and the colors just seemed wrong. This happens because cameras lack color constancy: they record the colors as shifted by the light source. Fortunately, we can compensate for this with color balancing algorithms.

Methods

In this project, I explore several popular color balancing algorithms. Specifically, I implement Gray World, Max-RGB, and Gray Edge (along with its Max Edge variant). I also compare the results with an existing state-of-the-art gamut mapping method [1].

Gray World

A simple but effective algorithm is Gray World. This method is based on Buchsbaum's explanation of the color constancy of the human visual system, which assumes that the average reflectance of a real-world scene is gray. In other words, according to Buchsbaum, if we take an image with a large amount of color variation, the R, G, and B components should average out to gray. The key point is that any deviation from this gray average is caused by the light source.

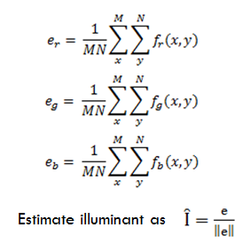

For example, if we took a picture under orange lighting, the whole image would appear more orange, violating the Gray World assumption. If we rescale the RGB components of this image so that they average out to gray, we should be able to remove the effect of the orange light. To do this, we compute the average of each color channel (using the accompanying equations) and multiply that channel by the ratio of the average gray value to the channel's average. Here f is the original input image, and the subscripts denote the color channel.
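The Gray World correction described above can be sketched in a few lines of NumPy. This is a minimal sketch under stated assumptions, not the project's code: it assumes a floating-point H x W x 3 image, takes the target gray value to be the mean of the three channel averages (one common choice), and the function name `gray_world` is hypothetical.

```python
import numpy as np


def gray_world(f: np.ndarray) -> np.ndarray:
    """Hypothetical Gray World correction for an H x W x 3 float image f.

    Assumption: the target gray is the mean of the three channel averages.
    Each channel f_c is scaled by gray / mean(f_c), so every corrected
    channel averages to the same gray value.
    """
    f = np.asarray(f, dtype=float)
    channel_means = f.reshape(-1, 3).mean(axis=0)     # averages of f_R, f_G, f_B
    gray = channel_means.mean()                       # assumed target gray value
    gains = gray / np.maximum(channel_means, 1e-12)   # guard against an all-black channel
    return f * gains                                  # gains broadcast over the last axis


# Usage: a uniform gray scene photographed under an orange-ish light
scene = np.full((4, 4, 3), 0.5)
orange_light = np.array([1.2, 1.0, 0.6])
corrected = gray_world(scene * orange_light)
print(corrected.reshape(-1, 3).mean(axis=0))  # the three channel means are now equal
```

Because each channel is multiplied by a single gain, the correction is a per-channel (diagonal) rescaling; it cannot undo illuminant effects that mix channels.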

Max RGB

An algorithm similar to Gray World is max-RGB. It is based on Land's theory that the human visual system achieves color constancy by detecting the area of highest reflectance in the field of view. Land hypothesized that this is done separately by each of the three types of cones in our eyes (which detect long, medium, and short wavelengths, corresponding to R, G, and B respectively), and that we achieve color constancy by normalizing the response of each cone type by its highest value.

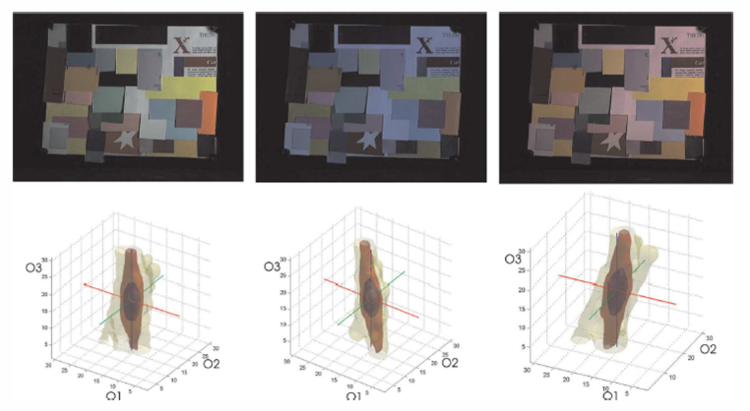

To approximate this, we find the maximum value in each color channel and normalize every pixel in that channel by it, using the accompanying equations.
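A minimal NumPy sketch of the max-RGB normalization follows, assuming a floating-point H x W x 3 image; the function name `max_rgb` is hypothetical. The usage example deliberately places a white patch in the scene, which is exactly the case where the channel maxima equal the illuminant.

```python
import numpy as np


def max_rgb(f: np.ndarray) -> np.ndarray:
    """Hypothetical max-RGB (also called White Patch) correction for an H x W x 3 image.

    The maximum of each channel is taken as the illuminant's value in that
    channel, and the channel is divided by it so its brightest pixel maps to 1.
    """
    f = np.asarray(f, dtype=float)
    channel_max = f.reshape(-1, 3).max(axis=0)    # per-channel maximum
    return f / np.maximum(channel_max, 1e-12)     # guard against an all-zero channel


# Usage: a random scene that contains one white patch, lit by a colored light
rng = np.random.default_rng(0)
scene = rng.uniform(0.0, 1.0, size=(8, 8, 3))
scene[0, 0] = 1.0                                   # the white patch
light = np.array([0.9, 0.7, 0.4])
corrected = max_rgb(scene * light)
print(np.allclose(corrected, scene))                # True: the light's effect is removed
```

If the scene has no white (or brightest achromatic) surface, or a single saturated or noisy pixel dominates a channel, the estimate is biased, which is one reason the edge-based methods below smooth the image first.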

Gray Edge & Max Edge

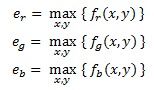

Unlike Gray World and Max-RGB, Gray Edge and Max Edge take the derivative structure of images into account: the Gray Edge hypothesis assumes that the average reflectance difference in a scene is achromatic, and Max Edge assumes that the maximum reflectance difference in a scene is achromatic. Edge-based color constancy, proposed in 2007, builds on van de Weijer et al.'s observation that the distribution of image derivatives forms a relatively regular, ellipsoid shape. The key idea is that the long axis of this ellipsoid coincides with the illumination vector. In the figure below, we see that different illuminants on the same scene change the orientation of the ellipsoid's long axis [2].

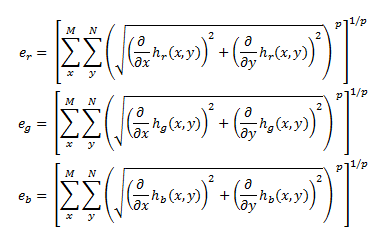
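The relationship between the derivative ellipsoid and the illuminant can be illustrated with a short NumPy sketch. It is an illustration only, not the estimator used in [2]: it uses plain `np.gradient` finite differences without Gaussian smoothing, takes the long axis to be the leading eigenvector of the 3 x 3 second-moment matrix of the (dR, dG, dB) derivative vectors, and the function name `derivative_long_axis` is hypothetical.

```python
import numpy as np


def derivative_long_axis(img: np.ndarray) -> np.ndarray:
    """Hypothetical helper: long-axis direction of the RGB derivative distribution.

    Every pixel contributes its x- and y-derivative as a 3-vector (dR, dG, dB).
    The leading eigenvector of their 3 x 3 second-moment matrix is the
    principal (long) axis of the derivative ellipsoid.
    """
    img = np.asarray(img, dtype=float)
    dy, dx = np.gradient(img, axis=(0, 1))          # finite differences along rows and columns
    d = np.concatenate([dx.reshape(-1, 3), dy.reshape(-1, 3)])
    moment = d.T @ d / len(d)                        # 3 x 3 second-moment matrix
    _, eigvecs = np.linalg.eigh(moment)              # eigenvalues come back in ascending order
    axis = eigvecs[:, -1]                            # eigenvector of the largest eigenvalue
    return np.abs(axis)  # eigenvector sign is arbitrary; assumes a positive illuminant


# Usage: an achromatic texture rendered under a yellowish light
rng = np.random.default_rng(1)
texture = rng.uniform(0.1, 1.0, size=(16, 16, 1))
light = np.array([1.0, 0.8, 0.5])
print(derivative_long_axis(texture * light))
print(light / np.linalg.norm(light))  # the two directions agree
```

In this idealized case every derivative vector is a multiple of the illuminant, so the ellipsoid collapses to a line along it; real scenes produce a wider ellipsoid whose long axis only approximately follows the illuminant.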

We can exploit this to estimate the illuminant with the accompanying equations, where h is the original image passed through a Gaussian filter to reduce noise.
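A minimal NumPy sketch of the edge-based estimate follows. It assumes a simplified form of the Minkowski-norm framework of [2]: smooth the image with a Gaussian of standard deviation `sigma` to get h, take the per-channel gradient magnitude of h, and pool it with p = 1 for Gray Edge or p = infinity for Max Edge. The function names, the default `sigma`, the edge padding, and the final diagonal correction are illustrative assumptions, not the project's implementation.

```python
import numpy as np


def gaussian_smooth(f: np.ndarray, sigma: float) -> np.ndarray:
    """Separable Gaussian blur of each channel of an H x W x 3 image (edge padding)."""
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x**2 / (2 * sigma**2))
    kernel /= kernel.sum()
    h = np.asarray(f, dtype=float)
    for axis in (0, 1):                                 # blur along rows, then columns
        pad = [(0, 0)] * h.ndim
        pad[axis] = (radius, radius)
        padded = np.pad(h, pad, mode="edge")
        h = np.apply_along_axis(lambda v: np.convolve(v, kernel, mode="valid"), axis, padded)
    return h


def edge_illuminant(f: np.ndarray, sigma: float = 1.0, p: float = 1.0) -> np.ndarray:
    """Unit-length (R, G, B) illuminant estimate from smoothed image derivatives.

    p = 1 gives Gray Edge (mean gradient magnitude); p = np.inf gives Max Edge.
    """
    h = gaussian_smooth(f, sigma)                       # h: the Gaussian-filtered image
    hy, hx = np.gradient(h, axis=(0, 1))
    magnitude = np.sqrt(hx**2 + hy**2).reshape(-1, 3)   # per-channel gradient magnitude
    if np.isinf(p):
        e = magnitude.max(axis=0)                       # Max Edge: strongest edge per channel
    else:
        e = np.mean(magnitude**p, axis=0) ** (1.0 / p)  # Minkowski p-mean; p = 1 is Gray Edge
    return e / np.linalg.norm(e)


def apply_correction(f: np.ndarray, e: np.ndarray) -> np.ndarray:
    """Diagonal correction: divide each channel by the estimate (white light -> gain 1)."""
    return np.asarray(f, dtype=float) / (e * np.sqrt(3))


# Usage: an achromatic texture under a yellowish light
rng = np.random.default_rng(2)
texture = rng.uniform(0.1, 1.0, size=(32, 32, 1)) * np.ones(3)
light = np.array([1.0, 0.8, 0.5])
e_gray_edge = edge_illuminant(texture * light, sigma=1.0, p=1.0)
e_max_edge = edge_illuminant(texture * light, sigma=1.0, p=np.inf)
print(e_gray_edge, e_max_edge, light / np.linalg.norm(light))
```

Larger `sigma` suppresses more noise but also blurs away fine edges; the best value depends on the image set.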

Results

For the results, I use the image rendered under D65 daylight as the reference and compare each algorithm's output to it, using the Delta E color difference metric to quantify how far each output is from the reference.
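The write-up does not say which Delta E formula was used. As an assumption, the sketch below uses the simplest one, CIE 1976 Delta E*ab (Euclidean distance in CIELAB), with inputs taken to be sRGB-encoded images in [0, 1] converted through the standard sRGB matrix and the D65 white point. The function names are hypothetical, and other variants (for example CIEDE2000) give different numbers.

```python
import numpy as np

# Linear-sRGB -> XYZ matrix (D65) and the D65 reference white
_RGB_TO_XYZ = np.array([[0.4124, 0.3576, 0.1805],
                        [0.2126, 0.7152, 0.0722],
                        [0.0193, 0.1192, 0.9505]])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])


def srgb_to_lab(rgb: np.ndarray) -> np.ndarray:
    """Convert sRGB values in [0, 1] (last axis = R, G, B) to CIELAB."""
    rgb = np.asarray(rgb, dtype=float)
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = linear @ _RGB_TO_XYZ.T / _WHITE_D65           # XYZ normalized by the white point
    delta = 6 / 29
    f = np.where(xyz > delta**3, np.cbrt(xyz), xyz / (3 * delta**2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)


def delta_e76(img: np.ndarray, ref: np.ndarray) -> np.ndarray:
    """Per-pixel CIE 1976 Delta E*ab between two sRGB images of the same shape."""
    return np.linalg.norm(srgb_to_lab(img) - srgb_to_lab(ref), axis=-1)


# Usage: compare an algorithm's output with the D65 reference rendering
ref = np.full((2, 2, 3), 0.5)
out = ref.copy()
out[0, 0] = [0.6, 0.5, 0.4]
print(delta_e76(out, ref))           # nonzero only at pixel (0, 0)
print(delta_e76(out, ref).mean())    # one summary number per image
```

Averaging the per-pixel map gives a single score per algorithm; reporting the map itself also shows where in the image each method fails.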

Image set 1

![Image set 1 results](rc_macbethresults.png)

We see that in this case, Gray World actually performs best because

Image set 2

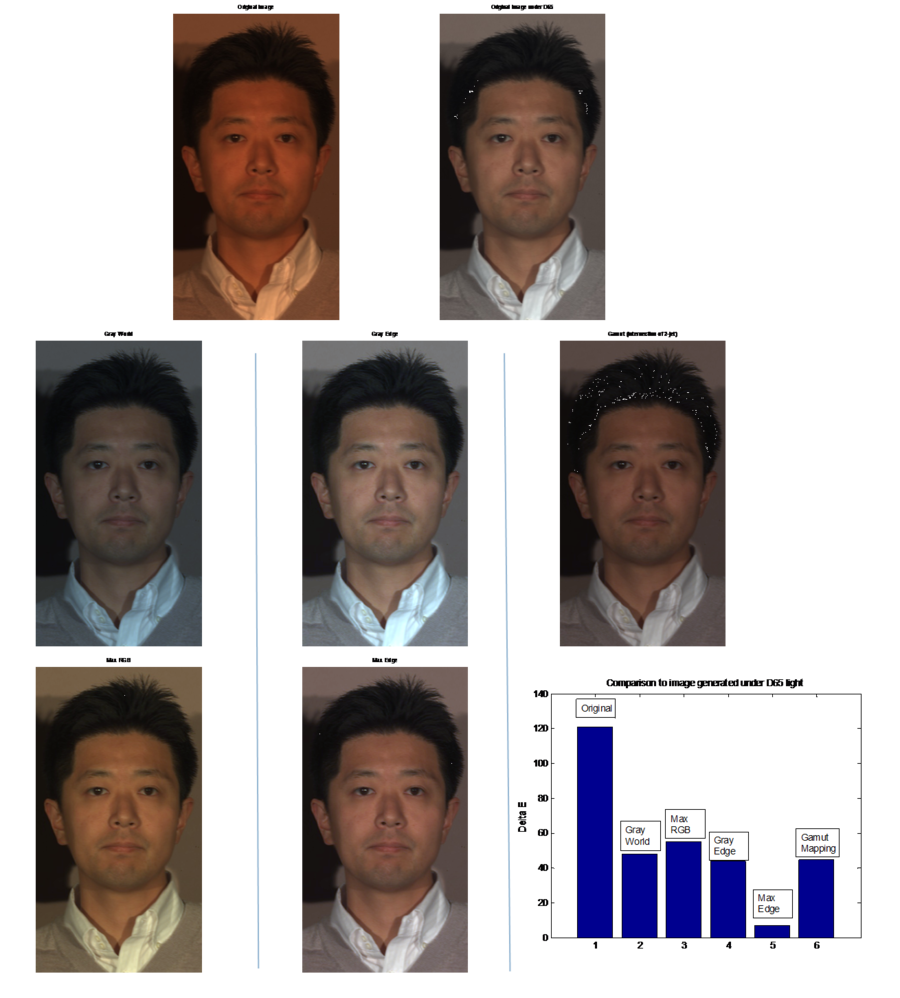

Image set 3

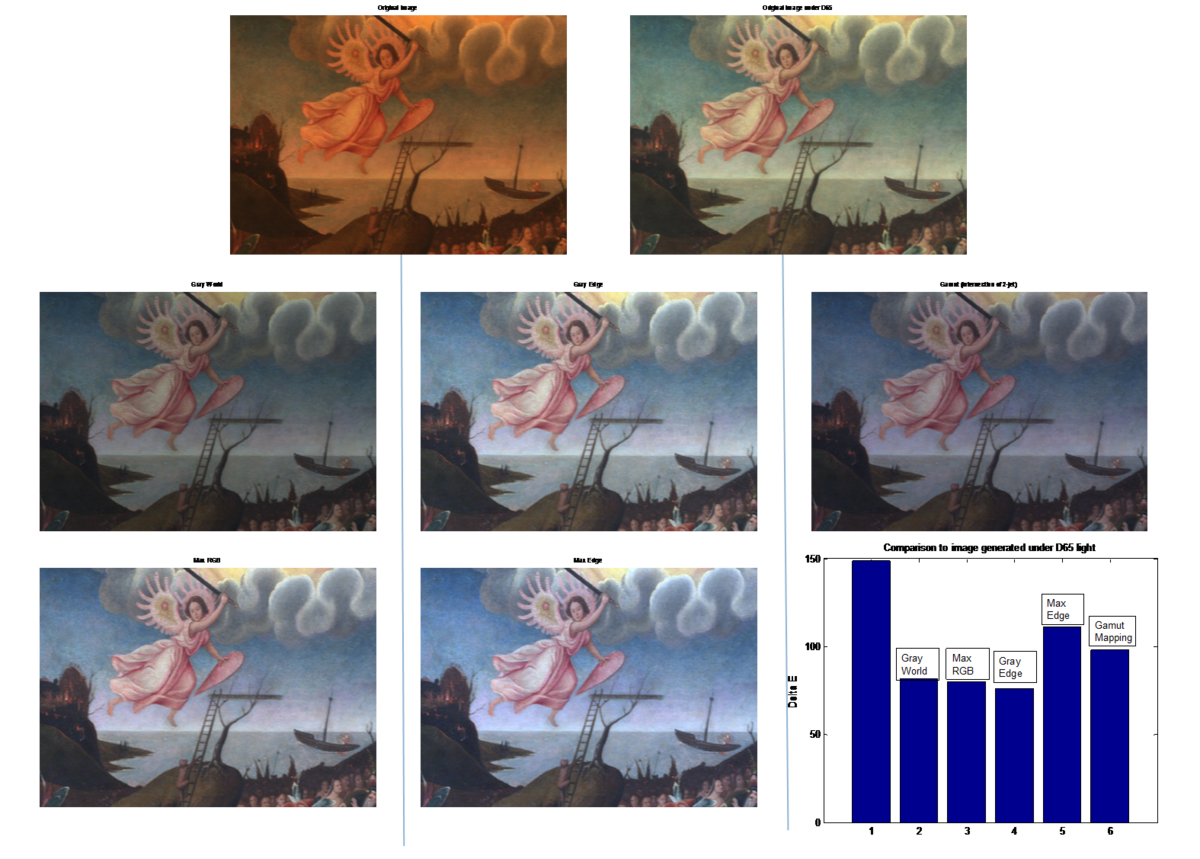

Image set 4

Conclusions

[1] A. Gijsenij, T. Gevers, J. van de Weijer. "Generalized Gamut Mapping using Image Derivative Structures for Color Constancy."

[2] J. van de Weijer, T. Gevers, A. Gijsenij. "Edge-Based Color Constancy."