Joelle Dowling

Introduction

Color calibration is one piece of simulating the image processing pipeline. Without color calibration, that is, without knowing the camera sensor's spectral sensitivity, we cannot know whether we are accurately simulating the colors of the sensor image.

In past work, accurate simulated spectral quantum efficiencies were achieved. However, the data used to generate those models is tedious and costly to gather. Previously, the data was collected by illuminating a surface with many different monochromatic lights. Monochromatic lights are expensive, and repeating the measurements for each one takes a lot of time. In this project, the data is collected by illuminating the surface with three different illuminants. This simpler data allows for quicker and cheaper data collection.

The purpose of this project is to model the spectral quantum efficiency (QE) of a camera sensor. Since we do not know the spectral transmittance of the optics and filters in the camera, we take all the wavelength dependency to lie in the sensor. Accurately modeling the spectral QE can help validate other projects. In this project, we model the spectral QE and then test it on new data.

Background

There are a couple of important concepts to understand before going into the setup of the project.

Spectral Quantum Efficiency

The spectral quantum efficiency of a sensor is the number of electrons generated per incident photon, and it is typically a function of wavelength. Since we do not know the wavelength characteristics of the optics and filters in the camera, we assume that all the wavelength dependency occurs at the camera sensor. Therefore, we have one spectral sensitivity function [1]. This function relates the spectral radiance reflected from each surface (the spectral radiance measurements) to the sensor response (RGB values). To set up the equation, we let n be the number of sampled wavelengths and m be the number of patches on the Macbeth ColorChecker (MCC). Let the radiance measurements be an n × m matrix, M. Let the sensor response be an m × 3 matrix, R. And let the spectral quantum efficiency be the n × 3 matrix, S. We can relate these matrices by the following linear equation:

$$R = M^{T} S$$

We are given R and M, so we need to solve for S.
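As a rough illustration of this setup, the sketch below solves for S by least squares in Python with NumPy (the project itself used MATLAB). It assumes the relation takes the form R = MᵀS, which is what the stated matrix sizes imply. All data here is random and hypothetical, so the system is well conditioned and S is recovered exactly; the real MCC measurements are far more correlated than this.

```python
import numpy as np

rng = np.random.default_rng(0)

n, m = 31, 72                  # wavelengths (400:10:700 nm), patches (24 x 3 illuminants)
M = rng.random((n, m))         # hypothetical radiance matrix, n x m
S_true = rng.random((n, 3))    # hypothetical spectral QE, n x 3
R = M.T @ S_true               # simulated sensor responses, m x 3

# Least-squares estimate of S from R = M^T S
S_est, residuals, rank, singular_values = np.linalg.lstsq(M.T, R, rcond=None)

print(S_est.shape)
print(np.allclose(S_est, S_true))
```

With noise-free, independent synthetic samples the estimate matches the simulated S. The later sections explain why this does not carry over to the measured data.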

Overfitting

Overfitting is a phenomenon in which the model fits the training data so closely that it may not generalize to new datasets. In other words, the model will accurately predict results for the training data, but will perform much worse on new datasets [2].

Singular Value Decomposition (SVD)

Singular value decomposition (SVD) is the factorization of a matrix A into three different matrices, as shown below:

$$A = U D V^{T}$$

The columns of U and V are the left and right singular vectors, respectively. D is a diagonal matrix of singular values [3][4]. SVD is a direct way of completing a principal component analysis of a dataset. It is a helpful technique for understanding variation in a dataset and for approximating a high-dimensional matrix with lower-dimensional matrices.
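The factorization and the low-rank approximation it enables can be sketched in a few lines of Python with NumPy. The matrix here is random and purely illustrative, and the choice of k = 5 is arbitrary.

```python
import numpy as np

rng = np.random.default_rng(1)
A = rng.random((31, 72))    # hypothetical n x m data matrix

# Economy-size SVD: A = U @ diag(d) @ Vt, with d sorted largest first
U, d, Vt = np.linalg.svd(A, full_matrices=False)

# The three factors reproduce the original matrix
A_rebuilt = U @ np.diag(d) @ Vt
print(np.allclose(A, A_rebuilt))

# A rank-k approximation keeps only the k largest singular values
k = 5
A_k = U[:, :k] @ np.diag(d[:k]) @ Vt[:k, :]
print(np.linalg.matrix_rank(A_k))
```

Note that `np.linalg.svd` returns the singular values as a vector and returns Vᵀ rather than V.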

Methods

In this section, the data and instruments used in the project are explained, as well as the techniques.

Data and Instrumentation

This project was completed using MATLAB. Especially important was the use of ImageVal's Image Systems Engineering Toolbox for Cameras (ISETCAM), an educational tool that can simulate certain aspects of image systems, such as sensor and display models [1].

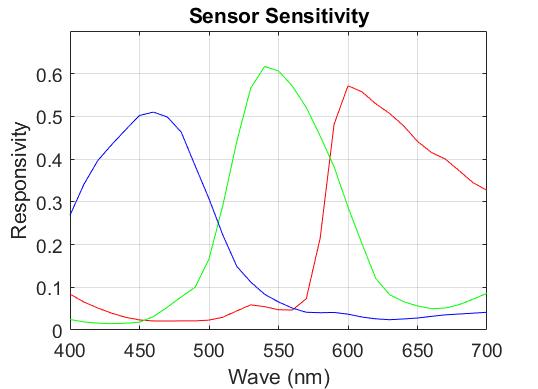

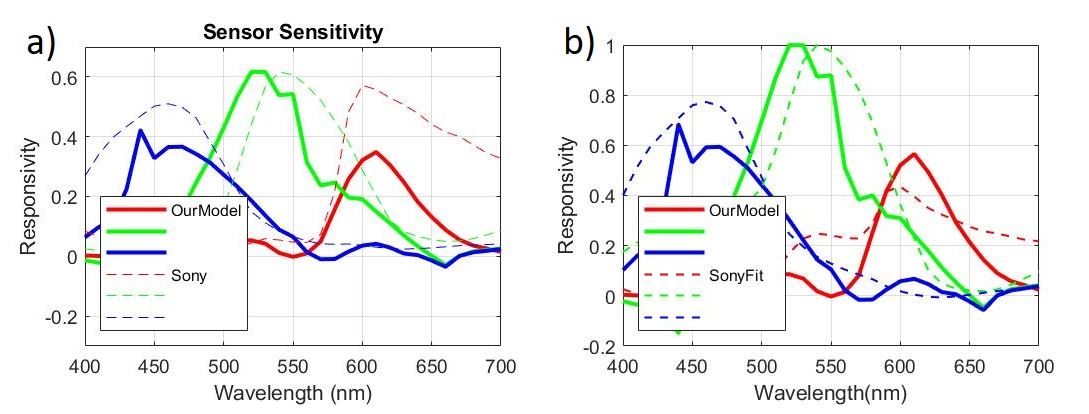

Two types of data are necessary for this project: spectral radiance measurements and raw camera images. To gather the data, a Macbeth ColorChecker (MCC) is illuminated by a known illuminant. The radiance measurements are then taken using a spectrophotometer (in this case the Photoresearch spectrophotometer, model PR670). This is considered the input to the camera. The Google Pixel 4A is used to capture the camera output (i.e., the images), which is saved as Digital Negative (DNG) files. This phone uses the Sony IMX363 sensor, which is well documented. Sony has published the sensor's spectral sensitivity data, which has previously been entered into ISETCAM and is shown in Figure #. This provides a useful reference to compare our model against.

Since this project both creates and tests the model, we have "training" datasets and test datasets. The former are referred to as Measurements1 and the latter as Measurements2. Measurements1 contains radiance data and DNG files for the MCC illuminated by each of three illuminants: Tungsten (A), Cool White Fluorescent (CWF), and Daylight (DAY). Measurements2 contains radiance data and DNG files for the MCC illuminated by a Tungsten light. All the camera images are taken with the same ISO speed but different exposure times.

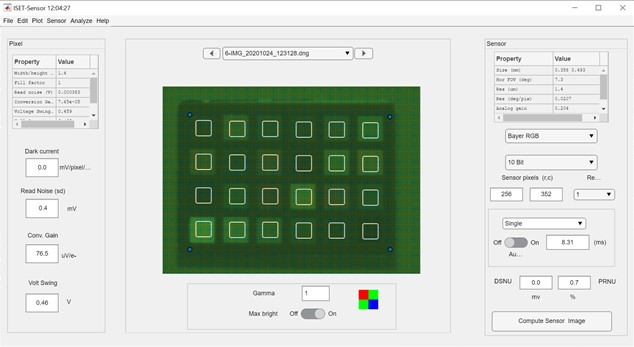

We have to extract the RGB values from the DNG files. For this, we use ISETCAM's sensor functions. First, we read the DNG file using sensorDNGRead. Then we open a window that displays the sensor image using sensorWindow. From there, we estimate the rectangles that cover the 24 patches on the MCC using the chartCornerpoints function. An example of the sensor window with the rectangles charted is shown in Figure 1. If we are satisfied with the placement of the rectangles, we get the digital values from each square using the function chartRectsData. We then compute the mean and standard deviation of the RGB values for each square. More detail about how to complete these steps is in the source code tls_ProjectHelpersSelect.mlx linked in the Appendix.
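The final step, reducing each patch to a mean and standard deviation per channel, can be sketched as follows in Python with NumPy. This does not reproduce the ISETCAM calls. The `patch_pixels` list is a hypothetical stand-in for the per-patch digital values, filled with synthetic numbers, and the 100 pixels per patch is an arbitrary choice.

```python
import numpy as np

rng = np.random.default_rng(2)

# Hypothetical per-patch pixel data: 24 patches, each with 100 pixels x 3 channels
patch_pixels = [rng.normal(loc=0.5, scale=0.02, size=(100, 3)) for _ in range(24)]

# One row per patch, one column per channel (R, G, B)
patch_mean = np.array([p.mean(axis=0) for p in patch_pixels])
patch_std = np.array([p.std(axis=0, ddof=1) for p in patch_pixels])  # sample std

print(patch_mean.shape, patch_std.shape)
```

The 24 × 3 array of means for one image corresponds to 24 rows of the sensor response matrix R described in the Background section.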

Making the Spectral QE Model

This project utilizes multiple methods to improve the spectral quantum efficiency model.

Simple Linear Equation

To initially solve for the spectral quantum efficiency matrix, the linear equation explained in the Background section was used. However, doing this alone is not sufficient to get an accurate model. This is the case, in part, because the radiance data was generated by measuring the radiance of the MCC. Our data technically has 72 samples (24 patches per MCC × 3 illuminants), but the patches are not independent. As we'll see in the coming sections, there are fewer than 10 independent measurements in our radiance dataset. Since we are trying to estimate the spectral QE at 31 wavelengths (400:10:700 nm), we are heavily under-sampled.

Furthermore, simply solving the linear equation will create an overfitted model.

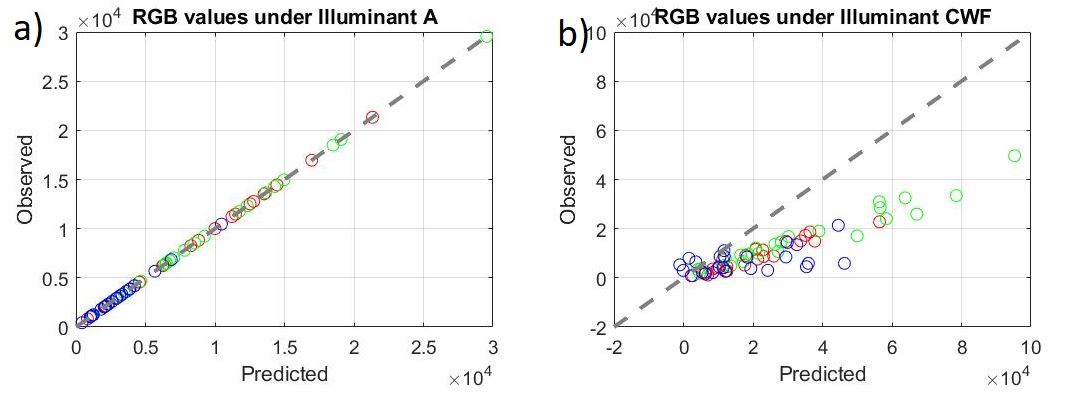

For example, the spectral QE was solved for using only the subset of the radiance data in which the MCC is illuminated by a Tungsten light. This spectral QE predicts the observed RGB values for this illumination with 0 error. However, it does a very poor job of predicting the observed RGB values for a different illumination, with an RMS error over 1. These results are shown in Figure 2. One way of fixing this is to use only a few of the most important principal components, as discussed in the next section.
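The same behavior can be reproduced on synthetic data. The sketch below (Python with NumPy, hypothetical random data, not the project's measurements) fits 31 wavelength samples from only 24 patches using the pseudoinverse. The fit reproduces the training responses essentially exactly but predicts a second set of patches much less well.

```python
import numpy as np

rng = np.random.default_rng(3)
n = 31                                   # number of wavelength samples
S_true = rng.random((n, 3))              # hypothetical "true" spectral QE


def simulate(num_patches):
    """Return a random radiance matrix and noisy responses for it."""
    M = rng.random((n, num_patches))
    R = M.T @ S_true + rng.normal(scale=0.01, size=(num_patches, 3))
    return M, R


def rms(a, b):
    """Root-mean-square difference over all entries."""
    return float(np.sqrt(np.mean((a - b) ** 2)))


M_train, R_train = simulate(24)          # 24 equations, 31 unknowns per channel
M_test, R_test = simulate(24)

# Minimum-norm solution of the under-determined system
S_fit = np.linalg.pinv(M_train.T) @ R_train

train_err = rms(M_train.T @ S_fit, R_train)
test_err = rms(M_test.T @ S_fit, R_test)
print(train_err, test_err)
```

Because there are more unknowns than equations, the fit can absorb the noise in the training responses, which is the mechanism behind the zero training error described above.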

Lower Dimensional Model

There are multiple ways of solving for the principal components of the radiance data. In this work, singular value decomposition (SVD) was used. Performing SVD on the radiance data outputs three matrices: U, D, and V. We can use D to calculate how much of the variation in the dataset each component captures. We use the MATLAB cumsum function to find the cumulative sum of the elements along the diagonal of D, then divide the cumulative sum by the sum of all the diagonal elements of D. When this ratio equals 1, all of the variation of the dataset is captured by that subset of components. When it is close to zero, very little of the variation is represented. Performing this operation on the radiance data gives the plot shown in Figure 3. The proportion of variation is almost 1 once about 10 singular values are included in the sum. To prevent overfitting, we want to include only the principal components that account for less than 0.95 of the variation. In our case, this means fewer than 8 principal components.
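The cumulative-proportion calculation can be sketched in plain Python as below. The singular values are made up for illustration. The sketch follows the description above and sums the singular values directly; summing their squares is another common way to define the variance explained and would give different proportions.

```python
from itertools import accumulate

# Hypothetical singular values (the diagonal of D), sorted largest first
singular_values = [10.0, 5.0, 2.0, 1.5, 1.0, 0.3, 0.1, 0.05, 0.03, 0.02]

# Cumulative sum divided by the total, analogous to cumsum(d) / sum(d) in MATLAB
total = sum(singular_values)
cumulative_proportion = [c / total for c in accumulate(singular_values)]

# Count the leading components whose cumulative proportion stays below the threshold
threshold = 0.95
p_below = sum(1 for c in cumulative_proportion if c < threshold)

print(cumulative_proportion)
print(p_below)
```

For these made-up values, the first four components stay below the 0.95 threshold and the fifth crosses it.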

Now that we know how many principal components to include in our low-dimensional model, we can represent the spectral QE as a weighted sum of those components,

$$S = B W$$

where B is an n × p basis matrix and W is a p × 3 matrix of weights.

For our new basis, we use the most important principal components, which we take from the left singular vectors, U. If we let p be the number of principal components we use, then our new basis is the first p columns of U:

$$B = [u_1, u_2, \dots, u_p]$$

As explained in the previous section, we want to choose p such that the proportion of variation represented is less than 0.95, but not so small that too little of the variation is represented. In this work, p = 5 is chosen.

Now, we just have to solve for the weights. Substituting the low-dimensional model into the linear equation gives $R = M^{T} B W$, so

$$W = (M^{T} B)^{+} R$$

where $^{+}$ denotes the pseudoinverse.

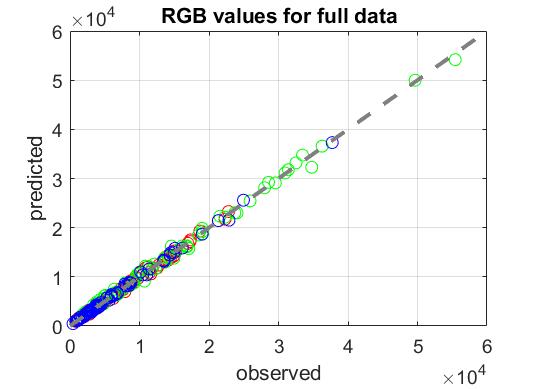

While there are many ways to calculate the inverse of a matrix in MATLAB, the pseudoinverse (pinv) was chosen because it saves computing time and outputs a reasonably accurate inverse. The spectral QE can then be calculated from the basis and weights to give us a lower-dimensional model. The lower-dimensional model does not have a problem with overfitting. To check this, the model was built from the full radiance dataset and then used to predict the RGB values. The results, compared to the observed values, are shown in Figure 4. Here, the RMS error was 0.04. A good metric to compare the RMS error to is the standard deviation of the dataset, which in our case was 0.051. These values are quite close together, making this a good approximation.
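A minimal sketch of the low-dimensional fit, written in Python with NumPy rather than the project's MATLAB, is shown below. It assumes the basis is the first p left singular vectors of the radiance matrix and that the weights come from a pseudoinverse. The names `B`, `W`, and `S_low` are illustrative, and the data is synthetic, built with low rank to mimic correlated patches.

```python
import numpy as np

rng = np.random.default_rng(4)
n, m, p = 31, 72, 5

# Hypothetical radiance data of rank 8, mimicking strongly correlated patches
M = rng.random((n, 8)) @ rng.random((8, m))
S_true = rng.random((n, 3))
R = M.T @ S_true + rng.normal(scale=0.01, size=(m, 3))   # noisy responses

# Basis: the first p left singular vectors of the radiance matrix
U, d, Vt = np.linalg.svd(M, full_matrices=False)
B = U[:, :p]                          # n x p

# Weights from the pseudoinverse, then the low-dimensional spectral QE
W = np.linalg.pinv(M.T @ B) @ R       # p x 3
S_low = B @ W                         # n x 3

# RMS error between predicted and simulated responses
rms = float(np.sqrt(np.mean((M.T @ S_low - R) ** 2)))
print(S_low.shape, rms)
```

Only p × 3 weights are estimated instead of n × 3 values, which is what limits the model's freedom to fit noise.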

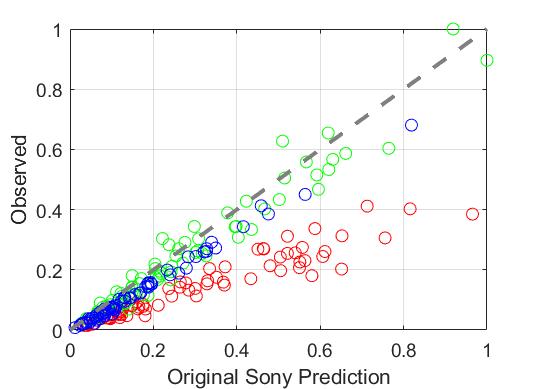

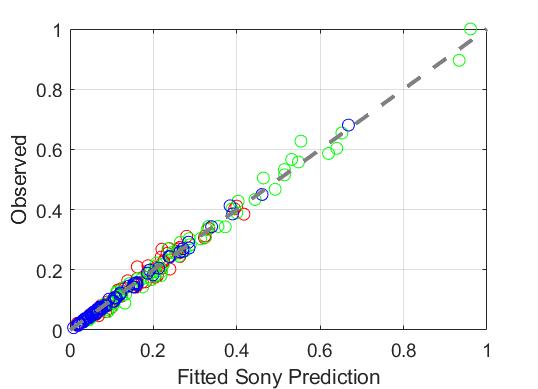

Best Linear Fit

The RGB values predicted by Sony's spectral QE model differ noticeably from the observed RGB values, with an error of about 0.4. This is shown in Figure #. The red channel in particular is off. This discrepancy between Sony's characterization and what is observed could be caused by mixing between channels and by incorrect channel gains.

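We can fit the Sony model to our observed data with a 3 × 3 linear transformation, L, chosen so that the Sony predictions best match the observed RGB values R in the least-squares sense:

$$R \approx M^{T} S_{\text{Sony}} L$$

Now, to get the fitted Sony spectral quantum efficiency, we just multiply the original Sony curves by the linear transformation L:

$$S_{\text{fit}} = S_{\text{Sony}} L$$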

Now, re-predicting the RGB values with the fitted Sony Spectral QE, the error is much lower, about 0.06. The results of this re-prediction are shown in Figure #.
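One way to compute such a transformation is an ordinary least-squares fit between the Sony-predicted and observed RGB values. The sketch below (Python with NumPy) assumes L is a 3 × 3 matrix applied on the right of the n × 3 Sony curves. The curves, radiance data, and mixing matrix `L_true` are all made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(5)
n, m = 31, 72

M = rng.random((n, m))                  # hypothetical radiance matrix
S_sony = rng.random((n, 3))             # stand-in for the published Sony curves
L_true = np.array([[1.20, 0.10, 0.00],
                   [0.05, 0.90, 0.10],
                   [0.00, 0.20, 0.80]]) # made-up channel gains and mixing
R_obs = M.T @ S_sony @ L_true           # simulated "observed" RGB values

# RGB values predicted by the unfitted Sony curves
R_sony = M.T @ S_sony

# Least-squares fit of the 3 x 3 transformation: R_obs ~ R_sony @ L
L, *_ = np.linalg.lstsq(R_sony, R_obs, rcond=None)

# Fitted curves and their predictions
S_sony_fit = S_sony @ L
R_refit = M.T @ S_sony_fit
print(np.round(L, 3))
```

Because the simulated responses are noise-free, the fit recovers the made-up matrix exactly. With measured data, some residual error remains.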

Testing the Model

Once we are confident in our model, we can test it with a new dataset, Measurements2.

First, we read the radiance data and extract the RGB values from the DNG files. Then we apply our low-dimensional model to the new radiance data to predict the RGB values, and we compare the predicted and observed RGB values. We can also compare these values with the predictions of the Sony model. Finally, we calculate the RMS error between the predicted and observed values to quantitatively evaluate how good our prediction is.
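The RMS error used for this comparison can be computed as in the following plain-Python sketch. The function name `rms_error` and the two-patch example values are hypothetical. The error is taken over all patches and channels together, which is one reasonable reading of the metric.

```python
import math


def rms_error(predicted, observed):
    """Root-mean-square difference over all patches and channels."""
    diffs = [p - o
             for pred_row, obs_row in zip(predicted, observed)
             for p, o in zip(pred_row, obs_row)]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Two hypothetical patches, each with (R, G, B) values
predicted = [[0.20, 0.30, 0.10], [0.50, 0.45, 0.40]]
observed = [[0.22, 0.27, 0.10], [0.50, 0.49, 0.38]]

error = rms_error(predicted, observed)
print(error)
```

Using the same function for both the low-dimensional model and the Sony model puts the two sets of predictions on a common scale.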

Results

After completing all the previous steps, we have a spectral QE model and a linearly fitted Sony spectral QE that we can apply to the new radiance dataset from Measurements2. Figure # shows our low-dimensional model compared to the original (a) and fitted (b) Sony spectral QE models.

Sony Linear Fit and Our Estimate

The fitted Sony spectral QE

RGB Value Comparisons and Error

Conclusions

References

- Digital camera simulation (2012). J. E. Farrell, P. B. Catrysse, B. A. Wandell. Applied Optics, Vol. 51, Iss. 4, pp. A80–A90

- Overfitting Definition

- Singular value decomposition. (2020, November 09). Retrieved November 27, 2020

- Peng, R. (2020, May 01). Dimension Reduction. Retrieved November 27, 2020