Impact of Camera Characteristics on DNN Model Inference Performance

| Line 95: | Line 95: | ||

=== F/# and Focal Length === | === F/# and Focal Length === | ||

=== | === Exposure Time === | ||

<gallery mode="packed" widths=500px heights=500px> | |||

Image:Beagle_with_10_ms_Exp_Time.jpg|500px|Beagle Image with 10 milliseconds exposure time | |||

Image:Beagle_with_80_ms_Exp_Time.jpg|500px|Beagle Image with 80 milliseconds exposure time | |||

</gallery> | |||

In the Exposure Time experiment, the images clearly reveal the impact of exposure time. A direct relationship is observed: as exposure time increases, the images become brighter, and conversely, they become darker. | |||

<gallery mode="packed" widths=300px heights=300px> | |||

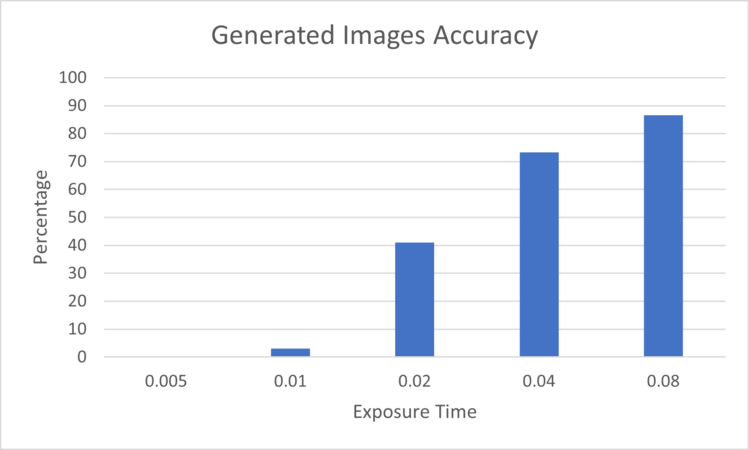

Image:Exposure Time Generated Accuracy (EfficientNetB0).png | Figure 1: Generated Images Accuracy for the different Exposure Times evaluated on EfficientNetB0 | |||

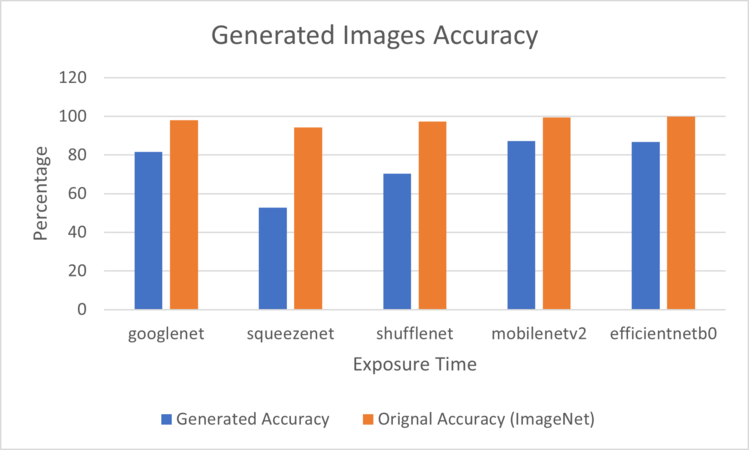

Image:Exposure Time DNNs Generated Accuracy (80 milliseconds exp time).png | Figure 2: Generated Images Accuracy for 80 milliseconds Exposure Time evaluated on deferent DNNs | |||

</gallery> | |||

In Figure 1, the chart illustrates the impact of the exposure time on the classification accuracy. The greater the exposure time, the better the classification accuracy since the images are clearer and sharper. Also, it is noticeable among the various deep neural networks (DNNs) employed to assess the images, the performance of the SqueezeNet DNN was the worst with a 52.8% accuracy, as shown in Figure 2, while the MobileNetV2 archived the best accuracy with 87.2%. | |||

=== Read Noise === | === Read Noise === | ||

Revision as of 06:28, 18 December 2023

Introduction

Many image recognition applications classify images using pre-trained neural networks. However, the test images used in these applications may be captured by different users, with different cameras, in different environments. How does image quality affect the application's decisions or classifications? Using ISETCameraDesigner, we generate images with different camera characteristics. This set of images is evaluated on different DNN models, and we draw conclusions about each model's performance and scores, and about how image quality affects the predictions.

Background

F/#

The ratio of the focal length to the aperture diameter:

$$N = \frac{f}{D}$$

N: F/# (F-Number)

f: Focal Length (m)

D: Aperture Diameter (m)
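As a worked example, rearranging the definition gives the aperture diameter for a given lens setting. The values below are taken from the focal lengths and f-numbers used in the F/# - Focal Length experiment.

$$D = \frac{f}{N}: \qquad f = 50\,\text{mm},\ N = 2 \;\Rightarrow\; D = 25\,\text{mm}; \qquad f = 50\,\text{mm},\ N = 32 \;\Rightarrow\; D \approx 1.56\,\text{mm}$$

At a fixed focal length, a larger F/# means a smaller aperture and less light reaching the sensor.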

Focal Length

A measure of how strongly the optical system converges or diverges light; it is the inverse of the system's optical power.

Exposure Time

The duration over which the camera sensor collects light.
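Exposure time and F/# together set how much light reaches the sensor. A common photographic approximation is that sensor exposure is proportional to t / N². The sketch below assumes a fixed scene luminance and ignores lens transmission and vignetting. The function names are hypothetical and do not come from ISETCam. It expresses exposure differences in stops (factors of two).

```python
import math


def relative_exposure(exposure_time_s: float, f_number: float) -> float:
    """Relative sensor exposure, proportional to t / N**2.

    Assumes a fixed scene luminance and drops constant factors such as
    lens transmission and vignetting.
    """
    return exposure_time_s / f_number ** 2


def stops_between(reference: float, other: float) -> float:
    """Exposure difference in stops (factors of two); positive means more light."""
    return math.log2(other / reference)


# Same f-number, 10 ms vs 80 ms: 8x the light, i.e. 3 stops brighter.
print(f"{stops_between(relative_exposure(0.010, 4.0), relative_exposure(0.080, 4.0)):.2f}")  # 3.00
# Same exposure time, f/4 vs f/2: 4x the light, i.e. 2 stops brighter.
print(f"{stops_between(relative_exposure(0.010, 4.0), relative_exposure(0.010, 2.0)):.2f}")  # 2.00
```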

ISET AI Camera Designer

- Builds camera subsystems: optics, sensor, and image processing (IP).

- Uses a collection of images.

- Generates images from the original images using the camera design.

- Evaluates the original images against the generated images on different pre-trained DNNs.

- Scores are based on the classification match (top-1) between the original and generated images.
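The top-1 match score described above can be sketched as follows. This is a minimal sketch, assuming each image is reduced to a single top-1 label. The function name and the example labels are hypothetical and are not the Camera Designer's API.

```python
from typing import Sequence


def top1_match_score(original_labels: Sequence[str],
                     generated_labels: Sequence[str]) -> float:
    """Fraction of images whose generated-image top-1 label equals the
    original-image top-1 label."""
    if len(original_labels) != len(generated_labels):
        raise ValueError("label lists must have the same length")
    if not original_labels:
        raise ValueError("need at least one image")
    matches = sum(o == g for o, g in zip(original_labels, generated_labels))
    return matches / len(original_labels)


# Hypothetical top-1 predictions for four images.
original = ["beagle", "beagle", "beagle", "basset"]
generated = ["beagle", "English foxhound", "beagle", "basset"]
print(top1_match_score(original, generated))  # 0.75
```

Averaging this score over a whole category, such as the 196 Beagle images, gives a single accuracy number per camera configuration and DNN.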

ImageNet

ImageNet Dataset:

- 1000 classes/categories

- 1,281,167 training images

- 50,000 validation images

- 100,000 test images

DNN Architecture

- Pre-trained on the ImageNet dataset and available in MATLAB

- Chosen for their small footprint, targeting embedded applications

- All are Convolutional Neural Networks (CNNs)

- Output a predicted class from the softmax probability distribution

| DNN Architecture | Depth | Size | Parameters (Millions) | Image Input Size |
|---|---|---|---|---|
| GoogLeNet | 22 | 27 MB | 7.0 | 224-by-224 |
| SqueezeNet | 18 | 5.2 MB | 1.24 | 227-by-227 |
| ShuffleNet | 50 | 5.4 MB | 1.4 | 224-by-224 |
| MobileNetV2 | 53 | 13 MB | 3.5 | 224-by-224 |
| EfficientNetB0 | 85 | 20 MB | 5.3 | 224-by-224 |
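Each network's final softmax layer turns raw class scores (logits) into a probability distribution, and the predicted class is the one with the highest probability. The following is a minimal sketch of that step using a hypothetical 3-class output; a real ImageNet classifier outputs 1000 scores. The function names are illustrative and are not MATLAB's API.

```python
import math
from typing import List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Convert raw scores into probabilities that sum to 1.

    Subtracting the max logit first avoids overflow in exp() without
    changing the result.
    """
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(logits: Sequence[float], class_names: Sequence[str]) -> Tuple[str, float]:
    """Return the top-1 class name and its softmax probability."""
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda i: probs[i])
    return class_names[best], probs[best]


# Hypothetical logits for three classes.
label, prob = predict([2.0, 1.0, 0.1], ["beagle", "basset", "English foxhound"])
print(label, round(prob, 3))  # beagle 0.659
```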

Methods

This project was run entirely in MATLAB and ISETCam, which were used to generate images with different camera characteristics. Across the experiments, we combined different DNN models, ImageNet images, and camera characteristics, and we measured and analyzed the mean classification accuracy on the Beagle category (196 images).

ISET AI Camera Designer

- Simulates cameras built from configurable subsystems: optics, sensor, and IP.

- Uses a collection of images selected by the user.

- Modifies the images by passing them through the designed subsystems.

- Evaluates the original and generated images on different pre-trained DNNs. Scores are based on the classification match between the original and generated images, depending on the number of score classes.
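The text does not define exactly how "the number of score classes" enters the score. One plausible reading is a top-k style match. In this reading, a generated image counts as a match if the original image's top-1 class appears among the generated image's k most probable classes. The sketch below implements that assumed interpretation only. The function names and probability values are hypothetical.

```python
from typing import List, Sequence


def top_k(probs: Sequence[float], k: int) -> List[int]:
    """Indices of the k highest-probability classes, best first."""
    return sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]


def topk_match(original_probs: Sequence[float],
               generated_probs: Sequence[float], k: int) -> bool:
    """Assumed rule: the original's top-1 class is in the generated image's top-k."""
    return top_k(original_probs, 1)[0] in top_k(generated_probs, k)


orig = [0.70, 0.20, 0.10]  # class 0 is top-1 for the original image
gen = [0.30, 0.60, 0.10]   # class 1 is top-1 for the generated image
print(topk_match(orig, gen, 1))  # False
print(topk_match(orig, gen, 2))  # True
```

With k = 1 this reduces to the top-1 match; larger k makes the score more tolerant of small shifts in the prediction.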

Experiments

In this project, we varied the camera characteristics by adjusting parameters of the camera subsystems, such as the optics and the image sensor. We ran several experiments to investigate how the different camera characteristics affect DNN classification.

The parameter values used in each experiment are as follows:

F/# - Focal Length Combination

In this experiment, we generated images for every combination of the following f-numbers and focal lengths:

F/#: f/1.0, f/1.4, f/2, f/4, f/5.6, f/8, f/11, f/16, f/22, f/32

Focal lengths (used with each F/#): 15, 20, 35, 50 mm
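The F/# and focal length lists above define a 10 × 4 grid, which gives 40 camera configurations. The sketch below enumerates the grid and computes each configuration's aperture diameter with D = f / N. The dictionary keys are hypothetical names chosen for illustration, not Camera Designer parameter names.

```python
from itertools import product

# Values from the F/# - Focal Length Combination experiment.
F_NUMBERS = [1.0, 1.4, 2, 4, 5.6, 8, 11, 16, 22, 32]
FOCAL_LENGTHS_MM = [15, 20, 35, 50]

# One entry per (F/#, focal length) pair; key names are hypothetical.
configs = [
    {"f_number": n, "focal_length_mm": f, "aperture_mm": f / n}
    for n, f in product(F_NUMBERS, FOCAL_LENGTHS_MM)
]

apertures = [c["aperture_mm"] for c in configs]
print(len(configs))                    # 40
print(min(apertures), max(apertures))  # 0.46875 50.0
```

The aperture diameter spans roughly two orders of magnitude across the grid, from 15 mm at f/32 up to 50 mm at f/1.0.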

Exposure Time

In this experiment, we generated images by modifying only the exposure time. The exposure times used in the data collection are as follows:

Exposure Times:

Read Noise

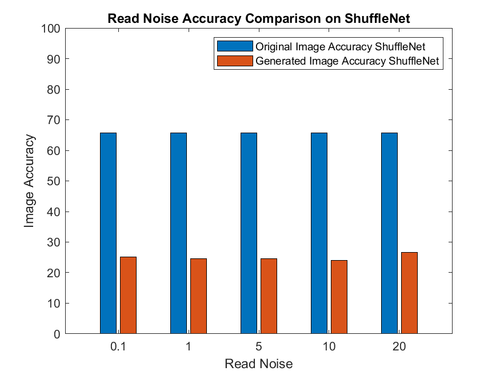

In this experiment, we generated images with the default settings of the Camera Designer app and modified only the read noise. The read noise values used in the data collection are as follows:

Read Noise: 0.1, 1, 5, 10, 20 mV
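A common simplified model treats read noise as additive, zero-mean Gaussian noise on the pixel voltage. In this model, the standard deviation equals the read noise value. The sketch below applies that assumption to a hypothetical flat 0.5 V image patch for each read noise level in the experiment. It is not ISETCam's sensor pipeline, and it ignores photon shot noise, conversion gain and clipping.

```python
import random
import statistics
from typing import List, Sequence

READ_NOISE_MV = [0.1, 1, 5, 10, 20]  # values from the Read Noise experiment


def add_read_noise(voltages: Sequence[float], read_noise_mv: float,
                   rng: random.Random) -> List[float]:
    """Add zero-mean Gaussian noise with std read_noise_mv (mV) to voltages (V)."""
    sigma_v = read_noise_mv / 1000.0  # convert mV to V
    return [v + rng.gauss(0.0, sigma_v) for v in voltages]


rng = random.Random(0)       # fixed seed for reproducibility
flat_patch = [0.5] * 10_000  # hypothetical uniform 0.5 V patch
for rn in READ_NOISE_MV:
    noisy = add_read_noise(flat_patch, rn, rng)
    measured_mv = 1000 * statistics.pstdev(noisy)
    print(f"read noise {rn:>4} mV -> measured std {measured_mv:.2f} mV")
```

The measured standard deviation tracks the configured read noise. At 20 mV, the noise is a noticeable fraction of the 0.5 V signal level assumed here.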

Results

F/# and Focal Length

Exposure Time

Beagle Image with 10 milliseconds exposure time

Beagle Image with 80 milliseconds exposure time

In the Exposure Time experiment, the images clearly show the impact of exposure time. There is a direct relationship: as exposure time increases, the images become brighter, and as it decreases, they become darker.
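The brightening described above can be illustrated with a simplified linear sensor model. In this model, collected signal grows in proportion to exposure time until the pixel saturates at its full-well capacity. The photon rate and full-well values below are hypothetical, chosen only for illustration, and the model ignores noise and image processing.

```python
# Hypothetical sensor values, chosen only for illustration.
PHOTON_RATE_E_PER_S = 100_000.0  # photoelectrons per second at one pixel
FULL_WELL_E = 9_000.0            # pixel saturation level (full-well capacity)


def pixel_signal(exposure_time_s: float) -> float:
    """Collected photoelectrons: linear in exposure time, clipped at full well."""
    return min(PHOTON_RATE_E_PER_S * exposure_time_s, FULL_WELL_E)


for t_ms in (10, 20, 40, 80, 160):
    brightness = pixel_signal(t_ms / 1000) / FULL_WELL_E  # 0 = black, 1 = saturated
    print(f"{t_ms:>4} ms -> relative brightness {brightness:.3f}")
```

With these values, brightness doubles with each doubling of exposure time (0.111, 0.222, 0.444, 0.889). At 160 ms it clips at 1.000, where highlight detail would be lost.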

-

Figure 1: Generated Images Accuracy for the different Exposure Times evaluated on EfficientNetB0

-

Figure 2: Generated Images Accuracy for 80 milliseconds Exposure Time evaluated on deferent DNNs

Figure 1 shows the impact of exposure time on the classification accuracy of EfficientNetB0. Over the tested range, longer exposure times gave higher classification accuracy, since the longer exposures produce brighter, clearer images. Figure 2 compares the deep neural networks (DNNs) used to evaluate the 80 ms images. Among them, SqueezeNet performed worst, with 52.8% accuracy, while MobileNetV2 achieved the best accuracy, with 87.2%.

Read Noise

Conclusions

- DNN model performance is impacted by camera parameters

- Determining the direction and magnitude of each parameter's impact is an empirical, iterative discovery process

- Camera parameter adjustments can be applied at training time to either restrict or widen the data distribution, depending on the application:

  - Restrict to target a smaller model parameter space (more constrained application)

  - Widen to generalize better (less constrained application)

- At inference time, camera parameter adjustments can bring an out-of-distribution example back into the distribution the model was trained on
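The training-time "restrict or widen" idea in the conclusions can be sketched as sampling camera parameters from a narrow or a wide range before generating training images. This is a hypothetical illustration. The ranges, function names and dictionary keys are assumptions, and the study did not train models this way. The samples are drawn log-uniformly because both exposure time and F/# act on image brightness in stops.

```python
import math
import random
from typing import Dict, List, Tuple


def log_uniform(rng: random.Random, lo: float, hi: float) -> float:
    """Sample uniformly in log2 space, so every stop in [lo, hi] is equally likely."""
    return 2 ** rng.uniform(math.log2(lo), math.log2(hi))


def sample_camera_params(rng: random.Random,
                         exposure_ms: Tuple[float, float],
                         f_number: Tuple[float, float],
                         n: int) -> List[Dict[str, float]]:
    """Draw n hypothetical camera configurations for training-time augmentation."""
    return [
        {"exposure_ms": log_uniform(rng, *exposure_ms),
         "f_number": log_uniform(rng, *f_number)}
        for _ in range(n)
    ]


def spread(samples: List[Dict[str, float]], key: str) -> Tuple[float, float]:
    """Smallest and largest sampled value for one parameter."""
    values = [s[key] for s in samples]
    return min(values), max(values)


rng = random.Random(42)
# Restrict: stay near one target camera (more constrained application).
narrow = sample_camera_params(rng, exposure_ms=(60, 100), f_number=(2.8, 5.6), n=1000)
# Widen: cover a broad range (here 10 to 80 ms and f/1 to f/32) to generalize better.
wide = sample_camera_params(rng, exposure_ms=(10, 80), f_number=(1.0, 32.0), n=1000)

print("narrow f/# range:", spread(narrow, "f_number"))
print("wide f/# range:  ", spread(wide, "f_number"))
```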
