Thermal Imaging and Pitvipers

| Line 18: | Line 18: | ||

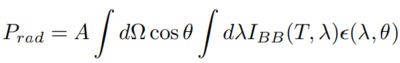

There are no geometrical terms here - the object is assumed to be a “blackbody” that perfectly absorbs all incident light, and thus also perfectly emits thermal light. Most everyday objects are not perfect absorbers, but instead are partially reflective. We can model these “greybodies” by multiplying the blackbody spectrum by the emissivity - a number between 0 and 1 that represents how absorptive or emissive the object is. In general, the emissivity depends on wavelength and angle, but oftentimes an average emissivity is sufficient. | There are no geometrical terms here - the object is assumed to be a “blackbody” that perfectly absorbs all incident light, and thus also perfectly emits thermal light. Most everyday objects are not perfect absorbers, but instead are partially reflective. We can model these “greybodies” by multiplying the blackbody spectrum by the emissivity - a number between 0 and 1 that represents how absorptive or emissive the object is. In general, the emissivity depends on wavelength and angle, but oftentimes an average emissivity is sufficient. | ||

By integrating the greybody intensity spectrum we find that the power of thermal radiation scales with temperature to the fourth power times the emissivity. | By integrating the greybody intensity spectrum we find that the power of thermal radiation scales with temperature to the fourth power times the emissivity. | ||

[[File:eq_bbrad_power_int.png|400px]], which | [[File:eq_bbrad_power_int.png|400px]], which for a constant emissivity is simply | ||

[[File:eq_bbrad_power.png|150px]] | [[File:eq_bbrad_power.png|150px]] | ||

<!-- | <!-- | ||

Revision as of 18:44, 18 December 2023

Introduction

Electromagnetic radiation refers to traveling oscillations in the electric and magnetic fields. The name “electromagnetic radiation” has about nine syllables too many, so in physics it is often simply called “light”. Light can be categorized by the portion of the electromagnetic spectrum it occupies (e.g. “visible light”) or by its source (e.g. “laser light”). In this report we will refer to two regions of the spectrum: the “visible” and the “infrared”. The visible region is the portion our eyes can see, spanning wavelengths of roughly 400 to 700 nm. The infrared region lies between the visible and microwave portions of the spectrum, covering wavelengths from roughly 700 nm to 1 mm. We will focus on light generated by the thermal motion of particles, which we call “thermal light”.

Although different portions of the electromagnetic spectrum are often treated as completely separate, we will show that animals use both the visible and the infrared for vision. Our goal is to understand the components fundamental to imaging in these two wavelength regions and to connect animal vision to today’s technology.

We first briefly introduce the origins of thermal light. We then discuss how vision evolved in animals in both the visible and the infrared, before comparing the two and noting the implications for human-made camera technology in these two regions of the spectrum.

Background

Scientists observed that warm objects emit light and then tried to model its spectrum. In 1900 Max Planck was shown data on the spectrum of thermal light and, the same day, guessed a formula that fit it. He started with the answer and then worked backwards to explain it, changing any physics that disagreed along the way. In doing so he introduced what is now called the Boltzmann constant and quantized the energy of the radiating oscillators, marking the very beginning of quantum mechanics [Hollandt 2012]. His equation for the spectral radiance of thermal light emitted by an object at temperature T is [Planck 1910]

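$$ B_\lambda(\lambda, T) = \frac{2hc^2}{\lambda^5}\,\frac{1}{e^{hc/(\lambda k_B T)} - 1} $$

where $\lambda$ is the wavelength, $h$ is Planck's constant, $c$ is the speed of light, and $k_B$ is the Boltzmann constant.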

There are no geometrical terms here - the object is assumed to be a “blackbody” that perfectly absorbs all incident light, and thus also perfectly emits thermal light. Most everyday objects are not perfect absorbers, but instead are partially reflective. We can model these “greybodies” by multiplying the blackbody spectrum by the emissivity - a number between 0 and 1 that represents how absorptive or emissive the object is. In general, the emissivity depends on wavelength and angle, but oftentimes an average emissivity is sufficient.

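By integrating the greybody spectrum over wavelength (and over all directions of emission from the surface), we find the radiated power per unit area

$$ \frac{P}{A} = \int_0^\infty \varepsilon(\lambda)\, \pi B_\lambda(\lambda, T)\, d\lambda, $$

which for a constant emissivity is simply the Stefan-Boltzmann law

$$ \frac{P}{A} = \varepsilon \sigma T^4, \qquad \sigma = \frac{2\pi^5 k_B^4}{15 c^2 h^3} \approx 5.67 \times 10^{-8}\ \mathrm{W\,m^{-2}\,K^{-4}}. $$

The power of thermal radiation therefore scales with the emissivity times the fourth power of temperature.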
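As a numerical sanity check on the integral above, the sketch below integrates ε·π·B_λ over wavelength with the trapezoid rule and compares the result with εσT⁴. It assumes a wavelength-independent emissivity (0.9 is an arbitrary illustrative value) and uses CODATA values for the constants; the wavelength grid limits and resolution are choices made for this sketch, not part of the original analysis.

```python
import numpy as np

H = 6.62607015e-34       # Planck constant, J s
C = 2.99792458e8         # speed of light, m/s
K_B = 1.380649e-23       # Boltzmann constant, J/K
SIGMA = 5.670374419e-8   # Stefan-Boltzmann constant, W m^-2 K^-4

def planck(lam_m, temp_k):
    """Blackbody spectral radiance B_lambda(lambda, T) in W m^-2 sr^-1 m^-1."""
    x = H * C / (lam_m * K_B * temp_k)
    with np.errstate(over="ignore"):  # exp overflows harmlessly (-> 0 radiance) at short wavelengths
        return 2 * H * C**2 / lam_m**5 / np.expm1(x)

def greybody_power_per_area(temp_k, emissivity=1.0):
    """Trapezoid-rule estimate of the integral of eps * pi * B_lambda over wavelength."""
    lam = np.logspace(-8, -2, 20_000)  # 10 nm to 1 cm on a log-spaced grid
    f = emissivity * np.pi * planck(lam, temp_k)
    return np.sum(0.5 * (f[1:] + f[:-1]) * np.diff(lam))

for t in (300.0, 6000.0):
    numeric = greybody_power_per_area(t, emissivity=0.9)
    closed_form = 0.9 * SIGMA * t**4
    print(f"T = {t:.0f} K: integral = {numeric:.4g} W/m^2, eps*sigma*T^4 = {closed_form:.4g} W/m^2")
```

For a blackbody at 300 K the closed form gives about 459 W/m², and the numerical integral should agree with εσT⁴ to well under 0.1% at both temperatures.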

To summarize, everything emits thermal light, with a spectrum and radiance that depend on material and temperature. The sun has a temperature of about 6000 K and emits light with a peak wavelength in the visible, around 500 nm. Objects at everyday terrestrial temperatures near 300 K emit in the infrared, with a peak wavelength around 10 µm.
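The peak wavelengths quoted above can be checked by evaluating Planck's law on a fine grid and picking the maximum. This brute-force search is only a sketch (the grid range and resolution are arbitrary choices); its result should agree with Wien's displacement law, λ_max·T ≈ 2898 µm·K.

```python
import numpy as np

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K

def planck(lam_m, temp_k):
    """Blackbody spectral radiance B_lambda in W m^-2 sr^-1 m^-1."""
    x = H * C / (lam_m * K_B * temp_k)
    with np.errstate(over="ignore"):  # exp overflow at short wavelengths just gives zero radiance
        return 2 * H * C**2 / lam_m**5 / np.expm1(x)

def peak_wavelength(temp_k):
    """Wavelength (m) where B_lambda peaks, found by searching a fine log-spaced grid."""
    lam = np.logspace(-8, -3, 200_001)  # 10 nm to 1 mm
    return lam[np.argmax(planck(lam, temp_k))]

for t in (6000.0, 300.0):
    print(f"T = {t:.0f} K -> peak at {peak_wavelength(t) * 1e6:.2f} um")
```

For 6000 K and 300 K this should report peaks near 0.48 µm and 9.66 µm respectively, matching the “around 500 nm” and “around 10 µm” figures in the text.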

Vision in Animals

Most animals need to perceive their environment in order to find food and avoid predators, and the ability to sense light has evolved multiple times. In this section, we discuss two different kinds of vision in the animal kingdom. First, we discuss vision with visible light, which detects sunlight reflected off the surroundings. Then we discuss vision with infrared light, which detects the thermal light emitted by the surroundings.

Visible

Vision first evolved about 500 million years ago during the Cambrian period, a time when all life was underwater. The first eyes are thought to have consisted of photoreceptors sensitive only to light and dark. Gradually, some eyes became dimpled and eventually formed pinhole cameras, thereby acquiring spatial resolution. The pinhole aperture eventually became sealed by a cornea, and the lensed eyes we have today evolved. Some animals, however, never evolved lensed eyes and have remained virtually unchanged since the Cambrian. The nautilus is one such living fossil, offering a glimpse of what some early eyes looked like.

The Chambered Nautilus (Source: Monterey Bay Aquarium)

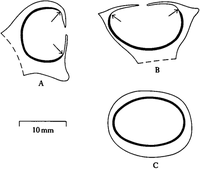

Nautilus eyes consist of a 1 mm diameter pinhole opening into a cavity roughly 10 mm in diameter. The back of the cavity is lined with photoreceptors whose peak sensitivity is to blue light at 465 nm, which makes sense given that mostly blue light penetrates to the depths of 100 m or more where nautiluses live.

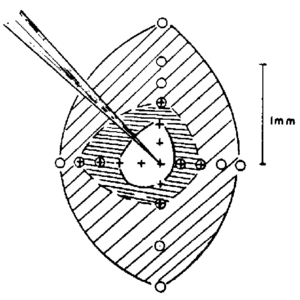

Cross sections of the nautilus' pinhole eyes. (Source: Raj 1984)

To understand the limits of nautilus vision, let’s examine the angular and spatial resolution of pinhole eyes. The visual resolution of a pinhole eye is set by the aperture diameter $D$ and its distance $h$ from the retina. For a large aperture the geometrical blur is $\theta_{geo} \approx D/h$, while the diffraction blur (the Airy disk half-angle) is $\theta_{diff} \approx 1.22\lambda/D$, where $\lambda$ is the wavelength. Projecting the angular resolution $\theta$ onto the retina gives the spatial resolution, i.e. the spot size $s \approx \theta h$. The intensity on the retina is only a fraction of the ambient intensity $I_0$, roughly $I \approx I_0 (D/2h)^2$ for an extended scene, so there is a direct tradeoff between resolution and sensitivity. To summarize, larger apertures increase blurring due to the aperture size, decrease blurring due to diffraction, and increase the amount of light collected.

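The pinhole eyes of the nautilus have large apertures. Plugging in an aperture of $D \approx 1$ mm and a depth comparable to the roughly 10 mm eye cavity, we find that geometric blur dominates: $D/h$ is of order 0.1 rad (several degrees), while the diffraction blur at 465 nm is only $1.22\lambda/D \approx 0.6$ mrad (about 0.03 degrees).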
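The small-angle estimates for pinhole eyes (geometric blur D/h, diffraction blur 1.22λ/D, spot size θ·h, and intensity fraction (D/2h)²) can be bundled into a small calculator. This is a sketch under stated assumptions: the 10 mm depth is taken from the cavity size, the two blur terms are combined by simply taking the larger one, and the `optimal_aperture_m` entry is the textbook pinhole optimum where the two blur terms are equal (D = √(1.22λh)), added here for illustration rather than taken from the source.

```python
import math

def pinhole_eye(aperture_m, depth_m, wavelength_m):
    """Small-angle blur and light-gathering estimates for a pinhole eye."""
    geometric = aperture_m / depth_m                  # geometric blur, rad
    diffraction = 1.22 * wavelength_m / aperture_m    # Airy disk half-angle, rad
    blur = max(geometric, diffraction)                # simplification: dominant term only
    return {
        "geometric_deg": math.degrees(geometric),
        "diffraction_deg": math.degrees(diffraction),
        "spot_m": blur * depth_m,                     # blur projected onto the retina
        "intensity_fraction": (aperture_m / (2 * depth_m)) ** 2,
        # aperture at which geometric and diffraction blur are equal
        "optimal_aperture_m": math.sqrt(1.22 * wavelength_m * depth_m),
    }

# Nautilus-like numbers: 1 mm pupil, ~10 mm deep eye (assumed), blue light at 465 nm
eye = pinhole_eye(1e-3, 10e-3, 465e-9)
for key, value in eye.items():
    print(f"{key}: {value:.3g}")
```

With these inputs the geometric blur is about 5.7 degrees against roughly 0.03 degrees of diffraction blur, the spot on the retina is about 1 mm, and only about 0.25% of the ambient intensity reaches the retina. The equal-blur aperture comes out near 75 µm, far smaller than the nautilus pupil, which is consistent with the nautilus trading resolution for light collection.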

Our estimated resolution is consistent with behavioral experiments on living nautiluses, which found their resolution to be between 5.5 and 11.25 degrees. Surprisingly, the nautilus photoreceptor spacing of 0.28 degrees is much finer than the aperture-limited spot size of 6.5 degrees. Some researchers hypothesize that the extra photoreceptor density has no visual benefit. Another possible explanation is that the brain post-processes the image to correct for the geometric blurring of the pinhole. We will revisit this idea in the next section, where we examine another creature with pinhole eyes.

Infrared

When animals first moved onto land about 500 million years ago, they entered a sensory environment unlike that of the oceans. Unlike water, which strongly absorbs infrared light, air transmits infrared radiation, which carries additional information about the temperature of the surroundings. Temperature is particularly relevant to snakes, since they rely on their surroundings to regulate their body temperature, often prey on warm-blooded rodents, and must often avoid warm-blooded predators. When snakes evolved about 50 million years ago, some developed the ability to sense this infrared radiation and thus discern the temperature of their surroundings [Greene 1997].

Pitvipers are one such group of snakes: they evolved a pair of pits that function as pinhole eyes for infrared light. The pits are roughly diameter and depth . Suspended just off the back lining is a 15 µm thick membrane of receptors.

Close-up of a Western Diamondback Rattlesnake. The nostril, pit, and eye are visible. (Source: Bakken 2012)

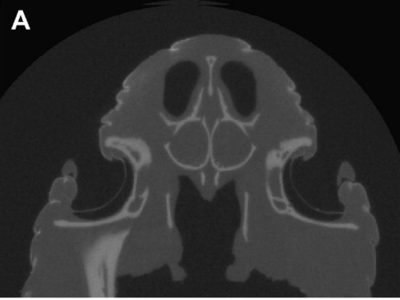

X-ray cross section of a Western Diamondback Rattlesnake. Note the thin membrane suspended near the back of the pit. (Source: Bakken 2012)

Instead of photoreceptors, the membrane contains temperature-sensitive nerves, similar to those that tell humans whether something is too hot or too cold. Incident infrared light is absorbed by water in the pit membrane and heats it. Temperature differences in the scene produce differences in infrared illumination, which, when imaged by the pinhole onto the pit membrane, lead to measurable temperature differences across the membrane. Temperature changes as small as 0.002 °C are detectable.
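To get a feel for the size of the infrared signal, the sketch below applies the Stefan-Boltzmann law to a warm target against a cooler background. The temperatures (a 37 °C rodent against a 22 °C background) are hypothetical illustrative values, and both surfaces are assumed to be blackbodies (emissivity 1); the pit geometry and membrane physics are not modeled.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def exitance(temp_k, emissivity=1.0):
    """Greybody radiated power per unit area, eps * sigma * T^4, in W/m^2."""
    return emissivity * SIGMA * temp_k**4

# Hypothetical scene: a 37 C rodent in front of a 22 C background, both treated as blackbodies
prey_k, background_k = 310.15, 295.15
contrast = exitance(prey_k) - exitance(background_k)

# Linearized sensitivity d(sigma T^4)/dT = 4 sigma T^3 near the background temperature
sensitivity = 4 * SIGMA * background_k**3

print(f"prey/background contrast: {contrast:.1f} W/m^2")
print(f"about {sensitivity:.2f} W/m^2 per kelvin of scene temperature difference")
```

Under these assumptions the emitted-power contrast is about 94 W/m², and near room temperature each kelvin of scene temperature difference changes the emitted power by roughly 5.8 W/m². Only a fraction of this power passes through the small pit opening onto the membrane.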

Based on the geometry of the pit, we find its resolution on the membrane to be roughly 1 mm.

The roughly 7000 sensory cells of the pit form a sensor mosaic with a spacing of about 60 µm, much denser than the 1 mm resolution estimated above. One possible explanation for this oversampling is that the brain uses the additional data to post-process and sharpen the blurred image, as discussed in “The Infrared ‘Vision’ of Snakes” [Hartline 1982]. To understand how post-processing can help, consider the point spread function of a large pinhole. A single bright point source on a dark background uniformly illuminates a disk on the pit membrane. The edges of the disk are blurred by diffraction on the order of $1.22\lambda h/D$, where $\lambda$ is the infrared wavelength, and outside the disk the membrane is in shadow. If the edges of the disk can be located accurately, then the location of the point source can be determined accurately by inferring the disk’s center. This is the role of post-processing in the brain.
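The idea that a sharp edge locates a blurred point far better than the blur size suggests can be sketched numerically. The example below renders a uniformly lit disk onto a grid with the 60 µm receptor spacing from the text, then estimates its center as the gradient-magnitude-weighted mean position, so only pixels near the edges contribute. The grid size, the 500 µm disk radius, and the true center are hypothetical values, and this edge-weighted centroid is a simple stand-in chosen for illustration, not the snake's actual neural computation. The data are noise-free.

```python
import numpy as np

PIXEL_UM = 60.0     # receptor spacing quoted in the text (~60 µm)
N_PIX = 64          # hypothetical 64 x 64 patch of the receptor mosaic
RADIUS_UM = 500.0   # assumed radius of the blur disk (~1 mm diameter spot)

def render_disk(cx_um, cy_um, radius_um=RADIUS_UM, n=N_PIX, pixel_um=PIXEL_UM, ss=8):
    """Fraction of each receptor pixel lit by a uniform disk (ss x ss supersampling)."""
    offsets = (np.arange(ss) + 0.5) / ss                        # sub-pixel sample positions
    coords = (np.arange(n)[:, None] + offsets).ravel() * pixel_um
    ys, xs = np.meshgrid(coords, coords, indexing="ij")
    lit = (xs - cx_um) ** 2 + (ys - cy_um) ** 2 <= radius_um ** 2
    return lit.reshape(n, ss, n, ss).mean(axis=(1, 3))          # average back to pixels

def center_from_edges(img, pixel_um=PIXEL_UM):
    """Estimate the disk center as the gradient-magnitude-weighted mean pixel position."""
    gy, gx = np.gradient(img)                                   # large only near the disk edge
    weight = np.hypot(gx, gy)
    centers = (np.arange(img.shape[0]) + 0.5) * pixel_um        # pixel-center coordinates
    ys, xs = np.meshgrid(centers, centers, indexing="ij")
    return (weight * xs).sum() / weight.sum(), (weight * ys).sum() / weight.sum()

true_center = (1930.0, 1877.0)  # µm, deliberately not on a pixel center
sensory_map = render_disk(*true_center)
est_x, est_y = center_from_edges(sensory_map)
print(f"estimated center: ({est_x:.1f}, {est_y:.1f}) um; true: {true_center}")
```

Even though the illuminated disk is about 1 mm across, the edge-based estimate should land within a fraction of one 60 µm receptor spacing of the true center. With noisy data every pixel picks up some gradient weight, which illustrates why noise in the sensory map matters.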

The brain combines and post-processes the sensory information from the eyes and forms a “visual map” from the raw “sensory map”. Each pixel (neuron) of the visual map combines the outputs of many sensory pixels, which together form the “receptive field” of that pixel. In mammals, the receptive field of a neuron can consist of an excitatory center with an inhibitory surrounding ring [Kuffler 1953]. For pinhole eyes, where the information lies in the edges of the blurred image, each pixel of the visual map is instead formed by ring-shaped excitatory and inhibitory combinations of pixels from the sensory map. It has been shown that if the ring radius matches the pinhole radius, this neural process can recover the scene quite well, provided the sensory data are not too noisy. Noise is particularly important because a single noisy pixel on the sensory map skews many pixels of the visual map [Hemmen 2006].
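A toy version of a ring-shaped receptive field can be written as a zero-sum kernel with an excitatory ring just inside a chosen radius and an inhibitory ring just outside it, cross-correlated with the sensory map. This is a simplified matched filter chosen for illustration, not the model from Hemmen 2006. The 64×64 grid, the 8-pixel disk radius, the ring width, and the disk position are all assumptions. For a single point source (a uniformly lit disk), the response should peak exactly at the disk center when the ring radius matches the disk radius, and it should be weaker when the radii are mismatched.

```python
import numpy as np

def ring_kernel(n, radius_px, width_px=1.5):
    """Zero-sum kernel: excitatory ring just inside radius_px, inhibitory ring just outside."""
    d = np.fft.fftfreq(n) * n                  # signed offsets 0, 1, ..., -2, -1 (wrap-around)
    dy, dx = np.meshgrid(d, d, indexing="ij")
    r = np.hypot(dx, dy)
    excite = (r > radius_px - width_px) & (r <= radius_px)
    inhibit = (r > radius_px) & (r <= radius_px + width_px)
    k = np.zeros((n, n))
    k[excite] = 1.0 / excite.sum()             # excitatory weights sum to +1
    k[inhibit] = -1.0 / inhibit.sum()          # inhibitory weights sum to -1
    return k

def visual_map(sensory, radius_px):
    """Circular cross-correlation: out[p] = sum over offsets o of k[o] * sensory[p + o]."""
    k = ring_kernel(sensory.shape[0], radius_px)
    return np.real(np.fft.ifft2(np.fft.fft2(sensory) * np.conj(np.fft.fft2(k))))

# Sensory map for one point source: a uniformly lit disk of radius 8 pixels (assumed)
n, radius, cy, cx = 64, 8, 23, 40
yy, xx = np.mgrid[0:n, 0:n]
disk = ((yy - cy) ** 2 + (xx - cx) ** 2 <= radius ** 2).astype(float)

matched = visual_map(disk, radius)
peak = tuple(int(i) for i in np.unravel_index(np.argmax(matched), matched.shape))
print("matched ring: peak at", peak, "value", round(float(matched.max()), 3))
print("mismatched ring (r=5): peak value", round(float(visual_map(disk, 5).max()), 3))
```

With the matched radius the strongest response sits on the disk center (row 23, column 40), where the whole excitatory ring is lit and the whole inhibitory ring is dark. With a mismatched radius no position satisfies both conditions, so the peak response is noticeably lower. This mirrors, in a very simplified way, the requirement that the ring radius match the pinhole radius.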

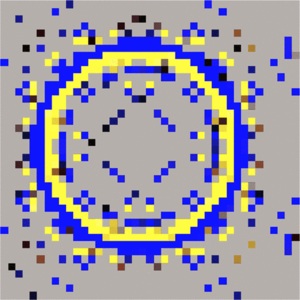

Measured excitatory center and inhibitory surround of the receptive field of a cat neuron. (Source: Kuffler 1953)

Simulated excitatory (yellow) and inhibitory (blue) rings of the receptive field of a pitviper with optimal post-processing. (Source: Hemmen 2006)

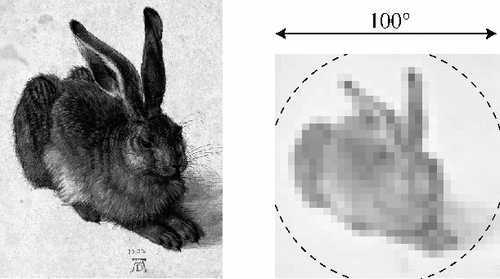

Input image for the pit simulation: a scaled-up rabbit filling the pit's field of view, at 32×32 pixels, comparable to the number of pit sensors. (Source: Hemmen 2006)

Left: Simulated resulting sensory map on the pit membrane. Right: recovered visual map, for various levels of sensory noise. (Source: Hemmen 2006)

There is strong evidence that the snakes can perform this post-processing. The angular spread of strikes by a blinded pitviper at a warm object is less than 5 degrees, far better than the geometry-limited resolution estimated above.

References
