LiuVenkatesanYang

Back to Psych 221 Projects 2013

Background

As digital images have become ubiquitous on the internet, image-based forgeries have increased too. From the ultra-slim model on the cover of a fashion magazine to the manipulated images submitted to the Journal of Cell Biology, image-based forgeries have become very common. The U.S. Office of Research Integrity reported that fewer than 2.5% of fraud accusations involved disputed images in 1990. The percentage rose to 26% in 2001, and by 2006 it had reached 44.1% Reference_1. Image forgeries appear frequently in forensic evidence, tabloid magazines, research journals, media outlets, and hoax images sent in spam emails. They rarely raise doubts in the viewer because they look visually acceptable and show no obvious signs of tampering. This calls for a reliable method of detecting these kinds of forgeries. There are two main interests in digital camera image forensics: source identification and forgery detection. Source identification deals with identifying the camera with which an image was taken, while forgery detection deals with detecting tampering in an image by assessing the underlying statistics of the image.

Below is another example of a retinotopic map in a different subject.

Figure 2

Once you upload the images, they look like this. Note that you can control many features of the images, like whether to show a thumbnail, and the display resolution.

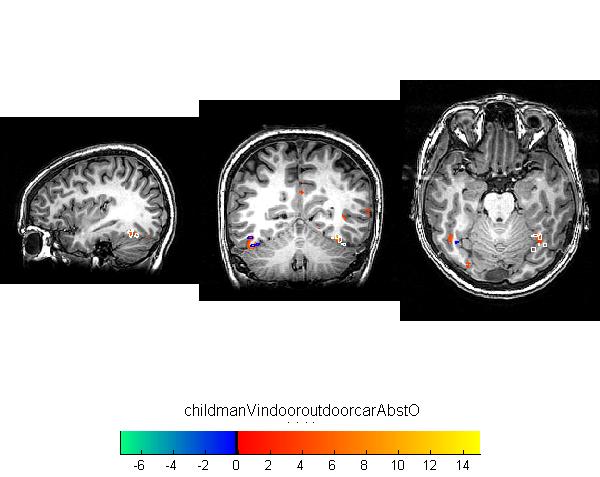

MNI space

MNI is an abbreviation for Montreal Neurological Institute.

Methods

Measuring retinotopic maps

Retinotopic maps were obtained in 5 subjects using population receptive field (pRF) mapping methods (Dumoulin and Wandell, 2008). These data were collected for another research project in the Wandell lab. We re-analyzed the data for this project, as described below.

Subjects

Subjects were 5 healthy volunteers.

MR acquisition

Data were obtained on a GE scanner. Et cetera.

MR Analysis

The MR data were analyzed using mrVista software tools.

Pre-processing

All data were slice-time corrected and motion corrected, and repeated scans were averaged together to create a single average scan for each subject. Et cetera.

PRF model fits

pRF models were fit with a two-Gaussian model.

MNI space

After a pRF model was solved for each subject, the model was transformed into MNI template space. This was done by first aligning the high-resolution T1-weighted anatomical scan from each subject to an MNI template. Since the pRF model was coregistered to the T1 anatomical scan, the same alignment matrix could then be applied to the pRF model.
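Reusing one alignment matrix for both the anatomy and the pRF model can be sketched as follows. This is a minimal illustration, assuming the alignment is a 4x4 affine matrix in homogeneous coordinates. The function name `apply_affine`, the matrix values, and the voxel coordinates are hypothetical and are not taken from mrVista or from the project data.

```python
def apply_affine(matrix, point):
    """Apply a 4x4 homogeneous affine matrix to a 3D point (x, y, z)."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    # Only the first three rows produce output coordinates;
    # the last row of an affine matrix is [0, 0, 0, 1].
    return tuple(
        sum(row[i] * homogeneous[i] for i in range(4))
        for row in matrix[:3]
    )


# Hypothetical alignment: 2 mm isotropic scaling plus a translation.
t1_to_mni = [
    [2.0, 0.0, 0.0, -90.0],
    [0.0, 2.0, 0.0, -126.0],
    [0.0, 0.0, 2.0, -72.0],
    [0.0, 0.0, 0.0, 1.0],
]

# Because the pRF model is coregistered to the T1 scan, a pRF voxel
# coordinate can be mapped with the same matrix as the anatomy.
prf_voxel = (45.0, 63.0, 36.0)
mni_coordinate = apply_affine(t1_to_mni, prf_voxel)
```

In practice the matrix would come from the anatomical alignment step rather than being written by hand.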

Once each pRF model was aligned to MNI space, 4 model parameters (x, y, sigma, and r^2) were averaged across the 5 subjects in each voxel.
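The per-voxel averaging step can be sketched as below. This is only an illustration under stated assumptions: each subject's fit for one MNI voxel is represented as a dictionary, the helper `average_parameters` is hypothetical, and the numbers are made up for three example subjects rather than drawn from the data.

```python
PARAMETERS = ("x", "y", "sigma", "r2")


def average_parameters(subject_fits):
    """Average each pRF parameter across subjects for a single voxel."""
    n_subjects = len(subject_fits)
    return {
        name: sum(fit[name] for fit in subject_fits) / n_subjects
        for name in PARAMETERS
    }


# Hypothetical pRF fits for one MNI voxel, one dictionary per subject.
voxel_fits = [
    {"x": 1.0, "y": -1.0, "sigma": 0.5, "r2": 0.25},
    {"x": 2.0, "y": 0.0, "sigma": 1.0, "r2": 0.50},
    {"x": 3.0, "y": 1.0, "sigma": 1.5, "r2": 0.75},
]

group_average = average_parameters(voxel_fits)
```

The same function would be applied independently to every voxel in the template volume.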

Et cetera.

Results - What you found

Retinotopic models in native space

Some text. Some analysis. Some figures.

Retinotopic models in individual subjects transformed into MNI space

Some text. Some analysis. Some figures.

Retinotopic models in group-averaged data on the MNI template brain

Some text. Some analysis. Some figures. Maybe some equations.

Equations

If you want to use equations, you can use the same formats that are used on Wikipedia.

See the Wikimedia help page on formulas for details.

This example of equation use is copied and pasted from Wikipedia's article on the DFT.

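The sequence of N complex numbers $x_0, \ldots, x_{N-1}$ is transformed into the sequence of N complex numbers $X_0, \ldots, X_{N-1}$ by the DFT according to the formula:

$$X_k = \sum_{n=0}^{N-1} x_n \, e^{-2\pi i k n / N}, \qquad k = 0, \ldots, N-1$$

where $i$ is the imaginary unit and $e^{2\pi i / N}$ is a primitive N'th root of unity. (This expression can also be written in terms of a DFT matrix; when scaled appropriately it becomes a unitary matrix and the $X_k$ can thus be viewed as coefficients of $x$ in an orthonormal basis.)

The transform is sometimes denoted by the symbol $\mathcal{F}$, as in $\mathbf{X} = \mathcal{F}\{\mathbf{x}\}$ or $\mathcal{F}(\mathbf{x})$ or $\mathcal{F}\mathbf{x}$.

The inverse discrete Fourier transform (IDFT) is given by

$$x_n = \frac{1}{N} \sum_{k=0}^{N-1} X_k \, e^{2\pi i k n / N}, \qquad n = 0, \ldots, N-1$$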
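As a numerical check of the DFT and IDFT definitions, a direct implementation can be sketched as follows. It is a minimal O(N^2) illustration using only the Python standard library, not an efficient FFT. The function names `dft` and `idft` and the example signal are chosen here for illustration.

```python
import cmath


def dft(x):
    """Direct DFT: X_k = sum over n of x_n * exp(-2*pi*i*k*n/N)."""
    n_samples = len(x)
    return [
        sum(
            x[n] * cmath.exp(-2j * cmath.pi * k * n / n_samples)
            for n in range(n_samples)
        )
        for k in range(n_samples)
    ]


def idft(X):
    """Direct inverse DFT: x_n = (1/N) * sum over k of X_k * exp(2*pi*i*k*n/N)."""
    n_samples = len(X)
    return [
        sum(
            X[k] * cmath.exp(2j * cmath.pi * k * n / n_samples)
            for k in range(n_samples)
        ) / n_samples
        for n in range(n_samples)
    ]


signal = [1.0, 2.0, 0.0, -1.0]
spectrum = dft(signal)
# Applying the inverse transform should return the original samples
# up to floating-point rounding.
recovered = idft(spectrum)
```

For long sequences a library FFT routine would normally be used instead of this direct sum.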

Retinotopic models in group-averaged data projected back into native space

Some text. Some analysis. Some figures.

Conclusions

Here is where you say what your results mean.

References

Software