Chandra Rajyam

Introduction

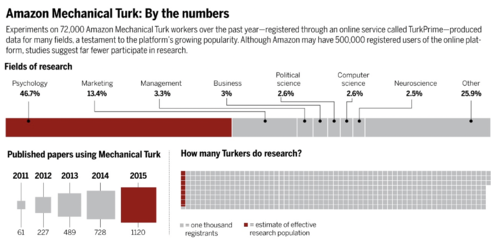

Over the past few years, online vision-based experiments have risen in popularity [1]. By conducting experiments online, researchers can access larger and more diverse participant samples than may be possible in a lab. Large numbers of participants can be recruited quickly and at relatively low cost (<24h, ~$1-2 USD/participant/10min). Several online platforms exist for recruiting participants, the most popular being Amazon Mechanical Turk.

However, a major drawback is the lack of control over the participant’s computer environment. For example, the experimenter cannot easily control for screen resolution. In this project, we set up an experiment on Mechanical Turk that allows viewing conditions to be calibrated. In particular, we focus on accounting for the variability in hardware and software used by participants.

(DATA) Google Scholar/TURKPRIME; (GRAPHIC) A. Cuadra/SCIENCE


Background

The goal is for this experiment to serve as a lodestar for easily calibrating online experiment environments, in the form of a library. The library should be modular, so that calibration tests can be added as needed. Similar libraries are https://www.jspsych.org/ and https://www.psychopy.org/.

Methods

First, screen resolutions vary considerably among participants. In fact, average deviations in participant screen resolutions are 243 and 136 pixels (width and height) [3]. As a workaround, we can ask participants to use a real-world object for measurement. They hold a credit card (which is standardized at 85.6mm in width) up to the screen and adjust an on-screen shape until it matches the card in size [3]. We can then measure the logical pixel density as the ratio of the matched shape's width in pixels to the card's physical width.

The logical pixel density enables us to translate between physical distance and on-screen pixel length.
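The conversion described above can be sketched in a few lines. This is a minimal illustration, not the project's actual code: the function names and the unit (pixels per millimetre) are assumptions, and only the 85.6mm card width comes from the text.

```python
# Standardized credit card width in millimetres, as stated in the text.
CARD_WIDTH_MM = 85.6


def logical_pixel_density(card_width_px: float) -> float:
    """Return logical pixels per millimetre.

    card_width_px is the width (in pixels) of the on-screen shape once the
    participant has matched it to a physical card (hypothetical input name).
    """
    if card_width_px <= 0:
        raise ValueError("card_width_px must be positive")
    return card_width_px / CARD_WIDTH_MM


def px_to_mm(length_px: float, density: float) -> float:
    """Convert an on-screen length in pixels to millimetres."""
    return length_px / density


def mm_to_px(length_mm: float, density: float) -> float:
    """Convert a physical length in millimetres to on-screen pixels."""
    return length_mm * density


if __name__ == "__main__":
    density = logical_pixel_density(428.0)  # participant matched the card at 428 px
    print(f"{density:.2f} px/mm")
    print(f"100 px is about {px_to_mm(100, density):.1f} mm")
```

Keeping the density as a single number makes it easy to store per participant and to reuse in later modules, such as the viewing-distance estimate.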

What participants see on Mechanical Turk. They are able to move the sliding bar to change the size of the on-screen credit card.


Next, viewing distance can vary among participants. The experiment takes advantage of the blind spot in the human eye (where the optic nerve enters the retina). The blind spot is located at approximately 13.5° horizontally from the point of fixation. Using this information, combined with the logical pixel density, we can calculate the distance between the participant and their screen.

The trigonometry behind distance estimation (taken from [3]).


To locate the blind spot, we use a sweeping red dot that moves away from the point of eye fixation until the viewer can just no longer see it peripherally. We modified the method suggested in [3] so that it no longer relies on the participant's reaction time. Instead, the participant uses a sliding bar to adjust the position of the red dot.

$$\text{Viewing Distance}(d) = \frac{\text{Physical Distance}(s)}{\tan(\alpha)}$$
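As a rough sketch of how the distance estimate could be computed: the physical distance s is the on-screen separation between the fixation point and the red dot at the moment it disappears, converted to millimetres with the logical pixel density, and α is taken as 13.5°. The function and parameter names are hypothetical and not taken from the project's code.

```python
import math

# Approximate horizontal angle of the blind spot, as stated in the text.
BLIND_SPOT_ANGLE_DEG = 13.5


def viewing_distance_mm(dot_offset_px: float,
                        px_per_mm: float,
                        angle_deg: float = BLIND_SPOT_ANGLE_DEG) -> float:
    """Estimate viewing distance d = s / tan(alpha).

    dot_offset_px: pixels between the fixation point and the red dot when
                   the participant reports it has disappeared (assumed input).
    px_per_mm:     logical pixel density from the credit card calibration.
    """
    if dot_offset_px <= 0 or px_per_mm <= 0:
        raise ValueError("inputs must be positive")
    s_mm = dot_offset_px / px_per_mm  # physical distance s on the screen
    return s_mm / math.tan(math.radians(angle_deg))


if __name__ == "__main__":
    # Example: 600 px offset on a display measured at 5 px/mm (s = 120 mm).
    d = viewing_distance_mm(600, 5.0)
    print(f"estimated viewing distance: {d / 10:.1f} cm")
```

Because tan(13.5°) is small, errors in s are amplified roughly fourfold in d, which is one reason small slider misadjustments can noticeably change the estimate.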

To ensure consistency in brightness and screen contrast, we present a band of gray bars. Participants are asked to adjust their computer brightness and contrast settings until they can distinguish all 20 bars [4]. We can detect the user’s operating system and provide instructions on how to modify their contrast and brightness settings. The grayscale images cover a brightness range of 5-255, defined in RGB values, with a step of 5 RGB levels per patch. Again, we can record this information to a database.

Brightness and contrast test - Gray patches to determine the perceived white and black point.


A user prompt for modifying contrast on macOS.


Next, we can also check the display gamma. The industry has converged on a standard display gamma of 2.2, but participants' computers can still vary. We rely on the fact that gamma correction is defined by a power-law expression. We ask users to perceptually compare a solid gray (col3) inner box to an outer box of alternating black/white lines (col1/col2) [2].

Based on bel.fi/alankila/lcd/gamma.html
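One way to turn the participant's match into a gamma estimate is sketched below. It assumes that the display follows a pure power law and that the alternating black/white lines emit, on average, half the luminance of full white; under those assumptions the matched gray level v satisfies (v/255)^γ = 0.5. The function name is hypothetical.

```python
import math


def estimate_gamma(matched_gray: int, max_level: int = 255) -> float:
    """Estimate display gamma from the gray level matched to the line pattern.

    Assumes luminance = (level / max_level) ** gamma and that the
    black/white line pattern averages to 50% of full-white luminance.
    """
    if not 0 < matched_gray < max_level:
        raise ValueError("matched_gray must be strictly between 0 and max_level")
    # Solve (v / max) ** gamma = 0.5 for gamma.
    return math.log(0.5) / math.log(matched_gray / max_level)


if __name__ == "__main__":
    # A match near gray level 186 corresponds to a gamma close to 2.2.
    print(f"estimated gamma: {estimate_gamma(186):.2f}")
```

The estimate is sensitive near the extremes of the gray range, so a practical module would probably restrict the slider to mid-range values.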


A simple survey was also added to gather information. Taking cues from [4], we can ask questions about possible color deficiencies in the user. We can also gather more information about their ambient surroundings and computer hardware.

Other modules are currently in development, allowing experiments to detect hue shift [4], saturating non-linearity [2] and color (“Coca-Cola solution” - C Bechlivanidis & DA Lagnado, pers.comm., 2015). It is important that modules are simple: observer stress should be kept low to avoid early dropout of the subject. Collecting more data is a healthy way of dealing with software/hardware diversity. Rigorously calibrating the user environment is difficult (e.g. people fidget after the distance test). It would be valuable to test these modules and collect data on their error (preliminary tests on distance measurements are included below). Overall, there is potential for this project to grow into an open-source library.

Results

We ran an experiment similar to the one in [3] to assess the accuracy of the blind-spot distance detection. We asked 10 participants to run the program on a 13” laptop (2019 MacBook) and a 24” monitor (Dell P2419H). We measured seating distance, defined as “the distance between the center of the chair and the center of the display”, and asked participants to use the computer as they usually would. We found that this method is suitable for rough estimates of distance, but an alternative test should be explored for greater accuracy. The results are recorded in the table here:

Conclusion

The modules described here have varying degrees of success. For example, the blind-spot distance method is rather inaccurate, whereas the logical pixel density module fares better. The choice of modules will ultimately depend on the circumstances of the experiment being conducted. A useful next step would be to survey researchers who use Mechanical Turk to better understand which modules they would want (adding more modules is important). This would help clarify the tolerances and features that need to be developed, in turn encouraging adoption of the library.

References

[1] A. T. Woods, C. Velasco, C. A. Levitan, X. Wan, and C. Spence, “Conducting perception research over the internet: a tutorial review,” PeerJ, vol. 3, 2015.

[2] L. To, R. L. Woods, R. B. Goldstein, and E. Peli, “Psychophysical contrast calibration,” Vision Research, vol. 90, pp. 15–24, 2013.

[3] Q. Li, S. J. Joo, J. D. Yeatman, and K. Reinecke, “Controlling for Participants’ Viewing Distance in Large-Scale, Psychophysical Online Experiments Using a Virtual Chinrest,” Scientific Reports, vol. 10, no. 1, 2020.

[4] I. Sprow, Z. Baranczuk, T. Stamm, and P. Zolliker, “Web-based psychometric evaluation of image quality,” Image Quality and System Performance VI, 2009.

Appendix I

Code from this project is available here: Google Drive. A Stanford email is needed to access it. Modules will be moved to GitHub later.