Cohen

Back to Psych221-Projects-2012

Background

Motivation

Hyperspectral imaging refers to collecting and processing two-dimensional representations of a scene over a wide range of the electromagnetic spectrum. Hyperspectral images differ substantially from standard RGB images. Rather than capturing data only in the range of visible light (roughly 400-700 nm), hyperspectral images often extend this range into the near-infrared (NIR, 750 nm-1.4 µm), the short-wavelength infrared (SWIR, 1.4-3 µm), and beyond. In addition, standard images are composed of three color bands (or channels), each an integration over a wide range of wavelengths. Hyperspectral images, by contrast, usually provide high spectral resolution: each channel represents only a narrow spectral band. The extended wavelength range and the higher spectral resolution result in large image cubes containing hundreds of bands.

Hyperspectral imaging has become

Displaying and interacting visually with hyperspectral images poses a significant challenge: most available display technologies can present only three independent color bands (the R, G, and B channels) for each spatial position (or pixel) of the image. Presenting a hyperspectral image therefore involves dimensionality reduction, that is, compressing the wide, finely spaced range of wavelengths into the three available bands. Every dimensionality-reduction method inevitably loses information compared with the complete hyperspectral representation. The only exceptions are degenerate cases, such as a scene whose pixel spectra all lie in a subspace of three or fewer dimensions. This project focuses on how to minimize that loss of information.

I chose to pose the problem of displaying hyperspectral images as the following optimization problem: find three weighting functions, each defined over the entire available wavelength range, that map the image bands to the three display channels such that most of the information in the scene is preserved. The weighting functions are chosen by maximizing an objective function. Other approaches
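The mapping step of this formulation can be sketched as follows. This is a minimal illustration, not the project's implementation. It assumes the following:

- The cube is a NumPy array of shape (H, W, N).
- The three weighting functions are sampled at the N band centres as a (3, N) array.
- Each display channel is the weighted sum over bands, followed by a per-channel stretch to [0, 1].

The objective function used to choose the weights is not specified here, so the example uses hypothetical Gaussian weights purely for illustration. The function name `apply_weighting_functions` is likewise hypothetical.

```python
import numpy as np


def apply_weighting_functions(cube: np.ndarray, weights: np.ndarray) -> np.ndarray:
    """Map an (H, W, N) hyperspectral cube to an (H, W, 3) display image.

    `weights` is a (3, N) array: row c is the weighting function of display
    channel c, sampled at the N band centres of the cube (an assumption).
    """
    if cube.shape[-1] != weights.shape[1]:
        raise ValueError("cube and weights must have the same number of bands")
    # Each display channel is a weighted sum over all N bands.
    rgb = np.tensordot(cube, weights, axes=([2], [1]))  # shape (H, W, 3)
    # Stretch each channel independently to [0, 1] for display.
    lo = rgb.min(axis=(0, 1), keepdims=True)
    hi = rgb.max(axis=(0, 1), keepdims=True)
    return (rgb - lo) / np.where(hi > lo, hi - lo, 1.0)


# Hypothetical example: 200 bands spanning 400-2500 nm and Gaussian weighting
# functions centred at 650, 550 and 450 nm for the R, G and B channels.
wavelengths = np.linspace(400.0, 2500.0, 200)
centres = np.array([650.0, 550.0, 450.0])
weights = np.exp(-0.5 * ((wavelengths[None, :] - centres[:, None]) / 40.0) ** 2)
cube = np.random.default_rng(0).random((4, 5, 200))
rgb = apply_weighting_functions(cube, weights)
print(rgb.shape)  # (4, 5, 3)
```

Replacing the Gaussians with weights obtained by optimizing an objective over the full wavelength range changes only the `weights` array. The projection step stays the same.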

The usefulness of

requires

An interesting question that

As a consequence,

I’m interested in the question of how to present hyperspectral images to the viewer. Compressing the hyperspectral data to the three RGB values of the display is a significant dimensionality reduction and results in a loss of information. If we choose to display only the bands of the hyperspectral stack that correspond to visible light, the viewer may miss important details about the scene that are hidden in other spectral bands. I propose to develop an adaptive algorithm that automatically chooses which spectral bands of the input to use in constructing the final RGB image. The purpose of the algorithm is to adaptively choose the bands that hold the most information about the scene and combine them into a plausible output image. I will develop and test such an algorithm using the available database of spectral images.
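One possible shape for such an adaptive band selector is sketched below. The text does not say how the "amount of information" in a band is measured, so this sketch makes two assumptions:

- The histogram entropy of each band serves as a per-band information proxy.
- A correlation threshold stops two near-duplicate neighbouring bands from being picked together.

The function names, the 64-bin histogram and the 0.95 threshold are all hypothetical choices, not the project's algorithm.

```python
import numpy as np


def band_entropy(band: np.ndarray, bins: int = 64) -> float:
    """Shannon entropy (in bits) of one band's intensity histogram."""
    hist, _ = np.histogram(band, bins=bins)
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())


def select_bands(cube: np.ndarray, k: int = 3, max_corr: float = 0.95) -> list:
    """Greedily choose up to k high-entropy bands of an (H, W, N) cube.

    A band is skipped if its absolute correlation with an already chosen
    band is max_corr or more, so near-duplicate bands are not picked together.
    """
    n_bands = cube.shape[-1]
    pixels = cube.reshape(-1, n_bands).astype(float)
    scores = np.array([band_entropy(pixels[:, b]) for b in range(n_bands)])
    corr = np.corrcoef(pixels, rowvar=False)  # (N, N) band-to-band correlation
    chosen = []
    for b in np.argsort(scores)[::-1]:  # highest entropy first
        if all(abs(corr[b, c]) < max_corr for c in chosen):
            chosen.append(int(b))
        if len(chosen) == k:
            break
    return chosen


def bands_to_rgb(cube: np.ndarray, bands: list) -> np.ndarray:
    """Stack the chosen bands as R, G, B and stretch each to [0, 1]."""
    rgb = cube[..., bands].astype(float)
    lo = rgb.min(axis=(0, 1), keepdims=True)
    hi = rgb.max(axis=(0, 1), keepdims=True)
    return (rgb - lo) / np.where(hi > lo, hi - lo, 1.0)


rng = np.random.default_rng(1)
cube = rng.random((20, 20, 4))
cube[..., 1] = cube[..., 0]  # band 1 duplicates band 0
bands = select_bands(cube)
rgb = bands_to_rgb(cube, bands)
```

Because the chosen band indices depend on the image content, the same display color can correspond to different wavelengths in different images.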

The resulting hyperspectral imagery has very high spectral resolution, providing better diagnostic capability for object detection, classification, and discrimination than multispectral imagery. However, it is challenging to display all the useful information contained in such a huge 3-D image cube. A common practice is to use a red-green-blue (RGB) color representation to provide a quick overview of a scene for decision-making support. Such a three-channel display inevitably results in a significant loss of information. Our objective is to display classes distinctively to maximize the class separability.

Hyperspectral and multispectral images may contain hundreds of image bands. Their dimensionality must be reduced to three bands (or fewer) to display them on a standard tristimulus display. Much analysis of hyperspectral imagery is performed by software packages, such as RSI’s ENVI. However, maximizing human users' comprehension of the data and analysis is vital for effective and responsible use: after analysis, the user needs to understand the results in order to make decisions. We propose some task-independent design goals for the display of hyperspectral images. We present solutions that satisfy these goals using fixed, spectrally weighted linear integration of the N bands to create an RGB image.

The most common way to display a hyperspectral image is to select three of the original N bands and map them to RGB, as done in the image browser for AVIRIS (aviris.jpl.nasa.gov/html/aviris.quicklooks.html). The three bands can be picked by an adaptive method based on the image content [1], to highlight expected features, or to approximate a conventional color image. If the band selection is image adaptive, then the meaning of display colors can be drastically different for different images.

A standard method for reducing the dimensionality of a data set is Principal Components Analysis (PCA). For an N-band hyperspectral image, the first three principal components are the three N-dimensional, orthonormal basis vectors that capture the most data variance; spatial information is not used. The N-band image pixels are linearly projected onto these three N-dimensional basis vectors to create three image bands. The three bands can then be mapped to RGB, HSV [2], etc. for display.
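The PCA display approach described in this paragraph can be sketched in a few lines of NumPy. This is a minimal illustration under three assumptions:

- The principal components are computed via an SVD of the mean-centred pixel matrix.
- Each component is min-max stretched to [0, 1] before being mapped to R, G and B.
- `pca_rgb` is a hypothetical name.

It is not the specific method of [1] or [2].

```python
import numpy as np


def pca_rgb(cube: np.ndarray):
    """Project an (H, W, N) cube onto its first three principal components.

    Returns the (H, W, 3) display image and the (3, N) orthonormal basis.
    Pixels are treated as independent N-dimensional samples, so spatial
    information is not used.
    """
    h, w, n = cube.shape
    pixels = cube.reshape(-1, n).astype(float)
    centred = pixels - pixels.mean(axis=0)
    # Rows of vt are orthonormal directions in band space, ordered by
    # decreasing singular value, i.e. by the variance they capture.
    _, _, vt = np.linalg.svd(centred, full_matrices=False)
    basis = vt[:3]
    components = centred @ basis.T  # (H*W, 3)
    # Stretch each component to [0, 1] so it can be mapped to R, G, B.
    lo, hi = components.min(axis=0), components.max(axis=0)
    components = (components - lo) / np.where(hi > lo, hi - lo, 1.0)
    return components.reshape(h, w, 3), basis


cube = np.random.default_rng(2).random((8, 8, 10))
rgb, basis = pca_rgb(cube)
```

The sign of each principal component is arbitrary, since flipping a basis vector is an equally valid SVD. The same scene can therefore come out with an inverted channel. Like adaptive band selection, the basis is image dependent.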

Related Work

In this project, we ...

Rather than focusing on the , hyperspectral images contain information

The problem of

One is responsible for the visible and near-infrared (VNIR) portion of the spectrum and operates in the range of 400 to 1000 nm.


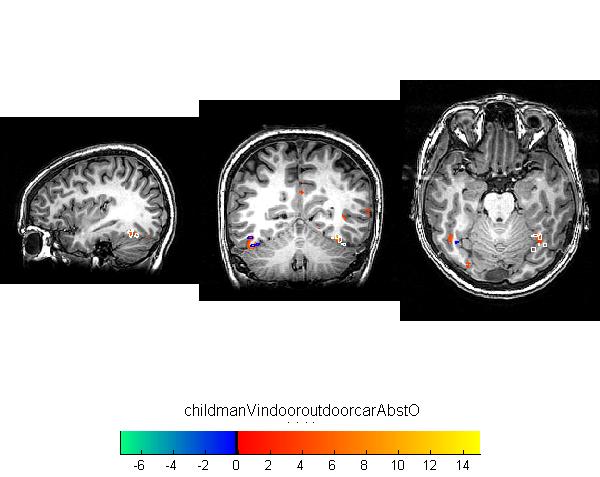

Below is an example of a retinotopic map.

Below is another example of a retinotopic map in a different subject.

Figure 2


MNI space

MNI is an abbreviation for Montreal Neurological Institute.

Methods

Measuring retinotopic maps

Retinotopic maps were obtained in 5 subjects using population receptive field (pRF) mapping methods (Dumoulin and Wandell, 2008). These data were collected for another research project in the Wandell lab. We re-analyzed the data for this project, as described below.

Subjects

Subjects were 5 healthy volunteers.

MR acquisition

Data were obtained on a GE scanner. Et cetera.

MR Analysis

The MR data were analyzed using mrVista software tools.

Pre-processing

All data were slice-time corrected, motion corrected, and repeated scans were averaged together to create a single average scan for each subject. Et cetera.

pRF model fits

pRF models were fit with a two-Gaussian model.

MNI space

After a pRF model was solved for each subject, the model was transformed into MNI template space. This was done by first aligning the high-resolution T1-weighted anatomical scan from each subject to an MNI template. Because the pRF model was coregistered to the T1 anatomical scan, the same alignment matrix could then be applied to the pRF model.
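The reuse of one alignment matrix can be illustrated with a small sketch. It makes two assumptions:

- The T1-to-MNI alignment is a 4x4 affine acting on 3-D coordinates in homogeneous form.
- The pRF coordinates are already expressed in the T1 scan's space, because of the coregistration.

The matrix values, coordinates and the `apply_affine` helper are hypothetical. None of this is mrVista's API.

```python
import numpy as np


def apply_affine(affine: np.ndarray, coords: np.ndarray) -> np.ndarray:
    """Map (K, 3) coordinates through a 4x4 affine matrix."""
    homogeneous = np.hstack([coords, np.ones((coords.shape[0], 1))])  # (K, 4)
    return (homogeneous @ affine.T)[:, :3]


# Hypothetical T1-to-MNI alignment: a pure translation of (1, 2, 3) mm.
t1_to_mni = np.eye(4)
t1_to_mni[:3, 3] = [1.0, 2.0, 3.0]

# The pRF model is coregistered to the T1 scan, so its coordinates live in
# the same space and the same matrix maps them into MNI space.
prf_coords_t1 = np.array([[0.0, 0.0, 0.0], [10.0, -5.0, 2.0]])
prf_coords_mni = apply_affine(t1_to_mni, prf_coords_t1)
print(prf_coords_mni)
```

If the pRF coordinates were instead in functional-scan space, the functional-to-T1 matrix would be composed first, for example `t1_to_mni @ func_to_t1`, where `func_to_t1` is another hypothetical 4x4 affine.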

Once each pRF model was aligned to MNI space, four model parameters (x, y, sigma, and r^2) were averaged across the 5 subjects in each voxel.

Et cetera.

Results

Retinotopic models in native space

Some text. Some analysis. Some figures.

Retinotopic models in individual subjects transformed into MNI space

Some text. Some analysis. Some figures.

Retinotopic models in group-averaged data on the MNI template brain

Some text. Some analysis. Some figures. Maybe some equations.

Equations

If you want to use equations, you can use the same formats that are used on Wikipedia.

See the Wikimedia help on formulas for help.

This example of equation use is copied and pasted from Wikipedia's article on the DFT.

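The sequence of N complex numbers $x_0, \dots, x_{N-1}$ is transformed into the sequence of N complex numbers $X_0, \dots, X_{N-1}$ by the DFT according to the formula:

$$X_k = \sum_{n=0}^{N-1} x_n \, e^{-2\pi i k n / N}, \qquad k = 0, \dots, N-1,$$

where $i$ is the imaginary unit and $e^{2\pi i / N}$ is a primitive $N$-th root of unity. (This expression can also be written in terms of a DFT matrix; when scaled appropriately it becomes a unitary matrix and the $X_k$ can thus be viewed as coefficients of $x$ in an orthonormal basis.)

The transform is sometimes denoted by the symbol $\mathcal{F}$, as in $\mathbf{X} = \mathcal{F}\{\mathbf{x}\}$ or $\mathcal{F}(\mathbf{x})$ or $\mathcal{F}\mathbf{x}$.

The inverse discrete Fourier transform (IDFT) is given by

$$x_n = \frac{1}{N} \sum_{k=0}^{N-1} X_k \, e^{2\pi i k n / N}, \qquad n = 0, \dots, N-1.$$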
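The forward DFT (negative exponent, no scaling) and its inverse (positive exponent, 1/N factor) can be checked numerically with a direct matrix implementation. This is a minimal O(N^2) sketch for illustration only. The function names `dft` and `idft` are hypothetical, and a real application would call `np.fft.fft`, which uses the same sign and scaling convention.

```python
import numpy as np


def dft(x) -> np.ndarray:
    """Direct O(N^2) DFT: X_k = sum_n x_n exp(-2*pi*i*k*n/N)."""
    x = np.asarray(x, dtype=complex)
    n = np.arange(x.shape[0])
    k = n.reshape(-1, 1)  # rows index k, columns index n
    return np.exp(-2j * np.pi * k * n / x.shape[0]) @ x


def idft(X) -> np.ndarray:
    """Inverse DFT: x_n = (1/N) sum_k X_k exp(+2*pi*i*k*n/N)."""
    X = np.asarray(X, dtype=complex)
    k = np.arange(X.shape[0])
    n = k.reshape(-1, 1)  # rows index n, columns index k
    return np.exp(2j * np.pi * n * k / X.shape[0]) @ X / X.shape[0]


x = np.random.default_rng(3).random(8)
X = dft(x)
```

Comparing `X` with `np.fft.fft(x)` and `idft(X)` with `x` confirms that the two definitions are consistent and mutually inverse.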

Retinotopic models in group-averaged data projected back into native space

Some text. Some analysis. Some figures.

Conclusions

Here is where you say what your results mean.

References

Software

Appendix I - Code and Data

Code

Data

Appendix II - Work partition (if a group project)

Brian and Bob gave the lectures. Jon mucked around on the wiki.