HouSetra

Introduction

Several popular tools already offer image filters. One is Instagram, released in 2010 and acquired by Facebook in 2012. Most of its filters modify an image's colors based on its existing RGB values, for example by converting an RGB image to greyscale or boosting the red channel.

Another is Prisma, which uses convolutional neural networks to transfer the artistic qualities of one image onto another [1]. This allows one to transform a sample image so that it takes on the style of a famous artist.

Our goal is to create filters as well, but with a focus on using the depth of an image to control the filter properties.

Background

The idea of photographic filters has been around nearly since the inception of photography. We can filter photos for various reasons - to correct imperfections, to aid in visualization, to enhance colors, or many others. With the rise of digital and mobile photography, it has become easier and easier to apply filters for visual effect. A quick search will show that the number of apps on the market that offer a suite of filters is very large.

Most cameras produce only image data, and this is what is used when filtering. However, some modern cameras, such as the Intel RealSense cameras, also capture depth data, allowing detailed environmental data to accompany their images.

We aim to explore the use of depth data in image processing. With accurate depth data, it is possible to emulate in post-processing a number of in-camera effects, such as depth of field, that were previously impossible to reproduce, as well as to create visualizations that convey more about a scene than the image data alone. In addition, depth data can provide an interesting source of modulation for various processing algorithms that can be applied to images.

In this project, we explore these techniques. We take depth data and use it to drive three filters that can be applied to images with depth maps.

Methods

Data Capture

We captured RGB and depth images with two tools: the Intel RealSense R200 world-facing camera and the Google Camera app.

The Intel camera (shown above) captures RGB images at up to 1920x1080 resolution and depth images at up to 480x360 resolution. To keep the sizes consistent, we captured both at 480x360. The depth maps are created on board the camera by first texturing the scene with an infrared projector (Fig. 2). The two infrared cameras then capture the textured scene, and a depth map is computed from the disparity between the two images. The depth map is rated for 0.5 m to 4 m indoors and up to 10 m outdoors, although specular objects produce inaccurate depth values. Examples of captured images are below. We used Visual Studio to program the camera.
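The disparity-to-depth step happens on board the camera, so the report does not describe it further. As a rough illustration only, the standard relation for a rectified stereo pair is Z = f * B / d, where d is the disparity in pixels, f the focal length in pixels, and B the baseline between the two cameras. The Python sketch below applies it; the focal length and baseline are hypothetical placeholders, not R200 specifications.

```python
def disparity_to_depth(disparity_px, focal_px, baseline_m):
    """Depth in metres for a rectified stereo pair: Z = f * B / d.

    A disparity of 0 or less means no match was found, so 0.0 is returned,
    mirroring the '0 means missing' convention of the depth maps here.
    """
    if disparity_px <= 0:
        return 0.0
    return focal_px * baseline_m / disparity_px


# Hypothetical numbers chosen only for illustration.
FOCAL_PX = 600.0    # focal length in pixels (assumed)
BASELINE_M = 0.07   # spacing of the two IR cameras in metres (assumed)

# Larger disparities correspond to closer objects.
depth_row = [disparity_to_depth(d, FOCAL_PX, BASELINE_M) for d in (0, 10.5, 21, 84)]
```

Because depth is inversely proportional to disparity, a fixed disparity error costs more accuracy at long range, which is consistent with the limited rated range quoted above.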

As can be seen from Fig. 4, there are many black areas. These are referred to as holes, and are areas where the camera could not accurately detect a depth value. We need to fill these in before using the depth maps.

In order to fully test our filters, we also captured images using the Google Camera application. This application captures several images of a scene while the camera is moved slowly. It then estimates depth values from the disparity between these images and outputs a much denser depth map than the Intel camera. The resulting RGB and depth images both have a resolution of 1440x1080. Examples are below.

Hole Filling

As mentioned before, we need a hole filling algorithm to process the Intel camera's depth images in order to use them more effectively.

We wanted to implement a content-aware bilateral filter. Using the depth map alone, we can make some guesses for missing pixels, but this becomes more difficult when large patches are missing. We therefore assume that, within a certain neighborhood of a point, pixels with similar RGB color values will have similar depth.

The hole filling algorithm proceeds as follows:

-We iterate through the pixels in the image and flag any pixel that has missing depth information, which is represented by a '0' value in the depth map.

-Then, for each flagged pixel with depth value $d_0$ and RGB values $c_0$, we create a neighborhood of non-flagged pixels around it, with depth and color values $d_i$ and $c_i$ respectively. In practice we created a window centered on the flagged pixel (see Fig. 7). If there are no non-flagged pixels in the chosen neighborhood, the pixel is skipped.

-Now, for each pixel in the neighborhood, we calculate the RGB color distance $\lVert c_i - c_0 \rVert$ between itself and the central pixel.

-We then calculate an exponential weight $w_i$ for each neighbor, with the norm in the exponent, so that the weight decays as the color difference grows. For our images we used a value of . The idea here is that nearby pixels with similar colors should have similar depths, and so those should have a higher weight.

-Finally, we produce an estimate of the flagged pixel's depth value as the normalized weighted average of its neighbors' depths, by setting $d_0 = \sum_i w_i d_i / \sum_i w_i$.

This process is repeated for four iterations, because at each iteration only flagged pixels that neighbor non-flagged pixels are updated, so larger holes fill in gradually from their edges. The before and after images can be seen below.
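The steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Matlab code used for the report: the window radius, the value of `sigma`, and the Gaussian shape of the weight are all assumed here, since the text only specifies an exponential weight with the color norm in the exponent. Images are plain nested lists so the sketch needs no external packages.

```python
import math


def fill_holes(depth, rgb, radius=2, sigma=30.0, iterations=4):
    """Estimate zero-valued depth pixels from colour-similar valid neighbours.

    depth: list of rows of numbers, where 0 marks a hole.
    rgb:   list of rows of (r, g, b) tuples, same shape as depth.
    radius, sigma and the Gaussian kernel are assumptions of this sketch.
    """
    height, width = len(depth), len(depth[0])
    for _ in range(iterations):
        result = [row[:] for row in depth]  # read old values, write new ones
        for y in range(height):
            for x in range(width):
                if depth[y][x] != 0:
                    continue  # only flagged (missing) pixels are estimated
                weight_sum = 0.0
                value_sum = 0.0
                for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                    for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                        if depth[ny][nx] == 0:
                            continue  # neighbour is itself a hole
                        colour_dist = math.dist(rgb[ny][nx], rgb[y][x])
                        w = math.exp(-(colour_dist ** 2) / (2.0 * sigma ** 2))
                        weight_sum += w
                        value_sum += w * depth[ny][nx]
                if weight_sum > 0.0:
                    result[y][x] = value_sum / weight_sum
                # otherwise the pixel is skipped until a later iteration
        depth = result
    return depth


# A hole between a red surface at depth 1 and a blue surface at depth 5.
red, blue = (200, 0, 0), (0, 0, 200)
filled = fill_holes([[1.0, 1.0, 0, 5.0, 5.0]], [[red, red, red, blue, blue]], radius=1)
```

In the example the hole is red, so it takes its depth almost entirely from the red neighbor rather than averaging across the color edge. Each iteration writes into a copy, so a pixel filled in one pass only influences its neighbors in the next pass.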

However, some speckles (small dark dots) remain. These are not black (missing) values but inaccuracies in the original depth image that were not flagged and therefore not fixed by the hole filling. To remedy this, we applied one more pass of the bilateral filter, this time using all of the pixels rather than skipping flagged ones. This is the more typical form of the bilateral filter, a common edge-preserving filter [2].

The final pass did smooth the depth map somewhat, but it removed almost all of the speckling.

Filter 1: Depth of Field

The first filter we created simulates the effect of depth of field. Essentially, we would like to keep certain parts of the image in focus and leave other parts out of focus, or blurry. As input we had an RGB image $I$ with a corresponding depth map $D$. We first created a new image $I_f$ which is a copy of $I$, but only at pixels whose depth falls within a user-specified range that we would like to keep in focus; all other pixels are left blank.

Then we created new images $I_1, \dots, I_{10}$ (of the same size as $I$) that copy over the values of $I$ only at pixels meant to be out of focus, and leave pixels blank otherwise. Specifically, we divided the out-of-focus depth values linearly into 10 pieces, with each of these images covering one piece. To each of these images we applied a Gaussian filter whose blur rate increases linearly with distance. Finally, we created the filtered image by replacing the empty pixel values in $I_f$ with those of the blurred $I_k$ that has a non-empty matching pixel.
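The layered approach can be sketched in Python as follows. This is an illustration under several assumptions rather than the report's Matlab code: the image is grayscale, "distance" is taken to mean depth distance from the focus depth, `max_sigma` is a made-up parameter, and each blurred layer is divided by a blurred copy of its mask (a normalization step added here so that blank pixels do not darken layer edges).

```python
import math


def gaussian_kernel(sigma):
    """1-D Gaussian taps, truncated at 3 sigma and normalised to sum to 1."""
    radius = max(1, math.ceil(3 * sigma))
    taps = [math.exp(-(i * i) / (2.0 * sigma * sigma)) for i in range(-radius, radius + 1)]
    total = sum(taps)
    return [t / total for t in taps]


def blur_rows(image, kernel):
    """Convolve every row with the kernel; samples outside the image count as 0."""
    radius = len(kernel) // 2
    width = len(image[0])
    return [[sum(w * row[x + k - radius]
                 for k, w in enumerate(kernel)
                 if 0 <= x + k - radius < width)
             for x in range(width)]
            for row in image]


def blur(image, sigma):
    """Separable Gaussian blur: rows first, then columns via a transpose."""
    kernel = gaussian_kernel(sigma)
    rows_done = blur_rows(image, kernel)
    cols_done = blur_rows([list(col) for col in zip(*rows_done)], kernel)
    return [list(col) for col in zip(*cols_done)]


def depth_of_field(image, depth, focus, tolerance, n_layers=10, max_sigma=3.0):
    """Keep depths within `tolerance` of `focus` sharp and blur the rest in layers."""
    height, width = len(image), len(image[0])
    far = max(abs(d - focus) for row in depth for d in row)
    span = max(far - tolerance, 1e-9)

    def layer_of(d):
        distance = abs(d - focus)
        if distance <= tolerance:
            return -1  # in focus
        return min(n_layers - 1, int((distance - tolerance) / span * n_layers))

    layers = [[layer_of(d) for d in row] for row in depth]
    output = [row[:] for row in image]  # in-focus pixels are copied unchanged
    for layer in range(n_layers):
        mask = [[1.0 if l == layer else 0.0 for l in row] for row in layers]
        if not any(any(row) for row in mask):
            continue  # no pixels fall in this depth slice
        sigma = max_sigma * (layer + 1) / n_layers  # blur grows linearly
        masked = [[p * m for p, m in zip(prow, mrow)] for prow, mrow in zip(image, mask)]
        blurred = blur(masked, sigma)
        coverage = blur(mask, sigma)
        for y in range(height):
            for x in range(width):
                if mask[y][x]:
                    output[y][x] = blurred[y][x] / coverage[y][x]
    return output
```

Because each layer is blurred separately, color from a sharp foreground object does not bleed into the blurred background, which is the main reason for splitting the image by depth before filtering.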

Filter 2: Color Mapping

We wanted a way to visualize both image data and depth data at the same time. To do this, we created a linear map from depth to hue and overlaid it on the image at variable transparency. For easier visualization of some scenes, we also created a quantized version in which the depth data is rounded into 7 levels (one for each of the seven colors of the rainbow), which provides sharper contrast. The strength of the overlay is controlled by an input alpha factor; the images displayed in the results use an alpha of 0.3.
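A per-pixel version of this mapping might look like the Python sketch below, which uses the standard-library `colorsys` module. The function name, the depth range arguments, and the choice to stop the hue sweep at 5/6 (so the far end does not wrap back to red) are assumptions made for illustration; the report does not state the exact hue range used.

```python
import colorsys


def depth_tint(rgb, depth, d_min, d_max, alpha=0.3, levels=None):
    """Blend one RGB pixel (0-255 values) with a hue chosen from its depth.

    levels=None gives the continuous map; levels=7 gives the quantized one.
    """
    t = (depth - d_min) / (d_max - d_min)
    t = min(1.0, max(0.0, t))                 # clamp to the valid depth range
    if levels and levels > 1:
        t = min(levels - 1, int(t * levels)) / (levels - 1)
    hue = t * (5.0 / 6.0)                     # assumed sweep: red through magenta
    tint = colorsys.hsv_to_rgb(hue, 1.0, 1.0)
    return tuple(round((1 - alpha) * c + alpha * 255 * h) for c, h in zip(rgb, tint))


# Continuous and quantized overlays for the same grey pixel at mid depth.
smooth = depth_tint((100, 100, 100), 2.0, 0.0, 4.0)
banded = depth_tint((100, 100, 100), 2.0, 0.0, 4.0, levels=7)
```

With `alpha=0` the pixel is returned unchanged and with `alpha=1` only the depth color remains, so the 0.3 used for the results keeps the scene recognizable while still showing the depth bands.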

Filter 3: Background Warping

The last filter we attempted was a background warp. We start with an RGB image $I$ with depth values $D$. Then we choose some set $S$ of depth values to keep in focus and divide the image into two new same-sized images $I_1$ and $I_2$. The first image, $I_1$, has the same values as $I$ at pixels whose depth values are in $S$, and is black otherwise. The second image, $I_2$, does the opposite and has empty pixels at locations with depth values in $S$.

The next step is the warping of $I_2$. We did this with a coordinate system on the image itself, with the origin at the center. We expressed each pixel location in polar coordinates $(r, \theta)$, where $r$ is the distance to the center and $\theta$ is the angle from the horizontal, measured counterclockwise. The actual warping was done by replacing the RGB values of the pixel at location $(r, \theta)$ with the values of the pixel at a new location that depends on $d$, the depth value for that pixel. Then the two images were combined by replacing the pixel values of the warped image with those of $I_1$ at locations where $I_1$ does not have empty values.
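The exact warp function is not recoverable from the text, so the Python sketch below substitutes one plausible choice: a swirl in which each background pixel samples from the location whose angle is offset by `strength * d`. That mapping, the `strength` parameter, the `in_focus` predicate, and the use of 0 for empty pixels are all assumptions of this illustration.

```python
import math


def warp_background(image, depth, in_focus, strength):
    """Swirl background pixels about the image centre; keep the foreground fixed.

    image, depth: same-shaped lists of rows (grayscale values for simplicity).
    in_focus(d):  returns True for depth values that must stay untouched.
    The angle offset strength * d is an assumed warp, not the report's own.
    """
    height, width = len(image), len(image[0])
    cy, cx = (height - 1) / 2.0, (width - 1) / 2.0
    # Split into a foreground layer and a background layer; 0 marks "empty".
    foreground = [[p if in_focus(d) else 0 for p, d in zip(prow, drow)]
                  for prow, drow in zip(image, depth)]
    background = [[0 if in_focus(d) else p for p, d in zip(prow, drow)]
                  for prow, drow in zip(image, depth)]
    output = [row[:] for row in foreground]
    for y in range(height):
        for x in range(width):
            d = depth[y][x]
            if in_focus(d):
                continue  # foreground pixel: already copied unchanged
            r = math.hypot(x - cx, y - cy)
            theta = math.atan2(cy - y, x - cx)  # counterclockwise from horizontal
            theta_src = theta + strength * d    # assumed depth-dependent offset
            sx = round(cx + r * math.cos(theta_src))
            sy = round(cy - r * math.sin(theta_src))
            if 0 <= sx < width and 0 <= sy < height:
                output[y][x] = background[sy][sx]
            # source locations outside the image leave the pixel empty
    return output


# A half-turn swirl of a 3x3 background around a fixed centre pixel.
grid = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
depths = [[1, 1, 1], [1, 0, 1], [1, 1, 1]]
swirled = warp_background(grid, depths, lambda d: d < 0.5, math.pi)
```

Sampling from the background layer rather than the full image means foreground colors are never dragged into the warp, but any pixel wrongly classified by the depth map ends up on the wrong layer and is visibly displaced.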

Results

Hole filling

Below are examples of resultant images from our hole filling algorithm.

Though there are some errors, the algorithm does a good job of inferring depth in empty spaces from color data, and works in both indoor and outdoor situations. Additionally, there are limitations due to the low resolution of the source images.

Filter 1: Depth of Field

Below are results from our depth of field filter. The top series shows an image from the Intel camera. The focus is on the foreground with a blurry background and the results do resemble a depth of field effect. However, on the top left of the resultant image there is an artifact; the background is not blurred at this location. This is due to inaccuracies of the Intel camera depth map. When looking at the bottom series, from the Google Camera app, these effects are less prominent.

Filter 2: Color Mapping

Below are images showing the effects of our color mapping filter. The top series shows an image from the Intel camera. In this case the continuous (non-quantized) filter had the more noticeable impact, whereas in shorter-range scenes, such as the bottom series, the quantized filter had more impact and does a nice job of distinguishing the different depths. Additionally, the Intel camera's very low resolution led to lower-quality results. In contrast, the higher-resolution, more accurate depth maps from the Google Camera app produced better results.

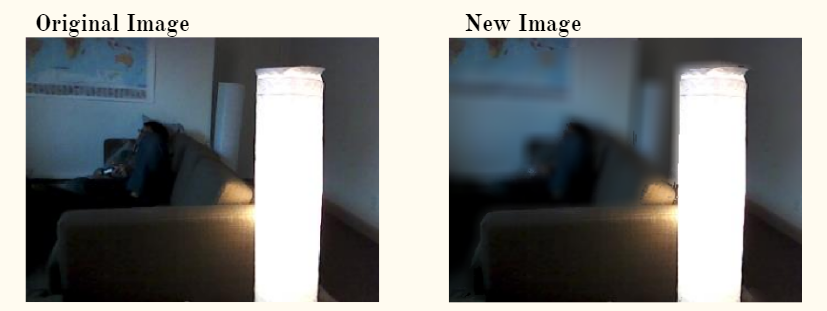

Filter 3: Background Warping

Below are results from our final filter, the background warp. We only attempted this on the images from Google Camera because the filter is very sensitive to inaccurate depth values. Even so, we can see below that portions of the lamp, which was meant to remain unchanged, were warped along with the background. This is because of small inaccuracies in the high-resolution depth map. However, this affects only a small portion of the lamp, and most of the background and foreground were separated nicely.

Matlab GUI

To bring all of our filters together in one place, we created a Matlab GUI that a user can experiment with. This interface has four buttons: depth of field (DOF), Warp Bg, Color Bg, and HSV Depth. The DOF and Warp Bg buttons apply the depth of field and background warping filters described above. The color mapping filter was divided into the Color Bg and HSV Depth buttons. The first displays depth as an alteration of the RGB values of an image, with a user choice of a red, green, or blue overlay, and the second displays depth by altering the HSV values of an image.

There are also four sliders which allow a user to change parameters of the filter such as center of focus, range of depth values to focus on, blur rate, color rate, and warp rate. The slider descriptions "Not in Use" change to a more meaningful description once a filter is clicked on. Next, the filter need only be clicked a second time to produce the desired effect. This code is attached in the appendix for experimentation.

Conclusions

We made use of color and depth images generated by both the Intel RealSense R200 camera and the Google Camera app. The Intel images require hole filling to obtain dense depth maps, which we did with a bilateral filter. This worked very well for the most part, but has issues with larger holes, in particular when recreating the depth of a background that may have been too far away for the camera. This is due to the large inaccuracies of the camera's depth calculations. More complex image inpainting algorithms, which make use of diffusive properties, could potentially be used next year. Additionally, the Intel camera outputs both of the IR images it uses for depth calculations. These two images could serve as additional information and may result in better depth maps.

With these dense depth maps we created three filters: a depth of field effect, a color mapping effect, and a background warping effect. The depth of field effect provided blurring that looked similar to the real effect. However, the blur rate is user defined, and next year it would be good to apply a realistic blur based on distance instead. For example, a user could input the f-number and other camera information in order to create a matching blur. The color mapping performed as expected. The background warping had a few difficulties because of inaccurate depth maps. The warping itself performed well, but the resultant images do not look good if there are small errors in depth. One idea for next year is to apply these filters to simulated scenes with perfect depth maps, on which the filters could be fully tested. Finally, we combined all of our filters into a GUI written in Matlab. This was interesting because it was the first time we had created a GUI in Matlab.

References

[1] Leon Gatys, Alexander Ecker, and Matthias Bethge, "Image Style Transfer Using Convolutional Neural Networks," in The IEEE Conference on Computer Vision and Pattern Recognition, 2016, pp. 2414-2423. http://www.cv-foundation.org/openaccess/content_cvpr_2016/papers/Gatys_Image_Style_Transfer_CVPR_2016_paper.pdf

[2] Carlo Tomasi and Roberto Manduchi, "Bilateral filtering for gray and color images," in Sixth International Conference on Computer Vision, IEEE, 1998, pp. 839-846. https://users.cs.duke.edu/~tomasi/papers/tomasi/tomasiIccv98.pdf

Appendix I

Here is the Matlab Code used to create our filters and GUI: Code.

Here are test images to apply our filters to: Images.

Here is code that controls the camera and saves images: Code.

Appendix II

Most of the work, such as creating the GUI and background reading, was done jointly; the parts that were not were:

- Rafael: Depth of Field filter, Background warping filter

- Austin: Color map filter, hole filling algorithm