iOS app for programmable camera

Introduction

Project objective

To create an iOS application that enables users to take better pictures and add cool effects to their photos using a programmable camera.

Programmable cameras

A programmable camera is a portable digital camera which can be controlled using a smartphone or any other Wi-Fi enabled remote device. These cameras have a fixed image sensor, but can mount various lenses. The digital stream of pixels from the camera sensor is processed in a user app, which uses APIs to communicate with the camera. Today, there are many APIs available for developers to integrate smartphones (mainly Android and iOS) with these Wi-Fi enabled digital cameras.

Technically, the cameras integrated into our smartphones are also programmable cameras. However, because of their limited range of capabilities and fixed lenses, they are far less programmable than a camera that offers advanced DSLR capabilities while also allowing programmers to control it through APIs.

The picture on the right shows how smartphones act as a controlling interface for the camera.

Smartphone application

Users can take great pictures with these digital cameras by changing properties such as ISO, exposure, f-number, and white balance. Unfortunately, users need prior knowledge of photography to operate these cameras properly. In addition, no photo preview is available when the user changes such camera properties, and these cameras have little processing memory.

Our team therefore decided to leverage the processing capability of smartphones and create an application that provides complex image processing on the live preview image, helping users understand the complex settings of powerful cameras. We enhanced the live preview of the programmable camera to be responsive to changes in luminosity and color channel exposures. In addition, we decided to implement real-time filters for the camera so that users can see filter effects applied on screen before capturing a picture.

Project description

We designed an iOS application for the programmable camera that displayed image information as well as showcased image processing techniques. By doing this, we hoped to learn more about the science behind these techniques. We also hoped to create the foundation for an application that users will eventually use to create better and more interesting photos.

In order to do this, we tried to come up with simple solutions to the following questions.

How can we enable taking better pictures?

As we said before, our aim in developing this app was to help users take better pictures. One of the first ideas we had was to overlay a real-time image histogram over the live camera stream seen by the user. This is a great way to help users capture the sort of picture they want by adjusting angles and lighting to achieve a certain kind of histogram. They can also make sure they are not missing details due to underexposure or overexposure of parts of the view.

Ultimately, we implemented two histograms in our app that are commonly used by photographers: the luminosity histogram and the RGB histogram. Both histograms plot the relative number of pixels on the Y-axis. The luminosity histogram shows brightness values on the X-axis, i.e., the darkest shadow (pure black) is located at the origin and the brightest highlight (pure white) is at 255 (in terms of the 256-step color values scale). The RGB histogram has the same X-axis going from dark to bright, but plots a separate distribution for each of the red, green, and blue channels rather than a single brightness distribution.
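The two histograms described above can be sketched in a few lines. This is an illustrative CPU-side example in Python, not the application's code (see Appendix 1). The function names are hypothetical, and the luminance weights are assumed to be the same ones given for the grayscale filter later on this page.

```python
def luminosity_histogram(pixels):
    """Count pixels per brightness level (0-255).

    `pixels` is an iterable of (r, g, b) tuples with integer values 0-255.
    """
    hist = [0] * 256
    for r, g, b in pixels:
        # Assumed weights: the grayscale equation used elsewhere on this page.
        level = int(round(0.2125 * r + 0.7154 * g + 0.0721 * b))
        hist[max(0, min(255, level))] += 1
    return hist


def rgb_histograms(pixels):
    """Count pixels per level separately for the red, green, and blue channels."""
    hists = {"r": [0] * 256, "g": [0] * 256, "b": [0] * 256}
    for r, g, b in pixels:
        hists["r"][r] += 1
        hists["g"][g] += 1
        hists["b"][b] += 1
    return hists


def normalize(hist):
    """Convert raw counts to relative pixel amounts (the Y-axis values)."""
    total = sum(hist)
    return [count / total for count in hist] if total else list(hist)
```

A tall bin at level 0 or 255 in the luminosity histogram indicates clipped shadows or highlights, which is the under- or overexposure a photographer would want to correct before capturing.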

How can we enable taking more interesting pictures?

We decided to implement a few real-time image filters in our app to add interesting effects to the live camera stream (and in turn, the pictures taken). Further down, we discuss some of the filters we added to our application.

Methodology

User Interface of the Application

The user interface of this application is fairly simple to understand, even for those not well-versed in the technical parameters of a digital camera (i.e., camera ISO, FNumber, Exposure, etc). The top bar contains a menu of options for selecting the camera property a user wishes to change. A ‘Filters’ button is part of this menu and is described in greater detail below. When any of the buttons is tapped, a table of options is launched in the live camera view.

Filters Button

This button is added to the top control panel with other adjustable options (the camera parameters mentioned above). When this button is tapped by the user, it gets highlighted in blue and triggers a table of filters the user can choose from. Upon choosing a filter option from this table, an appropriate filter is applied to the live camera stream. Users can pick and choose from different filters as well as change camera parameters and the live camera stream feedback changes accordingly. This makes it easy for users to follow the changes applied to the live stream and choose the best setting for their purposes before capturing images.

The filters UI looks as shown. It is scrollable and currently has 9 filters, but it can support many more. It works on live preview and applies filters to live preview images.

Taking better pictures: Histograms and Histogram Equalization

Image Histogram

Histograms are graphical representations of tonal and luminance distributions in an image. Inspecting histograms of the live preview in a camera can help photographers adjust the exposure values of their cameras. The RGB histograms can also be used as a guide to selectively adjust exposure for different colors, so that you can make sure you are not losing details by underexposing or overexposing certain colors. That's why we incorporated the live histogram feature into our app. The GPUImage library provides APIs by which histograms of images can be extracted. We used the APIs GPUImageHistogramFilter and GPUImageHistogramGenerator to extract a histogram and plot it. After extracting the histogram image, we blend it into the live preview image using a GPUImage blend filter. The screenshots are as seen below.

Histogram equalization and Histogram matching

Histogram equalization is a technique that adjusts pixel intensities to enhance contrast. The image's pixel intensities (often ranging from 0 to 255) are normalized, and the image is transformed into one whose gray levels have a linear CDF (cumulative distribution function). Since CDFs can be inverted, the intensities can be recovered from this linearized CDF.
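The transformation described above can be sketched for a single grayscale channel as follows. This is a minimal Python illustration of the textbook CDF-based method, not the Accelerate-based code used in the app. The function name `equalize` and the flat-list image representation are assumptions made for the example.

```python
def equalize(gray, levels=256):
    """Histogram-equalize a flat list of integer gray levels in [0, levels)."""
    hist = [0] * levels
    for value in gray:
        hist[value] += 1

    # Cumulative distribution: cdf[v] = number of pixels with level <= v.
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running)

    total = len(gray)
    cdf_min = next((c for c in cdf if c > 0), 0)
    denom = total - cdf_min
    if denom == 0:
        # Empty image or a single gray level: nothing to spread out.
        return list(gray)

    # Lookup table that maps each level so the output CDF is roughly linear.
    # Integer arithmetic with rounding avoids floating-point surprises.
    lut = [max(0, ((c - cdf_min) * (levels - 1) + denom // 2) // denom)
           for c in cdf]
    return [lut[value] for value in gray]
```

For example, `equalize([100, 101, 102, 103])` spreads four nearly identical levels across the full range and returns `[0, 85, 170, 255]`, which is the contrast enhancement the technique is meant to provide.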

In a grayscale or monotone image, this works very well and results in better exposure of underexposed parts. Both on live images and on stored images, grayscale equalization improves the detail visible in the image. We could not find histogram equalization APIs in the GPUImage library, so we used the Accelerate framework on iOS to implement histogram equalization.

Equalizing the histogram on the live image preview is useful for the user to adjust exposure and lighting for the scene being shot.

In a color image, however, this equalization needs to be repeated for the three color channels R, G, and B separately. Applying the same levels in all bins for the three channels results in color distortion, and the tonal distribution of the image is disturbed. This is because images rarely have equal intensities in all three color channels.

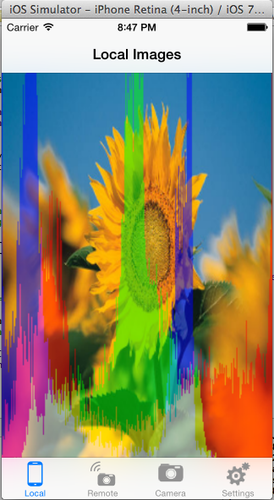

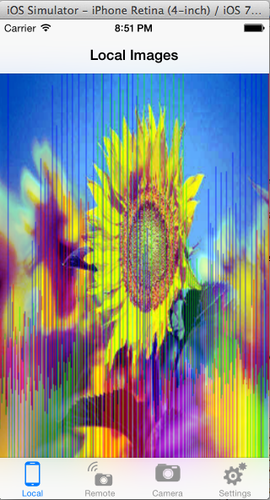

We show an example of a distorted color image when the R, G, and B histograms are equalized.

The same images with their RGB histograms blended over the images are as shown.

There are many RGB equalization techniques in research and in practice. In the future, we plan to incorporate histogram matching in our camera app. This will allow the user to specify a reference photo and ask the camera to match the histogram of the current view to the specified image’s histogram. This can help users' photos acquire the appearance of good photos.
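Since histogram matching is planned rather than implemented, the following is only a sketch of how the idea could work for a single channel: each source level is mapped to the reference level at which the reference CDF first reaches the source CDF. The function names and the flat-list representation are hypothetical, and a color version would need to decide how to treat the three channels.

```python
def cdf_of(gray, levels=256):
    """Return the normalized cumulative distribution of a flat list of levels."""
    hist = [0] * levels
    for value in gray:
        hist[value] += 1
    total = len(gray) or 1  # avoid dividing by zero for an empty image
    cdf = []
    running = 0
    for count in hist:
        running += count
        cdf.append(running / total)
    return cdf


def match_histogram(source, reference, levels=256):
    """Remap `source` so its histogram resembles that of `reference`."""
    src_cdf = cdf_of(source, levels)
    ref_cdf = cdf_of(reference, levels)

    lut = []
    j = 0
    for s in range(levels):
        # Both CDFs are non-decreasing, so j only ever moves forward.
        while j < levels - 1 and ref_cdf[j] < src_cdf[s]:
            j += 1
        lut.append(j)
    return [lut[value] for value in source]
```

With a dark source such as `[0, 0, 1, 1]` and a brighter reference such as `[100, 100, 200, 200]`, the source pixels are remapped to the reference's levels while keeping their relative order.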

Taking more interesting pictures: Filters using GPUImage library

GPUImage library

To begin with, we were given starter code that implemented a basic iOS app for a programmable camera. Initially, its functionality was that of any other camera app, letting users take and store pictures. It had some simple features that enabled users to adjust camera parameters such as ISO, Exposure, and FNumber. To add filter and histogram capabilities, we scoured the internet for helpful libraries to integrate into this code and finally settled on the GPUImage open-source library.

Reasons we decided to utilize this library:

- Users can create a wide variety of custom filters

- Simple, easy to understand Objective-C interface

- Ease of integration with the given Objective-C iOS starter code

- Speed (according to the creator of this library, GPUImage uses OpenGL ES 2.0 shaders to perform image and video manipulation much faster than could be done in CPU-bound routines)

The working and implementation of some of the filters in our application are outlined below.

Description of Filters

Grayscale Filter

A grayscale filter creates the effect of a typical black-and-white filter.

We use an instance of the GPUImageGrayscaleFilter class (available within the GPUImage library) and apply it to our live camera session when the user chooses ‘Grayscale’ in the dropdown menu of filters.

GPUImageGrayscaleFilter converts each pixel in the original color image to its grayscale equivalent. So, if a pixel has an original red value of ‘R’, green value of ‘G’, and blue value of ‘B’, its new grayscale value ‘BW’ is given by the following equation:

- BW = 0.2125R + 0.7154G + 0.0721B
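As a rough illustration of the equation above, the per-pixel conversion can be written as follows. This is a CPU-side Python sketch with a hypothetical function name. GPUImage performs the equivalent work in a shader on the GPU, so this only shows the arithmetic.

```python
def to_grayscale(pixel):
    """Convert one (r, g, b) pixel with 0-255 values to its gray equivalent."""
    r, g, b = pixel
    bw = int(round(0.2125 * r + 0.7154 * g + 0.0721 * b))
    bw = max(0, min(255, bw))  # guard against rounding just outside the range
    # A gray pixel has the same value in all three channels.
    return (bw, bw, bw)
```

Because the green weight is the largest, a pure green pixel comes out much brighter than a pure blue one, which matches how bright the two colors appear to the eye.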

Color Invert Filter

A color invert filter creates the effect of a typical photo ‘negative’.

We use an instance of the GPUImageColorInvertFilter class and apply it to our live camera session when the user chooses ‘ColorInvert’ in the dropdown menu of filters.

GPUImageColorInvertFilter changes the brightness value of each pixel to its inverse value on the 256-step color values scale. A pixel with a value of 255 in the original ‘positive’ image is changed to 0 in its negative image. The equations comprising this conversion for each pixel are as given below:

- Pixel’s Color Inverted Red value = 255 - Pixel’s Original Red value

- Pixel’s Color Inverted Green value = 255 - Pixel’s Original Green value

- Pixel’s Color Inverted Blue value = 255 - Pixel’s Original Blue value
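The three equations above amount to one subtraction per channel. A minimal Python sketch, with a hypothetical function name and assuming 8-bit channel values:

```python
def invert(pixel):
    """Return the photographic negative of one (r, g, b) pixel (0-255 values)."""
    r, g, b = pixel
    return (255 - r, 255 - g, 255 - b)
```

Applying the function twice returns the original pixel, so the 'negative' of a negative is the original image.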

Pixelate Filter

As indicated by its name, a pixelate filter is used to pixelate the image.

We use an instance of the GPUImagePixellateFilter class and apply it to our live camera session when the user chooses ‘Pixellate’ in the dropdown menu of filters.

GPUImagePixellateFilter divides the image into regions and fills each region with a single color. These regions are conventionally square-shaped ‘blocks’ (typical mosaic pixelation). This distorts the image; the larger the region size, the greater the distortion.
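Mosaic pixelation of this kind can be sketched as below. The example is an assumption-laden Python illustration rather than GPUImage's shader: the function name is hypothetical, the image is a list of rows, and the color of each block is taken from the pixel nearest the block's center (averaging the block would be another reasonable choice).

```python
def pixelate(image, block):
    """Fill each `block` x `block` square of `image` with one representative pixel.

    `image` is a list of rows; each entry can be a gray level or an (r, g, b) tuple.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    out = [row[:] for row in image]  # leave the input untouched
    for top in range(0, height, block):
        for left in range(0, width, block):
            # Blocks on the right and bottom edges may be smaller than `block`.
            bottom = min(top + block, height)
            right = min(left + block, width)
            color = image[(top + bottom - 1) // 2][(left + right - 1) // 2]
            for y in range(top, bottom):
                for x in range(left, right):
                    out[y][x] = color
    return out
```

With `block=1` the image is unchanged; increasing `block` discards more detail.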

Emboss Filter

As indicated by its name, an emboss filter is used to provide an embossed effect to the image.

We use an instance of the GPUImageEmbossFilter class and apply it to our live camera session when the user chooses ‘Emboss’ in the dropdown menu of filters.

GPUImageEmbossFilter replaces each pixel of an image by either a highlight or a shadow, depending on light and dark boundaries on the original image, respectively. The filtered image shows the rate of color change at each location of the original image.
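One simple way to obtain the highlight-or-shadow effect described above is to take the difference between diagonally opposite neighbors and add a mid-gray offset. The Python sketch below uses that approach on a grayscale image. The function name, the choice of neighbors, the 128 offset, and the border handling are all assumptions for illustration and are not claimed to match GPUImageEmbossFilter.

```python
def emboss(gray):
    """Emboss a grayscale image given as a list of rows of 0-255 levels."""
    height = len(gray)
    width = len(gray[0]) if height else 0

    def at(y, x):
        # Clamp coordinates so border pixels reuse their nearest neighbor.
        return gray[max(0, min(height - 1, y))][max(0, min(width - 1, x))]

    out = []
    for y in range(height):
        row = []
        for x in range(width):
            # Positive difference -> highlight, negative -> shadow.
            diff = at(y + 1, x + 1) - at(y - 1, x - 1)
            row.append(max(0, min(255, 128 + diff)))
        out.append(row)
    return out
```

Flat regions come out as uniform mid-gray, while edges become bright or dark depending on the direction of the intensity change, so the output reflects the rate of change at each location.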

The screenshots showcasing the filters are in the following order:

- Original image of Lenna

- Grayscale filtered version

- Color inverted version

- Pixelated version

- Embossed version

Results

We have embedded our result images and screenshots throughout this Wiki page.

Conclusions

We have developed an iOS application for a programmable camera that enables users to take better and more interesting pictures. In order to do this, we explored various image processing libraries that are used in iOS development frameworks.

What we learned

We had a lot of fun developing this application and learned a lot in the process. The most important things we learned were:

- To develop an iOS app

- The structure of the image processing pipeline in a digital camera in general and a programmable camera in particular

- To use the various amazing open-source image processing libraries available for iOS

- The tradeoffs of applying complex operations on live previews for the sake of real-time feedback

- How filters can be applied to the digital stream of pixels, and how images transformed by filters can support further scientific observations

- Histogram equalization operations on live images as well as stored images

Next steps

We intend to implement histogram matching to help the user take a picture that resembles any picture of their choice. We also intend to implement filter chaining so that users can apply filters on top of an image which has already been passed through filters.

References

[1] https://github.com/BradLarson/GPUImage

[3] http://www.workshopsforphotographers.com/photo-imaging-tips-techniques/reading-histogram

[4] http://www.sunsetlakesoftware.com/2012/02/12/introducing-gpuimage-framework

[5] http://en.wikipedia.org/wiki/Image_embossing

Appendix 1

Due to the NDA we signed with the company that generously loaned us the programmable camera, we are not allowed to post or describe the code behind our application.

Appendix 2

Division of work for this project was as follows:

Amit:

- Code: User interface development

- Project documentation:

- PowerPoint slides introducing our project and its user interface

- Wiki page

Kanthi:

- Code: Backend implementation of filters and histograms and integration of image processing libraries

- Project documentation:

- User interface development

- PowerPoint slides on image histograms

- Wiki page

Sumitra:

- Code: Backend implementation of filters

- Project documentation:

- PowerPoint slides on the working of the filters

- Wiki page

Acknowledgements

Our hearty thanks to:

- Prof. Wandell and Dr. Farrell for their support and inspiration

- Steven Lansel and Munenori Fukunishi, our helpful project mentors

- Brad Larson and his wonderfully useful GPUImage library