Irtorgb

Introduction

Near-infrared (NIR) images are widely used in remote sensing and surveillance because they make it possible to segment a scene according to the materials of its objects. Although NIR imaging makes object detection easier, its monochrome output conflicts with human visual perception and is therefore not user friendly. The lack of color discrimination, or the presence of wrong colors, in NIR images limits people's understanding of a scene and can even lead to wrong judgements. Colorizing grayscale NIR images is therefore desirable.

Colorizing NIR images is challenging because a single channel must be mapped into a three-dimensional color space with unknown inter-channel correlation, which greatly reduces the effectiveness of traditional color correction and color transfer methods. Moreover, since surface reflection in the NIR band is material dependent, some objects may be missing from an NIR scene because they are transparent to NIR. Therefore, unlike grayscale image colorization, which only estimates chrominance, NIR colorization must estimate both the chrominance and the luminance, which adds considerable complexity to the problem.

Traditional colorization methods extract the color distribution from an input image and fit it to the color distribution of the output image. Typical methods [1-2] segment the image into smaller regions that receive the same color, then retrieve a color palette for each region to estimate the corresponding chrominance. Recent approaches [3-5] use deep neural networks to colorize images automatically. Some [3] train from scratch, using the neural network to estimate chrominance values from monochrome images. Others [4,5] start from a pre-trained model and apply transfer learning to adapt it to their own colorization task. All of this work shows that deep learning techniques provide promising solutions for automatic colorization.

In this project, we propose two machine learning solutions, the L3 (Local, Linear and Learned) method and a neural-network-based model, to find an appropriate mapping from NIR to an RGB visible-spectrum representation, to which human eyes are more sensitive. The results are evaluated with CIELAB ∆E and MSE.

Method

L3 Method

The L3 (Local, Linear, Learned) method [6] combines machine learning and image systems simulation to automate the design of the image processing pipeline. It comprises two main steps: rendering and learning. The rendering step adaptively selects from a stored table of affine transformations to convert the raw IR camera sensor data into RGB images. Each pixel is classified by its local features into one of a few hundred classes (e.g. a red pixel with high intensity, surrounded by a uniform field). Each class has a learned affine transform, stored in the table, that weights the sensor pixel values to calculate the rendered outputs. Hence the rendered output at each pixel is an affine transform of the pixel and its neighbors.
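The rendering step described above can be sketched as follows. This is a minimal illustration and not the isetL3 implementation: the function names `classify_patch` and `render`, the table layout, and the toy classifier (which bins a patch only by its mean response level, ignoring pixel type and contrast) are all assumptions made for the example.

```python
import numpy as np

def classify_patch(patch, n_levels):
    """Toy classifier (assumption): bin a patch by its mean response in [0, 1]."""
    level = int(np.clip(patch.mean(), 0.0, 1.0) * n_levels)
    return min(level, n_levels - 1)

def render(sensor, table, patch_radius=2):
    """Render an H x W sensor image to H x W x 3 with per-class affine transforms.

    table[c] has shape (patch_size**2 + 1, 3): one weight per patch pixel
    plus a final bias row, for each of the 3 output channels.
    """
    n_levels = table.shape[0]
    size = 2 * patch_radius + 1
    padded = np.pad(sensor, patch_radius, mode="reflect")
    h, w = sensor.shape
    out = np.empty((h, w, 3))
    for i in range(h):
        for j in range(w):
            patch = padded[i:i + size, j:j + size]
            c = classify_patch(patch, n_levels)           # pick the class
            features = np.append(patch.ravel(), 1.0)      # 1.0 carries the offset
            out[i, j] = features @ table[c]               # affine transform lookup
    return out
```

The per-pixel loop is kept for readability; a practical version would group pixels by class and apply each transform to the whole group at once.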

The training step learns and stores the transformations used in rendering. Starting from scene spectral radiance data, two aligned representations are computed: the sensor responses of a model camera system, and the target rendered image derived from the scene radiance. The simulated sensor data at each pixel and its neighborhood (patch) is placed into one of several hundred pre-defined classes, based on properties such as the pixel type, response level, and local image contrast. For each class, the pairs of patch data and target output values are used to learn an affine transformation from the patch data to the target output value.
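The per-class learning step can be sketched as an ordinary least-squares fit. This is an assumption for illustration: the source only says that an affine transformation is learned per class, so the solver, the function name `learn_affine_table`, and the array layout below are hypothetical.

```python
import numpy as np

def learn_affine_table(patches, classes, targets, n_classes):
    """Fit one affine transform per class by least squares.

    patches: (N, P) flattened sensor patches
    classes: (N,) integer class label of each patch
    targets: (N, 3) desired RGB value for each patch's centre pixel
    Returns a table of shape (n_classes, P + 1, 3); the last row of each
    entry is the offset. Classes without samples keep an all-zero transform.
    """
    n, p = patches.shape
    table = np.zeros((n_classes, p + 1, targets.shape[1]))
    design = np.hstack([patches, np.ones((n, 1))])  # extra column for the offset
    for c in range(n_classes):
        mask = classes == c
        if not mask.any():
            continue
        solution, *_ = np.linalg.lstsq(design[mask], targets[mask], rcond=None)
        table[c] = solution
    return table
```

With few samples in a class the fit is underdetermined, which is one reason a larger dataset gives L3 "more matches" to learn from.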

Dataset

Our input dataset consists of the original NIR scene files, each with 6 fields (mcCOEF, basis, comment, illuminant, fov, dist). We started with 26 such images. Adapting a script from L3, we first created an RGB sensor and generated the corresponding IR sensor from it with an irPassFilter. These two sensors then read in the NIR images together with the padded spectral-radiance optical image computed from them (the padding allows for light spreading from the edge of the scene), and sensorCompute(irSensor/rgbSensor, oi) outputs the sensor volts (electrons) at each pixel of the optical image. Finally, we ran ipCompute on these sensor volt images to obtain the final sensor data images after demosaicing, sensor color conversion, and illuminant correction.

Note that the RGB images produced for training show a reddish cast. We could not find a way to obtain normally colored RGB images and IR images of the same size: saving images directly from the optical image gives the right hue at full size (606 × 481), but the corresponding IR images cannot be saved this way. For L3 training we therefore kept the original output of the image processing pipeline (ipCompute), which consists of IR and RGB images of size 198 × 242, with the RGB images showing the reddish cast.


Model and Attempted Improvements

We ran 4 experiments on our L3 model:

(1) The general L3 model: training on all images in the dataset except 4 test images, one from each category.

(2) Single category training: training on each of the 4 categories in the dataset (fruit, female, male, scenery) separately.

(3) Single channel training: training each of the R, G and B channels against the IR images separately.

(4) RGB to IR reverse training: "decolorization" of images, to get a sense of the difficulty of the mapping in both directions.

Results are presented in the 'Results' section below.

CNN Method

Because of the strong performance of CNN models in image processing tasks [7], we propose an integrated deep-learning approach to perform a spectral transfer from NIR to RGB images. Inspired by Limmer and Lensch [5], we apply a Convolutional Neural Network (CNN) to directly estimate the RGB representation from a normalized NIR image. Then, to obtain better image quality, the raw colorized output of the CNN goes through an edge enhancement filter that transfers details from the high-resolution input IR image.

Dataset

Given the complexity of the spectral mapping, a deep CNN model with thousands or even millions of trainable variables is desirable. A large amount of data is therefore required to prevent overfitting, where the model fits the training set perfectly but loses the ability to generalize to a new IR image. For the CNN model, we use the RGB-NIR Scene Dataset, which consists of 477 images in 9 categories captured in both RGB and near-infrared (NIR).

Because single category training gave comparatively good results with L3, we used only the Urban Building category, which consists of 102 high-resolution RGB/NIR image pairs, for the CNN model. The dataset was split into 80% training data and 20% test data, and all images were then cropped into 64 × 64 patches to feed into the model.
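The split-then-crop preparation can be sketched as below. The helper names `split_indices` and `crop_patches` are hypothetical, and the random image-level split and the non-overlapping crop grid are assumptions; the report does not state how patches were placed, so this sketch is not expected to reproduce the exact patch counts.

```python
import numpy as np

def split_indices(n_images, train_fraction=0.8, seed=0):
    """Randomly split image indices into train and test sets (image-level split)."""
    rng = np.random.default_rng(seed)
    order = rng.permutation(n_images)
    n_train = int(round(train_fraction * n_images))
    return order[:n_train], order[n_train:]

def crop_patches(image, size=64):
    """Cut non-overlapping size x size patches; any incomplete border is dropped."""
    h, w = image.shape[:2]
    patches = [image[r:r + size, c:c + size]
               for r in range(0, h - size + 1, size)
               for c in range(0, w - size + 1, size)]
    return np.stack(patches)

# Example: split 102 image pairs, then crop one (placeholder) RGB image.
train_idx, test_idx = split_indices(102)
rgb_patches = crop_patches(np.zeros((130, 200, 3)))
```

Splitting before cropping keeps all patches of one image on the same side of the split, so test patches never come from an image seen during training.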


Number of 64 × 64 patches used in the CNN model:

| Data type | Train | Test |
|---|---|---|
| Input IR (64 × 64) | 7572 | 1044 |
| Output RGB (64 × 64 × 3) | 7572 | 1044 |

CNN Architecture

Our CNN architecture is illustrated in Figure 8. We used many convolution/deconvolution layers and relatively few pooling layers to increase the total number of non-linearities in the network, which helps it learn the complex mapping from IR to RGB. The activation function of each convolution layer is the ReLU function, $f(x) = \max(0, x)$, and each activation is followed by batch normalization to further reduce overfitting.

In total, the model has 2,377,331 parameters, of which 2,376,723 are trainable. During training, we used stochastic gradient descent to minimize the mean squared error (MSE) between the pixel estimates of the normalized output RGB image (oRGB) and the normalized ground truth RGB image (GT). MSE is defined as follows:

$$\mathrm{MSE} = \frac{1}{N} \sum_{i=1}^{N} \left( \mathrm{oRGB}_i - \mathrm{GT}_i \right)^2$$

where $N$ is the number of pixel values (all pixels and color channels) and $i$ indexes them.

Post Processing

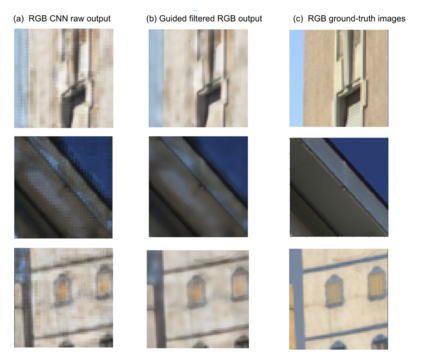

The raw output of the CNN is blurry and has visible noise, which may be caused by inaccurate pixel-wise estimates. The subsampling of the pooling layers combined with the correlation property of the convolution layers amplifies this effect [5]. Post-processing is therefore necessary to recover the lost details.

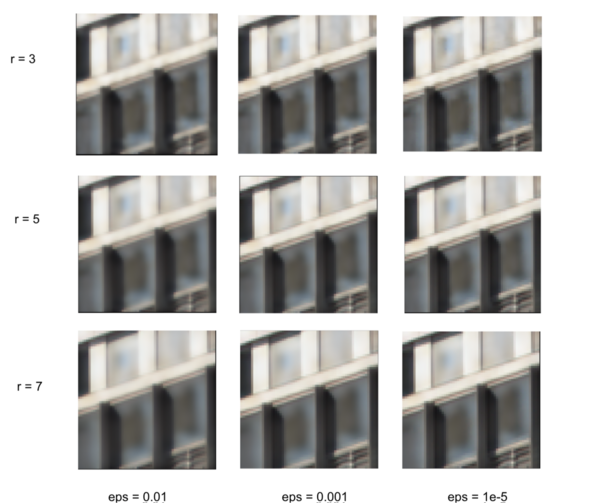

In the post-processing step, the raw colorized CNN output goes through a guided filter [8], which has an edge-preserving smoothing property, to recover high-frequency details from the input IR image. This property is mainly controlled by two parameters: a) the window radius r and b) the noise parameter ε. In the 'Results' section, we compare different parameter settings for the guided filter. With fine-tuned parameters, object contours and edges are clearly more visible than in the raw output oRGB.
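A guided filter of this kind can be sketched in plain NumPy, following the gray-guide formulation of He et al. [8]. Using the NIR image as the guide and filtering each RGB channel of the CNN output separately is an assumption about how the step was wired; the names `box_mean`, `guided_filter` and `enhance` are hypothetical.

```python
import numpy as np

def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, truncated at the image border."""
    h, w = img.shape
    integral = np.zeros((h + 1, w + 1))
    integral[1:, 1:] = img.cumsum(axis=0).cumsum(axis=1)
    top = np.clip(np.arange(h) - r, 0, h)
    bottom = np.clip(np.arange(h) + r + 1, 0, h)
    left = np.clip(np.arange(w) - r, 0, w)
    right = np.clip(np.arange(w) + r + 1, 0, w)
    total = (integral[bottom][:, right] - integral[top][:, right]
             - integral[bottom][:, left] + integral[top][:, left])
    count = (bottom - top)[:, None] * (right - left)[None, :]
    return total / count

def guided_filter(guide, src, r, eps):
    """Filter src so that its edges follow those of guide (both 2-D, same shape)."""
    mean_g = box_mean(guide, r)
    mean_s = box_mean(src, r)
    cov_gs = box_mean(guide * src, r) - mean_g * mean_s
    var_g = box_mean(guide * guide, r) - mean_g ** 2
    a = cov_gs / (var_g + eps)          # local linear coefficient
    b = mean_s - a * mean_g             # local offset
    return box_mean(a, r) * guide + box_mean(b, r)

def enhance(nir, rgb, r=5, eps=1e-5):
    """Apply the guided filter to each RGB channel with the NIR image as guide."""
    return np.stack([guided_filter(nir, rgb[..., k], r, eps) for k in range(3)],
                    axis=-1)
```

A larger r smooths over a wider neighborhood, while a larger ε pushes the local coefficient `a` toward zero and so suppresses more of the guide's texture.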

Results

L3

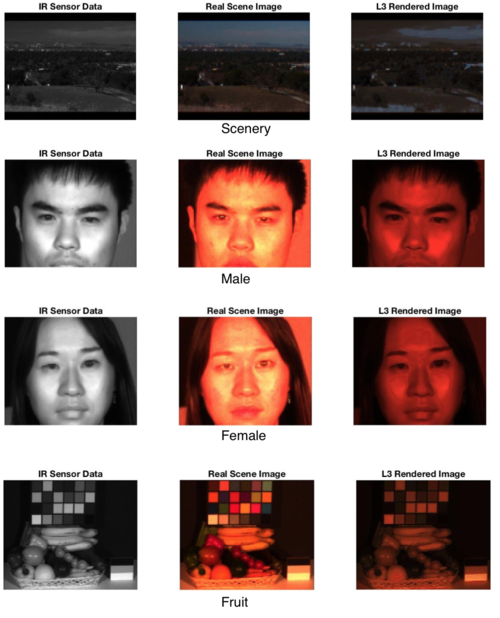

L3 model result

Main result for our L3 model (22 images for training set, 4 images for testing set):

| Category | Data Amount | DeltaE |
|---|---|---|
| Training+Testing | 22+4 | 7.1602 |
| Test: Scenery | 4 | 15.7399 |
| Test: Male | 10 | 5.9912 |
| Test: Female | 4 | 5.1804 |
| Test: Fruit | 8 | 7.1626 |


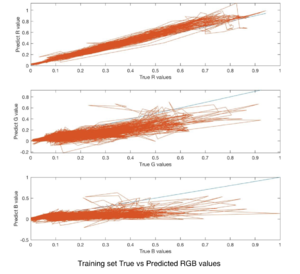

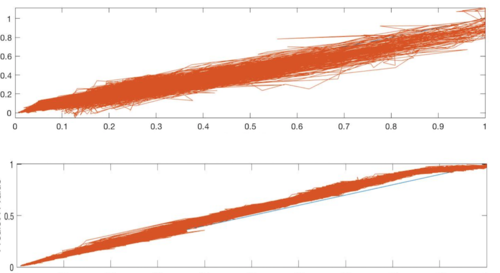

Our statistics show that the results differ across categories and color channels, and they vary drastically by category. Our theory is that the scenery type has the least amount of training data (4), while the female and male types can in a sense be considered the same category, since both contain pictures with human features. This is also why both types have the smallest ΔE, around 5, which is rather desirable. Moreover, our plots of true versus predicted values on the RGB channels show a clear tendency for the red channel to be the best predicted. This might be due to our reddish images. However, the fact that the red channel has the wavelength closest to the infrared captured in the IR images also inspired us to separate the channels and map each one from the IR images. This hypothesis was later shown to be inaccurate.

With this intuition from the general training in mind, we made the following three attempts at a better result.

Attempt 1 : Single Category Training

| Category | Data Amount | DeltaE - Training | DeltaE - Testing |
|---|---|---|---|
| Test: Scenery | 4 | 5.8738 | 5.2851 |
| Test: Male | 10 | 5.1895 | 4.8235 |
| Test: Female | 4 | 2.8871 | 8.0136 |
| Test: Fruit | 8 | 5.7514 | 3.9328 |


From the per-category results we can see a clear improvement on most types, especially the scenery pictures. This indicates that L3 training becomes more accurate if we preprocess the data by grouping similar images by color or subject.

Attempt 2 : Single Color Channel Training

| Category | Data Amount | DeltaE |
|---|---|---|
| Training | 22 | 7.1603 |
| Test: Scenery | 4 | 15.7399 |
| Test: Male | 10 | 5.9901 |
| Test: Female | 4 | 5.1816 |
| Test: Fruit | 8 | 7.1626 |

Single channel training searches for the transformation mapping from IR to R, G and B respectively. The results above show little difference from the combined RGB training on the full dataset. The reason might be that although we feed different channels as the target, we use the same sensor data as input for all three channels.

Attempt 3 : RGB to IR Reverse Training

| Category | MSE - RGB to IR | MSE - IR to RGB |
|---|---|---|
| Training | 0.05556 | 0.07572 |
| Test: Scenery | 0.04430 | 0.14670 |
| Test: Male | 0.06464 | 0.04639 |
| Test: Female | 0.03845 | 0.06328 |
| Test: Fruit | 0.04301 | 0.06706 |

Reverse training did decrease the MSE on the training set and on every test category except Male. This matches our intuition that decolorization is the easier direction, since the transformation goes from images with more information (3 channels for RGB images) to simpler ones (1 channel for IR images). It also suggests that increasing the accuracy of normal colorization may require more NIR sensor data than we currently have: training from multiple IR images (the same picture under different illumination, separate parts of one IR image, etc.) to one RGB image could increase accuracy by supplying more input data.

CNN

CNN model result

To evaluate the results against the ground truth RGB images, we used the MSE metric to quantitatively measure pixel differences across the image, and the CIELAB ΔE metric to measure the difference at the level of human perception. We also plot the relation between true and predicted RGB values to show how close we are to the ground truth image. We trained the CNN model for 1000 epochs on an NVIDIA GPU using CUDA Toolkit 9.0. After 1000 epochs the training and test errors were still improving, although more slowly. Further training might give a better result, but we stopped because of time constraints. The following sections show our current colorization results on the training and test sets.
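The two metrics can be sketched as follows. The report does not say which ΔE formula or color space conversion was used, so this sketch assumes sRGB inputs in [0, 1], a D65 white point, and the CIE76 ΔE (Euclidean distance in CIELAB); the function names are hypothetical.

```python
import numpy as np

# Linear sRGB -> XYZ matrix and D65 reference white (assumed color space).
_RGB_TO_XYZ = np.array([[0.4124564, 0.3575761, 0.1804375],
                        [0.2126729, 0.7151522, 0.0721750],
                        [0.0193339, 0.1191920, 0.9503041]])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])

def srgb_to_lab(rgb):
    """Convert sRGB values in [0, 1] with shape (..., 3) to CIELAB."""
    rgb = np.asarray(rgb, dtype=float)
    linear = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = linear @ _RGB_TO_XYZ.T / _WHITE_D65
    delta = 6 / 29
    f = np.where(xyz > delta ** 3, np.cbrt(xyz), xyz / (3 * delta ** 2) + 4 / 29)
    lightness = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([lightness, a, b], axis=-1)

def mse(pred, truth):
    """Mean squared error over all pixels and channels."""
    return float(np.mean((np.asarray(pred) - np.asarray(truth)) ** 2))

def mean_delta_e(pred, truth):
    """Mean CIE76 ΔE between two sRGB images of the same shape."""
    diff = srgb_to_lab(pred) - srgb_to_lab(truth)
    return float(np.mean(np.sqrt(np.sum(diff ** 2, axis=-1))))
```

MSE weights all channel errors equally, whereas ΔE is measured in a space designed to be closer to perceptual uniformity, which is why the two metrics can rank results differently.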

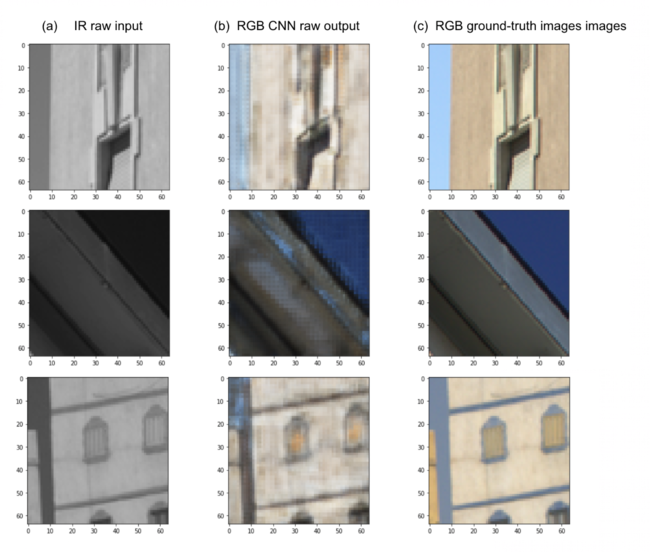

(1) Train set results

Metric 1: MSE = 0.119

Metric 2: CIELAB ΔE = 2.3265


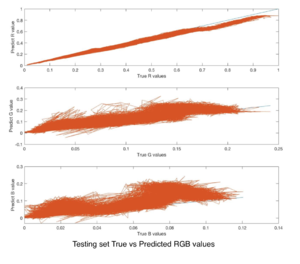

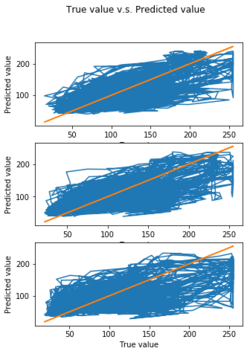

(2) Test set result

Metric 1: MSE = 0.0134

Metric 2: CIELAB ΔE = 4.8342


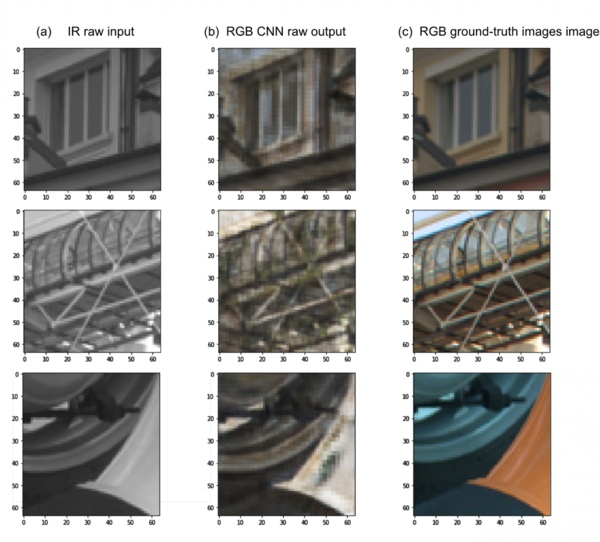

We can see that the training set, with smaller MSE, performs better than the test set in the following three aspects: a) the images are visually more colorful; b) the predicted RGB values are more tightly concentrated around the true line; c) the CIELAB ΔE is smaller, which indicates a smaller visual difference.

Inference quality also varies within the test set. The first two rows of images look better than the image in the third row. Our CNN failed to learn the color details of the colorful artificial objects in the third image, probably because the training set lacks colorful images. The Urban Building dataset is filled with modern buildings and blue sky, which have relatively neutral color distributions. When the model encounters a different color distribution in the test set, it therefore loses the ability to infer colors for the new IR image.

Guided Filter result

The raw CNN output images in the previous section look blurry; it seems that we recover color at the expense of resolution. We therefore passed the results through a guided filter. The following shows the filtered results under different combinations of two parameters: a) the local window radius r and b) the noise parameter ε. The window radius determines the size of the region smoothed by the local filter, and ε controls the denoising strength of the filter. We tried 3 common window radii and 3 ε values. Among these settings, we found that a guided filter with window radius r = 5 and ε = 1e-5 gives better edge preservation and smoothing for our problem.

CNN Final result

In this section, we give the final results of the integrated CNN approach.


Conclusions

L3: Our attempts to improve the L3 model tested our intuitions about the influence of color channel, image category and mapping information. Our next steps would be to collect a larger dataset, or images with higher resolution, to give L3 training more matches. Moreover, a desirable training method would categorize the images by similarity and render each category separately, and would increase the NIR input information available for the mapping by changing the setup during the sensor-to-image collection step. It is also important to run more experiments to find the cause of the reddish cast on the training output images.

CNN: Our CNN model shows promising results, but the dataset seems biased toward colors with low saturation. Future work involves collecting a larger and more comprehensive dataset with varied color distributions to enable multi-category training. This might give the CNN model better inference ability on a new IR input. We could also try a different CNN model. For now, we predict the RGB channels jointly in our training process, but we could try to train them separately. The triplet DCGAN model [9] is a good reference for this. It is an extension of the Generative Adversarial Network to image processing tasks, in which a generative model G captures the data distribution to generate the R, G and B channels separately, which are then combined to form a normal RGB image, and a discriminative model D estimates the probability that a sample came from the training data rather than from G. In this way, we could better preserve the information within each channel.

Reference

[1] A. Levin, D. Lischinski, and Y. Weiss, “Colorization using optimization”, ACM Transactions on Graphics, vol. 23, no. 3, pp. 689–694, 2004.

[2] L. Yatziv and G. Sapiro, “Fast image and video colorization using chrominance blending”, IEEE Transactions on Image Processing, vol. 15, no. 5, pp. 1120–1129, 2006.

[3] S. Iizuka, E. Simo-Serra, and H. Ishikawa, “Let there be color!: Joint end-to-end learning of global and local image priors for automatic image colorization with simultaneous classification”, Proceedings of ACM SIGGRAPH, vol. 35, no. 4, 2016.

[4] G. Larsson, M. Maire, and G. Shakhnarovich, "Learning representations for automatic colorization", Tech. Rep. arXiv:1603.06668, 2016.

[5] M. Limmer and H. P. A. Lensch, “Infrared colorization using deep convolutional neural networks”, in ICMLA 2016, Anaheim, CA, USA, 18–20 December 2016, pp. 61–68.

[6] H. Jiang, Q. Tian, J. Farrell, et al., “Learning the image processing pipeline”, IEEE Transactions on Image Processing, vol. 26, no. 10, pp. 5032–5042, 2017.

[7] K. Simonyan and A. Zisserman, “Very deep convolutional networks for large-scale image recognition”, arXiv preprint arXiv:1409.1556, 2014.

[8] K. He, J. Sun, and X. Tang, “Guided image filtering”, in European Conference on Computer Vision, Springer, Berlin, Heidelberg, 2010, pp. 1–14.

[9] P. L. Suárez, A. D. Sappa, and B. X. Vintimilla, “Infrared image colorization based on a triplet DCGAN architecture”, in IEEE Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), 2017, pp. 212–217.

[10] isetL3, Github source code

Appendix I

Github Page for this project: Colorize-NIR-to-RGB

Appendix II

Work Breakdown:

Yao Chen: data processing, L3 model, CNN model and improvement

Jun Li: data processing, L3 model, L3 improvements