Jim Best-Devereux

Introduction

With the advent and growth of digital sensors, film photography, once a titan of imaging in the United States and around the world, grew increasingly obscure as the years went on. Yet the allure of film still captures the interest of amateur and professional photographers alike. Just as digital sensors are characterized and described, film was analyzed in much the same way, through its characteristic curves, MTFs, and spectral sensitivities. Each type of film acted like a completely different sensor, producing a different rendering of the same scene. This “feel” is what still draws so many photographers to film. Efforts to recreate it have produced many software tools, ranging from image filters in apps such as Instagram and VSCO to tools such as DxO Filmpack that attempt to faithfully reproduce the properties of film digitally. While these filters are useful, they act on the image after capture rather than at the time of capture, and they do not fully recreate what so many seek when shooting with film.

In addition to software tools, some digital sensors have been designed to mimic the principles on which film was built. Foveon’s X3, for example, is a stacked sensor that uses the penetration depth of different wavelengths of light at each pixel to determine color, much the same way color film records an image. Just as the Foveon sensor depends on how deeply photons penetrate the silicon, film depends on light passing through its stacked layers and exposing the light-sensitive silver crystals embedded in them. Using a simulated Foveon sensor, this work aimed to estimate filter spectra that would produce images as if they had been captured on a specific type of film.

Background

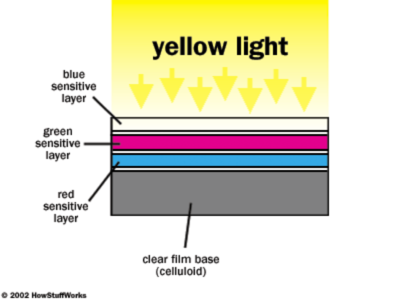

When aiming to simulate the characteristics of film, it is important to understand how a color film image is created. The figure shows the layered structure of color film.

The film's emulsion layers contain light-sensitive silver halide crystals. Exposure to light forms a latent image in the crystals; during development the exposed crystals are reduced to metallic silver, and the unexposed crystals are later dissolved away by the fixer. In a black-and-white film this developed silver forms the negative, and the exact characteristics of the image, including its sensitivity, depend on the chemistry of the emulsion and of the crystals themselves. In a color film, each layer also contains dye couplers: wherever silver is developed, the oxidized developer reacts with the couplers to form dye. The silver is then bleached and removed, leaving only the dye image that makes up the color negative.

The colors in a color negative follow the subtractive color system, in which each dye (cyan, magenta, or yellow) controls one primary color: cyan absorbs red, magenta absorbs green, and yellow absorbs blue. Unlike a black-and-white negative, a processed color negative contains no silver, and its colors are complementary to those of the scene. It is a negative in the sense that the more red exposure a region receives, the more cyan dye forms there.
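The complementary relationship between exposure and dye can be illustrated with a deliberately simplified toy model. It assumes, purely for illustration, that each layer's dye density is proportional to the exposure of the primary that layer records, and it uses the standard relation transmittance = 10^(-optical density). Real films follow nonlinear characteristic curves, so the `gamma` factor and both function names here are hypothetical.

```python
# Toy model (an assumption for illustration, not real film chemistry):
# each layer's dye density is proportional to the normalized exposure of
# the primary that the layer records.
DYE_FOR_PRIMARY = {"red": "cyan", "green": "magenta", "blue": "yellow"}


def dye_densities(exposure, gamma=1.0):
    """Map {"red": e_r, "green": e_g, "blue": e_b} (exposures in [0, 1])
    to the optical density of the complementary dye in each layer.
    `gamma` is a hypothetical contrast factor."""
    return {DYE_FOR_PRIMARY[p]: gamma * e for p, e in exposure.items()}


def negative_transmittance(exposure, gamma=1.0):
    """Fraction of each primary the processed negative transmits.

    Cyan dye absorbs red, magenta absorbs green and yellow absorbs blue,
    and transmittance = 10 ** (-optical density). All three primaries
    must be present in `exposure`.
    """
    dyes = dye_densities(exposure, gamma)
    return {p: 10 ** (-dyes[dye]) for p, dye in DYE_FOR_PRIMARY.items()}


# A strongly red patch forms the most cyan dye, so the negative passes
# little red light and looks cyan (red transmittance is about 0.1).
print(negative_transmittance({"red": 1.0, "green": 0.0, "blue": 0.0}))
```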

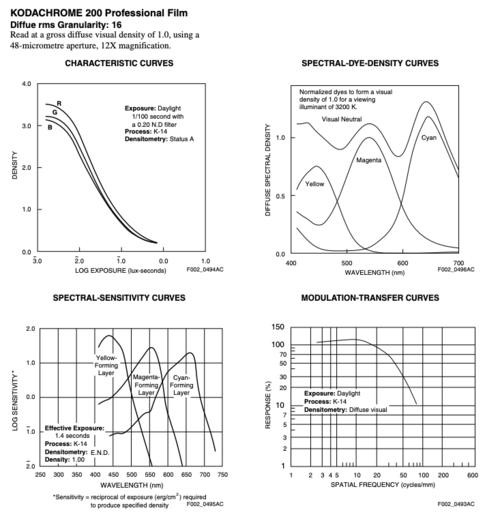

Each kind of film has its own set of characteristics, much like the digital sensors covered in this class. The figure shows the spectral-sensitivity curves of two well-known films, Kodak Portra 400 [5] and Kodachrome 200 [4]. Portra 400 is still widely used today, and Kodachrome, although discontinued, remains one of the best-known color films.

The spectral-sensitivity curves of the two films differ markedly, and this difference produces their different color renderings of the same scene. These curves are what we aim to simulate with our simulated Foveon sensor.

Methods

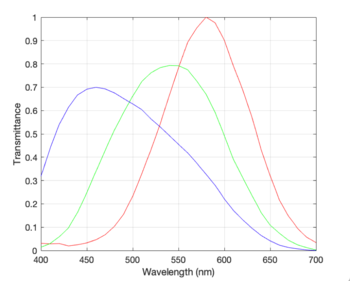

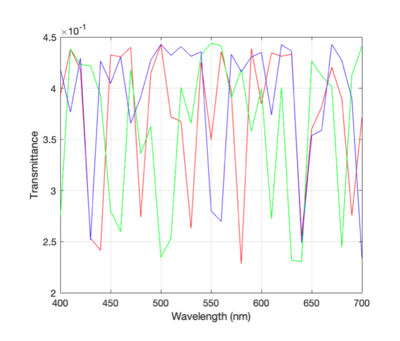

The first task was to simulate a Foveon sensor. By stacking an array of monochrome sensors, we created a simulated version of the X3 for testing and experimentation. Each layer of the Foveon sensor is treated as its own monochrome sensor, and the stack can be passed directly to ISETCam [1]–[3], since many of its functions, such as sensorCompute, accept an array of sensors as input. These default Foveon sensors used a baseline set of filter spectra, shown in the accompanying figure.

Each monochrome sensor was assigned a different curve from the filter spectra, pairing each filter with one color output. For example, the monochrome sensor that captures red data has only the red curve from the baseline filter spectra applied to it.
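The per-layer filtering just described can be sketched in NumPy as follows. This is a noise-free simplification, not ISETCam's sensorCompute: it ignores optics, pixel geometry, and noise, and folds exposure time and conversion gain into a single hypothetical `gain`. The Gaussian curves and their centers are placeholders, not the baseline spectra used in the project.

```python
import numpy as np


def stacked_sensor_volts(photons, filters, gain=1.0):
    """Noise-free response of a stack of monochrome layers.

    photons: (rows, cols, n_wave) photon counts per wavelength sample
    filters: (n_wave, n_layers) transmittance of the curve assigned to each layer
    gain:    hypothetical scalar standing in for exposure time, pixel area
             and conversion gain (ISETCam models these separately)
    returns: (rows, cols, n_layers), one monochrome image per layer
    """
    # Each layer sums the photons weighted by its own filter curve.
    return gain * np.tensordot(photons, filters, axes=([2], [0]))


def gaussian_filters(wave, centers, width=30.0):
    """Hypothetical bell-shaped curves, one column per layer."""
    wave = np.asarray(wave, dtype=float)[:, None]
    centers = np.asarray(centers, dtype=float)[None, :]
    return np.exp(-0.5 * ((wave - centers) / width) ** 2)


wave = np.arange(400, 701, 10)                             # 31 samples, 400-700 nm
filters = gaussian_filters(wave, centers=[610, 550, 450])  # red, green, blue layers
photons = np.zeros((2, 2, wave.size))
photons[..., wave == 450] = 1000.0                         # monochromatic 450 nm light
volts = stacked_sensor_volts(photons, filters)
print(volts.shape, volts[0, 0].argmax())                   # (2, 2, 3) 2 -> blue layer
```

Swapping in a different `filters` matrix (for example, fitted "film" curves) changes only the second argument, which is what makes the stacked formulation convenient for fitting.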

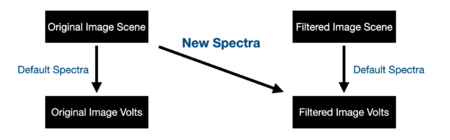

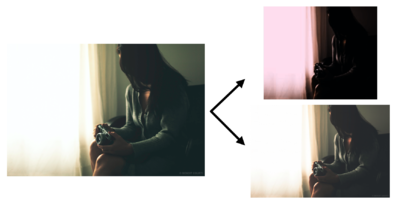

In addition, images processed with DxO Filmpack served as the baseline that the fit aimed to match. Using these images, we attempted the following process to fit the new spectra needed to create the “feel” of film. Both the original and the film-filtered images were loaded as scenes, and from each we computed the output volts that would be fed into the image-processing pipeline. The volts from the filtered image are the target: we want to reproduce them by applying the “film”-mimicking spectra to the original scene radiance. The accompanying figures visualize this process.
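Treating the sensor as linear and noise-free (our simplifying assumption, with exposure time and conversion gain folded into the unknowns), this fit is a linear least-squares problem. The notation is ours, not the source's: P holds the photon spectrum of each pixel of the original scene (N pixels by W wavelength samples), V holds the target volts computed from the film-filtered image (N by 3), and F holds the unknown film-mimicking filter curves (W by 3).

```latex
\hat{F} = \operatorname*{arg\,min}_{F \in \mathbb{R}^{W \times 3}} \lVert P F - V \rVert_F^2,
\qquad
P^\top P \, \hat{F} = P^\top V \quad \text{(normal equations)}
```

A bounded variant of the same problem adds the constraint 0 ≤ F(w, c) ≤ 1 for every wavelength w and channel c, since a filter cannot transmit more than all or less than none of the light.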

The images used are shown below, with the original image on top and its two filtered versions beneath it.

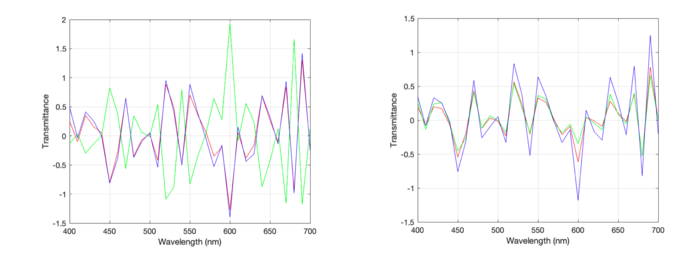

As a first pass, the normal-equation solution was used to fit the “film” spectra. Although simple to implement, it has several problems. Because ordinary least squares does not limit the range of the output values, the fitted spectra are not confined to [0, 1] as filter transmittances must be. In addition, the problem is underdetermined: each filter curve has one unknown per measured wavelength, far more than the three color channels of the RGB output, and the scene spectra do not vary independently at every wavelength, so many different curves reproduce the same volts. With infinitely many solutions, the fit is unstable and can vary greatly between runs.
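The non-uniqueness can be reproduced on synthetic data. In the hypothetical example below, every scene spectrum is a mixture of three basis spectra, so P has rank 3 even though it has 31 wavelength columns. The sketch solves the normal equations with NumPy's `lstsq`, which returns the minimum-norm solution when P^T P is singular, and then shows that adding any null-space direction of P gives a second, equally exact solution. The function name and the `rcond` cutoff are our own choices, not the project's code.

```python
import numpy as np


def fit_normal_equations(P, V, rcond=1e-10):
    """Least-squares filter fit via the normal equations (P^T P) F = P^T V.

    P: (n_pixels, n_wave) scene photons, V: (n_pixels, n_channels) target volts.
    P^T P is singular when the scene spectra span fewer dimensions than there
    are wavelength samples, so lstsq returns the minimum-norm solution.
    No bounds are imposed, so entries may fall outside [0, 1].
    """
    A = P.T @ P
    B = P.T @ V
    F, *_ = np.linalg.lstsq(A, B, rcond=rcond)
    return F


# Hypothetical synthetic data: every scene spectrum mixes 3 basis spectra.
rng = np.random.default_rng(0)
n_pix, n_wave = 200, 31
P = rng.random((n_pix, 3)) @ rng.random((3, n_wave))  # rank-3 photon data
F_true = rng.random((n_wave, 3))                      # "film" curves in [0, 1]
V = P @ F_true

F_hat = fit_normal_equations(P, V)
null_vec = np.linalg.svd(P)[2][-1]                    # a direction P cannot see
F_other = F_hat + 5.0 * null_vec[:, None]             # another exact solution
print(np.linalg.matrix_rank(P))                       # 3
print(np.allclose(P @ F_hat, V), np.allclose(P @ F_other, V))  # True True
```

Both F_hat and F_other reproduce the volts, yet neither needs to equal F_true; this is why the unconstrained fit can change substantially with small changes to the training data.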

Next, a bounded nonlinear least-squares fit was used to constrain the returned values to the range of physically possible solutions. This noticeably improved how well the two captures matched. The match was still imperfect, and the fitted spectra were substantially noisier than hoped, but the result shows the potential of using progressively more constrained fits to produce the desired filter spectra.
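One simple way to impose the [0, 1] bounds is projected gradient descent, sketched below. This is a swapped-in technique for illustration, not necessarily the solver used in this project: it takes a gradient step on the squared residual and clips every filter value back into the box. The function name, iteration count, and synthetic data are hypothetical.

```python
import numpy as np


def fit_bounded(P, V, lower=0.0, upper=1.0, n_iter=2000):
    """Minimize 0.5 * ||P F - V||^2 subject to lower <= F <= upper.

    Projected gradient descent: take a gradient step, then clip back into
    the box. A step of 1/L, with L the largest eigenvalue of P^T P, keeps
    the objective from increasing at any iteration.
    """
    A = P.T @ P                       # (W, W)
    B = P.T @ V                       # (W, C)
    step = 1.0 / np.linalg.norm(A, 2)
    F = np.full((P.shape[1], V.shape[1]), 0.5 * (lower + upper))  # feasible start
    for _ in range(n_iter):
        grad = A @ F - B              # gradient of 0.5 * ||P F - V||^2
        F = np.clip(F - step * grad, lower, upper)
    return F


# Hypothetical synthetic data: rank-3 scene spectra, "film" curves in [0, 1].
rng = np.random.default_rng(1)
P = rng.random((100, 3)) @ rng.random((3, 31))
V = P @ rng.random((31, 3))

F_fit = fit_bounded(P, V)
F_start = np.full_like(F_fit, 0.5)
print(F_fit.min() >= 0.0, F_fit.max() <= 1.0)                           # True True
print(np.linalg.norm(P @ F_fit - V) < np.linalg.norm(P @ F_start - V))  # True
```

The bounds make every fitted value a valid transmittance, but they do nothing to make the curves smooth, which is consistent with the noisy spectra reported in the Results.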

Results

Fitting with the normal equations yields spectra similar to those below. They are noisy and erratic, and they fall outside the acceptable bounds for spectral transmittance. Although the simplest solution is sometimes the best, fitting directly from the input scene photons to the volts of the filtered image performed poorly.

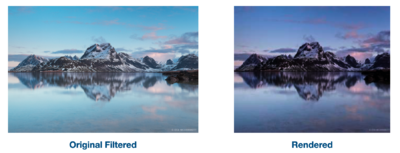

One rendered image produced with the normal-equation filter spectra is shown below alongside the intended result.

Moving on to bounded nonlinear least squares, the filter spectra at least fall within the range allowed for filter transmittances. The bounds keep every predicted value within [0, 1], as they should, but the output spectra are still noisy and erratic. One route for further work would be to constrain the differentiability, or smoothness, of the solution so the output spectra have smoother curves that better match the film spectral sensitivities shown in the Background section.
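One common way to encourage smooth curves, offered here only as a sketch of the proposed future work, is a Tikhonov-style penalty on the second differences of each curve along wavelength, a discrete stand-in for curvature. Stacking the penalty under the data term turns the problem back into ordinary least squares. The weight `lam`, the function names, and the synthetic data are hypothetical; the penalty could also be added to the gradient of a bounded solver to combine smoothness with the [0, 1] limits.

```python
import numpy as np


def second_difference(n):
    """(n - 2, n) matrix whose rows compute f[i] - 2 f[i+1] + f[i+2]."""
    return np.diff(np.eye(n), n=2, axis=0)


def roughness(F):
    """Sum of squared second differences along wavelength, over all channels."""
    return float(np.sum((second_difference(F.shape[0]) @ F) ** 2))


def fit_smooth(P, V, lam=1.0):
    """Minimize ||P F - V||^2 + lam * ||D F||^2 by stacking the penalty
    under the data term and solving one ordinary least-squares problem."""
    D = second_difference(P.shape[1])
    A = np.vstack([P, np.sqrt(lam) * D])
    B = np.vstack([V, np.zeros((D.shape[0], V.shape[1]))])
    F, *_ = np.linalg.lstsq(A, B, rcond=None)
    return F


# Hypothetical synthetic data: rank-3 scene spectra and rough random curves.
rng = np.random.default_rng(2)
P = rng.random((100, 3)) @ rng.random((3, 31))
F_true = rng.random((31, 3))
V = P @ F_true

F_smooth = fit_smooth(P, V, lam=1.0)
print(roughness(F_smooth) < roughness(F_true))   # True
```

Because many curves reproduce the same volts, the penalty picks the smoothest of them (trading a little residual for smoothness as `lam` grows), which addresses both the noise and the non-uniqueness of the unconstrained fit.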

Although the computed sensor volts lack some of the detail, the rendered images show the output approaching the “film” image.

When the fitted spectra are applied to another image downloaded from DxO Filmpack, some large discrepancies remain. Notably, the red saturation of the rendered image appears too high, and this red artifact shows up in nearly all images rendered with the fitted Foveon filters. We considered whether this was a color-space issue, but comparing the outputs of Foveon and Bayer arrays (the latter using Nikon D1 filter spectra) showed that a 3×3 transform converts one to the other perfectly, so the two lie in the same color space and differ only by a linear transform.
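A check of this kind can be sketched as a least-squares fit of a 3×3 matrix between the two sensors' per-pixel outputs, followed by a look at the relative residual. The example assumes both outputs are already 3-channel images of the same scene (for the Bayer array, after demosaicing); the arrays below are hypothetical stand-ins, not the project's Foveon or Nikon D1 data.

```python
import numpy as np


def fit_color_transform(src, dst):
    """Least-squares 3x3 matrix T with src @ T ≈ dst.

    src, dst: (n_pixels, 3) responses of two sensors to the same scene.
    Returns T and the relative residual; a residual near zero means the
    two outputs are related by a linear 3x3 transform.
    """
    T, *_ = np.linalg.lstsq(src, dst, rcond=None)
    rel_resid = np.linalg.norm(src @ T - dst) / np.linalg.norm(dst)
    return T, float(rel_resid)


# Hypothetical stand-ins for the two sensors' outputs on the same pixels.
rng = np.random.default_rng(3)
foveon_rgb = rng.random((500, 3))
mix = rng.random((3, 3))
bayer_rgb = foveon_rgb @ mix            # exactly linearly related
bayer_nonlinear = np.sqrt(bayer_rgb)    # not linearly related

T, r_lin = fit_color_transform(foveon_rgb, bayer_rgb)
_, r_nl = fit_color_transform(foveon_rgb, bayer_nonlinear)
print(r_lin < 1e-10, r_nl > 1e-6)       # True True
```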

Conclusions

While this project did not perfectly capture the filter spectra of the target films, it shows the promise of fitting filter spectra that produce an image matching what a color film would have captured. Additional constraints, such as differentiability and continuity of the solution, could be imposed on the fit to force it to better follow the dynamics of film spectral sensitivities. This work also shows how filter spectra fitted on training images of “film” photos can be applied to other scenes to approximate the captures that film would have produced.

References

[1] J. E. Farrell, F. Xiao, P. Catrysse, and B. Wandell, “A simulation tool for evaluating digital camera image quality,” in Image Quality and System Performance, Miyake and Rasmussen (Eds.), Proc. SPIE, vol. 5294, pp. 124–131, January 2004.

[2] J. E. Farrell, P. B. Catrysse, and B. A. Wandell, “Digital camera simulation,” Applied Optics, vol. 51, no. 4, pp. A80–A90, 2012.

[3] J. E. Farrell and B. A. Wandell, “Image systems simulation,” in Handbook of Digital Imaging, Kriss (Ed.), ch. 8, 2015. ISBN: 978-0-470-51059-9.

[4] Eastman Kodak Company, KODACHROME 25, 64, and 200 Professional Film, 2009.

[5] Kodak Alaris Inc., KODAK PROFESSIONAL PORTRA 400 Film, 2016.

Appendix

Main scripts referenced in this work:

- s_sensorStackedPixels.m

- t_sensorEstimation.m