KodaliVilkhuJolly

Introduction

The human eye is always moving: either through large movements that smoothly track a target in a scene, or through small ballistic movements that occur even while fixating a single point. While the exact purpose of these small, continuous (fixational) eye movements is still an area of active research, the literature broadly agrees that their primary purpose is to counter perceptual image fading [1]. Perceptual fading occurs when a static image is projected onto the retina: because the stimulus does not change, higher-level cognitive processing causes the image to be perceived as fading over time (see Appendix I for an optical illusion demonstrating this effect). Fixational eye movements therefore provide a mechanical "refresh" of the visual system, producing continual neural responses even to static scenes.

Specifically, for the scope of the Psych 221 final project, we set out to explore how fixational eye movements contribute to human visual acuity. This is a tractable problem that lets us leverage the ISETBio toolkit [2] and our understanding of how to interpret Modulation Transfer Function (MTF) curves.

Background

The primary inspiration for this study was a paper from Michele Rucci's lab, which illustrated the importance of fixational eye movements such as drift and saccades for accurately modeling the primate (macaque) visual system [3]. The study focused on determining contrast sensitivity as a function of the retinal ganglion cell (RGC) output of the macaque retina. This output was measured both with and without fixational eye movements (which were included as a parameter in a modeled retinal neuron), and the key finding was that eye movements were critical to replicating the contrast sensitivity observed in past behavioral experiments (De Valois et al. 1974). This is illustrated in the figure below (from the paper), which shows that the model with drift better matches the behavioral data and actually yields higher contrast sensitivity. For further details on the exact neural model, please consult the Materials and Methods section of the referenced paper. To briefly summarize for the purposes of this wiki, Rucci's lab used real eye movement data from 5 human participants to modulate how visual stimuli were fed into a neural model of RGCs, whose output was used to determine contrast sensitivity.

Based on the findings of this study, we hypothesized that the presence of fixational eye movements improves human contrast sensitivity. We set out to test this claim by simulating eye movements in the ISETBio toolkit.

Fixational Eye Movements

Fixational eye movement (FEM) comprises three distinct components: drift, microsaccades, and tremor. Drift is considered oculomotor noise and appears as a net change in the position of the eye's fixation point over time. Microsaccades are small-amplitude motions within the drift component that move toward the fixation point; they are characterized by a sudden, "ballistic" movement pattern. Like microsaccades, tremor is a small-amplitude motion, but it is oscillatory. Not all eye-tracking devices can record tremor properly, so it is not accounted for in all eye movement models [1]. The figure below shows the three components of FEM [4].

Finally, it is worth noting that all the group members are graduate students working on the Stanford Artificial Retina project. Therefore, we were particularly interested in how the findings from the study would impact future retinal prosthesis design [6].

Methods

The ISETBio fixational eye movement (FEM) model was used to generate the different eye movement patterns. The model captures drift and microsaccades but does not account for tremor. The drift component is an implementation of the delayed random walk model of Mergenthaler and Engbert [1]. Drift is represented as the sum of an autoregressive term for the excitatory burst neuron response, a baseline noise component for the tonic unit neuron response, and a negative feedback component that stabilizes the movement. The drift model is illustrated in the figure below [5]. The microsaccade model uses statistical properties of the inter-saccade interval to generate microsaccade jumps [2]. Both the drift and microsaccade submodels have several parameters that can be used as degrees of freedom to generate different eye movement patterns.
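To make the drift submodel concrete, the sketch below simulates one axis of the delayed random walk as we read it from [1]: w[i+1] = (1 - γ)·w[i] + ξ[i] - λ·tanh(ε·w[i-τ]) and x[i+1] = x[i] + w[i+1] + η[i], with ξ ~ N(0, σ²) as control noise and η ~ N(0, ρ²) as position noise. It is a standalone Python illustration, not the ISETBio MATLAB code; the function name `simulate_drift` and the zero initial history are our assumptions.

```python
import numpy as np


def simulate_drift(n_steps, gamma, lam, eps, sigma, rho, tau, rng=None):
    """Simulate one axis of a delayed random walk drift model (sketch of [1]).

    gamma: control gain of the autoregressive (burst neuron) term
    lam, eps: feedback gain and feedback steepness
    sigma, rho: std of the control noise xi and the position noise eta
    tau: feedback delay in time steps
    """
    rng = np.random.default_rng() if rng is None else rng
    w = np.zeros(n_steps)  # burst-neuron activity (velocity-like state)
    x = np.zeros(n_steps)  # eye position along this axis, starting at 0
    for i in range(n_steps - 1):
        # Delayed negative feedback; assume zero activity before the history fills up.
        w_delayed = w[i - tau] if i >= tau else 0.0
        xi = rng.normal(0.0, sigma)   # control noise
        eta = rng.normal(0.0, rho)    # position noise
        w[i + 1] = (1.0 - gamma) * w[i] + xi - lam * np.tanh(eps * w_delayed)
        x[i + 1] = x[i] + w[i + 1] + eta
    return x
```

For example, `simulate_drift(1000, gamma=0.25, lam=0.15, eps=1.1, sigma=0.075, rho=0.35, tau=70)` returns a 1000-sample path; these values are illustrative, not ISETBio defaults. Simulating x and y independently with the same parameters gives a 2-D drift trajectory.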

Although the drift and microsaccade submodels expose several parameters, and a fine-grained exploration of FEM patterns could be interesting, it would mostly yield a detailed analysis of the FEM model itself and its limitations. To gain a higher-level understanding of how the different FEM subcomponents contribute, we generated the following FEM patterns with the ISETBio FEM model:

1. No drift, No microsaccade (fixed point)

2. Yes drift, No microsaccade

3. Yes drift, Yes microsaccade

Drift was removed by setting the autoregressive control gain γ to 1, the noise to 0, and the feedback steepness ε to 0. Note that a FEM pattern with microsaccades but without drift could not be generated: because microsaccades are embedded in the drift component, removing drift yields a stationary point regardless of whether microsaccades are enabled.
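Substituting these settings into the drift update of [1] shows why the path freezes. This derivation assumes the update has the form below and that "the noise" covers both the control noise ξ (standard deviation σ) and the position noise η (standard deviation ρ):

```latex
\begin{aligned}
w_{i+1} &= (1-\gamma)\,w_i + \xi_i - \lambda \tanh(\varepsilon\, w_{i-\tau}),
  \qquad \xi_i \sim \mathcal{N}(0,\sigma^2) \\
x_{i+1} &= x_i + w_{i+1} + \eta_i,
  \qquad \eta_i \sim \mathcal{N}(0,\rho^2) \\[4pt]
\gamma = 1,\ \sigma = \rho = 0,\ \varepsilon = 0
  \;&\Rightarrow\; w_{i+1} = 0 \cdot w_i + 0 - \lambda \tanh(0) = 0 \\
  \;&\Rightarrow\; x_{i+1} = x_i + 0 + 0 = x_i \quad \text{for all } i .
\end{aligned}
```

The feedback gain λ drops out entirely, since it multiplies tanh(0) = 0.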

In addition to the patterns generated by the ISETBio FEM model, we explored custom eye movement patterns. The team is interested in retinal and visual prosthetics, which are not limited by the biological constraints of drift or microsaccades; such systems can generate novel movements to facilitate or even augment vision. As a simple exploration of custom movements, we generated the following patterns:

4. Rapid large horizontal movement

5. Rapid large vertical movement

6. Rapid large positive slope movement

7. Rapid large negative slope movement
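The custom patterns can be sketched as paths that jump back and forth along a single direction. The Python function below is a hypothetical illustration, not the contents of genPatterns.m: the function name, the alternating +A/-A jumps, the unit of `amplitude` (for example, cone spacings or arcminutes), and the convention that +y points up are all assumptions.

```python
import numpy as np

# Hypothetical unit directions for the four custom patterns (x right, y up).
DIRECTIONS = {
    "horizontal": (1.0, 0.0),
    "vertical": (0.0, 1.0),
    "positive_slope": (1.0, 1.0),
    "negative_slope": (1.0, -1.0),
}


def custom_pattern(kind, n_steps, amplitude):
    """Return an (n_steps, 2) path alternating between +amplitude and -amplitude along one direction."""
    d = np.asarray(DIRECTIONS[kind], dtype=float)
    d /= np.linalg.norm(d)  # unit vector, so amplitude is the distance from the center
    signs = np.where(np.arange(n_steps) % 2 == 0, 1.0, -1.0)  # +, -, +, -, ...
    return amplitude * signs[:, None] * d[None, :]
```

For example, `custom_pattern("vertical", 10, 5.0)` yields 10 positions alternating between (0, 5) and (0, -5); with an even number of steps the path is already centered on the origin.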

All the evaluated FEM patterns are summarized in the following table:

| Eye Movement Pattern | Model | Description |
|---|---|---|
| 1 | ISETBio FEM | No drift, no microsaccade (fixed point) |
| 2 | ISETBio FEM | Drift, no microsaccade |
| 3 | ISETBio FEM | Drift and microsaccade |
| 4 | Custom | Rapid large horizontal movement |
| 5 | Custom | Rapid large vertical movement |
| 6 | Custom | Rapid large positive slope movement |
| 7 | Custom | Rapid large negative slope movement |

The simulation environment was based on the ISETBio eye movement tutorial. A slanted-bar visual stimulus was created and passed through a model of a standard human eye to generate an optical image. The optical image was then projected onto a cone mosaic with a specified spatial density of cone types. The photoreceptor absorptions were recorded from the model, and ISO12233 was used to generate a contrast reduction plot: the spatial frequency response (SFR), a version of the modulation transfer function (MTF). The figure below summarizes the pipeline. The code was modified to allow multiple trials to be executed and plotted for each eye movement pattern in the table above.
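For readers unfamiliar with the ISO 12233 slanted-edge method, the sketch below shows its core steps in simplified form: locate the edge in each row, project pixels onto the edge to build an oversampled edge spread function (ESF), differentiate it into a line spread function (LSF), window it, and take the normalized Fourier magnitude. It is an illustration under stated assumptions, not the ISETBio routine we used: it assumes a near-vertical edge, a 2-D array on a regular grid (absorptions from a hexagonal or irregular cone mosaic would first need resampling), 4x oversampling, and a Hamming window, and it skips several refinements of the standard.

```python
import numpy as np


def slanted_edge_sfr(img, oversample=4):
    """Simplified slanted-edge SFR for a near-vertical edge in a 2-D array.

    Returns (frequencies in cycles/pixel, SFR normalized to 1 at zero frequency).
    """
    img = np.asarray(img, dtype=float)
    rows, cols = img.shape
    x = np.arange(cols)
    # 1. Locate the edge in each row from the centroid of the horizontal derivative.
    deriv = np.abs(np.diff(img, axis=1))
    centroids = (deriv * (x[:-1] + 0.5)).sum(axis=1) / deriv.sum(axis=1)
    # 2. Fit a straight line: edge column as a function of row index.
    slope, intercept = np.polyfit(np.arange(rows), centroids, 1)
    # 3. Horizontal distance of every pixel from the fitted edge.
    dist = x[None, :] - (slope * np.arange(rows)[:, None] + intercept)
    # 4. Bin distances at 1/oversample pixel to build the oversampled ESF.
    bins = np.round(dist * oversample).astype(int).ravel()
    bins -= bins.min()
    counts = np.bincount(bins)
    sums = np.bincount(bins, weights=img.ravel())
    valid = counts > 0
    esf = np.interp(np.arange(len(counts)), np.flatnonzero(valid), sums[valid] / counts[valid])
    # 5. Differentiate to the LSF and apply a Hamming window.
    lsf = np.diff(esf) * np.hamming(len(esf) - 1)
    # 6. SFR = Fourier magnitude of the LSF, normalized by its zero-frequency value.
    spectrum = np.abs(np.fft.rfft(lsf))
    freqs = np.fft.rfftfreq(len(lsf), d=1.0 / oversample)
    return freqs, spectrum / spectrum[0]
```

Feeding it an image with a blurrier edge lowers the returned SFR at mid and high frequencies, which is the kind of leftward shift discussed in the Analysis section.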

Results

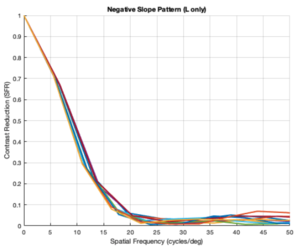

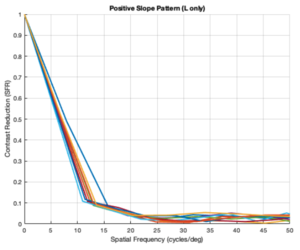

Each plot below shows SFR versus spatial frequency for 10 trials, with the eye movement path in each trial generated according to the movement scheme being analyzed.

No Drift and No Microsaccades (LMS cones)

No Drift and No Microsaccades (L cones only)

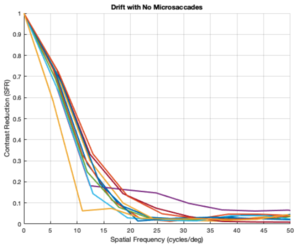

Drift and No Microsaccades (L cones only)

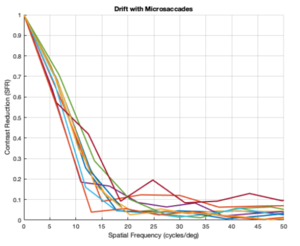

Drift and Microsaccades (L cones only)

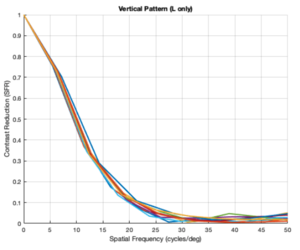

Custom Vertical Pattern (L cones only)

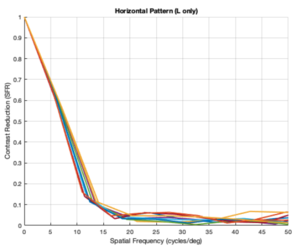

Custom Horizontal Pattern (L cones only)

Custom Negative Slope Pattern (L cones only)

Custom Positive Slope Pattern (L cones only)

Analysis

From the figures above, it is evident that different eye movement patterns led to different levels of absorption at the photoreceptors. However, the large variance between and within trials made a meaningful quantitative analysis of the data difficult. Nonetheless, we observed noticeable shifts in the SFR curves across eye movement patterns.
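One way to make the trial-to-trial variance easier to compare (not something we did in this project) is to reduce the 10 trials of each pattern to a mean SFR curve, a standard-deviation band, and a single number such as the frequency at which the mean SFR falls to 0.5, often called MTF50. A rightward shift of the curve then appears as a larger MTF50. The function below is a hypothetical Python sketch; its name, the 0.5 threshold, and linear interpolation between samples are assumptions.

```python
import numpy as np


def summarize_trials(freqs, sfr_trials, level=0.5):
    """Summarize the SFR curves from repeated trials of one eye movement pattern.

    freqs: (n_freqs,) increasing spatial frequencies.
    sfr_trials: (n_trials, n_freqs) array, one SFR curve per trial.
    Returns (mean curve, per-frequency std, first frequency where the mean
    curve falls to `level`, or None if it never does).
    """
    freqs = np.asarray(freqs, dtype=float)
    sfr_trials = np.asarray(sfr_trials, dtype=float)
    mean = sfr_trials.mean(axis=0)
    # Sample standard deviation across trials (zero if there is only one trial).
    std = sfr_trials.std(axis=0, ddof=1) if len(sfr_trials) > 1 else np.zeros_like(mean)
    below = np.flatnonzero(mean <= level)
    if below.size == 0:
        return mean, std, None
    j = below[0]
    if j == 0:
        return mean, std, float(freqs[0])
    # Linear interpolation between the last point above `level` and the first at or below it.
    f0, f1, m0, m1 = freqs[j - 1], freqs[j], mean[j - 1], mean[j]
    f_cross = f0 + (m0 - level) * (f1 - f0) / (m0 - m1)
    return mean, std, float(f_cross)
```

Comparing the crossing frequency across patterns 1 to 7, alongside the standard deviation, would turn the visual "shift" into a number with an uncertainty attached.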

The key takeaway from these results is that less eye movement yields better visual acuity. In the plots above, the SFR curve for the system with no drift and no microsaccades (stationary gaze) is shifted further to the right than all the other curves, which include some form of movement. This seems to contradict the Rucci study presented in the Background section. However, it is important to note that in our ISETBio pipeline the SFR curve is computed from the photoreceptor absorptions, not from ganglion cell outputs. In that sense, the simulation models the mechanical aspects of the human visual system rather than its perceptual components, and mechanically it makes sense for more motion to yield lower contrast sensitivity: moving a camera while it captures a scene generally produces a blurrier image. The custom patterns followed the same trend of less movement yielding higher contrast sensitivity. The output for the custom vertical movements is shifted rightward relative to the custom horizontal movements, and the movement videos linked in Appendix I show that the horizontal movements produce larger stimulus displacements on the cone mosaic. So, although our findings appear to oppose Rucci's on the surface, the relationship could invert once the visual information passes from the photoreceptors to the ganglion cells, which is the level at which Rucci's model operates. In fact, a first-order check already points this way: with no movement at all, perception would suffer from perceptual fading of the static retinal image, which immediately shows a limitation of the current ISETBio model, since it does not model ganglion cells.
A promising direction for future work would be to add the ganglion cell models described by Rucci's group in [3] to ISETBio and then re-run this study.
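The camera analogy can be made quantitative with a toy model. If the retinal image of a sinusoidal grating with spatial frequency f slides uniformly over a distance d during one integration window, averaging the grating over that motion multiplies its contrast by |sinc(f·d)| = |sin(π·f·d) / (π·f·d)|, so larger displacements cut contrast most at high spatial frequencies. The Python sketch below checks this numerically. It assumes uniform linear motion and ideal integration, a simplification of both the ISETBio cone model and real eye movement paths; the function name is hypothetical.

```python
import numpy as np


def motion_blurred_contrast(freq, travel, n_positions=2001):
    """Contrast of a unit-contrast sinusoidal grating after averaging over uniform motion.

    freq: spatial frequency (cycles per unit length).
    travel: total distance the image moves during integration (same length unit).
    """
    x = np.linspace(0.0, 1.0 / freq, 400, endpoint=False)  # one grating period
    shifts = np.linspace(-travel / 2, travel / 2, n_positions)  # positions visited
    # Average the grating over every position visited during the integration window.
    blurred = np.cos(2 * np.pi * freq * (x[None, :] - shifts[:, None])).mean(axis=0)
    return (blurred.max() - blurred.min()) / 2  # amplitude of the blurred grating
```

For a grating at 2 cycles per unit, increasing the travel from 0.1 to 0.4 units reduces the retained contrast from about 0.94 to about 0.23, matching |sinc(0.2)| and |sinc(0.8)|.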

Finally, these observations are still useful in the context of the retinal prosthesis our team is interested in developing as a long-term research project. Our results suggest that, for the purposes of contrast sensitivity, it may not be critical to perfectly match FEMs in a prosthetic. This might not hold once ganglion cells are modeled in ISETBio and those results are gathered, but regardless, being able to use this simulation framework to gauge the importance of eye movement encoding is valuable for developing the envisioned retinal prosthesis.

Conclusions

Overall, the findings of this study provide insight into the relationship between eye-movement-driven photoreceptor responses and contrast sensitivity: less eye movement yields better contrast sensitivity when it is calculated from photoreceptor absorptions. Additionally, testing custom eye movement patterns produced noticeable shifts in the SFR plots, so when working on a retinal prosthesis it could be worthwhile to experiment with custom eye movement patterns that are not found in biology.

Finally, future steps include repeating this study once a ganglion cell model has been incorporated into the ISETBio toolkit, to see whether and how the observed trends change.

References

[1] Mergenthaler, Konstantin, and Ralf Engbert. "Modeling the Control of Fixational Eye Movements with Neurophysiological Delays." Physical Review Letters, 30 Mar. 2007, doi:10.1103/PhysRevLett.98.138104.

[2] ISETBio Toolkit: https://github.com/isetbio/isetbio

[3] Casile, Antonino, et al. "Contrast Sensitivity Reveals an Oculomotor Strategy for Temporally Encoding Space." eLife, 8 Jan. 2019, doi:10.7554/eLife.40924.

[4] Makarava, N., et al. "Bayesian estimation of the scaling parameter of fixational eye movements." Europhysics Letters, 3 Dec. 2012, doi:10.1209/0295-5075/100/40003.

[5] Eye movement model: https://github.com/isetbio/isetbio/wiki/Eye-movements.

[6] Stanford Artificial Retina Project: http://med.stanford.edu/artificial-retina.html.

Appendix I

Parameters for Generating Different Eye Movements

Parameters of the ISETBio FEM model can be changed to produce different eye path matrices for cones.compute(). They are:

- Drift - parameters set in setDriftParamsFromMergenthalerAndEngbert2007Paper.m
  - Control gain (gamma) for the autoregressive term of excitatory burst neurons
  - Control noise, ksi, with zero mean and standard deviation sigma
  - Position noise, eta, with zero mean and standard deviation rho
  - Feedback gain (lambda)
  - Feedback steepness (epsilon)
  - Feedback delay for x- and y-position
- Microsaccade
  - Model Type - set in emGenSequence
    - None
    - Statistics based
    - Heatmap/fixation based
  - General Statistics - parameters set in setMicroSaccadeStats.m
    - Mean Interval Seconds
    - Interval Gamma Shape
    - Mean Amplitude (Arc Min)
    - Amplitude Gamma Shape
    - Mean Speed (Deg/sec)
    - Standard Deviation (Deg/sec)
    - Min Duration (ms)
    - Target Jitter (Arc Min)
    - Direction Jitter (degs)

Other parameters not in the ISETBio FEM model but that still affect eye movement path generation include:

- Integration time - amount of time between saccade movements (20-150ms)

- Center eye movement paths - boolean parameter, whether to center eye movement paths or not

- Number of eye movements - number of saccades to generate per trial

Link to perceptual fading optical illusion

http://www.123opticalillusions.com/pages/lilac_chaser.php

Link to the mosaic video of custom vertical and horizontal movements

Custom vertical pattern: https://www.youtube.com/watch?time_continue=1&v=x8gk1wPC6DU&feature=emb_logo

Custom horizontal pattern: https://www.youtube.com/watch?v=p0VfhDBI8-I&feature=emb_logo

Appendix II

Paul wrote the MATLAB script to generate SFR curves for various eye movement parameters. Sreela performed a thorough analysis of the Mergenthaler and Engbert model used in the ISETBio toolkit to determine which parameters to adjust in the MATLAB script, and how, to produce the different movement patterns. Raman helped analyze the data in the context of the Rucci research study. All group members worked together on the final interpretation of the results, the slides, and the wiki page.

Source Code

Zipped source code can be found here: Media:source_code.zip

It contains multipleTrials.m and genPatterns.m.