Lange

Introduction

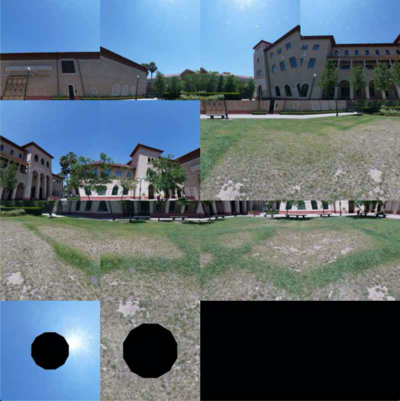

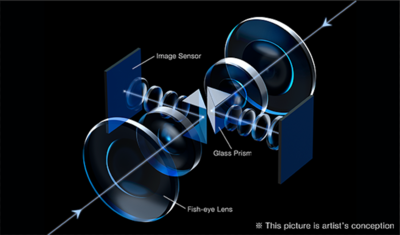

360-degree immersive imaging has become increasingly popular due to the research and development of VR headsets. This has led to a renewed interest in capturing 360-degree photography and video, with both convenient consumer solutions and production-ready cameras being developed in recent years. For high-quality, production-ready 3D-360 video, a 360-degree polydioptric camera, such as the Surround360 [1] from Facebook, is used to capture multiple wide-angle overlapping fields of view. In addition to being bulky and expensive, these cameras typically record video at resolutions greater than 4K and at bit rates that can exceed 50 Mb per second, or roughly 22 GB per hour of video [2]. A more convenient and consumer-friendly solution exists with dual-fisheye cameras, such as the Ricoh Theta shown in figure 1. Each fisheye lens captures a field of view between 195 and 210 degrees, and the two sensors are aligned on the same visual axis as shown in figure 2. The two captures therefore contain 10-15 degrees of overlap. These images can be projected into the equirectangular space and aligned together using a purely geometric approach.

Background

Typical image alignment and stitching is done using either direct (pixel-based) or feature-based alignment algorithms [3]. Spherical or equirectangular panoramas can be constructed using these traditional alignment and stitching algorithms as shown below. However, this approach is difficult in practice, as the photographer needs a well-equipped tripod and the knowledge required to capture the source images accurately enough to produce a good spherical projection.

This conventional stitching does not work well on raw images from dual-fisheye cameras since there is very limited overlap [4]. The images can instead be warped to an equirectangular projection, aligned, and blended together to remove the seam.

Methods & Results

The following geometric approach for converting dual-fisheye captures into a 360-degree equirectangular projection will work for any two fisheye images captured with an aperture (field of view) of at least 190 degrees, taken along the same alignment axis and pointing in opposite directions so that together they cover the entire 360-degree space. The center point, radius, rotation, and aperture of each fisheye are all necessary parameters to produce images that will align properly.

The following images are captured as still frames from a video recorded with a Ricoh Theta S. This camera does not provide a method to capture dual-fisheye still photography, although later models do support it through the provided API [5]. Due to this limitation, the raw images I used are at a 1920x1080 resolution. The left fisheye capture is located in a rectangle from pixel (0, 0) to (960, 960); its center point is therefore at pixel (480, 480), its radius is 480 pixels, and it is at a -90 degree rotation. The right fisheye capture is located in a rectangle from pixel (960, 0) to (1920, 960); its center point is therefore at pixel (1440, 480), its radius is also 480 pixels, and it is at a 90 degree rotation.
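The per-lens parameters above can be collected in a small structure. The following is a minimal Python sketch; the names `FisheyeParams` and `params_from_rect` are hypothetical and introduced only for illustration, and the 205 degree aperture is the experimentally determined value for the Ricoh Theta S reported later in this section.

```python
from dataclasses import dataclass
import math


@dataclass(frozen=True)
class FisheyeParams:
    """Hypothetical container for the four per-lens parameters."""
    center_x: float  # pixels
    center_y: float  # pixels
    radius: float    # pixels
    rotation: float  # radians
    aperture: float  # radians (field of view of one lens)


def params_from_rect(x0, y0, x1, y1, rotation_deg, aperture_deg):
    """Derive center and radius from the square that bounds a fisheye circle."""
    return FisheyeParams(
        center_x=(x0 + x1) / 2,
        center_y=(y0 + y1) / 2,
        radius=min(x1 - x0, y1 - y0) / 2,
        rotation=math.radians(rotation_deg),
        aperture=math.radians(aperture_deg),
    )


# Values taken from the 1920x1080 video frame described in the text.
LEFT = params_from_rect(0, 0, 960, 960, rotation_deg=-90, aperture_deg=205)
RIGHT = params_from_rect(960, 0, 1920, 960, rotation_deg=90, aperture_deg=205)
```

Deriving the center and radius from the bounding rectangle keeps the two lenses consistent if the frame layout changes.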

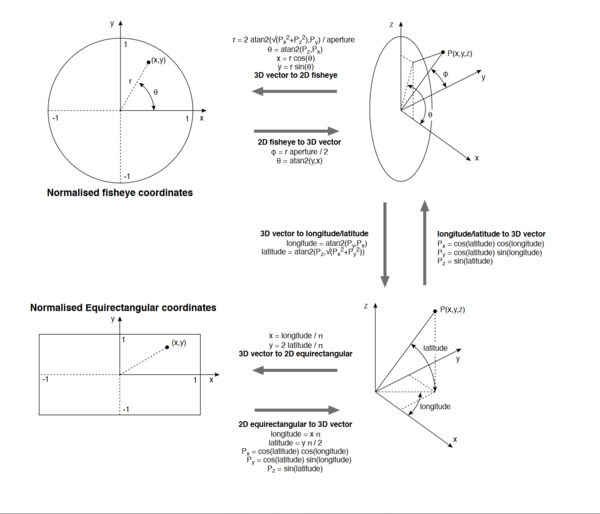

The following steps are repeated for the left and right fisheye images respectively. First, a destination image is created at the appropriate size for the given parameters. Next, each pixel in the destination image is remapped and projected to a location on the fisheye lens. This is accomplished by first mapping the row and column coordinates of the destination pixel into normalized equirectangular coordinates. The normalized equirectangular coordinates are then projected to a 3D vector on a unit sphere using the following equations:
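One common way to write this step is given here as an assumption, not necessarily the exact form used for these results. It takes normalized coordinates $(x, y) \in [-1, 1]^2$, converts them to a longitude and latitude, and places the lens axis along $+P_y$ so that the center of the destination image looks straight down the lens:

$$
\text{lon} = \pi x, \qquad \text{lat} = \frac{\pi}{2}\, y
$$

$$
P_x = \cos(\text{lat})\,\sin(\text{lon}), \qquad
P_y = \cos(\text{lat})\,\cos(\text{lon}), \qquad
P_z = \sin(\text{lat})
$$

With this convention, the image center $(x, y) = (0, 0)$ maps to $P = (0, 1, 0)$, the direction the lens is facing.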

Finally, the 3D point on the unit sphere is projected to a polar coordinate on the unit circle using the following equations, where the aperture is the field of view of a single fisheye in radians. By experimentation, this value was found to be roughly 205 degrees for the Ricoh Theta S:
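A standard equidistant fisheye model is sketched here as an assumption, since the exact equations may differ. Let $(P_x, P_y, P_z)$ be the unit vector from the previous step, with the lens looking along $+P_y$. The angle around the lens axis, $\theta$, and the angle away from the lens axis, $\phi$, are

$$
\theta = \operatorname{atan2}(P_z,\, P_x), \qquad
\phi = \operatorname{atan2}\!\left(\sqrt{P_x^2 + P_z^2},\; P_y\right)
$$

and the normalized position on the unit circle is

$$
r = \frac{2\phi}{\text{aperture}}, \qquad
x = r\cos\theta, \qquad
y = r\sin\theta
$$

Under this model a ray at the edge of the field of view ($\phi = \text{aperture}/2$) lands at $r = 1$, and any ray with $r > 1$ is not seen by this lens. For example, with a 205 degree aperture, a ray 90 degrees off the lens axis lands at $r = 180/205 \approx 0.878$.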

This (x, y) value can then be denormalized to a pixel location on the source fisheye image using the radius and center point. The color at that location in the fisheye image is then sampled and written to the destination pixel. This produces a spherical projection for each fisheye lens.
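The complete per-pixel mapping can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the implementation used to produce the results here: the function names are hypothetical, the lens is assumed to look along +Y, the fisheye is assumed to follow an equidistant model (`r = 2 * phi / aperture`), the rotation is assumed to be a roll around the lens axis, images are plain nested lists, and sampling is nearest-neighbor.

```python
import math


def fisheye_from_normalized(x, y, cx, cy, radius, aperture, rotation=0.0):
    """Map normalized equirectangular (x, y) in [-1, 1] to a fisheye pixel.

    Returns (px, py) in source-image pixels, or None when the direction
    lies outside the lens's field of view.
    """
    lon = x * math.pi
    lat = y * math.pi / 2.0

    # Point on the unit sphere; the lens axis is assumed to be +Y.
    p_x = math.cos(lat) * math.sin(lon)
    p_y = math.cos(lat) * math.cos(lon)
    p_z = math.sin(lat)

    # phi: angle away from the lens axis; theta: angle around it.
    phi = math.atan2(math.hypot(p_x, p_z), p_y)
    theta = math.atan2(p_z, p_x) + rotation

    # Equidistant model (assumption): radius grows linearly with phi.
    r = 2.0 * phi / aperture
    if r > 1.0:
        return None

    # Denormalize from the unit circle to source pixels.
    return (cx + r * math.cos(theta) * radius,
            cy + r * math.sin(theta) * radius)


def remap(src, width, height, cx, cy, radius, aperture, rotation=0.0, fill=0):
    """Build a width x height equirectangular image from one fisheye image."""
    dst = [[fill] * width for _ in range(height)]
    for row in range(height):
        for col in range(width):
            # Pixel centers mapped to normalized coordinates in [-1, 1].
            x = 2.0 * (col + 0.5) / width - 1.0
            y = 2.0 * (row + 0.5) / height - 1.0
            hit = fisheye_from_normalized(x, y, cx, cy, radius, aperture, rotation)
            if hit is None:
                continue
            sx, sy = math.floor(hit[0]), math.floor(hit[1])
            if 0 <= sy < len(src) and 0 <= sx < len(src[0]):
                dst[row][col] = src[sy][sx]  # nearest-neighbor sample
    return dst
```

Iterating over destination pixels and sampling backward from the source guarantees that every destination pixel is written at most once and leaves no holes, which a forward mapping from the fisheye would not. A production version would likely vectorize the loops and use bilinear interpolation.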

The two projections can then be aligned by using the left half of each image to produce a single image with a clearly visible pixel seam. Note that objects close to the lens suffer from parallax and do not line up across the seam.

The seam can be blended using an alpha gradient from the overlapping regions of the spherical projections [6].
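A linear alpha gradient across the overlap can be sketched as follows. This is only an illustration under assumptions: the function names, the single-row grayscale representation, and the choice of a linear ramp centered on the seam are hypothetical and not taken from [6].

```python
def alpha_ramp(col, seam, half_width):
    """Weight of the second image: 0 before the band, 1 after it, linear inside."""
    t = (col - (seam - half_width)) / (2.0 * half_width)
    return min(1.0, max(0.0, t))


def blend_rows(row_a, row_b, seam, half_width):
    """Cross-fade two aligned rows of pixel values around a seam column."""
    out = []
    for col, (a, b) in enumerate(zip(row_a, row_b)):
        w = alpha_ramp(col, seam, half_width)
        out.append((1.0 - w) * a + w * b)
    return out


# Example: two flat rows with different brightness, seam at column 4.
blended = blend_rows([10] * 8, [20] * 8, seam=4, half_width=2)
```

The width of the band would be chosen to match the overlapping region of the two projections; a wider band hides exposure differences better but produces more ghosting where parallax is present.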

Rendering

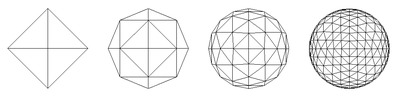

To render these images for end-user consumption, we can display them using a 3D graphics API such as OpenGL. First, a geospherical mesh is created by repeatedly subdividing the triangles of an octahedron, as shown in figure 4. This mesh is then placed in a vertex buffer on the GPU. A simple fragment shader is needed to texture the mesh with the image, along with a simple vertex shader to transform the vertex positions of the geosphere based on user input. The equirectangular projection is then loaded as a texture resource for the shader. Finally, to produce an immersive experience as if the user were located inside the sphere, face culling must be configured so that the inner surface of the mesh is the one drawn: with outward-facing triangle winding, this means culling front faces rather than the default back faces.
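The octahedron subdivision can be sketched on the CPU as follows, before the vertices are uploaded to a vertex buffer. This is a minimal sketch with hypothetical function names; it assumes a unit sphere and counter-clockwise winding when viewed from outside, and it leaves out texture coordinates and vertex deduplication.

```python
import math


def normalize(v):
    """Scale a 3D vector to unit length so it lies on the sphere."""
    n = math.sqrt(sum(c * c for c in v))
    return tuple(c / n for c in v)


def midpoint(a, b):
    """Midpoint of an edge, pushed out onto the unit sphere."""
    return normalize(tuple((p + q) / 2.0 for p, q in zip(a, b)))


def octahedron():
    """Eight triangles, wound counter-clockwise when seen from outside."""
    top, bottom = (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)
    ring = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (-1.0, 0.0, 0.0), (0.0, -1.0, 0.0)]
    tris = []
    for i in range(4):
        a, b = ring[i], ring[(i + 1) % 4]
        tris.append((top, a, b))
        tris.append((bottom, b, a))
    return tris


def subdivide(tris):
    """Split every triangle into four, preserving the winding order."""
    out = []
    for a, b, c in tris:
        ab, bc, ca = midpoint(a, b), midpoint(b, c), midpoint(c, a)
        out += [(a, ab, ca), (ab, b, bc), (ca, bc, c), (ab, bc, ca)]
    return out


def geosphere(levels):
    """Octahedron subdivided `levels` times; yields 8 * 4**levels triangles."""
    tris = octahedron()
    for _ in range(levels):
        tris = subdivide(tris)
    return tris
```

Each subdivision level quadruples the triangle count, so a handful of levels is usually enough for a smooth sphere.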

Conclusions

In summary, dual-fisheye cameras offer a convenient and simple method for producing 360-degree images of sufficient quality for mass-market use. The resulting images can then be easily rendered on a GPU to provide an immersive experience.

References

[1] https://www.facebook.com/facebookmedia/blog/facebook-360-updates-and-introducing-surround-360

[2] https://engineering.fb.com/video-engineering/under-the-hood-building-360-video

[3] Szeliski, Richard. “Image alignment and stitching: A tutorial.” *Foundations and Trends® in Computer Graphics and Vision* 2.1 (2007): 1-104.

[4] T. Ho and M. Budagavi, “Dual-fisheye lens stitching for 360-degree imaging,” in *Proc. of the 42nd IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP’17)*, 2017 (Accepted).

[5] https://api.ricoh/docs/theta-web-api-v2.1/options/_image_stitching/

[6] http://paulbourke.net/dome/dualfish2sphere/