Mapping Depth Information from a Depth Sensor to an RGB Camera

Introduction

Trisha Lian, Michelle Vue, Haichuan Yu

Summary

In this project, we map depth information from an external depth sensor to a query image taken by any RGB camera. Before runtime, we scan a scene of interest using a depth sensor and construct a 3D model of the scene. We also save a database of RGB images taken by the depth sensor's RGB camera. During runtime we localize the query camera in world space by matching descriptors between our query image and our database of images. By solving for the query camera pose and using our 3D model, we can obtain the depth information for our query image and produce RGBD images. The estimated depth images we obtain from our pipeline visually match our query images. For our ground truth test case, we obtain errors in rotation ranging from 4 to 13 degrees and translation errors from 15 to 60 cm. The amount of error depends on the number of iterations used in our RANSAC loop, but increased accuracy comes at the cost of slower processing speed.

Our mentor for this project was Roland Angst.

Motivation and Previous Work

Augmented reality (AR) is an emerging technology that visually modifies a user's perception of the world by adding information and interactivity. Its potential applications are wide-ranging. As AR infrastructure develops on current-generation smartphones and wearable devices, one key component is missing from all of them: a depth-sensing camera. How can one obtain depth information about a scene using only a single 2D RGB query camera?

In our project, we solve this problem by mapping previously stored depth information to any RGB camera. By scanning a scene with a depth sensor prior to runtime, we can produce RGBD images without needing to attach a potentially unwieldy depth sensor to our camera. Previous work [1] has mapped depth information to a query camera, but that approach relied on a stationary Kinect with a single point of view. Features from the query RGB image were matched with features from the stationary Kinect's RGB image, and these matches were used to find the camera pose of the query. The depth image from the Kinect was then warped and projected back into the query camera, thus producing RGBD images. The major drawback of this method is that the user can only look in the same direction as the Kinect. If the deviation angle is too large, the Kinect's depth information is no longer relevant to what the query camera sees. By using a dense 3D reconstruction and a database of images, our approach is not limited by the view of the Kinect; our user can move freely around the room and still obtain depth information. In addition, our implementation uses a much larger database of descriptors, which should theoretically allow us to find matches more consistently.

One possible application of this project is in augmented reality systems that assist individuals with low vision. The depth data could be used in AR to warn users when they are about to collide with nearby objects. This project would make such a system more lightweight and accessible because it does not require the user to carry a depth-sensing camera.

Pipeline Overview

In this section, we give an overview of our pipeline.

Software:

- OpenCV

- Point Cloud Library

- Kinect Fusion (from PCL)

- OpenGL

Hardware:

- Xbox Kinect

- RGB Camera (iPhone 4s)

Preparation:

- Camera Calibration

- Create 3D scan of room using Kinect Fusion

- Find keypoints using Hessian affine detector

- Extract SURF features from all Kinect RGB image keypoints

- Find each keypoint’s corresponding world coordinates using OpenGL, the camera pose, and the 3D model

- Create database of SURF features with corresponding world coordinates from depth map projection

Query procedure:

- Capture 2D indoor image using query RGB camera

- Find keypoints using Hessian affine detector

- Extract SURF descriptors from RGB image keypoints

- Match query RGB camera features with Kinect RGB camera features

- Obtain correspondences between 2D camera keypoints and 3D database keypoints

- Obtain camera pose from Perspective n Point solution using point correspondences in a RANSAC approach

- Synthesize depth map from OpenGL visualizer deprojection

Pipeline Details

In this section, we will explain the details of each step in our pipeline.

Calibration

Two cameras were used in this project: a depth sensor and an RGB camera. The depth sensor was the Microsoft Kinect, which carries an RGB sensor and an IR depth sensor. This makes it possible to output both 2D RGB images and depth maps. Our query camera was an iPhone 4s with a fixed focal length.

We were initially interested in two devices for the depth sensor: the Google Tango and the Microsoft Kinect. Despite initial success in capturing 3D point clouds using the Tango, the application for creating a dense surface reconstruction from a 3D point cloud was no longer available to the public. One solution was to develop the software ourselves, but that would have required the resources of an entirely separate project. The alternative was to use the Microsoft Kinect. After we discovered that Kinect Fusion can create a 3D surface reconstruction, we chose this route instead. For a detailed summary of how Kinect Fusion aggregates depth maps into a 3D model, see [2] and [3].

To perform some of the computations needed for this project, we needed the intrinsic parameters of our two cameras. Intrinsic parameters include the focal length and the principal point (the pixel coordinates of the center of the image). For more information on intrinsics, see [4]. We calibrated both the Kinect RGB camera and the iPhone 4s camera using a standard checkerboard pattern and OpenCV. The findChessboardCorners function in OpenCV automatically detects the corners of the checkerboard image, and the intrinsic parameters are calculated using OpenCV's calibrateCamera function. The calibration values we obtained for the Kinect were similar to those described online by other Kinect users. The intrinsic parameters of the Kinect are used to project keypoints into world space, and the intrinsics of the iPhone are used to estimate the query camera's pose when we solve PnP. Both of these steps are described in the following sections.
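To make the role of the intrinsics concrete, the following is a minimal sketch of the pinhole projection they define. It assumes an ideal camera with no lens distortion and +z pointing out of the camera; the function name and the numeric intrinsics are illustrative placeholders, not our calibration results.

```python
def project_point(point_cam, fx, fy, cx, cy):
    """Project a 3D point given in camera coordinates to pixel coordinates.

    fx, fy are the focal lengths in pixels and (cx, cy) is the principal point.
    Lens distortion is ignored in this sketch.
    """
    x, y, z = point_cam
    if z <= 0:
        raise ValueError("point must be in front of the camera (z > 0)")
    u = fx * x / z + cx
    v = fy * y / z + cy
    return u, v


# Hypothetical intrinsics for a 640x480 image (placeholder values).
fx, fy, cx, cy = 525.0, 525.0, 319.5, 239.5

# A point on the optical axis projects onto the principal point.
print(project_point((0.0, 0.0, 2.0), fx, fy, cx, cy))
# A point offset to the side lands away from the image center.
print(project_point((0.5, 0.0, 2.0), fx, fy, cx, cy))
```

The same four numbers are what a checkerboard calibration estimates; a real pipeline would also carry the distortion coefficients that the calibration returns.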

Kinect Fusion

We installed Kinfu, an open source implementation of Kinect Fusion from the Point Cloud Library, on Ubuntu 14.04 [5]. We were not able to install it on Mac OS X because of missing NVIDIA graphics cards and incompatible versions of the g++ compiler. Kinfu allows us to process the collected depth maps into a dense 3D reconstruction of the scene. At periodic intervals during the scan, we also have Kinfu save RGB snapshots. We use these to build a database of descriptors that describe our scene. For a simple object such as our pool table, we collect around 20 RGB snapshots during the Kinect Fusion scan. The corresponding camera pose of the Kinect is also saved for every RGB snapshot. The following is an image of our 3D model and a diagram of our Kinect Fusion outputs.

There were several factors we had to consider to make our inputs compatible with Kinect Fusion's outputs. These outputs include the object model and the camera pose extrinsics, which are given as a rotation matrix (R) and a translation vector (t). Extrinsics describe the location of a camera relative to an origin in world space [4]. First, the world origin was not defined to be the center of the image. Instead, Kinect Fusion defines it to be [-1.5, -1.5, -0.3] meters from the starting position of the Kinect when the scan begins. This is with respect to the standard, right-handed computer vision coordinate system with +z looking through the camera and +y pointing up. Second, the rotation matrix reported by Kinect Fusion maps from the camera system to the world system rather than from world to camera. This requires us to transpose R to get the right rotation matrix. To account for these differences, we used the following as the Kinect's extrinsics:
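As a hedged illustration of the kind of conversion described above (not necessarily the exact expression we used), the sketch below inverts a camera-to-world pose into world-to-camera extrinsics. It assumes the pose is given as a camera-to-world rotation and the camera center in world coordinates. The function names are hypothetical, and the world-origin offset is not handled here.

```python
def transpose3(R):
    """Transpose a 3x3 matrix stored as a list of rows."""
    return [[R[j][i] for j in range(3)] for i in range(3)]


def mat_vec(R, v):
    """Multiply a 3x3 matrix by a 3-vector."""
    return [sum(R[i][k] * v[k] for k in range(3)) for i in range(3)]


def world_to_camera_extrinsics(R_cw, c_world):
    """Invert a camera-to-world pose.

    R_cw    : rotation taking camera coordinates to world coordinates
    c_world : camera center expressed in world coordinates
    Returns (R_wc, t_wc) such that X_cam = R_wc * X_world + t_wc.
    """
    R_wc = transpose3(R_cw)                       # inverse of a rotation is its transpose
    t_wc = [-x for x in mat_vec(R_wc, c_world)]   # t = -R^T c
    return R_wc, t_wc


# Example: camera rotated 90 degrees about z, sitting at (1, 2, 3) in the world.
R_cw = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
R_wc, t_wc = world_to_camera_extrinsics(R_cw, [1, 2, 3])

# Sanity check: the camera center must map to the camera-frame origin.
center_in_cam = [a + b for a, b in zip(mat_vec(R_wc, [1, 2, 3]), t_wc)]
print(R_wc, t_wc, center_in_cam)
```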

Features and Descriptors

As we will describe below, we would like to be able to match points of interest between our query image and our database of images. We do this using keypoints and descriptors; our keypoints indicate where on the image a point of interest is located and the "descriptor" describes the points so that they can be matched.

In order to match points across camera perspectives, we need to select keypoints that remain relatively constant across those perspectives. Since uniform regions and edges match poorly across camera perspectives, corners are used as keypoints. While raw pixels do not match well between views, it has been shown that the gradients around a corner keypoint remain more constant across camera perspectives [11]. A descriptor can be more robust under changes in perspective, illumination, and other optical distortions. Therefore, instead of storing the raw pixel values of a keypoint, a descriptor is built for each keypoint and stored for matching.

In this project, we used a Hessian affine feature detector [6] to locate "corner-like" parts in both the RGB dataset and the query image. The Hessian detector is similar to the standard Harris corner detector in that it first determines interest points across scale spaces and then determines ellipsoidal interest regions for each point. Both detectors are also based on derivatives. However, the Hessian detector outperforms other feature detectors in both the number of correspondences and the number of correct matches across viewpoint variations. The lead is especially pronounced at smaller viewpoint angles. Because we expect viewpoint variation between our query camera and the RGB images in our database, the higher correspondence rate between features is important. We describe the detected features using Speeded Up Robust Features (SURF) descriptors [7]. We selected SURF descriptors because they are several times faster to compute than standard SIFT features while providing similar capabilities in describing the Hessian features obtained. Our feature extraction and description were handled by OpenCV functions. A default threshold value of 400 was used for the Hessian affine detector, and we obtained approximately 2000 descriptors per image.

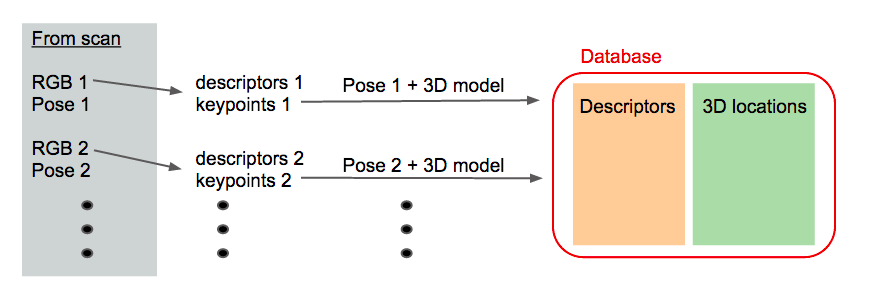

Project Features out to World

After we extract feature descriptors from all 2D RGB images obtained during the scan, we create a sparse "point cloud" of descriptors to represent our database. Specifically, we use the 3D model given by Kinect Fusion to find the corresponding 3D world coordinate of each keypoint in our database of descriptors.

We can accomplish this by using the camera poses output by Kinect Fusion. Each RGB image saved during the scan also had its extrinsics saved. Using our inherited OpenGL framework [8], we process each set of descriptors extracted from the RGB database images. We pass in the Kinect's extrinsics and intrinsics for each RGB image, the extracted keypoint locations, and the 3D model. The framework uses the camera pose and model to obtain the corresponding depth for each point. Using this new depth information, we can obtain each keypoint's corresponding 3D world location.
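Once a depth value is known for a keypoint, lifting it to a world coordinate is a short computation. The sketch below shows that last step only. It assumes the depth is measured along the camera's optical axis and that the pose is given as a camera-to-world rotation plus the camera center in world coordinates. The function name and numeric values are illustrative, and the depth lookup itself (done by the OpenGL framework in our pipeline) is not shown.

```python
def backproject_to_world(u, v, depth, fx, fy, cx, cy, R_cw, c_world):
    """Lift pixel (u, v) with known depth to a 3D point in world coordinates.

    depth   : distance along the optical axis (assumption of this sketch)
    R_cw    : 3x3 camera-to-world rotation, list of rows
    c_world : camera center in world coordinates
    """
    # Pixel -> camera coordinates by inverting the pinhole projection.
    x = (u - cx) * depth / fx
    y = (v - cy) * depth / fy
    p_cam = (x, y, depth)
    # Camera -> world coordinates.
    return tuple(
        sum(R_cw[i][k] * p_cam[k] for k in range(3)) + c_world[i]
        for i in range(3)
    )


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
# A keypoint at the principal point, 2 m away, seen from a camera at x = 1 m.
print(backproject_to_world(319.5, 239.5, 2.0, 525.0, 525.0, 319.5, 239.5,
                           identity, (1.0, 0.0, 0.0)))
```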

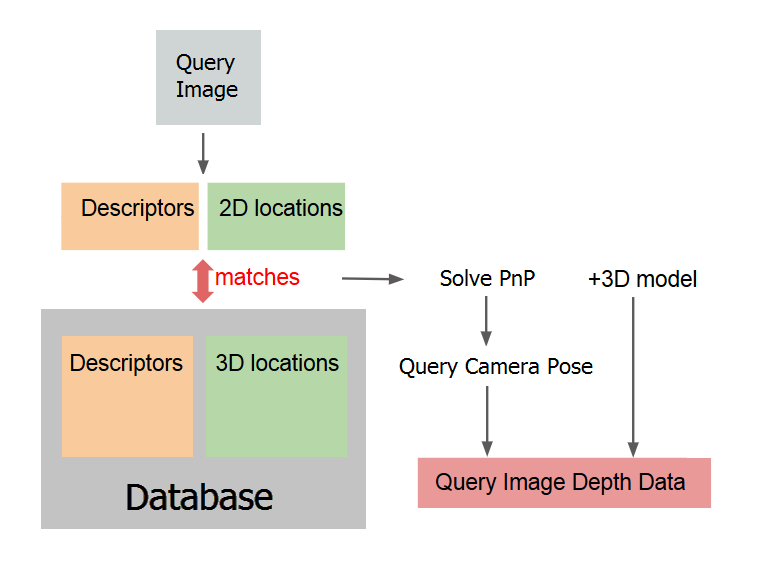

Our database now consists of a list of descriptors, aggregated from all the RGB images taken during the scan, and their corresponding 3D locations. The following figure summarizes how the world coordinates of the features are found.

Query Pose Estimation

We obtain the camera pose of the query camera by solving the Perspective n Point (PnP) problem [9]. The PnP problem describes a situation where we have 2D-3D correspondences and would like to find the pose of the camera that produced the image. First, however, we need to extract feature descriptors from the 2D RGB image provided by the query camera and match them to features in our database. We used a brute-force matcher and filtered out matches whose distances (the "difference" between two descriptors) were more than a third of the maximum distance. This threshold was determined through a combination of trial and error and an inspection of the distribution of distances for a query image.
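The matching and filtering step can be sketched in a few lines. This is a toy, pure-Python version that assumes Euclidean distance between descriptors and a nearest-neighbor match for each query descriptor. The function names are hypothetical, and a real pipeline would use OpenCV's matcher on SURF descriptors instead of these short tuples.

```python
import math


def l2(a, b):
    """Euclidean distance between two descriptors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def brute_force_match(query_desc, db_desc):
    """Return (query_idx, db_idx, distance) for each query descriptor's nearest neighbor."""
    matches = []
    for qi, q in enumerate(query_desc):
        di, dist = min(((i, l2(q, d)) for i, d in enumerate(db_desc)),
                       key=lambda m: m[1])
        matches.append((qi, di, dist))
    return matches


def filter_by_max_fraction(matches, fraction=1.0 / 3.0):
    """Keep matches whose distance is at most `fraction` of the largest match distance."""
    if not matches:
        return []
    cutoff = fraction * max(m[2] for m in matches)
    return [m for m in matches if m[2] <= cutoff]


# Toy 2-D "descriptors": the third query point has no good counterpart.
query = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0)]
database = [(0.0, 0.1), (1.0, 1.2), (9.0, 9.0)]
good = filter_by_max_fraction(brute_force_match(query, database))
print([(q, d) for q, d, _ in good])
```

Because the cutoff is relative to the worst match in the current image, it adapts to each query, but it also means a single very poor match loosens the filter for all the others.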

We tested our matching procedure by matching descriptors between different RGB images in our database. An example of this is shown in the next image.

Just as we match descriptors across two 2D images, our next step is to match feature descriptors between the 2D query image and the sparse "point cloud" of descriptors. This point cloud of descriptors was generated from our database, as described in the previous section. From the matching descriptors, we can find corresponding 2D and 3D locations. The 2D locations are from the query image, while the 3D locations are from our database. With these 2D-3D correspondences, we obtain the pose of our query camera by solving PnP within a Random Sample Consensus (RANSAC) loop [10]. OpenCV's implementation finds the camera pose that "fits" the highest number of corresponding 2D-3D locations. It determines this camera pose by minimizing the reprojection error, which is the error between the given image points and the projection of the world points into the image using the estimated pose.
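The quantity that RANSAC scores for each candidate pose can be illustrated directly. The sketch below computes the reprojection error of 2D-3D correspondences under a given world-to-camera pose and collects the inliers. It is not a PnP solver, only the scoring step; the function names and the 8-pixel threshold are assumptions made for illustration.

```python
import math


def project(X_world, R, t, fx, fy, cx, cy):
    """Project a world point with world-to-camera pose (R, t); None if behind the camera."""
    Xc = [sum(R[i][k] * X_world[k] for k in range(3)) + t[i] for i in range(3)]
    if Xc[2] <= 0:
        return None
    return (fx * Xc[0] / Xc[2] + cx, fy * Xc[1] / Xc[2] + cy)


def find_inliers(points_3d, points_2d, R, t, intrinsics, max_error_px=8.0):
    """Indices of correspondences whose reprojection error is within max_error_px."""
    inliers = []
    for idx, (X, (u, v)) in enumerate(zip(points_3d, points_2d)):
        projected = project(X, R, t, *intrinsics)
        if projected is None:
            continue
        if math.hypot(projected[0] - u, projected[1] - v) <= max_error_px:
            inliers.append(idx)
    return inliers


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
intrinsics = (500.0, 500.0, 320.0, 240.0)      # placeholder fx, fy, cx, cy
world_pts = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 1.0, 4.0)]
image_pts = [(320.0, 240.0), (571.0, 240.0), (100.0, 100.0)]  # last one is a bad match
print(find_inliers(world_pts, image_pts, identity, [0.0, 0.0, 0.0], intrinsics))
```

A RANSAC loop repeats this scoring for poses estimated from small random subsets of the correspondences and keeps the pose with the most inliers.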

Find Query Depth Map

Now that we have the camera pose, we can go through every pixel in the query image and use our OpenGL framework and our estimated camera pose to extract the depth associated with that pixel. This process is similar to how we found the 3D locations of our database keypoints above. Finally, we assemble the pixels in the correct order to create a depth map from the view of the query camera, which does not have a depth sensor. Our final output is a depth map that matches our query image.

Experiments

iPhone Camera Query Tests

After constructing our pipeline, we tested our code on several query images of our scene taken by an iPhone camera. The following depth images were extracted by estimating the location of our query camera through the process we described above. Once we found the rotation/translation matrix of our camera, we used our 3D model to find the corresponding depth image for a camera at that location. The depth images that are estimated seem to visually match the query images.

The following are several query images and their estimated depth maps. A value of 1 in the depth map indicates that there is no corresponding depth information for that pixel. This corresponds to areas in the view that do not intersect with our 3D model. It also corresponds to the points that were too close for the OpenGL viewer to resolve.
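One plausible reading of these values, stated here as an assumption rather than a description of our framework's internals, is that the depth map is a normalized OpenGL depth buffer. In that case a value of 1 corresponds to the far clipping plane (nothing was drawn), and geometry nearer than the near plane is clipped. The sketch below converts such a normalized value back to a metric distance under a standard perspective projection; the near and far values are placeholders.

```python
def linearize_depth(d, near, far):
    """Convert a normalized depth-buffer value d in [0, 1] to a distance along the view axis.

    Assumes a standard OpenGL perspective projection with the default depth range.
    Returns None when d >= 1, i.e. when no geometry was rendered at that pixel.
    """
    if d >= 1.0:
        return None
    z_ndc = 2.0 * d - 1.0                      # window depth -> normalized device coordinate
    return 2.0 * near * far / (far + near - z_ndc * (far - near))


near, far = 0.5, 10.0                          # placeholder clipping planes, in meters
print(linearize_depth(0.0, near, far))         # a point on the near plane
print(linearize_depth(0.9, near, far))         # most of the buffer range is spent near the camera
print(linearize_depth(1.0, near, far))         # background: no depth available
```

Under this assumption, moving the near plane closer to the camera would recover the clipped foreground at the cost of depth precision farther away.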

In the following depth map, the corner of the model is missing depth values due to a limitation in our visualizer. Objects that are too close to the camera are clipped.

In the following depth map, the left side of the pool table is missing. This is because we did not scan the left side of the pool table and the depth map has no information when viewed from that angle. This can be seen in the 3D model as well. A full 360 degree scan of the object would fix this issue.

Speed and Accuracy Evaluation

To test the accuracy of our implementation, we tested our pipeline with a ground truth case. In order to obtain a ground truth, we saved one of the RGB images from our scan and did not include it in the database. We treated this RGB image as a query image and tested it with our system. Using the camera pose given by the Kinect, we can quantify the accuracy of our estimate.

The following figure shows the RGB image for this query. It was taken by the Kinect's RGB camera during the scan, but was not included in the database. On its right is the "ground truth" depth image associated with this query. It was extracted from the 3D model using this RGB image's corresponding camera pose. This camera pose was given directly by Kinect Fusion and is therefore considered ground truth. Once again, the front half of the table is clipped due to a limitation in our visualizer.

To evaluate the speed and accuracy of our system, we ran this query image through our pipeline using different numbers of RANSAC iterations when solving the PnP problem. The following image shows the estimated depth maps obtained using 1,000 iterations and 10,000 iterations.

This next image displays the error between our 10,000 iteration estimate and the ground truth.

We quantify the error numerically in the following table.

To calculate the rotation error, we found the angle of rotation around each axis for our estimated camera pose and our ground truth camera pose. The error is the Euclidean norm of the difference between the two angle vectors. For the translation error, we calculated the norm of the difference between the translation vector found by our estimate and that of the ground truth.
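These two error measures can be sketched as follows. The Euler-angle convention (R = Rz * Ry * Rx) is an assumption chosen for illustration, since other conventions give different per-axis angles, and the function names are hypothetical. The sketch also does not handle angle wrap-around near ±180 degrees.

```python
import math


def euler_angles_deg(R):
    """Per-axis angles (x, y, z) in degrees, assuming R = Rz * Ry * Rx."""
    rx = math.atan2(R[2][1], R[2][2])
    ry = math.atan2(-R[2][0], math.hypot(R[2][1], R[2][2]))
    rz = math.atan2(R[1][0], R[0][0])
    return tuple(math.degrees(a) for a in (rx, ry, rz))


def rotation_error_deg(R_est, R_gt):
    """Euclidean norm of the difference between the two per-axis angle vectors."""
    a, b = euler_angles_deg(R_est), euler_angles_deg(R_gt)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def translation_error(t_est, t_gt):
    """Euclidean distance between estimated and ground-truth translations."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(t_est, t_gt)))


def rot_z(deg):
    """Rotation about the z axis, used here only to build a test pose."""
    c, s = math.cos(math.radians(deg)), math.sin(math.radians(deg))
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


print(rotation_error_deg(rot_z(10.0), rot_z(0.0)))
print(translation_error((0.3, 0.4, 0.0), (0.0, 0.0, 0.0)))
```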

From the table, we can see that increasing the number of iterations results in a more accurate estimate. In our case, the number of iterations in RANSAC indicates how many different camera poses we will try in order to find one that produces the largest number of inliers. Inliers are the set of corresponding points that fit our model parameters. In this case, they consist of the 2D-3D point correspondences that fit an estimated camera pose. Generally, a larger number of inliers indicates a more accurate estimate because we have found more correspondence pairs that work with a certain camera pose. From our rotation and translation errors, we see that using more than 1,000 iterations gives us a more accurate set of inliers.

For this test case, using 5,000 iterations and 10,000 iterations gave us the same set of inliers. This implies that there is a threshold for the number of iterations required to find an accurate set of inliers. Using more iterations will not necessarily improve the estimate. Finding this threshold is important because processing time increases as the number of iterations increases. Further tests are needed to determine the ideal threshold for our specific setup, and thereby obtain the most accurate results possible for a given processing time. For example, our results indicate that 5,000 iterations is enough to find a set of inliers that is more accurate than the set found from 1,000 iterations.
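The existence of such a threshold is consistent with the standard RANSAC analysis, which estimates how many random samples are needed to draw at least one all-inlier sample with a given confidence. The sketch below evaluates that textbook formula. The inlier ratios and the minimal sample size of 4 are illustrative assumptions, not values measured in our experiments.

```python
import math


def ransac_iterations(inlier_ratio, sample_size, confidence=0.99):
    """Number of samples needed so that, with probability `confidence`,
    at least one sample contains only inliers.

    N = log(1 - confidence) / log(1 - inlier_ratio ** sample_size)
    """
    if not 0.0 < inlier_ratio < 1.0:
        raise ValueError("inlier_ratio must be strictly between 0 and 1")
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    p_all_inliers = inlier_ratio ** sample_size
    return math.ceil(math.log(1.0 - confidence) / math.log(1.0 - p_all_inliers))


# The required count grows quickly as the fraction of good matches drops.
for ratio in (0.5, 0.2, 0.1):
    print(ratio, ransac_iterations(ratio, sample_size=4))
```

Beyond this count, extra iterations rarely find a better consensus set, which matches the plateau we observed between 5,000 and 10,000 iterations.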

The results also indicate the trade-off between accuracy and processing time. Currently, the times we achieve are not usable for real-time applications. There are various methods we could implement to speed up our process, including experimenting with new descriptors, using a faster matching algorithm, or generally optimizing our code. We could also use an initial camera pose guess when solving PnP. Because we know that the user will not move very far between image frames, the previous known camera pose can be used as an initial guess to speed up the process. To save database space and loading time, we could attach multiple descriptors to a single world point if they happen to project to similar locations. The slowest piece of our pipeline, however, is solving PnP. We could use a different method to solve the PnP problem instead of OpenCV's implementation, which may significantly reduce processing time.
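The database-compaction idea mentioned above could be sketched as a simple voxel grouping: world points that fall into the same small cube share one entry that carries all of their descriptors. This is only one possible approach. The function name and the 2 cm voxel size are assumptions, and we have not implemented or evaluated it.

```python
import math
from collections import defaultdict


def merge_by_voxel(points, descriptors, voxel_size=0.02):
    """Group database entries whose world points fall in the same voxel.

    Returns a list of (centroid, [descriptors...]) pairs, one per occupied voxel.
    """
    groups = defaultdict(list)
    for p, d in zip(points, descriptors):
        key = tuple(int(math.floor(c / voxel_size)) for c in p)
        groups[key].append((p, d))

    merged = []
    for members in groups.values():
        n = len(members)
        centroid = tuple(sum(p[i] for p, _ in members) / n for i in range(3))
        merged.append((centroid, [d for _, d in members]))
    return merged


points = [(0.001, 0.001, 0.001), (0.003, 0.002, 0.001), (1.0, 1.0, 1.0)]
descriptors = ["desc_a", "desc_b", "desc_c"]   # stand-ins for real descriptor vectors
for centroid, descs in merge_by_voxel(points, descriptors):
    print(centroid, descs)
```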

Future Work

- In the future, we hope to implement this mapping in real time. This would require several modifications, the most important being that the processing speed must be fast enough to produce a decent frame rate. This would require us to find and use descriptors that are faster than SURF but still descriptive enough to generate enough good matches to solve PnP. In addition, careful analysis must be performed to determine the ideal number of iterations to use. We would like the fastest processing speed that still produces an accurate estimate of the query camera's pose.

- To improve the accuracy of our matching, we should account for differences between the illumination of our query image and that of our database images. One way to do this is to gather database images under a variety of illumination setups. For example, we could scan the room at different times of day (e.g. day and night). We would then tag each descriptor with the time of day at which its RGB image was taken. During runtime, we could use our knowledge of the current time to search only descriptors that were found at a similar time. This would reduce the variation in illumination and improve the matching.

- One of the main drawbacks to this implementation is the stationary 3D model. Because we are using a model that has been scanned prior to runtime and stored in memory, we make the assumption that the environment cannot change. One way around this is to construct an intelligent system that can update the 3D model as the user walks around. It could even mark objects that are movable or stationary and store them in memory. The new depth information needed to update the model would have to be gathered by either a second depth sensor on the user or a visual SLAM system on the query camera's stream.

Conclusion

In this project, we managed to obtain depth information for images from an RGB camera using a database of SURF features and a dense 3D reconstruction of a scene. We were able to improve the accuracy of this depth map by running more iterations of RANSAC, but this came at the cost of added run-time. Ideally, we want the depth mapping to be done in real time, but the current implementation requires 0.6-6 seconds just to estimate the pose of the query camera. We can improve the speed by implementing a smarter search algorithm for feature matches, but there is still the run-time associated with mapping each query image pixel to its depth once we have the camera pose. Additionally, our project does not account for dynamic scenes, because the room would have to be scanned again and the database rebuilt, which requires even more time and processing power. While our method might be accurate enough for real world applications, it still requires significant future work to make the system fast enough for real-time applications.

References

[1] The work described was an independent study project by John Doherty from Stanford's CS department.

[2] http://razorvision.tumblr.com/post/15039827747/how-kinect-and-kinect-fusion-kinfu-work

[3] http://research.microsoft.com/apps/video/default.aspx?id=152815

[4] http://en.wikipedia.org/wiki/Camera_resectioning

[5] https://github.com/PointCloudLibrary/pcl/tree/master/gpu/kinfu

[6] Mikolajczyk, Krystian, and Cordelia Schmid. "An affine invariant interest point detector." Computer Vision—ECCV 2002. Springer Berlin Heidelberg, 2002. 128-142.

[7] Lowe, David G. "Object recognition from local scale-invariant features." Computer vision, 1999. The proceedings of the seventh IEEE international conference on. Vol. 2. Ieee, 1999.

[8] The OpenGL framework that we adapted to project 2D points into 3D using an object model was provided by Kristen Lurie from Stanford's EE department.

[9] http://docs.opencv.org/trunk/doc/tutorials/calib3d/real_time_pose/real_time_pose.html

[10] http://en.wikipedia.org/wiki/RANSAC

[11] http://www.cs.ubc.ca/~lowe/keypoints/

Acknowledgements

We would like to thank Roland Angst for guiding and mentoring us on this project. In addition, we would like to thank Kristen Lurie for the OpenGL framework we adapted for our pipeline and John Doherty for the previous work he has done on this topic.

Code

Code and data for our project can be found here [1]. However, some external libraries that we used are not included. They are available upon request.