MeganckSajdakWu

Ryan Meganck, Adam Sajdak, Stephen Wu. Predicting Human Performance Using ISETBIO.

Introduction

Motivating Examples

Modern displays have benefited heavily from technological advances in the design and manufacture of transistors. Display designers have been able to pack an increasing number of transistors into a given display, yielding stunningly detailed images while also improving energy efficiency. Considered in isolation, the goal of these designers would be an unlimited number of pixels, but every pipeline has a performance bottleneck. Here, the pipeline consists not only of the display but also of the observer watching it. The observer is limited by non-idealities in the eye as well as by the image-processing portion of the brain.

At very low display resolutions, a human observer would be expected to notice an increase in resolution or pixel count; in this regime, the display resolution is the limiting factor in the pipeline. Conversely, at high resolutions there is a point beyond which the display is no longer the limiting factor and the observer can no longer perceive further increases in resolution. A more realistic goal for display designers is therefore to build a display that shifts the bottleneck to the observer.

The purpose of this project is to determine the critical point for display performance in the visual pipeline: the critical resolution, at each of several viewing distances, beyond which an observer can no longer discern two different images.

For practicality, three use cases were simulated throughout the project. Because their viewing distances differ, each application is expected to have a different critical resolution in pixels per inch (PPI).

- Tablet - 0.5m viewing distance

- Monitor - 1.0m viewing distance

- Television - 2.0m viewing distance

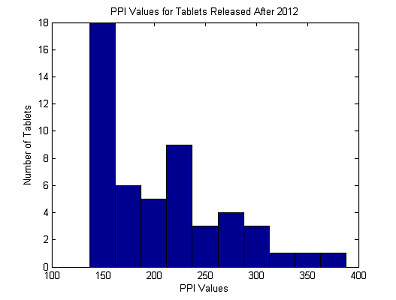

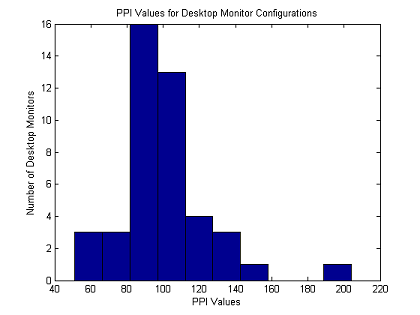

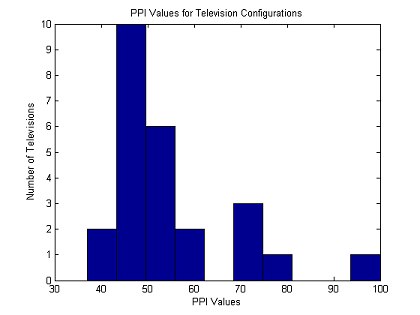

A preliminary market study [1] was conducted to find typical PPI values for different applications. Histograms of the results are shown below; these graphs will be referenced later when analyzing the experimental results. Note that tablet PPIs are centered around 200, desktop monitors around 100, and televisions around 50. This indicates that viewing distance plays a large role in the practical PPI of a commercial display.

Vernier Acuity

The metric used in this project for predicting an observer's ability to resolve an image is Vernier acuity, which describes an observer's ability to discern whether two line segments are aligned. The two images below are examples of the two scenes used throughout the project: as labeled, the first scene is an aligned line segment and the second is misaligned. Because the misalignment is held at one pixel, it becomes more difficult to discern as the display resolution increases. In our tests, the simulated observer was shown 1,000 images per simulation (500 aligned, 500 misaligned), and a computer algorithm attempted to classify each one as aligned or misaligned. A classification accuracy of 75% indicated that the human visual system was not the limiting factor in the visual pipeline for the given set of parameters.
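To make the stimulus concrete, the sketch below builds the two scenes as small grayscale pixel arrays. The helper `vernier_stimulus` is hypothetical (the project generated its scenes with ISETBIO in MATLAB). It draws a white line on a black background and shifts the lower half of the line by `offset` pixels, with the line width equal to the one-pixel displacement.

```python
def vernier_stimulus(rows, cols, line_width=1, offset=0):
    """Hypothetical helper: a rows x cols image (list of lists, 0 = black, 1 = white)
    containing a vertical line whose lower half is shifted right by `offset` pixels.
    offset=0 gives the aligned scene; offset=1 gives the one-pixel misaligned scene."""
    img = [[0.0] * cols for _ in range(rows)]
    start = cols // 2
    for r in range(rows):
        shift = offset if r >= rows // 2 else 0   # only the lower half moves
        for c in range(start + shift, start + shift + line_width):
            img[r][c] = 1.0
    return img

aligned = vernier_stimulus(8, 9)
misaligned = vernier_stimulus(8, 9, offset=1)
for row in misaligned:
    print("".join("#" if v else "." for v in row))
```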

Image Pipeline Noise Sources

In order to simulate the effect of the human visual system on the visual pipeline, several physical non-idealities and noise sources are added. These noise sources make it much more difficult for the computer algorithm (and the human brain) to accurately classify the images.

One source of noise is the blurring described by the point-spread function (PSF) of the lens of the human eye. The lens is not ideal, and its PSF dictates how light is spread as it passes through. Furthermore, chromatic aberration makes this spread depend on the wavelength of the light. The image below shows one of the scenes above after it has passed through the optics of the eye and been projected onto the retina. As shown, the line in the retinal image is much thicker than in the original scene because of the blurring, and it is much more difficult to tell that the image is misaligned.
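The thickening can be illustrated with a one-dimensional cross-section through the line. This sketch assumes a Gaussian line spread function with a hypothetical width of 2 pixels. ISETBIO's human optics model is wavelength dependent and not Gaussian, so the sketch ignores chromatic aberration entirely.

```python
import math

def gaussian_kernel(sigma, radius):
    """Normalized 1-D Gaussian; an assumed stand-in for the eye's line spread function."""
    weights = [math.exp(-0.5 * (x / sigma) ** 2) for x in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def blur_row(row, kernel):
    """Convolve one image row with a symmetric kernel, treating pixels outside the row as 0."""
    radius = len(kernel) // 2
    out = []
    for i in range(len(row)):
        acc = 0.0
        for k, w in enumerate(kernel):
            j = i + k - radius
            if 0 <= j < len(row):
                acc += w * row[j]
        out.append(acc)
    return out

def width_above(row, fraction=0.5):
    """Number of samples at or above `fraction` of the peak (a crude full width at half maximum)."""
    peak = max(row)
    return sum(1 for v in row if v >= fraction * peak)

line = [0.0] * 21
line[10] = 1.0                                    # one-pixel-wide line
blurred = blur_row(line, gaussian_kernel(sigma=2.0, radius=6))
print(width_above(line), width_above(blurred))    # the line spreads from 1 to 5 pixels
```

The total light is preserved (the kernel sums to 1); only its distribution across pixels changes, which is what hides a one-pixel offset.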

Another source of noise in the image pipeline is fixational eye movement: the involuntary movement of the eyes while trying to fixate on a single point. Although these movements are typically very small, they affect how photons are integrated by the photoreceptors. In the context of classification, the eye movements add noise to the photon data and make it more difficult to classify a scene accurately.
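A simple way to see the effect is to jitter the retinal image between integration frames and sum the frames. The random-walk model below is a hypothetical stand-in chosen for illustration; ISETBIO has its own eye-movement model, and the step size and frame count here are assumptions.

```python
import random

def fixational_path(n_frames, step_px=1, seed=None):
    """Hypothetical random-walk model of fixational eye movement.
    Returns the cumulative (dx, dy) retinal offset, in pixels, for each frame."""
    rng = random.Random(seed)
    x = y = 0
    path = []
    for _ in range(n_frames):
        x += rng.choice((-step_px, 0, step_px))
        y += rng.choice((-step_px, 0, step_px))
        path.append((x, y))
    return path

def shift_row(row, dx):
    """Shift a row right by dx pixels (left if negative), filling vacated pixels with 0."""
    n = len(row)
    return [row[i - dx] if 0 <= i - dx < n else 0.0 for i in range(n)]

# Integrate a one-pixel line over five frames; the horizontal jitter smears it.
line = [0.0] * 11
line[5] = 1.0
integrated = [0.0] * 11
for dx, _dy in fixational_path(5, seed=0):
    integrated = [a + b for a, b in zip(integrated, shift_row(line, dx))]
print(integrated)
```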

The last source of noise is photon noise, which arises from the discrete nature of photons. Even in a uniformly lit scene, a given photoreceptor will absorb a slightly different number of photons than its neighbor during a given integration time. Photon counts follow a Poisson distribution, so the variance of each count equals its mean. The result is a random fluctuation superimposed on the ideal photon count, producing the measured photon data.
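A minimal sketch of photon noise, assuming each cone's expected absorption count is already known (the value of 50 per cone is hypothetical): draw Poisson samples and check that the variance matches the mean.

```python
import numpy as np

def add_photon_noise(mean_absorptions, rng):
    """Draw Poisson-distributed absorption counts; each cone's variance equals its mean."""
    return rng.poisson(mean_absorptions)

rng = np.random.default_rng(0)
mean = np.full(10_000, 50.0)        # hypothetical: 50 expected absorptions per cone
counts = add_photon_noise(mean, rng)
print(counts.mean(), counts.var())  # both close to 50
```

Because the noise scales with the square root of the count, brighter stimuli and longer integration times give relatively cleaner signals.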

Methods

The general procedure used for both methods in this experiment is:

- Generate two scenes (one aligned, one misaligned).

- Obtain cone absorption data for these scenes.

- Perform additional processing on the data if necessary.

- Train the system using the cone absorption data.

- Obtain a new set of cone absorptions for the scenes.

- Use the system to predict the classification of the test cone absorptions.

- Compute prediction accuracy and other metrics.
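The procedure above can be sketched end to end with synthetic data. Everything here is hypothetical: the mean absorption vectors stand in for ISETBIO output, Poisson draws stand in for the full noise model, and a nearest-class-mean rule stands in for the 1-NN and SVM classifiers described below. The optional processing step is omitted.

```python
import numpy as np

rng = np.random.default_rng(1)
N_CONES = 50

# Hypothetical mean absorptions for the two scenes (stand-ins for ISETBIO output):
# the misaligned scene differs from the aligned one at a single cone.
MEAN_ALIGNED = np.full(N_CONES, 20.0)
MEAN_MISALIGNED = MEAN_ALIGNED.copy()
MEAN_MISALIGNED[25] += 10.0

def simulate(n_per_class):
    """Poisson-noisy absorption vectors for both scenes, labeled 0 (aligned) / 1 (misaligned)."""
    X = np.vstack([rng.poisson(MEAN_ALIGNED, (n_per_class, N_CONES)),
                   rng.poisson(MEAN_MISALIGNED, (n_per_class, N_CONES))]).astype(float)
    y = np.repeat([0, 1], n_per_class)
    return X, y

def train(X, y):
    """Placeholder classifier (nearest class mean), standing in for 1-NN or SVM."""
    return np.stack([X[y == label].mean(axis=0) for label in (0, 1)])

def predict(class_means, X):
    """Assign each sample to the class whose mean absorption vector is closest."""
    d2 = ((X[:, None, :] - class_means[None, :, :]) ** 2).sum(axis=2)
    return d2.argmin(axis=1)

X_train, y_train = simulate(500)            # generate scenes, obtain absorptions
model = train(X_train, y_train)             # train
X_test, y_test = simulate(500)              # new absorptions for the same scenes
accuracy = float((predict(model, X_test) == y_test).mean())   # predict and score
print(accuracy)
```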

Data

Training and test scenes were generated in MATLAB and ISETBIO with the aid of a tutorial script provided by course staff. A human eye and display are modeled, and the retinal image is computed by applying functions which integrate the incident photons and approximate the noise generated by aberrations in the path from image to retina. This also includes noise induced by fixational eye movement.

The feature vector for the experiment is the vector of cone absorptions. Its length varies with the field of view (FOV), the viewing distance, and the screen resolution, since not all cones in the eye receive light from the scene.

1-Nearest Neighbor

The k-nearest neighbors algorithm classifies a test point by finding the k training examples closest to it and assigning the label held by the plurality of them. In this case, k = 1. The distance between two samples was measured as the Euclidean distance (2-norm) between their vectorized retinal images, with each pixel forming its own dimension.
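A minimal NumPy sketch of 1-NN classification as described, assuming samples arrive as arrays of any shape (for example rows × columns × frames) that are flattened into vectors. The function name is hypothetical; the project's own implementation was in MATLAB.

```python
import numpy as np

def one_nn_predict(X_train, y_train, X_test):
    """Label each test sample with the label of its closest training sample
    (Euclidean distance between flattened absorption vectors)."""
    X_train = np.asarray(X_train, dtype=float).reshape(len(X_train), -1)
    X_test = np.asarray(X_test, dtype=float).reshape(len(X_test), -1)
    # Squared distances via ||a||^2 - 2 a.b + ||b||^2; skipping the square root
    # does not change which neighbor is closest.
    d2 = ((X_test ** 2).sum(axis=1)[:, None]
          - 2.0 * X_test @ X_train.T
          + (X_train ** 2).sum(axis=1)[None, :])
    return np.asarray(y_train)[d2.argmin(axis=1)]

X_tr = np.array([[0.0, 0.0], [0.0, 1.0], [5.0, 5.0]])
y_tr = np.array([0, 0, 1])
X_te = np.array([[0.2, 0.1], [4.0, 6.0]])
print(one_nn_predict(X_tr, y_tr, X_te))   # [0 1]
```

Every prediction compares against the whole training set, so memory and time grow with the number of training samples times their length, which is the cost discussed next.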

This method benefits from being a relatively simple machine learning algorithm that does not require extensive parameter tuning. However, because each feature vector is a large sequence of images, it suffers from the curse of dimensionality. Each vectorized sample in the training database contains approximately 300 values, and with 1,000 training images the database can become cumbersome to store and compute with. Furthermore, high dimensionality can degrade the Euclidean distance measure, because the influence of any one dimension shrinks as the number of dimensions grows. In this case, performance was not adversely affected, but for larger images, principal component analysis or another model-order-reduction technique would be necessary to maintain performance.
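A minimal sketch of the principal component analysis mentioned above, computed with a singular value decomposition of the centered training data. The random 1,000 × 300 matrix and the choice of 20 components are placeholders, not project data. The reduced vectors could then be passed to 1-NN in place of the raw absorptions.

```python
import numpy as np

def pca_fit(X, n_components):
    """Return the training mean and the top principal directions of X (rows = samples)."""
    mean = X.mean(axis=0)
    # Rows of Vt are principal directions, sorted by decreasing singular value.
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_components]

def pca_transform(X, mean, components):
    """Project samples onto the retained principal directions."""
    return (X - mean) @ components.T

rng = np.random.default_rng(0)
X = rng.normal(size=(1000, 300))          # stand-in for 1,000 samples of ~300 values
mean, comps = pca_fit(X, n_components=20)
Z = pca_transform(X, mean, comps)         # 1,000 x 20 features for the classifier
print(Z.shape)
```

Test samples must be projected with the mean and components learned from the training set, not refit on the test data.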

Support Vector Machine

The second approach uses a support vector machine (SVM) to classify test images. Given a training dataset, the SVM computes the separating hyperplane with the largest margin between the two classes. Test inputs are then classified according to which side of the hyperplane they fall on.

The particular flavor of SVM chosen for this investigation is C-support vector classification (C-SVC), with the kernel trick applied through a radial basis function (RBF) kernel. The kernel implicitly maps the cone absorptions into a higher-dimensional feature space, allowing nonlinear decision boundaries in the original space. This method required tuning two parameters:

- C-SVC cost parameter (C)

  - Sets the penalty for training examples that are misclassified or fall inside the margin. Smaller values tolerate more violations in exchange for a wider margin, which lets the classifier ignore outliers if they exist.

- RBF parameter (γ)

  - Controls the width of the kernel function, and therefore how far the influence of each training example reaches.
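To show where the two parameters act, the sketch below evaluates a trained kernel SVM's decision function by hand. In a trained C-SVC, each support vector s_i carries a coefficient α_i·y_i with 0 ≤ α_i ≤ C, so C acts only during training, while γ appears directly in the kernel. The support vectors, coefficients and bias below are hypothetical values, not output from LIBSVM.

```python
import math

def rbf_kernel(x, z, gamma):
    """K(x, z) = exp(-gamma * ||x - z||^2); larger gamma gives a narrower kernel."""
    return math.exp(-gamma * sum((a - b) ** 2 for a, b in zip(x, z)))

def svc_decision(x, support_vectors, dual_coefs, bias, gamma):
    """Kernel SVM decision value f(x) = sum_i (alpha_i * y_i) * K(s_i, x) + b.
    The predicted class is the sign of f(x)."""
    return sum(c * rbf_kernel(s, x, gamma)
               for s, c in zip(support_vectors, dual_coefs)) + bias

# Hypothetical trained model: one support vector per class.
svs = [[0.0, 0.0], [2.0, 2.0]]
coefs = [-1.0, 1.0]            # alpha_i * y_i
print(svc_decision([0.1, 0.0], svs, coefs, 0.0, gamma=0.5))   # negative: class -1
print(svc_decision([2.0, 1.9], svs, coefs, 0.0, gamma=0.5))   # positive: class +1
```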

Since these parameters are relatively independent, an exhaustive search across parameter pairs was deemed unnecessary. Instead, each parameter was varied on a logarithmic scale with the other held fixed, and the values yielding the highest prediction accuracy were chosen.
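A minimal sketch of this one-parameter-at-a-time search. The scoring function is a hypothetical stand-in for "train on the training set and report test accuracy", and the powers-of-two grid bounds are assumptions. The toy score is separable in log C and log γ, which is the case where skipping the full grid loses nothing.

```python
import math

def log_grid(lo_exp, hi_exp, base=2):
    """Logarithmically spaced values base**lo_exp, ..., base**hi_exp."""
    return [base ** e for e in range(lo_exp, hi_exp + 1)]

def one_at_a_time_sweep(score, c_grid, gamma_grid, c_default, gamma_default):
    """Vary C with gamma at its default, then gamma with C at its default, keeping the
    best value of each. Costs len(c_grid) + len(gamma_grid) evaluations instead of
    len(c_grid) * len(gamma_grid) for a full grid search."""
    best_c = max(c_grid, key=lambda c: score(c, gamma_default))
    best_gamma = max(gamma_grid, key=lambda g: score(c_default, g))
    return best_c, best_gamma

def toy_score(c, gamma):
    """Hypothetical accuracy surface peaking at C = 2**3, gamma = 2**-5."""
    return -(math.log2(c) - 3) ** 2 - (math.log2(gamma) + 5) ** 2

best = one_at_a_time_sweep(toy_score, log_grid(-5, 15), log_grid(-15, 3), 1, 2 ** -3)
print(best)
```

If C and γ interact strongly, this shortcut can miss the best pair, and a full grid (or a second pass around the chosen values) would be safer.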

Experiments

Experiment 1: Determine the Critical PPI

Scope

The purpose of the first experiment was to determine the PPI above which a human observer can no longer discern misalignment between two line segments. A classification accuracy of 75% was used as the threshold for accurately evaluating an image. Classification accuracy was plotted against PPI for three viewing distances: 0.5 m, 1.0 m, and 2.0 m. To account for the stochastic nature of the simulation, a simulation with 500 samples was run 20 times at each PPI, and the classification accuracy was averaged over these 20 runs.
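A minimal sketch of turning averaged accuracies into a threshold PPI by linearly interpolating where the curve crosses 75%. The per-run accuracies are invented for illustration, and interpolation between sampled PPIs is an assumption; the text reports thresholds read from the plotted curves.

```python
from statistics import mean

def threshold_ppi(ppis, accuracies, criterion=0.75):
    """Linearly interpolate the PPI at which mean accuracy first falls below `criterion`.
    Assumes `ppis` is sorted in increasing order; returns None if no crossing is found."""
    points = list(zip(ppis, accuracies))
    for (p0, a0), (p1, a1) in zip(points, points[1:]):
        if a0 >= criterion > a1:
            return p0 + (a0 - criterion) * (p1 - p0) / (a0 - a1)
    return None

# Hypothetical per-run accuracies (the experiment used 20 runs per PPI; 3 shown here).
runs = {100: [0.96, 0.94, 0.95], 150: [0.86, 0.84, 0.85],
        200: [0.71, 0.69, 0.70], 250: [0.58, 0.62, 0.60]}
ppis = sorted(runs)
mean_acc = [mean(runs[p]) for p in ppis]
critical = threshold_ppi(ppis, mean_acc)
print(round(critical, 1))
```

Taking the first crossing ignores later rebounds in a noisy curve, such as the anomaly discussed below.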

1-Nearest Neighbor Results

The results from the nearest neighbor method were mostly as expected. At each viewing distance, accuracy decreased as PPI increased, apart from one anomaly at high PPI in the 0.5 m case. Also, closer viewers were able to detect a difference at higher PPI than viewers farther away. This method indicates that at 2 m, increasing the PPI above 150 makes no difference to the viewer. At 1 m, this limit was about 300 PPI, and at 0.5 m it rose to 600 PPI. This suggests an inverse relationship between viewing distance and maximum observable PPI.

Support Vector Machine Results

The results from the SVM show the same general trends as those from nearest neighbor: accuracy declines with increasing PPI, and maximum observable PPI decreases with viewing distance. The SVM curves were noticeably noisier than those from nearest neighbor, suggesting that this method may be more susceptible to noise and that the curves might have been smoother with more samples per experiment. Remarkably, SVM suggests the same maximum observable PPI at all three viewing distances as nearest neighbor: 150 PPI at 2 m, 300 PPI at 1 m, and 600 PPI at 0.5 m.

General Conclusions

The table below summarizes the results.

| Viewing distance | 1-NN threshold resolution | SVM threshold resolution |
|---|---|---|
| 0.5 m | 600 PPI | 600 PPI |
| 1.0 m | 300 PPI | 300 PPI |
| 2.0 m | 150 PPI | 150 PPI |

As discussed in the previous sections, applications with smaller viewing distances require higher PPI to shift the bottleneck in the image pipeline away from the screen resolution. Both machine learning algorithms agree that a tablet might need 600 PPI, while a television might require only 150 PPI. Based on these data, maximum observable PPI is approximately inversely proportional to viewing distance; specifically, $\mathrm{PPI}_{\max} \approx 300 / d$, where $d$ is the viewing distance in meters (600 × 0.5 = 300 × 1.0 = 150 × 2.0 = 300).
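The inverse relationship can be checked directly from the summary table above: the product of threshold PPI and viewing distance is the same at all three distances. The `predicted_threshold` helper is hypothetical, and applying it to distances between or beyond those simulated is an untested extrapolation.

```python
# Threshold PPI from the summary table (1-NN and SVM agree), keyed by distance in meters.
thresholds = {0.5: 600, 1.0: 300, 2.0: 150}
products = {d: ppi * d for d, ppi in thresholds.items()}   # PPI x meters
k = sum(products.values()) / len(products)

def predicted_threshold(distance_m, k=k):
    """Hypothetical estimate from PPI_max ~ k / d; not validated between the simulated distances."""
    return k / distance_m

print(products)                     # each product is 300
print(predicted_threshold(0.75))    # 400.0
```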

Both the nearest neighbor algorithm and SVM gave similar results, as shown in the graph below. This is somewhat surprising given the significant differences between the methods. It is also encouraging: if two quite different classifiers reach approximately the same threshold, the threshold is likely set by the simulated visual system rather than by the classifier, and the classifiers may approximate what the human brain would do.

The deviations from the general trends are interesting. Both methods show a sharp drop at 625 PPI and a recovery at 650 PPI. This deviation reappeared when the test was repeated, which implies that the anomaly is not merely a product of noise. Because both machine learning algorithms show this feature, it more likely stems from the model of the human visual system than from the classification.

To examine the accuracy of our results, the graph below shows the standard deviation of the accuracy from each method when the viewing distance was 2m.

Note that the standard deviation of accuracy is relatively small when accuracy is above 75% and increases as accuracy dips below 75%. This makes sense: if the viewer can tell the difference, responses are more likely to be consistent, whereas if the viewer cannot, guessing will sometimes succeed and sometimes fail. The maximum standard deviation observed in the relevant range is about 22%, so the standard error of an average over 20 trials is about 22%/√20 ≈ 5%; our results are therefore accurate to within about 5%.
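The error estimate follows from the standard error of a mean, σ/√n. The sketch below reproduces it and inverts it to ask how many runs a target precision would need. It assumes the runs are independent, which holds if each run uses fresh noise samples.

```python
import math

def standard_error(sd, n_runs):
    """Standard error of the mean of n_runs independent runs with standard deviation sd."""
    return sd / math.sqrt(n_runs)

def runs_needed(sd, target_se):
    """Smallest number of runs whose averaged accuracy has standard error <= target_se."""
    return math.ceil((sd / target_se) ** 2)

print(round(standard_error(0.22, 20), 3))   # 0.049
print(runs_needed(0.22, 0.05))              # 20
```

Halving the error would require roughly four times as many runs.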

Overall, the trends were relatively consistent, and the sought-after relationship between maximum observable PPI and viewing distance was identified.

Experiment 2: Effect of Contrast on Classification Accuracy

Scope

For Experiment 2, the background color was varied from black to white behind a white line. The PPI and viewing distance were held constant at values that approximate the human threshold for Vernier acuity: 275 PPI at a viewing distance of 0.5 m and 100 PPI at 2.0 m. Because Experiment 1 showed that the 1-nearest neighbor and SVM algorithms yielded similar critical PPIs, only SVM was simulated here, on the assumption that both algorithms would give comparable results.

Results

The trends observed in the plot below are consistent with the intuition gained in the previous experiment. At low background values (black), the high contrast gives the observer the highest Vernier acuity. As the background approaches the color of the line, however, it becomes more difficult to classify the image accurately. One interesting feature of the contrast curve is its nonlinearity: with dark backgrounds, the observer does not suffer a loss of acuity until the background value reaches approximately 0.3. Previous research has shown that the eye responds nonlinearly to intensity levels, which led to the gamma curve used in displays. In the context of this experiment, the observer cannot distinguish background values between 0 and 0.3, so acuity remains the same over that range; at lighter background values, the difference becomes noticeable and acuity declines. Furthermore, the effect of contrast was more pronounced at the larger viewing distance, where accuracy began to decrease at darker background values. At 0.5 m, accuracy fell to 75% at a background value of 0.55, whereas at 2.0 m it reached 75% at a background value of only 0.4.
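One way to relate background value to contrast is the Michelson contrast, (Lmax − Lmin)/(Lmax + Lmin). The text does not state whether the 0 to 1 background values are linear luminance or gamma-encoded display values, so this sketch computes both interpretations. The power-law gamma of 2.2 is a hypothetical stand-in for the display model's calibrated curves. Under the gamma-encoded interpretation, a background of 0.3 still leaves the line at high contrast, which is one possible reading of the plateau described above.

```python
def michelson_contrast(line_level, background_level):
    """(Lmax - Lmin) / (Lmax + Lmin) for a line on a uniform background."""
    hi = max(line_level, background_level)
    lo = min(line_level, background_level)
    return 0.0 if hi + lo == 0 else (hi - lo) / (hi + lo)

def to_linear(value, gamma=2.2):
    """Hypothetical power-law display gamma mapping a 0-1 code value to relative luminance."""
    return value ** gamma

for bg in (0.0, 0.3, 0.55, 1.0):
    as_linear = michelson_contrast(1.0, bg)
    as_encoded = michelson_contrast(1.0, to_linear(bg))
    print(f"background {bg}: contrast {as_linear:.3f} (linear), {as_encoded:.3f} (gamma-encoded)")
```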

Experiment 3: Effect of Pupil Diameter on Classification Accuracy

Scope

When a person is looking at a screen, their pupil diameter can change based on the level of ambient light in the room. This could change the diffraction properties of the lens as well as the chromatic aberration, and therefore affect the Vernier acuity. At two chosen sets of distance and PPI, pupil diameter was varied among all the available values in ISETBIO: 3 mm, 4.5 mm, 6 mm, and 7 mm. Only the Support Vector Machine algorithm was used since it is expected that the results from nearest neighbor would be comparable.
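For an aberration-free eye, the diffraction blur shrinks as the pupil widens. The sketch below computes the angular radius of the first Airy minimum, 1.22 λ/D, for the four ISETBIO pupil sizes, assuming a single wavelength of 550 nm. Real eyes are increasingly limited by aberrations (including chromatic aberration) at large pupils, so diffraction and aberration push in opposite directions.

```python
import math

ARCSEC_PER_RAD = 180 / math.pi * 3600

def airy_radius_arcsec(pupil_mm, wavelength_nm=550):
    """Angular radius of the first Airy minimum, 1.22 * lambda / D, for a
    diffraction-limited (aberration-free) pupil, in arcseconds."""
    return 1.22 * (wavelength_nm * 1e-9) / (pupil_mm * 1e-3) * ARCSEC_PER_RAD

for d in (3, 4.5, 6, 7):
    print(f"{d} mm pupil: {airy_radius_arcsec(d):.1f} arcsec")
```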

Results

The results are plotted below.

The effect of pupil diameter on Vernier acuity appears to be small. Given that each averaged data point is uncertain by roughly 5%, no statistically significant relationship is observable here. We expected accuracy to decrease with increasing pupil diameter, since simulations showed a wider line spread function with the Ijspeert model at larger pupil diameters because of chromatic aberration. The plot above does seem to show a slight downward trend. This trend might have been more pronounced had smaller pupil diameters been available or had our estimates been more precise. With these results, we cannot conclude that there is a relationship between pupil diameter and Vernier acuity.

Published Results

Published data suggest that humans can detect Vernier misalignments as small as 2-5 arcseconds. The list below is in no way comprehensive, but even a cursory examination shows that most studies agree on this threshold.

- Bradley, Skottun [2]: 10 arcseconds

- Foley-Fisher [3]: 5 arcseconds

- Geisler [4]: 2-4 arcseconds

- Sullivan, Oatley, Sutherland [5]: 4 arcseconds

Given a fixed viewing distance, an angular offset can be converted to a screen resolution. The following table lists the PPI at which one pixel subtends the given number of arcseconds.

| Viewing distance (values in PPI) | 1 arcsec | 2 arcsec | 3 arcsec | 4 arcsec | 5 arcsec | 6 arcsec | 7 arcsec | 8 arcsec | 9 arcsec | 10 arcsec | 11 arcsec | 12 arcsec | 13 arcsec | 14 arcsec | 15 arcsec | 16 arcsec |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.5 m | 10478 | 5239 | 3493 | 2620 | 2096 | 1746 | 1497 | 1310 | 1164 | 1048 | 953 | 873 | 806 | 748 | 699 | 655 |
| 1.0 m | 5239 | 2620 | 1746 | 1310 | 1048 | 873 | 748 | 655 | 582 | 524 | 476 | 437 | 403 | 374 | 349 | 327 |
| 2.0 m | 2620 | 1310 | 873 | 655 | 524 | 437 | 374 | 327 | 291 | 262 | 238 | 218 | 202 | 187 | 175 | 164 |

Thus, the PPI corresponding to 5-arcsecond acuity is 2096 at 0.5 m, 1048 at 1 m, and 524 at 2 m.
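The table values follow from simple geometry: at distance d, one pixel subtends angle θ when the pixel pitch is d·tan θ, and PPI is the reciprocal of the pitch in inches. This sketch reproduces the table entries quoted above. Because PPI scales as 1/θ for such small angles, it also recovers the acuity implied by a given threshold (600 PPI at 0.5 m corresponds to roughly 17.5 arcseconds).

```python
import math

METERS_PER_INCH = 0.0254

def ppi_for_offset(arcsec, distance_m):
    """PPI at which one pixel subtends `arcsec` arcseconds at `distance_m` meters."""
    theta = math.radians(arcsec / 3600)
    pixel_pitch_in = (distance_m / METERS_PER_INCH) * math.tan(theta)
    return 1 / pixel_pitch_in

for d in (0.5, 1.0, 2.0):
    print(d, round(ppi_for_offset(5, d)))      # 2096, 1048, 524

# Offset implied by a threshold of 600 PPI at 0.5 m.
print(round(ppi_for_offset(1, 0.5) / 600, 2))
```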

Note that the 1-NN and SVM experiments found threshold resolutions (600, 300, and 150 PPI) lower than the range implied by the published experiments; these correspond to an acuity of about 17 arcseconds. However, the 1-NN and SVM experiments used a line width exactly equal to the Vernier displacement, whereas the published studies used line widths on the order of dozens of arcseconds, much greater than the displacement. The Vernier acuity task is likely much easier with wider lines. Furthermore, adding the display to the imaging pipeline may introduce additional noise, since previous experiments used printed lines rather than images on a screen. This additional noise would make the Vernier acuity task more difficult, which in turn would lower the threshold resolution. Given that the SVM and 1-NN results are within the same order of magnitude as the published data, it is reasonable to say that these classifiers are a decent approximation of the human visual processor.

Conclusions

The two machine learning algorithms performed very similarly on the Vernier acuity task, and their results are broadly consistent with previously published experiments. Importantly, the threshold resolution for Vernier acuity depends strongly on the distance between the observer and the screen; in fact, it is nearly inversely proportional to that distance.

- Results match current market trends, in that monitors have about half the PPI of tablets and TVs about half the PPI of monitors. However, in all cases current products fall below the threshold PPI and therefore have not reached their full potential.

- Simulations agree with published results.

- Support Vector Machine and Nearest Neighbor have similar performance in the transition zone.

- The effect of contrast on Vernier acuity appears to be nonlinear, which is consistent with the eye's known nonlinear response to intensity.

- Pupil diameter seems to have only a marginal effect on performance, which suggests that acuity does not change substantially with different levels of ambient light.

Further Investigation

The following are potential follow-up investigations that may be useful for further understanding and exploring the human limit of Vernier acuity.

- Application of principal component analysis to feature vectors

  - PCA may be used to discard unimportant information (e.g., noise) from the signal. This approach was implemented but not used in the experiment.

- Neural networks and other algorithms

  - Only two machine learning algorithms were tested in this experiment. A neural network (e.g., one trained with backpropagation) may yield interesting and useful results.

- Modeling myopia and hyperopia

  - A large portion of the population has one of these two conditions, and even with corrective lenses it is nearly impossible to achieve perfect vision. Even a small change in lens power may dramatically reduce the threshold resolution, which could negate the need to improve current display technology.

- Comparing displays

  - Only one display was used in this experiment. Different displays may feature different blurring or color characteristics.

- Line width

  - Published studies on Vernier acuity appear to have used lines much wider than the Vernier displacement. It would be interesting to investigate how line width affects the ability of humans to perform the acuity task.

References

[1] Wikipedia. Comparison of tablet computers. 2014.

[2] Bradley, Arthur and Skottun, Brent. Effects of Contrast and Spatial Frequency on Vernier Acuity. Journal of the Optical Society of America A, 1987.

[3] Foley-Fisher, J.A. Contrast, Edge-gradient, and Target Line Width as Factors in Vernier Acuity. Optica Acta: International Journal of Optics, 2010.

[4] Geisler, Wilson. Physical limits of acuity and hyperacuity. Journal of the Optical Society of America A, 1984.

[5] Sullivan, G.D., Oatley, K., and Sutherland, N.S. Vernier acuity as affected by target length and separation. Perception & Psychophysics, 1972.

Appendix I: Source Code

Source code is available on GitHub at https://github.com/shwu/vernier-modeling.git

The following MATLAB libraries are required to run the code:

- ISET

- ISETBIO

- LIBSVM

The presentation may be downloaded here.

Appendix II: Work Breakdown

Ryan Meganck

- 1-NN implementation

- PCA implementation

Adam Sajdak

- Testing framework

- Contrast resolution and pupil diameter experiment design

Stephen Wu

- SVM implementation and parameter tuning

- Research published results

Shared Responsibilities

- Wiki page

- ISET / ISETBIO investigation

- Training / testing

- Data analysis / interpretation