Nick, Noah, Megan

Introduction

The goal of our project was to gain an understanding of the modern state of demosaicing techniques and how to reduce demosaicing artifacts. We explore existing algorithms to discover their strengths and weaknesses, and implement some of them to improve our understanding. We also look into the future possibilities for the ISP by implementing the demosaicing portion of the DeepISP paper. Our explored methods can broadly be described in two sets of classes: classical vs machine learning methods, and methods that perform direct image interpolation vs cleaning artifacts from bilinear interpolation. In this work, we explore one classical, direct interpolation method and two machine learning methods for cleaning interpolation artifacts.

Background

Digital cameras use Color Filter Arrays (CFAs) such as a Bayer Filter Mosaic to capture colored image data of the light entering the aperture. The image sensor is equally sensitive to any color of light, so a CFA blocks out all but one of the RGB colors so that we can capture a particular band of wavelengths at each pixel. This means each pixel only contains an R, G, or B value. We must fill in the blanks so that every pixel contains all three color values, which is done through some form of interpolation. Figure 1 is an example of a Bayer CFA.

The absolute simplest approach is Nearest Neighbor, which just copies the value of an adjacent pixel of the same color channel. This is one of the less desirable approaches as it doesn’t take into account how colors should change across pixels. The most basic usable approach is Bilinear Interpolation. This method averages the adjacent pixel values of the same color channel.

The following visualizations[1] summarize how Nearest Neighbor Interpolation works. Old pixels obtained by the color sensor are used to obtain the new pixels.

Similarly, the images below summarize how we obtain new pixels in bilinear interpolation. The first three images correspond to the red and blue channels, while the final image corresponds to the green channel.
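As a minimal sketch of bilinear interpolation on a Bayer mosaic, the plain-Python code below averages the same-color samples found in the 3x3 window around each pixel. The RGGB layout and the helper names (`cfa_channel`, `mosaic`, `bilinear_demosaic`) are assumptions made for illustration; the report does not state the layout of its CFA, and a real implementation would more likely use vectorized array operations.

```python
def cfa_channel(row, col):
    """Return 0 (R), 1 (G) or 2 (B) for an assumed RGGB Bayer layout."""
    if row % 2 == 0 and col % 2 == 0:
        return 0
    if row % 2 == 1 and col % 2 == 1:
        return 2
    return 1


def mosaic(rgb):
    """Keep only the channel the CFA lets through at each pixel."""
    return [[rgb[r][c][cfa_channel(r, c)] for c in range(len(rgb[0]))]
            for r in range(len(rgb))]


def bilinear_demosaic(raw):
    """Fill each missing channel with the mean of same-color samples in the 3x3 window."""
    h, w = len(raw), len(raw[0])
    out = [[[0.0, 0.0, 0.0] for _ in range(w)] for _ in range(h)]
    for r in range(h):
        for c in range(w):
            for ch in range(3):
                if cfa_channel(r, c) == ch:
                    out[r][c][ch] = float(raw[r][c])  # measured value, keep as-is
                    continue
                samples = [raw[rr][cc]
                           for rr in range(max(r - 1, 0), min(r + 2, h))
                           for cc in range(max(c - 1, 0), min(c + 2, w))
                           if cfa_channel(rr, cc) == ch]
                out[r][c][ch] = sum(samples) / len(samples)
    return out
```

On a Bayer grid this window average reduces to the usual cases: four cardinal neighbors for green, two neighbors for red or blue at a green site, and four diagonal neighbors for red at a blue site (and vice versa). At the image border, only the samples that exist are averaged.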

Artifacts are any mistakes that occur as a result of interpolation. The two most common artifacts for a Bayer mosaic are false color artifacts and zippering. False color artifacts appear when interpolation is performed across edges instead of along them. This results in colors that were never in the original image. Zippering also occurs along edges, and results in blurry edges at high spatial frequencies. Because edges cause the most artifacts, typically the best algorithms are the ones that are able to correctly interpolate along instead of across edges. Figures 2 and 3 show examples of both false color and zippering artifacts[2].

The nearest neighbor and bilinear interpolation algorithms both have a tendency to produce false color artifacts and zippering along edges within images, but there are various more advanced algorithms that are able to demosaic an image without introducing too many of these artifacts. Figure 4 shows an example of one such advanced algorithm, Adaptive Homogeneity-Directed Demosaicing (AHD), and compares it to Nearest Neighbor and Bilinear Interpolation [3].

AHD does a much better job of demosaicing the image without creating color artifacts. Below, we describe a few algorithms that can be used for demosaicing:

Variable Number of Gradients (VNG): Detects edges along some number of possible angles to figure out the best direction to interpolate from.

Patterned Pixel Grouping (PPG): An improvement of VNG that additionally takes into account smooth hue transitions to reduce color artifacts.

Adaptive Homogeneity Directed (AHD): Even more advanced algorithm that generates a homogeneity map to pick a direction of interpolation that minimizes color artifacts. Considered by some to be the industry standard.

We consider these to be the "classical" approaches to demosaicing because they all follow the same idea of using heuristics to make good decisions on how to interpolate to minimize artifacts. There also exists another class of techniques to reduce the demosaicing artifacts after interpolation. These methods use machine learning to learn good transformations rather than applying good heuristics. Some examples include:

K-Nearest Neighbors: Create image patches to form a dataset, then add detail to a given patch by choosing the K closest patches (determined by L2 norm) and adding their detail into the patch.

Linear Regression: We model a missing pixel value within a color channel as being a linear function of the surrounding pixels, then learn the model which minimizes some error metric using an optimization algorithm.

Neural Networks: We can create a non-linear model for our transformation by composing multiple simpler non-linear models in sequence. We can then learn a good model using a loss metric and numerical optimization.

Though machine learning can learn complex functions for input-output mapping, we should note that one weakness of machine learning based methods is that they rely on a good dataset. One result of this for our context is that it is unlikely that a given machine learning algorithm can do better at demosaicing than our ground truth images, so the generation of good ground truth images is critical for this pipeline.

Dataset

For our project, we used a demosaicing dataset provided by Microsoft Research Cambridge [4]. This dataset was designed for machine learning based demosaicing, and includes train, validation, and test sets of low-resolution images to learn a model and verify results. The images were generated using dcraw, which simulates the camera ISP pipeline for some given camera and can convert RAW images to PNG images by pushing the image through this pipeline. It includes images with and without noise. The results are verified using provided ground truth images, which were generated by downsampling the output of the camera in such a way as to reduce aliasing and other unwanted effects. To reintroduce noise into the dataset, they use the commonly accepted noise models that combine a Poisson and Gaussian distribution. The researchers fit the noise model to the original images, and then applied noise sampled from this model to the downsampled versions. The camera model that was used to generate the images we demosaic on is for the Panasonic Lumix DMC-LX3 CCD camera with a Bayer Pattern CFA. Examples of input and ground truth images are shown below. Input images are provided as PNGs which are then converted to raw pixel values within our code.

Methods

For our classical algorithms, we found that common approaches tended to follow certain classes of assumptions. Firstly, they would make the assumption that hue changes in a natural image are subtle, and that we can smooth the hues in the output to try and recreate this expectation. Secondly, they would use luminance gradients to detect edges and then use that information to interpolate along edges instead of across them. Both of these approaches make assumptions about the picture being "natural," meaning that they're pictures we would typically expect to see in consumer photography. Artificially generated images may very well violate all our assumptions, but the importance of these assumptions is that they are very accurate for consumer photography and so can be used heuristically to improve our results.

Patterned Pixel Grouping (PPG)

The first algorithm we implemented is Patterned Pixel Grouping (PPG). The details of how this algorithm works can be found at [5]. The overall idea can be broken into three steps, each of which is a separate pass over the image. In the first pass, we fill in the green values at red and blue pixels by calculating the luminance gradients in the four cardinal directions and giving more emphasis to the pixel in the direction of the smallest change. In the second pass, we fill in the red and blue values at green pixels using what the author defines as a hue transition function to smoothly blend the hues. In the third and final pass, we fill in the red values at blue pixels and vice versa by computing the diagonal gradients and then using the hue transition function to blend along the diagonal with the smaller gradient. The advantage of this method is that it is very simple to implement; the downside is that three passes over the image are more computationally expensive than some other algorithms.
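The first pass can be sketched as follows. The gradient and estimate formulas below are an assumed reading of the description in [5], not a verified copy of it, and `ppg_green` is a hypothetical helper name. It expects a red or blue pixel at least two pixels away from the image border.

```python
def ppg_green(raw, r, c):
    """Estimate green at a red/blue site from the direction with the smallest gradient."""
    center = raw[r][c]
    candidates = []
    for dr, dc in ((-1, 0), (0, 1), (0, -1), (1, 0)):  # north, east, west, south
        near = raw[r + dr][c + dc]               # green neighbor in this direction
        opposite = raw[r - dr][c - dc]           # green neighbor on the other side
        far_same = raw[r + 2 * dr][c + 2 * dc]   # same-color sample two steps away
        gradient = 2 * abs(center - far_same) + abs(near - opposite)
        # Weight the near green sample most heavily and correct it with the
        # change observed in the pixel's own color channel.
        estimate = (3 * near + opposite + center - far_same) / 4
        candidates.append((gradient, estimate))
    # min() keeps the first candidate on ties, so the direction order above matters.
    return min(candidates, key=lambda pair: pair[0])[1]
```

For a pixel sitting just above a horizontal edge, the east and west gradients are the smallest, so the estimate is taken along the edge rather than across it. This is the behavior that the plain four-neighbor average lacks.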

K-Nearest Neighbors (KNN)

The second algorithm we implemented is K-Nearest Neighbors (KNN). This method uses bilinear interpolation for the demosaicing and uses KNN to reduce the demosaicing artifacts. A set of ground truth "training" images is stored in an OpenCV KNN model. Each image is divided into equal patches (for example, 6x6 pixel squares), and each patch is stored together with the bilinearly interpolated result of its Bayer-patterned image.

Once all of these patches are stored, we can start to perform demosaicing on the set of test images. The first step is to perform bilinear interpolation on the test image. The bilinearly interpolated image is then divided into patches for each color channel, with the same dimensions as the ground truth patches. Each of these patches, in each color channel separately, is passed into the KNN model we created. For each input patch, the KNN model outputs the neighbor patches (drawn from the stored ground truth patches) along with the distance to each one. For each neighbor patch, we look up the corresponding bilinearly interpolated version (which we also have stored) and subtract it from the neighbor patch itself (which is just a patch from the ground truth image). This difference gives us a detail patch. We then take a weighted average of the detail patches from all the neighbors, weighted by the neighbor distances: the larger the distance, the smaller the weight, and vice versa. This weighted average is added to the bilinearly interpolated patch of our test image. The process is repeated over the entire test image and for every color channel to give us our resulting image.
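The detail-transfer step for a single patch can be sketched as below. This is a brute-force stand-in for the OpenCV KNN model, with patches flattened into lists. The inverse-distance weighting, the `eps` term, and the name `knn_enhance` are assumptions, since the text only says that larger distances receive smaller weights.

```python
import math


def knn_enhance(query, truth_patches, bilinear_patches, k=3, eps=1e-6):
    """Add a distance-weighted average of neighbor detail patches to `query`.

    query            -- bilinearly interpolated test patch (flat list)
    truth_patches    -- stored ground truth patches (flat lists)
    bilinear_patches -- stored bilinear versions of the same patches
    """
    dists = [math.dist(query, patch) for patch in truth_patches]
    nearest = sorted(range(len(truth_patches)), key=dists.__getitem__)[:k]
    # Assumed weighting: inverse distance, normalized to sum to one.
    weights = [1.0 / (dists[i] + eps) for i in nearest]
    total = sum(weights)
    out = list(query)
    for i, weight in zip(nearest, weights):
        for j in range(len(out)):
            detail = truth_patches[i][j] - bilinear_patches[i][j]
            out[j] += (weight / total) * detail
    return out
```

Because the weights are normalized, the added detail can never exceed the largest stored detail value. A weighting that is not normalized could add too much detail, which is one possible source of overexposure.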

The idea behind this method is to restore the detail that bilinear interpolation alone loses, using a set of similar stored ground truth images. For example, if the test image patch we are working on shows the sky, we would most likely find that all the closest neighbor patches are also areas of sky from our stored images. The advantages of this method are that it is a relatively simple machine learning technique to apply and that it doesn't require us to train a model. The disadvantage is that we see low performance if our dataset isn't specifically tailored to this method. For example, if we do not have a large enough dataset of ground truth "training" images, or if the images are too diverse, this method will struggle to find neighbor patches similar enough to add meaningful detail to the image. Another disadvantage is that, for this algorithm to work well, we would need a relatively large amount of storage to hold enough images.

Deep Neural Network (DNN)

The third algorithm we implemented was a Deep Neural Network (DNN). A neural network builds a complex non-linear model by stacking multiple simpler linear models, interspersed with non-linear activation functions. These models learn weight values that best map our input image to our ground truth image. This is done by defining a loss metric, in this case Mean Squared Error, which measures the difference between our expected and actual output. From there, we use backpropagation to compute the gradient of the loss with respect to each weight, and then use stochastic gradient descent to move each weight in the direction that reduces the loss.

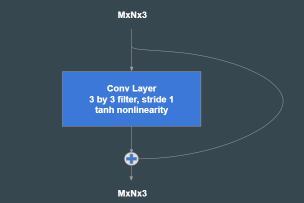
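To make the training loop concrete, here is a toy sketch of per-sample gradient descent on a squared-error loss. It fits a single weight in the model `y = weight * x`, which is an illustrative stand-in and not the network from this report. The gradient is written out by hand, which is what backpropagation automates for deeper models.

```python
def mse(weight, xs, ys):
    """Mean squared error of the one-weight model y = weight * x."""
    return sum((weight * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def sgd_fit(xs, ys, lr=0.05, epochs=200):
    """Update the weight after every sample, stepping against the gradient."""
    weight = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            grad = 2.0 * (weight * x - y) * x  # d/dw of (weight * x - y)^2
            weight -= lr * grad
    return weight
```

With `xs = [1, 2, 3]` and `ys = [2, 4, 6]`, the weight converges towards 2. The samples are visited in a fixed order here to keep the sketch deterministic, whereas stochastic gradient descent would normally shuffle them.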

The network we trained was a type of Deep Neural Network called a Convolutional Neural Network (CNN). CNNs work by learning linear filters rather than fully connected weight values where each output pixel is a function of all input pixels. Instead, each output pixel is only a function of input pixels within some square vicinity within the image. This structure for a neural network limits the type of models our neural network learns to ones that mostly learn local features of our input image. By stacking multiple convolutional layers one after the other, we can form higher and higher-level features that are able to help our network learn our function. CNNs have recently done very well at learning a number of machine learning tasks for images and videos, so we build a CNN model to try and learn how to properly map an image mosaic to a demosaiced image. The image below visualizes the computation done within a single output channel of a convolutional network.

Our network is composed of 20 convolutional layers. Each layer uses 3x3 filters with a tanh nonlinearity and a stride of 1. We also include skip connections so that each layer essentially just makes an additive tweak to its input, and we add reflection padding to the input of each convolutional layer. The optimization algorithm used was Adam, a modification of stochastic gradient descent that uses running averages of the first and second moments of each weight's gradient to create what is essentially an adaptive learning rate for each weight. The input to this network is a bilinearly interpolated image, which the network tries to clean up, and the output is an image of the same size as the input. This network was based on the one defined in DeepISP [9]. The primary difference between the two is that ours uses fewer filters per layer: only 3, whereas DeepISP used at minimum 16 filters and got its best performance at 64 filters. Our network was implemented using PyTorch.
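A single layer of this kind can be sketched in plain Python for one channel, as below. This is not the PyTorch code used for the project. The placement of the tanh inside the skip connection, the single-channel simplification, and the helper names are all assumptions made to illustrate reflection padding, a 3x3 filter at stride 1, and the additive skip.

```python
import math


def reflect(i, n):
    """Mirror an out-of-range index back into [0, n) without repeating the edge pixel."""
    if i < 0:
        return -i
    if i >= n:
        return 2 * (n - 1) - i
    return i


def residual_conv_layer(x, kernel, bias=0.0):
    """Return x + tanh(conv3x3(x)) for a single-channel image stored as a list of rows."""
    h, w = len(x), len(x[0])
    out = []
    for r in range(h):
        row = []
        for c in range(w):
            acc = bias
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    rr, cc = reflect(r + dr, h), reflect(c + dc, w)
                    acc += kernel[dr + 1][dc + 1] * x[rr][cc]
            # Skip connection: the layer only contributes an additive tweak.
            row.append(x[r][c] + math.tanh(acc))
        out.append(row)
    return out
```

With an all-zero kernel and zero bias, the layer returns its input unchanged, so a stack of such layers starts close to the identity mapping on the bilinearly interpolated image. The output always has the same size as the input because the padding supplies the missing border samples.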

One advantage of neural networks is that they are able to learn complicated function mappings by learning good features within the input, rather than requiring the user to define their own hand-designed features. One downside is that they tend to require a lot of compute and training time. The DNN we trained required 2 days to train on a Google Cloud VM with 8 vCPUs and 30 GB of RAM. Training time could be reduced by using a GPU instead, but this increases the cost of training per unit time. One final downside is that neural networks act like black-box models. Even if you can train a network to learn your input-output mapping, that doesn't mean the network itself will use computations that are easily interpretable to a human. Neural networks are powerful, but interpreting why one works or doesn't work is difficult and is currently an important topic of research.

Results

We used various methods to analyze our results. The first is to visually inspect them to see what conclusions we can draw from how our algorithms handle different situations and where their shortcomings are.

PPG

For PPG, we looked at the following images (Figures 11 and 12).

We can see some places where PPG succeeds and others where it fails. One place where it succeeds is on the bottom right of the bus where the window is divided by a metal bar. This bar is a common place where simple algorithms like bilinear interpolation would have just combined colors from both windows and the bar to get a blurry off-colored line. Here, PPG was able to avoid the zippering artifact, although there is some false color. One place where PPG does particularly poorly is the branches of the tree. These branches have a very high spatial frequency and there wasn't enough data to correctly resolve edges and colors.

An example where PPG performs well throughout is seen in Figures 13 and 14.

Here there are a few areas with minor false color artifacts, but the lack of edges throughout makes the picture an easy target for proper demosaicing.

KNN

Looking at the same bus image for KNN (Figures 15 and 16), we see that KNN does not solve the zippering issue and there are overall more color artifacts than the PPG method.

Figures 17 and 18 show an example where KNN performed well and has few artifacts. The reason KNN performs better on this image is simply because it is a much easier image to demosaic since it is fairly monochromatic. The main color is red which is why we don't see issues of zippering or false coloring. It also makes it easier for KNN to identify closer neighbor patches.

Figures 19 and 20 show an example where KNN fails completely. We see this behavior from our KNN model when the weight parameters are not tuned for this specific case. For a better result, we would need to reduce the weights of the patches we add to prevent the overexposure we see here. This shows the disadvantage of a single set of parameters in the KNN model not working well for all images in our dataset.

DNN

Figures 21 and 22 look at the same bus image as before, and we see that the DNN model does a better job of reducing false color artifacts. It does an okay job of reducing zippering, but false color artifacts have substantially decreased, especially in the tree branches in the image. One downside though is that the image seems very blocky, almost as if it has lost resolution. This effect is more prevalent in certain images than others.

Figures 23 and 24 show an example where the network sometimes tends to dull colors a little bit.

Figures 25 and 26 show an example which has good colors but has a very blocky output.

This blockiness in the output could be a result of reducing the number of convolutional filters per layer in our network compared to DeepISP. One possible direction for future work would be to retrain using more filters and see whether this improves any of the issues we've seen here.

PSNR

In our research, we found that several papers also used the PSNR of the outputs as a metric for judging the images. We computed the PSNR of our images against the ground truth for each individual color channel, as well as for the image as a whole, and found the following.

For images without noise (Figure 27):

For images with noise (Figure 28):

Looking at the table without noise, we see a pretty similar outcome when compared to our visual analysis. KNN performs very poorly for the reasons we discussed previously. PPG performs better than KNN, but still isn't as good as our DNN approach which performs much better than the rest. When taking noise into account, we get a very similar trend, but each algorithm performs just a little bit worse.
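For reference, PSNR can be computed as in the minimal sketch below. It takes pixel values as flat lists and assumes a peak value of 255, as for 8-bit images. Per-channel PSNR is obtained by passing in a single channel, and whole-image PSNR by passing in all channels concatenated.

```python
import math


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)
```

Higher values indicate a closer match to the ground truth. Because the scale is logarithmic, halving the mean squared error raises PSNR by about 3 dB.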

ISO 12233 Slanted Bar Metric

In order to judge how our algorithms affected the sharpness of our images, we attempted to run the ISO 12233 slanted bar image metric on the output of our algorithms. Using ISETCam and the f-number and focal length of our cameras, we generated four slanted bar images, one each for pixel sizes of 2, 3, 5, and 9 microns.

We ran the raw pixel values of these images through our algorithms and applied the ISO 12233 metric to the resulting images. We were able to run this metric on the ground truth slanted bar images as well as on the output of PPG. These values are listed below in lines per mm (Figure 30).

As expected, PPG has a bit of image sharpness reduction in comparison to the original ground truth image. The only exception is the 9 micron sensor, where we actually see better sharpness from PPG.

We were unable to obtain the same metric for our KNN and DNN models, though. Our models were trained on images from the MSR Demosaicing dataset, which is composed of more natural-looking scenes and is very different from the slanted bar image. As a result, our outputs looked very different from the expected output, and we could not obtain the slanted bar metric, either because the ISO 12233 metric code from ISETCam couldn't identify the slanted line or because the MTF computed from these outputs looked completely off. We include example outputs from all three algorithms below (Figures 31-33).

You'll notice that the output of PPG has a red tint. This appears to come from the camera sensor simulated in ISETCam, which seems to be more sensitive to red light than to green and blue; we saw similar results in our bilinearly interpolated images. We have not yet confirmed the underlying cause of this tint.

One important thing to discuss is why KNN and DNN failed here. Both of these algorithms rely on images from the training set to learn, and none of the images in our training set look like our slanted bar image; most of them are natural scenes. A possible reason for the failure is therefore overfitting to our training dataset. One way to overcome this would be to generate more images like this and retrain our algorithms on both natural and synthetic images.

Delta E

Another error metric we used was the delta E from spatial CIELAB [10], which measures the average color difference between our resulting images and the ground truth images. To calculate this, we used the scielabRGB function from ISETCam and found the average delta E value across all our resulting images for each method. The table below summarizes our delta E results. As we can see, the DNN obtained the lowest delta E values and KNN obtained the highest. This matches our PSNR data, where DNN has the highest performance and KNN has the lowest. We also see that the images with no noise obtain lower delta E values across all methods. This makes sense, since we expect less difference between the ground truth and our results for images with no noise. We also ran spatial CIELAB on just the bilinearly interpolated images, obtaining a delta E of 6.1885 for images without noise and 6.7407 for images with noise. This evaluation metric shows how badly KNN performed on this set of images: KNN did even worse than the bilinearly interpolated images alone, which means that on average it did not improve the color of the resulting image.
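As a rough illustration of what a delta E value measures, the sketch below computes the plain CIE76 color difference, which is the Euclidean distance between two colors in CIELAB space. This is not the scielabRGB function from ISETCam. Spatial CIELAB additionally applies spatial filtering to the images before the color difference is taken, and that step is omitted here. The function names are hypothetical.

```python
import math


def delta_e76(lab_a, lab_b):
    """CIE76 color difference between two (L*, a*, b*) triples."""
    return math.dist(lab_a, lab_b)


def mean_delta_e(labs_a, labs_b):
    """Average CIE76 difference over two equally long sequences of Lab pixels."""
    return sum(delta_e76(a, b) for a, b in zip(labs_a, labs_b)) / len(labs_a)
```

Lower values mean the two images are closer in perceived color, which is why the best-performing method has the smallest average delta E.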

Conclusion

In the end, we found that simple classical algorithms like PPG failed to fully avoid false color artifacts on very low-resolution images.

KNN needs a lot more data to be able to run inference on never before seen images.

The deep learning approach worked very well but could have done better with more filters per layer, at the expense of longer training times.

References

[1] https://wiki.apertus.org/index.php/OpenCine.Nearest_Neighbor_and_Bilinear_Interpolation

[2] https://www.arl.army.mil/arlreports/2010/ARL-TR-5061.pdf

[3] https://ngi-user-guide.readthedocs.io/en/latest/demosaicing/

[4] D. Khashabi, S. Nowozin, J. Jancsary and A. W. Fitzgibbon, "Joint Demosaicing and Denoising via Learned Nonparametric Random Fields," in IEEE Transactions on Image Processing, vol. 23, no. 12, pp. 4968-4981, Dec. 2014.

[5] https://sites.google.com/site/chklin/demosaic/

[6] http://stanford.edu/class/ee367/Winter2017/Chen_Sanchez_ee367_win17_report.pdf

[8] https://www.freecodecamp.org/news/an-intuitive-guide-to-convolutional-neural-networks-260c2de0a050/

[9] Schwartz, Eli, Raja Giryes, and Alex M. Bronstein. “DeepISP: Toward Learning an End-to-End Image Processing Pipeline.” IEEE Transactions on Image Processing 28.2 (2019): 912–923. Crossref. Web.

[10] X. M. Zhang and B. A. Wandell. A spatial extension to CIELAB for digital color image reproduction. Symposium Proceedings, 1996

Appendix I

Code can be found here

Appendix II

Noah worked on PPG and the PSNR metric. Nick worked on DNN and the slanted line metric. Megan worked on KNN and the SCIELAB metric. All members worked on the report and presentation.