Noise characterization & spectral calibration of a stereo camera

Project motivation

An image processing pipeline comprises all the components between the acquisition of a scene (e.g., a camera) and an image renderer (e.g., a display device or printed copy) [1], [2]. These components work together to capture, process and store an image [3]. Considerable effort has been spent on modeling individual components or subcomponents of the image processing pipeline, including the camera, camera optics and sensor noise [4], [5]. However, modeling only the subcomponents captures a narrow view of the pipeline. There is great interest in not only modeling individual components but also simulating the entire pipeline, both to improve understanding of the system and to guide design decisions [6].

One of the goals of Dr. Joyce Farrell's research is to create a software simulation that models a stereo camera and to have these simulations feed into a large-scale simulation of an image processing pipeline. Our project focuses on a narrow aspect of the image processing pipeline: characterizing the noise and the spectral sensitivity of a stereo camera. These serve as critical inputs, first, to a simulation of the stereo camera that can predict camera performance over a wide range of conditions and, second, to a large-scale simulation of the image processing pipeline. The objective of our class project is threefold: follow well-established procedures to (1) collect information about image sensor noise, (2) obtain and validate image sensor spectral sensitivities and (3) feed our experimentally determined data into the ISET toolbox to simulate the image sensor.

Background

Sensor spectral quantum efficiencies

The sensor spectral quantum efficiency is the relative efficiency of detection of light as a function of wavelength. It results from a combination of the effects of lens transmittance, color filter arrays and photodiode quantum efficiency [6].

An ideal set of spectral sensitivities would be within a linear transform of the CIE XYZ curves [7]. Such an ideal camera would be able to capture all the colors that the human eye can see. In practice this is difficult to achieve perfectly, and image processing algorithms for sensor correction are used to compensate for the difference. Camera manufacturers enjoy some freedom in designing the spectral sensitivity curves for their devices, and the resulting spectral curves can differ significantly, as seen in Fig. 1.

Noise characteristics

Photon noise

Image sensors directly measure scene irradiance by determining the number of photons that hit the sensor surface over some time interval [2]. Photon flux fluctuates inherently, so even when photons are collected from an unvarying source over a fixed period of time, the number of photons that hit the sensor surface varies; this variation is photon (or shot) noise. The photons emitted from an incoherent source can be treated as independent events with a random temporal distribution. The number of photons incident on the sensor surface, $N$, follows a Poisson distribution, and the uncertainty described by this distribution is photon noise. Because photon noise follows a Poisson distribution, its variance is equal to its expectation: $E[N] = \mathrm{Var}[N] = \lambda t$, in which $\lambda$ is the mean photon arrival rate and $t$ is the time interval.

Photon noise is inherent to the properties of light and is independent of all other sources of noise. The ratio of the signal to the photon noise is proportional to the square root of the number of photons captured: $\mathrm{SNR} = N/\sqrt{N} = \sqrt{N}$. This means that photon noise grows in absolute terms with the signal but becomes relatively weaker at higher signal levels. Generally, the only way to reduce the effects of photon noise is to capture more signal, which requires longer exposure durations.
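The Poisson behaviour described above can be checked numerically. The sketch below is illustrative only and is not part of the original analysis: it draws photon counts with Knuth's multiplication method (adequate for small means), using an arbitrary mean of 20 photons and a fixed seed, and compares the sample mean and variance. The function names and the chosen numbers are assumptions made for this example.

```python
import math
import random
import statistics


def sample_poisson(mean_count, rng):
    """Draw one Poisson-distributed count using Knuth's method.

    Suitable only for small means, because exp(-mean_count) underflows
    for very large values.
    """
    threshold = math.exp(-mean_count)
    count = 0
    product = rng.random()
    while product > threshold:
        count += 1
        product *= rng.random()
    return count


def photon_snr(mean_count):
    """Signal-to-photon-noise ratio: mean / sqrt(variance) = sqrt(mean)."""
    return mean_count / math.sqrt(mean_count)


rng = random.Random(0)   # fixed seed so the run is repeatable
mean_photons = 20.0      # hypothetical mean count (lambda * t)
counts = [sample_poisson(mean_photons, rng) for _ in range(10000)]

sample_mean = statistics.fmean(counts)
sample_var = statistics.variance(counts)
print(f"mean = {sample_mean:.2f}, variance = {sample_var:.2f}")
print(f"predicted SNR = {photon_snr(mean_photons):.2f}")
```

The sample mean and variance should both land close to the chosen mean, and quadrupling the mean count doubles the predicted signal-to-noise ratio.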

Dark Voltage

Within a CMOS image sensor, electrons are created over time independently of the number of photons incident on the detector surface. These electrons are captured by the potential wells and counted as signal. This additional signal carries its own statistical fluctuation, which contributes dark current noise.

Dark current is a source of noise that arises from thermally generated electrons being counted as signal. At higher temperatures, the sensor releases a larger random number of electrons, and these thermally generated electrons build up in the sensor pixels. The rate at which they build up is a function of the temperature of the image sensor. Dark current is present whether or not the sensor is illuminated because, at any temperature above absolute zero, thermal fluctuations in silicon can release electrons [8]. Dark voltage can generally be minimized by reducing the ambient temperature of the image sensor and by reducing the exposure duration.

Read and reset noise

Read and reset operations are inherently noisy processes. In a CCD architecture, the charge from each pixel is transferred through a common readout structure (where it is converted from charge to voltage); each pixel is therefore subjected to the same readout noise. CMOS technology, however, allows each pixel to have a different readout structure for converting charge to voltage, so the readout noise in CMOS sensors follows some distribution. This means that if the same charge is placed in a given pixel in two different images, the recorded analog-to-digital units will vary. Typically, the read noise is 1 to 3 electrons, depending on the camera manufacturer [8]. Read noise affects the contrast resolution that a particular camera can achieve: a sensor with lower read noise can detect smaller changes in signal amplitude.

Image sensors undergo a reset operation to provide a reference level to quantify the number of electrons that are incident on the detector. Reset noise arises as a result of thermal noise that causes voltage fluctuations in the reset level for each pixel in a pixel array.

Photo response non-uniformity (PRNU) & Dark signal non-uniformity (DSNU)

There exists a linear relationship between a pixel value and the exposure duration. PRNU refers to the variance in the slope of pixel value versus exposure duration across different pixels in a pixel array. This variance is a result of the fact that different color pixels have different sensitivities to light [9]. DSNU refers to the variability in dark noise across pixels in a pixel array. PRNU and DSNU are sensor characteristics, and can be thought of as imperfections of the image sensor surface.

Instrumentation

Stereo camera

A stereo camera is a type of camera that mimics binocular vision. Typically, a stereo camera contains two lenses, each of which acquires an image of (approximately) the same scene at the same time. Our project focused primarily on studying the noise and spectral characteristics of a JEDEYE stereoscopic VR camera from Fengyun Vision.

Each lens is equipped with a CMOS solid-state image sensor (SONY IMX123LQT-C). The pixel size is 2.5 μm x 2.5 μm. The lens f-number and focal length are 2.3 and 2.1 mm, respectively. The exposure duration range of the camera is approximately 125 μs to 30 ms. The spectral sensitivity characteristics, excluding all lens and light source characteristics, are included in the sensor datasheet (a screenshot is shown in Fig. 2); however, the effects of infrared blocking are not captured.

The only type of noise that is quantified in the image sensor datasheet is dark voltage, which is reported as 0.15 mV (at 1/30 s). The fill-factor is not reported but (given that the sensor is back-illuminated) a fill-factor of 99% is assumed. Note that the image sensor datasheet is included in the Appendix of this report.

Monochromator

A Cornerstone 130 monochromator from Oriel Instruments was used to obtain a narrow band of wavelengths of light. For our experiments, we used a wavelength range of 380-1068 nm (which is the range of the spectroradiometer).

Spectroradiometer

A SpectraScan PR-715 spectroradiometer was used to measure (1) the surface reflectance of each of the twenty-four patches of a Macbeth ColorChecker and (2) the spectral power distribution (SPD) of a source. The latter was necessary to characterize the illuminant.

Various light sources

Various light sources were used in our experimental setup, including an Illumination Technologies 3900 Remote Light Source with a USHIO halogen projector lamp. Additionally, an X-Rite SpectraLight III was used to provide various illuminants such as simulated daylight (D65) and incandescent (tungsten) lighting.

Noise quantification

To simulate the JEDEYE using ISET, it is necessary to quantify the noise characteristics of the camera, namely DSNU, PRNU, dark voltage and read noise. The sections below outline how the noise characteristics of the JEDEYE were obtained and report the resulting parameter values.

Experimental setup

The following images are required to quantify each type of noise:

Dark voltage quantification requires a set of images with zero light intensity (dark field images) with exposure durations ranging from 125 μs to 3 ms. A total of 150 images were acquired per image sensor.

PRNU quantification requires a set of images with uniform light intensity (light field images) captured at exposure durations ranging from 125 μs to 3 ms. However, at shorter exposure durations the images were dominated by noise, and at longer exposure durations the images tended to be saturated. Therefore, only 142 images per image sensor were analyzed. The images were taken of a sheet of white paper taped to a wall. A MATLAB script was implemented to determine a small bounding box (~200 pixels x ~200 pixels) in the center of the light field image, and the analysis was performed on that part of the image.

DSNU quantification requires a set of dark field images of constant exposure duration. The average value at each pixel is obtained across the images, and the standard deviation of these averages over all the pixels is the estimate for DSNU. A total of 149 images per image sensor were analyzed, each taken at the minimum exposure duration so as to minimize the effects of dark voltage. The same set of data was used to obtain the read noise, which is estimated from the variation of the values recorded at the same pixel across images, averaged over all the pixels in a dark field image.
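The DSNU and read noise estimates described above can be sketched as follows. This is a minimal illustration rather than the ISET script used in the project: each frame is represented as a flat list of pixel values, and read noise is taken to be the temporal standard deviation at each pixel averaged over all pixels, which is an assumption about the intended definition. The function name and the toy data are hypothetical.

```python
import statistics


def dsnu_and_read_noise(dark_frames):
    """Estimate DSNU and read noise from repeated dark-field frames.

    dark_frames: list of frames, each a flat list of pixel values of the
    same length, all captured at the same short exposure duration.
    """
    # Regroup so that each entry holds one pixel's values across all frames.
    per_pixel = list(zip(*dark_frames))
    pixel_means = [statistics.fmean(values) for values in per_pixel]
    # DSNU: spread of the temporally averaged dark level across pixels.
    dsnu = statistics.stdev(pixel_means)
    # Read noise (assumed definition): temporal spread at each pixel,
    # averaged over all pixels.
    read_noise = statistics.fmean(statistics.stdev(values) for values in per_pixel)
    return dsnu, read_noise


# Two made-up frames of three pixels each (arbitrary units).
dark_frames = [[1.0, 3.0, 5.0], [3.0, 5.0, 7.0]]
dsnu, read_noise = dsnu_and_read_noise(dark_frames)
print(dsnu, read_noise)
```

Averaging across frames before taking the spatial standard deviation suppresses temporal noise, so the DSNU estimate reflects fixed pixel-to-pixel offsets rather than frame-to-frame fluctuations.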

The aforementioned procedure follows the one outlined in Farrell et al. [9].

When obtaining dark field images, it was important to minimize all sources of light, including ambient light and light from, e.g., the computer monitor. Caps were placed over the camera lenses. In addition, TeamViewer was installed on the lab computer so that it could be accessed remotely. This enabled the capture of dark field images to begin after we had left the room.

To manage the large number of raw image files obtained, a systematic naming of files was adopted by previous students. In each file name, the name of the image sensor (left vs. right) was denoted as well as the exposure duration used to obtain that image and the image number. (The left image sensor is labeled "vcap0" and the right image sensor is labeled "vcap1".) The images obtained from the two image sensors are sorted into different listings. MATLAB scripts were implemented in order to naturally sort the images obtained by each image sensor by the image number.

Results

A MATLAB script, adapted from the ISET script s_sensorSpatialNoisePRNU, was used to determine the slopes of the pixel responses (measured voltage versus exposure time for each pixel), normalize them and estimate the PRNU from the standard deviation of these slopes. The photon noise from both the left and right image sensors is Gaussian, and the measured PRNU for the left and right image sensors is 2.00% and 2.03%, respectively. The PRNUs determined for the two image sensors are in good agreement with each other.
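The slope-based PRNU estimate can be sketched in a few lines. This is not the ISET script itself: the function names are hypothetical, the slopes are normalized by their mean (an assumption, since the text does not say how they were normalized), and the example gains are invented to give a spread of about 2%.

```python
import statistics


def fit_slope(x_values, y_values):
    """Least-squares slope of y against x."""
    x_mean = statistics.fmean(x_values)
    y_mean = statistics.fmean(y_values)
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in zip(x_values, y_values))
    denominator = sum((x - x_mean) ** 2 for x in x_values)
    return numerator / denominator


def estimate_prnu_percent(exposures, pixel_responses):
    """PRNU as the standard deviation of normalized per-pixel slopes, in percent.

    exposures: exposure durations in seconds.
    pixel_responses: one list per pixel, giving its voltage at each exposure.
    """
    slopes = [fit_slope(exposures, response) for response in pixel_responses]
    mean_slope = statistics.fmean(slopes)
    normalized = [slope / mean_slope for slope in slopes]
    return 100.0 * statistics.stdev(normalized)


# Three made-up pixels whose gains differ by +/- 2% around the mean.
exposures = [0.001, 0.002, 0.004]
gains = [0.98, 1.00, 1.02]
pixel_responses = [[gain * t for t in exposures] for gain in gains]
prnu = estimate_prnu_percent(exposures, pixel_responses)
print(f"PRNU = {prnu:.2f}%")
```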

A MATLAB script, adapted from the ISET script s_sensorAnalyzeDarkVoltage, was used to estimate the dark voltage. A voltage versus exposure time curve is generated for the images acquired from each sensor, pooling all the pixels in the image; the average dark voltage is estimated from the slope of the positive trend between voltage and exposure time (Fig. 4). The dark voltage does not become significant until an exposure duration of approximately 0.012 s. Estimated from a linear fit starting at the exposure duration at which the noise begins to dominate, the dark voltage is approximately 0.0021 and 0.0017 mV/s for the left and right image sensors, respectively.
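The fit described above, a straight line through the mean dark-field voltage for exposures at or beyond a chosen starting point, can be sketched as follows. The function names, the synthetic data and the 0.002 mV/s rate are assumptions made for illustration; they are not the measured values.

```python
import statistics


def fit_line(x_values, y_values):
    """Return (slope, intercept) of the least-squares line through the points."""
    x_mean = statistics.fmean(x_values)
    y_mean = statistics.fmean(y_values)
    numerator = sum((x - x_mean) * (y - y_mean) for x, y in zip(x_values, y_values))
    denominator = sum((x - x_mean) ** 2 for x in x_values)
    slope = numerator / denominator
    return slope, y_mean - slope * x_mean


def estimate_dark_voltage(exposures, mean_voltages, start_exposure):
    """Slope of mean dark voltage versus exposure, fitted for exposures >= start_exposure."""
    selected = [(t, v) for t, v in zip(exposures, mean_voltages) if t >= start_exposure]
    times, volts = zip(*selected)
    slope, _ = fit_line(times, volts)
    return slope


# Synthetic curve: flat at 1.0 mV, then rising at 0.002 mV/s beyond 0.012 s.
knee = 0.012
rate = 0.002
exposures = [0.004, 0.008, 0.012, 0.020, 0.030]
mean_voltages = [1.0 + rate * max(0.0, t - knee) for t in exposures]
slope = estimate_dark_voltage(exposures, mean_voltages, start_exposure=knee)
print(f"dark voltage = {slope:.4f} mV/s")
```

Restricting the fit to the rising part of the curve keeps the flat, read-noise-dominated region at short exposures from biasing the slope toward zero.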

The measured dark voltage values differ substantially from the value quoted on the image sensor datasheet (0.15 mV at 1/30 s, which corresponds to 0.15 mV × 30 s⁻¹ = 4.5 mV/s). This discrepancy can be attributed to the fact that the vendor acquired dark voltage measurements at an elevated temperature. Specifically, the datasheet states that the dark voltage measurements were taken at a "device junction temperature of 60 °C" [10]. The ambient temperature at which our measurements were taken was at least 35 °C lower; it therefore makes sense that our measured dark voltages are considerably lower than those reported in the sensor datasheet.

Lastly, a MATLAB script adapted from ISET's s_sensorSpatialNoiseDSNU was used to characterize the image sensor DSNU and read noise. This code averages the response at each pixel across multiple dark field images and estimates the standard deviation across all the pixels. The DSNU was found to be 0.285 and 0.306 mV for the left and right image sensors, respectively. The read noise was measured to be 0.940 mV (left sensor) and 1.198 mV (right sensor). The histogram of the read noise for the two image sensors is depicted below:

We summarize the results of our experiments in Fig. 6.

Spectral Calibration

Experimental Setup

To measure the spectral quantum efficiencies, we captured images of a set of narrowband lights. We calculated the mean R, G and B values from a central part of the image. While capturing the images of the narrowband lights, we used a constant exposure time of 30 ms. We placed the R, G and B measurements in a matrix $C$ of size $3 \times n$, where $n$ is the number of lights.

The narrowband lights were generated using the monochromator and ranged from 380 nm to 800 nm in increments of 10 nm. We measured the spectral power distribution of each narrowband light using the spectroradiometer. We stored the outputs of the spectroradiometer in a matrix $L$ of size $m \times n$, where $m$ is the number of wavelengths. These quantities are related by the equation $C = S^{\top} L$, where $S$ is the $m \times 3$ matrix of spectral sensitivities. We estimate $S$ using linear least squares.
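A minimal sketch of the least-squares step is given below, in pure Python so that it runs without extra packages. The notation is assumed for this example: $L$ is the matrix of light spectra with one row per wavelength and one column per light, and one row of $C$ holds the camera responses of a single channel. For each channel the code solves the normal equations $(L L^{\top}) s = L c$. The function names and the toy data are hypothetical, and this is not the ISET routine used in the project.

```python
def solve_linear_system(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    size = len(rhs)
    # Build an augmented copy so the inputs are left unmodified.
    aug = [list(row) + [value] for row, value in zip(matrix, rhs)]
    for col in range(size):
        pivot = max(range(col, size), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("singular system: the lights do not span all wavelengths")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for row in range(col + 1, size):
            factor = aug[row][col] / aug[col][col]
            for k in range(col, size + 1):
                aug[row][k] -= factor * aug[col][k]
    # Back substitution on the upper-triangular system.
    solution = [0.0] * size
    for row in range(size - 1, -1, -1):
        known = sum(aug[row][k] * solution[k] for k in range(row + 1, size))
        solution[row] = (aug[row][size] - known) / aug[row][row]
    return solution


def estimate_sensitivity(light_spd, channel_response):
    """Least-squares estimate of one channel's spectral sensitivity.

    light_spd: m x n nested list; row i holds wavelength i across all n lights.
    channel_response: the n camera values of one channel (one row of C).
    """
    m = len(light_spd)
    gram = [[sum(a * b for a, b in zip(light_spd[i], light_spd[j])) for j in range(m)]
            for i in range(m)]
    projected = [sum(a * c for a, c in zip(light_spd[i], channel_response))
                 for i in range(m)]
    return solve_linear_system(gram, projected)


# Toy problem: 3 wavelengths, 4 lights, and a made-up true sensitivity.
light_spd = [[1.0, 0.1, 0.0, 0.5],
             [0.1, 1.0, 0.1, 0.5],
             [0.0, 0.1, 1.0, 0.5]]
true_sensitivity = [0.2, 0.9, 0.4]
response = [sum(light_spd[i][j] * true_sensitivity[i] for i in range(3)) for j in range(4)]
estimate = estimate_sensitivity(light_spd, response)
print(estimate)
```

With real measurements the system is noisy and may be poorly conditioned, so a pseudoinverse or a regularized solver is often a more robust choice than solving the normal equations directly.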

Results

We modified the MATLAB script "s_sensorSpectralEstimation.m" to compute the spectral quantum efficiencies. The resulting curves are shown in Fig. 8. The plots were normalised to be in the range [0,1] by dividing each curve by its maximum value. We note that the spectral curves from the left sensor (solid line) and right sensor (dashed line) are in close agreement. Furthermore, the measured curves are also in agreement with the curves provided in the image sensor datasheet (Fig. 2).

Validation

To validate the spectral curves, an image of the Macbeth ColorChecker under fluorescent light was captured with the camera. The R, G and B values for each of the patches on the ColorChecker were found by selecting the appropriate regions on the raw image.

The spectral power distribution of each of the 24 patches on the Macbeth ColorChecker was measured using the spectroradiometer. The spectral measurements were multiplied by the spectral sensitivity values to derive the predicted R, G and B values. The predicted and measured R, G and B values were both normalised to lie in the range [0,1]. We compare the results below.
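The prediction step amounts to an inner product between each patch spectrum and each sensitivity curve. The sketch below uses made-up two-wavelength data and hypothetical function names; scaling by the global maximum is an assumption, since the text does not state how the values were normalised.

```python
def predict_rgb(patch_spd, sensitivities):
    """Predicted channel values for one patch.

    patch_spd: spectral measurement of the patch, sampled at m wavelengths.
    sensitivities: three lists (R, G, B), each sampled at the same m wavelengths.
    """
    return [sum(p * s for p, s in zip(patch_spd, channel)) for channel in sensitivities]


def normalize_to_unit_range(rgb_values):
    """Scale a list of RGB triplets so that the largest value equals 1."""
    peak = max(max(rgb) for rgb in rgb_values)
    return [[value / peak for value in rgb] for rgb in rgb_values]


# Two made-up patches and sensitivities sampled at two wavelengths.
patches = [[1.0, 2.0], [0.5, 0.5]]
sensitivities = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
predicted = [predict_rgb(patch, sensitivities) for patch in patches]
normalized = normalize_to_unit_range(predicted)
print(normalized)
```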

We plotted the measured and predicted RGB values in the form of the Macbeth ColorChecker. On visual inspection we see that the resulting values look similar.

To quantify our result, we plotted the measured R, G and B values against the predicted R, G and B values. The left image in Fig. 12 shows the plot derived for the right image sensor. We note that the relationship is linear. We also calculated $\Delta E$, a CIE metric for color difference, and note that the values are small. The right image in Fig. 12 shows a histogram of the $\Delta E$ values. Fig. 13 shows the corresponding plots for the left image sensor. The results are similar for both sensors.
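The text does not state which CIE color-difference formula was used. As one common choice, offered here only as an assumption, the CIE 1976 color difference between a measured color $(L^{*}_{1}, a^{*}_{1}, b^{*}_{1})$ and a predicted color $(L^{*}_{2}, a^{*}_{2}, b^{*}_{2})$ in CIELAB space is the Euclidean distance:

```latex
\Delta E^{*}_{ab} = \sqrt{\left(L^{*}_{2}-L^{*}_{1}\right)^{2} + \left(a^{*}_{2}-a^{*}_{1}\right)^{2} + \left(b^{*}_{2}-b^{*}_{1}\right)^{2}}
```

Under this formula, a value of about 1 is commonly taken to be close to a just-noticeable difference, which gives a rough scale for judging whether the reported values are small.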

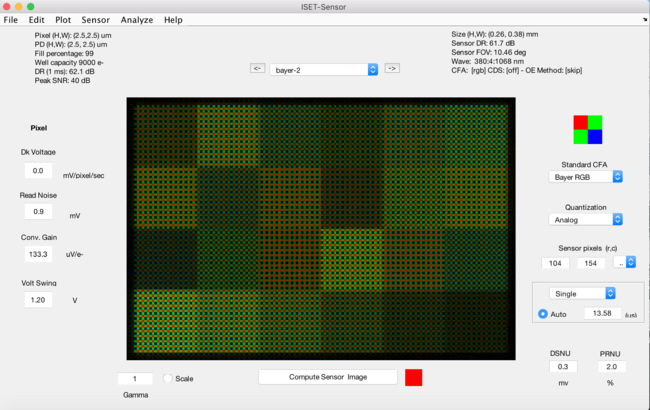

Simulation

Using the spectral and noise parameters derived above, we can simulate the output of the sensor using ISET. From the datasheet we found that the pixel width was 2.5 μm, the f-number was 2.3 and the focal length was 2.1 mm. The well capacity and pixel fill-factor were unknown. We guessed that the well capacity was approximately 9000 electrons per pixel by comparing this sensor to other sensors [11]. We guessed that the fill-factor was close to 99% since the sensor is back-illuminated. Using the script "t_introduction2ISET.m" as a reference, we set the sensor parameters to these derived values in ISET. Assuming diffraction-limited optics, we were able to simulate the output of the sensor given different radiometric scenes as input.

Conclusions & Next Steps

We followed well-established experimental procedures to characterize noise and estimate the spectral quantum efficiencies of a JEDEYE camera equipped with two CMOS solid-state image sensors.

To find the spectral quantum efficiencies, we captured images of narrowband lights and measured their spectral power distributions using a spectroradiometer. The estimated quantum efficiencies for the right and left sensors were in close agreement and visually resemble the spectral curves provided by the manufacturer. We validated the spectral curves by measuring and predicting the R, G and B values of a Macbeth ColorChecker under fluorescent light. We verified that the measured and predicted values were similar.

To estimate the noise characteristics, we acquired dark field and light field images of constant and varying exposure. We modified MATLAB scripts available from the ISET toolbox to determine DSNU, PRNU, dark voltage and read noise. Firstly, there was excellent agreement between the two image sensors. Secondly, there is a discrepancy between the measured dark voltage and that reported in the image sensor datasheet; we hypothesize that this discrepancy is due to the widely different temperatures at which our measurements were taken versus those dictated by the protocol the vendor followed.

Lastly, the image sensor was simulated using the ISET toolbox under one lighting condition. The $\Delta E$ values were smaller than those determined from measured data. Some of the discrepancies can be attributed to errors in the experimentally determined noise parameters and to the characteristics of the illumination used. We hope another student can take on this project and compare simulated and measured R, G and B values under various illuminants. We also hope that, ultimately, this simulation can feed into a large-scale, high-fidelity simulation of the image processing pipeline.

Acknowledgements

We would like to acknowledge the support and guidance we received from Zhenyi (Eugene) Liu who helped us set up and run our experiments as well as debug code.

We would also like to thank Dr. Joyce Farrell who proposed this project idea to us and gave us resources to complete the measurements.

Lastly, we would like to acknowledge Professor Brian Wandell and Trisha Lian, who helped to instill important concepts that were necessary to successfully implement our project.

References

[1] Wikipedia. "Color image pipeline". (2017).

[2] B. Wandell. "Lecture #1: Introduction to Image Formation". PSYCH 221: Image Systems Engineering. Stanford University (2017).

[3] J. Redi, W. Taktak, J.L. Dugelay. "Digital image forensics". Multimedia Tools and Applications. 51(1)133-162. (2011).

[4] R. Gow, D. Renshaw, K. Findlater. "A comprehensive tool for modelling CMOS image-sensor-noise performance". IEEE Trans. Electron Devices. 1321-1329. (2007).

[5] P.E. Haralabidis and C. Pilinis. "Linear color camera model for a skylight colorimeter with emphasis on the imaging pipe-line noise performance". J. Electron. Imaging. 14. (2005).

[6] J. Farrell, P.B. Catrysse, B. Wandell. "Digital camera simulation". Applied Optics. 51(4)80-90. (2012).

[7] B. Wandell. "Lecture #5: Image Processing". PSYCH 221: Image Systems Engineering. Stanford University (2017).

[8] I. Calizo. "Reset noise in CMOS image sensors". San Jose State University. (2005).

[9] J. Farrell, M. Okincha and M. Parmar. "Sensor calibration and simulation". Proc. SPIE 6817. 68170R (2008).

[10] CMOS solid state SONY IMX123LQT-C image sensor datasheet.

[11] Digital Camera Reviews and Sensor Performance Summary (ClarkVision)

Appendix I

Slides presented to Prof. Brian Wandell, Dr. Joyce Farrell and Trisha Lian (with some minor modifications) can be found here: slides

The code and data can be accessed here: Code

The CMOS solid state SONY IMX123LQT-C image sensor datasheet is located here: File:SONY IMX123LQT-C.pdf

Appendix II

The division of work was as follows: Ashwini and Marta both participated in setting up and running experiments to capture the images necessary to get noise and spectral sensitivity characteristics. Both of us worked on analysis and writing code. Marta focused on the noise analysis whereas Ashwini focused on validating the acquired spectral sensitivities. Both of us contributed to the presentation and project documentation.
