Objective Measures for Visually Lossless Testing of RGBG Displays

Introduction

Image compression is widely used in applications that store or transmit high-resolution images with large data content. To achieve a substantial reduction in bits per frame, compression is often lossy, so the image reproduced after decompression can differ from the original. Minimizing perceivable artifacts is therefore a key goal of an effective compression algorithm, and this can only be evaluated through an accurate assessment of image fidelity. In this project, my goal is to evaluate the fidelity of reconstructed images as displayed to viewers on RGBG Pentile displays.

Background

Image fidelity can be evaluated objectively or subjectively. In the subjective approach, naive participants evaluate the quality of compression techniques as presented to them in a lab-controlled environment. In contrast, objective evaluations are independent of individual judgement. They are obtained by applying a display model to the images and measuring the artifacts in the perceptually uniform CIELAB color space. For the quality assessment of color images, the CIELAB ΔE metric is a commonly used tool derived from perceptual measurements of color discrimination between large uniform patches. However, CIELAB metrics are not suitable for measuring the fidelity of natural images, since studies have found that human color discrimination depends on the spatial content of the image [1], [2]. The S-CIELAB metric proposed in [3] is a spatial extension of CIELAB that incorporates the spatial frequency sensitivities of the three opponent color channels by adding a spatial pre-processing step before the standard CIELAB ΔE calculation. However, S-CIELAB assumes that all three subpixels are co-located, which does not hold for real displays. This project focuses on the Pentile RGBG pattern, a more complex subpixel layout (Fig. 1) used in AMOLED displays such as the Samsung Galaxy Tab S. Hence, the subpixel pattern must be accounted for when evaluating image fidelity on such displays, as opposed to displays with regular RGB stripes.
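For reference, the per-pixel CIELAB ΔE calculation that both S-CIELAB and ISETBIOLAB end with can be sketched as below. This is a minimal sketch that assumes the CIE 1976 ΔE*ab formula and a D65 reference white normalized to Y = 100; the report does not state which ΔE variant or white point the project code uses, and the function names are hypothetical.

```python
import numpy as np

# Assumed reference white (CIE D65, Y normalized to 100). In practice the
# display's own white point would typically be used instead.
D65_WHITE = np.array([95.047, 100.0, 108.883])


def _f(t):
    """CIELAB compressive nonlinearity, with the linear segment near black."""
    delta = 6.0 / 29.0
    return np.where(t > delta**3, np.cbrt(t), t / (3 * delta**2) + 4.0 / 29.0)


def xyz_to_lab(xyz, white=D65_WHITE):
    """Convert an (..., 3) array of XYZ values to CIELAB (L*, a*, b*)."""
    xyz = np.asarray(xyz, dtype=float)
    fx, fy, fz = (_f(xyz[..., i] / white[i]) for i in range(3))
    L = 116.0 * fy - 16.0
    a = 500.0 * (fx - fy)
    b = 200.0 * (fy - fz)
    return np.stack([L, a, b], axis=-1)


def delta_e_ab(xyz_ref, xyz_test, white=D65_WHITE):
    """Per-pixel CIE 1976 color difference (Delta E*ab) between two XYZ images."""
    diff = xyz_to_lab(xyz_ref, white) - xyz_to_lab(xyz_test, white)
    return np.sqrt(np.sum(diff**2, axis=-1))


# Usage: compare an "original" XYZ image with a slightly brighter "reconstruction".
ref = np.full((2, 2, 3), [41.24, 21.26, 1.93])
error_map = delta_e_ab(ref, ref * 1.02)
print(error_map.shape)
```

S-CIELAB would apply its opponent-channel spatial filtering to the images before this step; that filtering is omitted here.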

Methods

ISETBIOLAB: ISETBIO + CIELAB

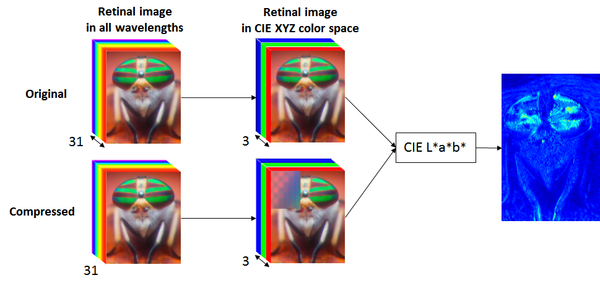

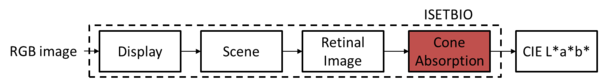

Our proposed solution is to analyze the displayed images with the ISETBIO toolbox to account for the human visual system, as illustrated in the figure above. The display renders the image by low-pass filtering to minimize color aliasing, down-sampling the R and B subpixels, and tilting the subpixel pattern. The display gamma curve is also applied. The scene is modeled as the spectral radiance emitted at each display coordinate, sampled at 31 wavelengths across the visible range. The retinal image is then formed by modeling the optics of the eye, including chromatic aberration. After the retinal image has been computed at all wavelengths, it is converted to the CIE XYZ color space, and CIELAB is used to compare the compression artifacts against the original image.
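The display-rendering step described above (low-pass filtering, down-sampling R and B, then applying the display gamma) can be illustrated with a toy sketch. It assumes a simplified checkerboard RGBG layout in which every logical pixel keeps its G subpixel and R and B alternate between pixels, a 3x3 binomial low-pass filter, and a pure power-law gamma of 2.2. None of these choices come from the report, the tilt of the subpixel pattern is not modeled, and the function names are hypothetical; this is not the ISETBIO display model.

```python
import numpy as np


def binomial_blur(channel):
    """3x3 binomial low-pass filter ([1, 2, 1] / 4 in each direction), edge-padded."""
    k = np.array([1.0, 2.0, 1.0]) / 4.0
    padded = np.pad(channel, 1, mode="edge")
    # Separable filtering: along rows first, then along columns.
    rows = k[0] * padded[:, :-2] + k[1] * padded[:, 1:-1] + k[2] * padded[:, 2:]
    return k[0] * rows[:-2, :] + k[1] * rows[1:-1, :] + k[2] * rows[2:, :]


def render_rgbg(img, gamma=2.2):
    """Toy RGBG rendering of an (H, W, 3) image with digital values in [0, 1].

    Every logical pixel keeps its G subpixel; R and B are low-pass filtered
    (to reduce color aliasing) and then kept on alternating pixels in a
    checkerboard. Returns per-subpixel linear light, zero where a subpixel
    is absent. The checkerboard layout and gamma value are assumptions.
    """
    img = np.asarray(img, dtype=float)
    h, w, _ = img.shape
    yy, xx = np.mgrid[0:h, 0:w]
    has_red = (yy + xx) % 2 == 0  # R on "even" pixels, B on the others
    out = np.zeros_like(img)
    out[..., 1] = img[..., 1]
    out[..., 0] = np.where(has_red, binomial_blur(img[..., 0]), 0.0)
    out[..., 2] = np.where(~has_red, binomial_blur(img[..., 2]), 0.0)
    # Assumed power-law display gamma: digital value -> relative emitted light.
    return np.clip(out, 0.0, 1.0) ** gamma


# Usage: a uniform mid-gray 4x4 patch.
rendered = render_rgbg(np.full((4, 4, 3), 0.5))
```

Because R and B are each present on only half of the pixels, they are filtered before subsampling so that fine detail does not alias into false color; in the full pipeline the emitted light would then be expanded into spectral radiance using the display primaries.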

My Contribution

This technique was recently implemented by my colleagues on the Samsung Display team, and the objective results were compared with a subjective test. They concluded that using ISETBIO (on RGB and RGBG displays) eliminates the false alarms produced by the conventional S-CIELAB method (which does not render the display subpixel pattern), while increasing the detection rate of flicker. In this project, the model was enhanced by computing the photoreceptor absorption response to the retinal image before converting the image to the CIE XYZ color space and applying CIELAB.
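The new step maps cone responses back to XYZ so that the existing CIELAB comparison can be reused. A minimal sketch of that mapping is shown below, assuming the cone absorptions have already been interpolated (demosaicked) into full per-pixel L, M and S planes and that a linear 3x3 transform is adequate. The inverse of the Hunt-Pointer-Estevez XYZ-to-LMS matrix is used purely as a stand-in; the report does not say which transform the project code or ISETBIO uses, and `lms_to_xyz` is a hypothetical name.

```python
import numpy as np

# Stand-in XYZ -> LMS matrix (Hunt-Pointer-Estevez). This is an assumption,
# not necessarily the transform used in the project code.
XYZ_TO_LMS = np.array([
    [0.38971, 0.68898, -0.07868],
    [-0.22981, 1.18340, 0.04641],
    [0.00000, 0.00000, 1.00000],
])
LMS_TO_XYZ = np.linalg.inv(XYZ_TO_LMS)


def lms_to_xyz(lms):
    """Map an (..., 3) array of per-pixel L, M, S responses to CIE XYZ."""
    return np.asarray(lms, dtype=float) @ LMS_TO_XYZ.T


# Usage: a round trip on a small 2x2 "image" recovers the XYZ input.
xyz = np.array([[[20.0, 30.0, 40.0], [5.0, 6.0, 7.0]],
                [[50.0, 50.0, 50.0], [1.0, 2.0, 3.0]]])
lms = xyz @ XYZ_TO_LMS.T
recovered = lms_to_xyz(lms)
```

Because the same linear map is applied to the original and the reconstructed image, any differences that survive the cone stage carry through unchanged into the XYZ images compared by CIELAB.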

Eye Movement

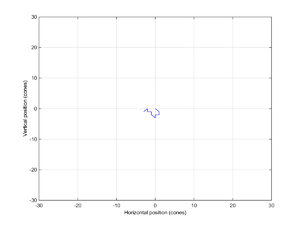

To compute the photoreceptor response, eye movement is taken into account. It is known that the eye is never still, even during fixation [4]. There are three main forms of eye movement during visual fixation: tremor, drift, and microsaccades. Tremor is an aperiodic, wave-like motion of the eye with a frequency between 60 and 100 Hz [4]. The spatial amplitude of tremor is about the diameter of a cone [4]. In ISETBIO, the timing and the position of tremor are modeled with Gaussian and uniform random variables. Drift is superimposed on tremor and is a slow motion of the eye that occurs in the epochs between microsaccades [4]. During drift, the image can move across a dozen photoreceptors [4]. In ISETBIO, drift is modeled as 2D Brownian motion. Microsaccades are small, jerk-like eye movements that carry the image across a range of several dozen to several hundred photoreceptors and are about 25 msec in duration [4]. In ISETBIO, tremor and drift are suppressed while a microsaccade is present. In this project, all parameters for these three types of eye movement are set to the ISETBIO defaults. Using these parameters, a fixed sequence of 20 eye positions, each lasting 1 msec, is obtained (Fig. 4) and is used for both the original and the reconstructed image.
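A toy version of such a fixed eye-position sequence is sketched below: drift as 2D Brownian motion, plus tremor events that occur at random times with a uniformly distributed displacement of up to about one cone diameter. Microsaccades are omitted, since they are rare within a 20 msec window. The parameter values (`drift_sigma`, `tremor_rate_hz`, `tremor_amp`), the one-pixel-per-cone assumption, and both function names are hypothetical; this is not ISETBIO's eye-movement code or its default parameters. Fixing the random seed is what lets the same path be reused for the original and the reconstructed image.

```python
import numpy as np


def fixational_path(n_steps=20, dt_ms=1.0, drift_sigma=0.05,
                    tremor_rate_hz=80.0, tremor_amp=1.0, seed=0):
    """Toy 2-D fixational eye-movement path, in units of cone diameters.

    drift  : 2-D Brownian motion (Gaussian increments with variance ~ dt)
    tremor : at random steps (Bernoulli with rate tremor_rate_hz), a
             displacement drawn uniformly from [-tremor_amp, tremor_amp]
    Microsaccades are not modeled. All parameter values are illustrative.
    """
    rng = np.random.default_rng(seed)
    drift_steps = rng.normal(0.0, drift_sigma * np.sqrt(dt_ms), size=(n_steps, 2))
    drift = np.cumsum(drift_steps, axis=0)
    p_tremor = tremor_rate_hz * dt_ms / 1000.0  # probability of a tremor event per step
    fires = rng.random(n_steps) < p_tremor
    jitter = rng.uniform(-tremor_amp, tremor_amp, size=(n_steps, 2))
    tremor = np.where(fires[:, None], jitter, 0.0)
    return drift + tremor


def integrate_over_path(img, path):
    """Average an image over the eye positions (rounded to whole pixels,
    assuming one pixel per cone): a crude stand-in for accumulating cone
    absorptions while the image moves across a fixed cone mosaic."""
    shifts = np.rint(path).astype(int)
    return np.mean([np.roll(img, (dy, dx), axis=(0, 1)) for dy, dx in shifts], axis=0)


# Usage: one fixed path, applied identically to both images.
path = fixational_path()
original = np.ones((8, 8))
reconstructed = original.copy()
orig_resp = integrate_over_path(original, path)
recon_resp = integrate_over_path(reconstructed, path)
```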

Results

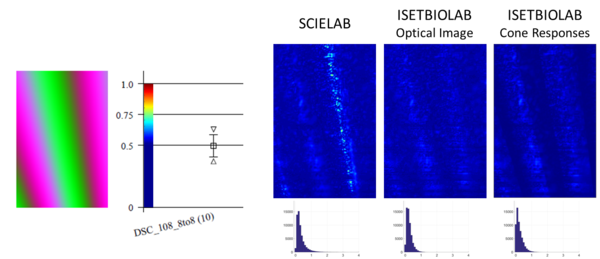

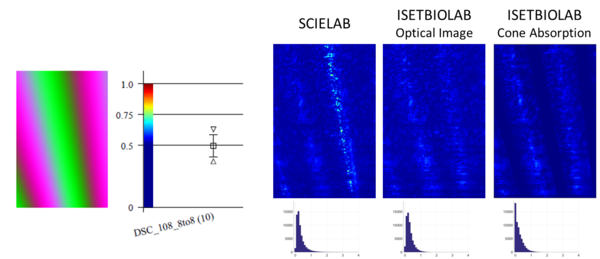

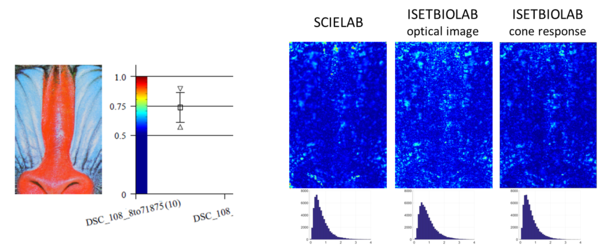

Here we show the results for several reference original/compressed image pairs. For each image, the error map is shown for three approaches: 1) S-CIELAB (without rendering the subpixel pattern), 2) ISETBIO+CIELAB over the optical image (previous work), and 3) ISETBIO+CIELAB over cone absorptions (this project). For each image, the result of the subjective test done by York University (2015) is also shown; that test was done only on RGB displays over 30 pixels/degree.

Comparing ISETBIO+LAB on the optical image with S-CIELAB (without subpixel rendering) shows that the false alarms in the S-CIELAB error map are eliminated by ISETBIOLAB, as reported in the previous work by Samsung Display. The false alarms occur over dark regions, where S-CIELAB appears to be overly sensitive to any difference. According to the subjective test, viewers cannot detect the errors that S-CIELAB predicts to be noticeable in these regions. Comparing ISETBIO+LAB on the optical image with ISETBIO+LAB on the cone absorption image shows that the cone absorption model follows the same trend in eliminating false alarms. In addition, it removes most of the scattered errors across the image, as they are blurred into the visually lossless region, while it retains the intensity of the other errors. As a result, some errors become more prominent relative to the rest of the image in ISETBIO+LAB on cone absorptions, which makes high-flicker regions easier to detect. To correlate these results with subjective tests, a new subjective test is needed in which viewers point out the location of any flicker they observe in the image. This would verify whether the locations emphasized by the cone absorption model also match subjective perception.
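The effect described above, where scattered errors fade into the visually lossless region while clustered errors keep their intensity, can be reproduced with a toy error map. In this sketch a 5x5 box filter stands in for the spatial pooling introduced by the optics and the cone stage, and a ΔE threshold of 1 is used as a hypothetical visibility criterion; neither value comes from the report, and `box_blur` is a hypothetical helper.

```python
import numpy as np


def box_blur(err, radius=2):
    """Mean filter over a (2r+1)x(2r+1) window, zero-padded at the borders."""
    size = 2 * radius + 1
    padded = np.pad(err, radius, mode="constant")
    out = np.zeros_like(err, dtype=float)
    for dy in range(size):
        for dx in range(size):
            out += padded[dy:dy + err.shape[0], dx:dx + err.shape[1]]
    return out / size**2


err = np.zeros((21, 21))
err[2, 2] = 10.0            # an isolated, scattered error
err[11:18, 11:18] = 10.0    # a spatially clustered error (7x7 block)

blurred = box_blur(err)
visible = blurred > 1.0     # hypothetical detection threshold in Delta E units
```

After blurring, the isolated error drops to 10/25 = 0.4 and falls below the threshold, while the center of the 7x7 block still reads 10, so only the clustered region would be flagged as visible.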

Comparing the ISETBIO results for RGB and RGBG displays, we can see that more flicker is detected on RGBG displays than on RGB displays, with both the optical image and the cone absorption image.

Conclusion

In this project, an existing model called ISETBIOLAB (ISETBIO+CIELAB), developed by Samsung Display Lab for objective visually lossless testing of RGBG displays, was enhanced by taking into account the photoreceptor response to the optical image. The photoreceptor response is converted to an XYZ image so that CIELAB can be applied as in the existing model. The error maps after adding the photoreceptor response follow the same trend as before in eliminating the false alarms produced by the conventional S-CIELAB approach. Note that in this project, the S-CIELAB approach does not render the subpixel pattern, since conventionally it does not. Another comparison is possible by applying S-CIELAB to the subpixel-rendered image, skipping ISETBIO and the cone absorption response. This comparison is beyond the scope of this project and would be an interesting follow-up study.

In this project, the enhanced model was tested on nine images on RGB and RGBG displays, two of which are presented here. In all cases, ISETBIOLAB over cone absorptions was observed to provide a more refined error map than ISETBIOLAB over the optical image. In other words, some scattered errors in the previous model are de-emphasized as they merge into the visually lossless region, while others remain prominent. Hence, the enhanced model makes it easier to differentiate flickering regions from visually lossless regions. It is plausible that, due to the sparse sampling of the photoreceptor mosaic, our eye cannot detect all of the potentially flickering areas. Also, the fact that some errors are blurred while others are not may reflect the uneven placement of S-cones compared to L- and M-cones.

This project also confirms a conclusion drawn by the previous work: images tend to be more visually lossy on RGBG displays than on RGB displays. Comparing the error maps for RGB and RGBG displays, we can see more flickering areas and higher error intensities on RGBG displays.

Acknowledgement

I would like to thank Samsung Display Lab, especially Gregory W. Cook, for proposing this topic for my project and, more importantly, for providing me with the previous analysis and source code, which was primarily developed by Javier Ribera Prat while he was an intern at Samsung Display Lab in Summer 2017. Many thanks also to Brian, Joyce and Trisha for their valuable and constructive comments on how to improve the quality of this study in the future.

References

[1] C. Noorlander and J. J. Koenderink, “Spatial and temporal discrimination ellipsoids in color space,” Journal of the Optical Society of America, 73, 1533-1543, 1983.

[2] A. B. Poirson and B. A. Wandell, “Appearance of colored patterns: pattern-color separability,” Journal of the Optical Society of America A, 10(12), 2458-2470, 1993.

[3] X. Zhang and B. A. Wandell, “A spatial extension of CIELAB for digital color image reproduction,” Journal of the SID, March 1997.

[4] S. Martinez-Conde, S. L. Macknik and D. H. Hubel, “The role of fixational eye movements in visual perception,” Nature Reviews Neuroscience, 5, 229-240, March 2004.

Appendix

The presentation slides can be found here: Presentation

The two main MATLAB scripts can be found here: Codes