RomeroPrabalaWan

Introduction

Material classification combines the fields of computer vision and machine learning in an attempt to emulate the inner workings of the human eye and brain. It attempts to answer the following question: given an image, what materials are present and can be identified?

There have been efforts to answer this question relying solely on RGB images. These generally involve pre-processing a database of images to extract additional information about the material, then using all of that information to train a classifier [1]. The pre-processing steps range from a simple high-pass filter to computing a spectral histogram of the image [2]. The goal of evaluating pre-processing techniques is to select the most useful features for training a classifier. Although the choice of pre-processing technique is a major contributor to the effectiveness of a material classifier, we focused instead on the effectiveness of depth and infrared data as additional features. More information about related work can be found in [3] and [4].

Our project aimed to train a classifier using a combination of RGB, depth, and infrared images. We evaluated the performance of different classifier algorithms, as well as the effects each feature had on the accuracy. More specifically, we captured and labeled our own images using two models of Intel RealSense cameras, which provided the additional depth and infrared data. In order to demonstrate a proof of concept, we used only two labels: skin and not-skin.

Background

Generating a 2D image with depth data can be done with various techniques that fall under range imaging. The earliest approach emulates the human visual system by using stereo cameras and reconstructing the scene from the differences between the two images. Reconstructing the scene relies heavily on epipolar geometry. However, this technique cannot reliably determine the depth of a uniform surface, because finding corresponding points on such a surface is nearly impossible. It also struggles when occlusions are present, as one image will contain information not present in the other. Repetitive elements are another weakness of stereo algorithms, since a given point can have many candidate matches. The long-range camera used in this project uses stereo infrared cameras to generate depth data.
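For reference, the standard relation for a rectified stereo pair makes the correspondence problem concrete. This is a textbook result rather than something stated in the original report, and the symbols $f$ (focal length in pixels), $B$ (baseline between the two cameras), and $d$ (disparity) are introduced here only for illustration:

```latex
Z = \frac{f \, B}{d}, \qquad d = x_{\text{left}} - x_{\text{right}}
```

Depth $Z$ can only be computed where a disparity $d$ can be measured, which requires matching a point in the left image to the same point in the right image. On a uniform surface or a repetitive pattern that match is ambiguous, so $d$, and therefore $Z$, is unreliable.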

The short-range camera used in this project generates depth data through structured light. This process works by projecting a carefully chosen infrared light pattern (or series of patterns) onto a scene. Depth is calculated from the distortion of the pattern when it hits objects in the scene. The pattern is chosen so that each part of it can be uniquely identified. The downside of this approach is the limited resolution of the depth data. Depth can only be calculated for points illuminated by the pattern, and the granularity of the pattern determines how many distinct depths can be calculated. As a result, interpolation is sometimes used to estimate the depth for the remaining parts of the image [5]. Hence, this technique is only used for shorter-range applications, where resolution is less likely to become an issue.

The final relevant technique for measuring scene depth is time of flight, most notably used by Microsoft's Kinect V2. It works by measuring the delay between the time light is emitted onto the scene and the time its reflection is detected back at the sensor. This measurement is done periodically, so for scene objects that are farther away from the camera, the light might return to the sensor after the next period has already started. This can lead to ambiguity in the depth of parts of the scene. Time of flight was not used by any of the cameras in this project.
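The ambiguity can be made concrete with the usual round-trip relation. This is a simplified pulsed model offered as an assumption for illustration; the symbols $c$ (speed of light), $\Delta t$ (measured delay), and $T$ (measurement period) do not appear in the original report:

```latex
d = \frac{c \, \Delta t}{2}, \qquad d_{\max} = \frac{c \, T}{2}, \qquad d_{\text{reported}} = d \bmod d_{\max}
```

Under this model, an object farther away than $d_{\max}$ returns its light after the next period has begun, so it cannot be distinguished from a closer object at $d \bmod d_{\max}$.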

Methodology and Tools

The methodology for our skin versus not-skin classification study was as follows:

- Gather image data using the short-range (F200) and long-range (R200) Intel RealSense cameras. The F200 has three components: a 1080p RGB camera, an infrared camera, and an infrared laser projector for measuring depth. The R200 uses two infrared cameras to capture depth images, and has a 1080p RGB camera. Our images either consisted entirely of a non-skin surface (such as a carpet), or had skin in the foreground (such as a hand or a forearm). Examples of the R200 and F200 can be seen in Figures 1 and 2, respectively.

- Pre-process the images before using them for classification.

- Train a supervised learning classifier with a subset of the dataset and test the classifier on the remainder of the dataset.

- Use a heatmap to visualize prediction accuracy.

These steps are detailed further in the following section.

Data Acquisition and Pre-processing Pipeline

Figure 3 shows the processing pipeline's four steps.

- The first step is to capture the RGB and depth images with the long-range camera, and the RGB, depth, and IR images with the short-range camera. This is done using the RealSense SDK's raw camera application, with a small modification to capture the streamed image. Figures 4, 5, and 6 show sample RGB, depth, and infrared images, respectively, from the dataset taken with the short-range camera.

- The second step is to resize all the images. The images need to have a one-to-one pixel mapping to each other, since they will be passed to the classifiers as matrices. We chose to downsize the images so as to not create artificial pixels/features. The new image size of 600x400 is slightly smaller than the raw size of the IR and depth images, and allows for easier tiling, which is explained in the next step.

- The third step is to break the image into smaller "tiles". As shown in Figure 7, we chose to partition each image into 100 tiles to make classification and prediction on the images computationally feasible for a personal computer. However, the tiling can be made more fine-grained, which has the potential to improve the classifier's prediction ability. Partitioning the image into tiles also increased our dataset size.

- The fourth step is to label the tiled images as skin, not-skin, or unknown (denoted as a question mark in Figure 3). We manually labeled all of the tiles to be as consistent as possible. In particular, tiles that were on the border between skin and not-skin were marked as unknown and not included in our classifier, since they would lead to inconsistent predictions and results.
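The tiling step can be sketched as follows. This is a minimal illustration, not the project's actual code: it assumes NumPy, a 10x10 grid (the report states 100 tiles but not the grid shape), and raw pixel values concatenated across modalities as the feature vector. The function names `tile_image` and `tile_features` are hypothetical.

```python
import numpy as np

def tile_image(image, rows=10, cols=10):
    """Split an H x W (x C) array into rows*cols equally sized tiles, row-major."""
    height, width = image.shape[:2]
    if height % rows or width % cols:
        raise ValueError("image size must be divisible by the tile grid")
    tile_h, tile_w = height // rows, width // cols
    return [
        image[r * tile_h:(r + 1) * tile_h, c * tile_w:(c + 1) * tile_w]
        for r in range(rows)
        for c in range(cols)
    ]

def tile_features(rgb, depth, infrared=None, rows=10, cols=10):
    """Build one flat feature vector per tile by concatenating the modalities."""
    modalities = [rgb, depth] + ([infrared] if infrared is not None else [])
    per_modality = [tile_image(m, rows, cols) for m in modalities]
    return np.stack([
        np.concatenate([tiles[i].ravel() for tiles in per_modality])
        for i in range(rows * cols)
    ])

# Synthetic stand-ins for one resized 600x400 capture (width x height).
rgb = np.zeros((400, 600, 3), dtype=np.uint8)
depth = np.zeros((400, 600), dtype=np.uint16)
infrared = np.zeros((400, 600), dtype=np.uint8)

features = tile_features(rgb, depth, infrared)
print(features.shape)  # (100, 12000)
```

Because 600 and 400 are both divisible by 10, every tile is exactly 60x40 pixels, which is one way to read the remark that the chosen size "allows for easier tiling". Tiles labeled unknown would be dropped from `features` before training.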

Model Selection

We evaluated three separate models in our exploration. Given that the problem was explicitly one of binary classification, we did not include image segmentation or unsupervised learning algorithms in our search. However, with proper structure, those could also be applied to our data set.

- Support Vector Machine (SVM)

SVMs are an industry standard for classification, and in practice have performed very well on images. However, the major difficulty with SVMs is that they tend to overfit the training data set or require significant hyper-parameter tuning. Originally, we used a Gaussian kernel, but found that the SVM overfit quite heavily even after adjusting the C parameter. Thus, we switched to a linear kernel, which restricts the model to a linear decision boundary. The SVM performed adequately, though it was overshadowed by the other models that we selected.

- Logistic Regression

Logistic Regression is another classic learning algorithm traditionally applied to classification. After spending some time tuning, we found that the model performed best when it used the stochastic average gradient solver rather than the other available solvers. Like the SVM, it was overshadowed by the final model we examined.

- Random Forest Classifier

The Random Forest Classifier is an example of an ensemble learner. Rather than attempting to construct the single best "judge," ensemble learners combine the outputs of multiple judges drawn from random probability distributions in order to make a classification judgement. Statistically, the average of many judges tends to outperform any single judge. As illustrated in Figure 8, each "judge" in the Random Forest is a decision tree, with features drawn from the dataset at each node in the tree until there are no more features to draw. The result is a multitude of decision trees whose outputs are combined into the final labeling decision. In general, a Random Forest with more trees performs better. However, the tradeoff is in runtime: with more trees, the algorithm takes longer to reach a decision. We chose to use 500 trees in order to get runtimes similar to those of the other two algorithms.

- Analysis and Train/Test Split

The random forest performed the best on our data set, so we elected to use it in all of our results analysis and data generation. When we tested the model across the various image types, we trained on 80% of the data, and tested on the other 20%. To generate a heatmap, the model was trained on all of the other images in the dataset and the test set consisted only of the image we wished to map onto.
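The model comparison described above might look like the following sketch. It assumes scikit-learn, which the report does not name. Only the linear kernel, the stochastic average gradient solver (`solver="sag"`), the 500 trees, and the 80/20 split come from the text; the feature scaling, `C=1.0`, `max_iter=1000`, the helper name `compare_models`, and the synthetic data are illustrative assumptions.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

def compare_models(X, y, seed=0):
    """Train each model on 80% of the tiles; return its skin F1-score on the other 20%."""
    X_train, X_test, y_train, y_test = train_test_split(
        X, y, test_size=0.2, random_state=seed
    )
    models = {
        # Scaling is an assumption; it mainly helps the SAG solver converge.
        "svm_linear": make_pipeline(StandardScaler(), SVC(kernel="linear", C=1.0)),
        "logreg_sag": make_pipeline(
            StandardScaler(), LogisticRegression(solver="sag", max_iter=1000)
        ),
        "random_forest": RandomForestClassifier(n_estimators=500, random_state=seed),
    }
    scores = {}
    for name, model in models.items():
        model.fit(X_train, y_train)
        scores[name] = f1_score(y_test, model.predict(X_test))
    return scores

# Synthetic stand-in data: 400 "tiles" with 20 features each, label 1 = skin.
rng = np.random.default_rng(0)
y = rng.integers(0, 2, size=400)
X = rng.normal(size=(400, 20)) + y[:, None] * 1.5

scores = compare_models(X, y)
for name, score in scores.items():
    print(f"{name}: F1 = {score:.3f}")
```

For the heatmaps, the same models would instead be fit on the tiles of every image except one and asked to predict the tiles of the held-out image.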

Results

Performance Metrics

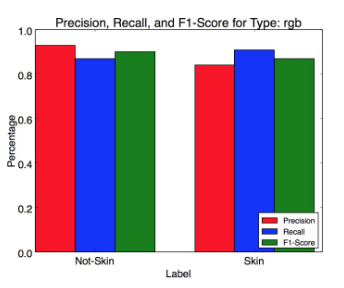

The classifier's results can be divided into three different categories: hits, misses, and false alarms. As shown in Figure 9, a hit is defined to be a true positive (skin label classified as skin), a miss is defined to be a false negative (skin label classified as not-skin), and a false alarm is defined to be a false positive (not-skin label classified as skin). We used three standard classifier metrics to measure the performance of our classifier: precision, recall, and F1-Score.

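- Precision is the classifier's ability to not label a not-skin tile as skin, and is defined as:

$$\text{Precision} = \frac{\text{hits}}{\text{hits} + \text{false alarms}}$$

- Recall is the classifier's ability to find all of the skin tiles, and is defined as:

$$\text{Recall} = \frac{\text{hits}}{\text{hits} + \text{misses}}$$

- F1-Score is the harmonic mean of precision and recall, and is defined as:

$$F_1 = 2 \cdot \frac{\text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}}$$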
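As a small worked example, the three metrics can be computed directly from counts of hits, misses, and false alarms. The helper name `skin_metrics` and the convention of returning 0.0 when a denominator is zero are assumptions made for this sketch:

```python
def skin_metrics(true_labels, predicted_labels, positive="skin"):
    """Return (precision, recall, f1) for the positive class from paired labels."""
    pairs = list(zip(true_labels, predicted_labels))
    hits = sum(t == positive and p == positive for t, p in pairs)
    misses = sum(t == positive and p != positive for t, p in pairs)
    false_alarms = sum(t != positive and p == positive for t, p in pairs)

    # Guard against empty denominators (assumed convention: report 0.0).
    precision = hits / (hits + false_alarms) if hits + false_alarms else 0.0
    recall = hits / (hits + misses) if hits + misses else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1

truth = ["skin", "skin", "skin", "not-skin", "not-skin", "not-skin"]
preds = ["skin", "skin", "not-skin", "skin", "not-skin", "not-skin"]
precision, recall, f1 = skin_metrics(truth, preds)
print(precision, recall, f1)
```

Here there are two hits, one miss, and one false alarm, so precision, recall, and F1-Score all come out to 2/3. Passing `positive="not-skin"` gives the corresponding metrics for the not-skin class.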

Long-Range Camera Classifications

Figures 10 to 12 show examples of the random forest classifier's performance as a heatmap overlaid on the raw long-range camera RGB images. For some images, such as Figure 12, the classifier was able to identify almost all skin tiles. However, as shown in Figures 10 and 11, there were several images for which the classifier had a large number of misses and false alarms. Surprisingly, some of the misses occurred on tiles that were completely skin (i.e. not on the edge of skin and not-skin). We attribute this to the lighting conditions under which the skin was exposed, which may have made the pixels look similar to the background (such as a light carpet).
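One way to prepare such a heatmap is to arrange the per-tile outcomes back into the tile grid before coloring them. The report does not describe how the overlay was rendered, so the following is only a sketch: the function name `outcome_grid`, the row-major tile order, and the "correct rejection" label for correctly predicted not-skin tiles are assumptions.

```python
def outcome_grid(true_labels, predicted_labels, rows=10, cols=10):
    """Arrange per-tile outcomes (row-major tile order) into a rows x cols grid."""
    if len(true_labels) != rows * cols or len(predicted_labels) != rows * cols:
        raise ValueError("expected one label per tile")

    def outcome(truth, predicted):
        if truth == "unknown":
            return "unknown"  # border tiles were excluded from classification
        if truth == "skin":
            return "hit" if predicted == "skin" else "miss"
        return "false alarm" if predicted == "skin" else "correct rejection"

    flat = [outcome(t, p) for t, p in zip(true_labels, predicted_labels)]
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]

# Tiny 2x3 example instead of the full 100-tile grid.
truth = ["skin", "skin", "unknown", "not-skin", "not-skin", "skin"]
preds = ["skin", "not-skin", "skin", "skin", "not-skin", "skin"]
grid = outcome_grid(truth, preds, rows=2, cols=3)
for row in grid:
    print(row)
```

Each cell can then be mapped to a color and drawn over the matching tile of the RGB image.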

The random forest classifier's performance for each long-range camera feature type is shown in Figures 13 to 15. The three left bars on each graph show that the classifier predicts not-skin with high accuracy (over 90% F1-score for all three feature types). The three right bars on each graph suggest the classifier can find the majority of the skin tiles, especially when using RGB and depth as the feature types. As expected, using only depth as a feature type did not lead to an accurate prediction of skin tiles. However, we noted that the large number of misses led to a low recall for skin, and the precision of the skin predictions was not as high as that of the not-skin predictions. The long-range camera's dataset had a large number of not-skin tiles, and even when we used 20% of the not-skin tiles for the training set (in addition to the skin tiles used for training), the classifier seemed to lean towards predicting not-skin, which turned out to be right the majority of the time.

Short-Range Camera Classifications

Figures 16 to 18 show examples of the random forest classifier's performance as a heatmap overlaid on the raw short-range camera RGB images. Compared to the long-range camera's heatmaps, the classifier has fewer misses and false alarms when using the short-range camera's data for feature inputs. In particular, Figure 16 had an almost perfect classification of both skin and not-skin. The short-range camera's dataset had a better balance of not-skin versus skin images, which contributed to more accurate predictions by the classifier. In addition, the use of infrared images, which were not available with the long-range camera, helped the classifier further differentiate between skin and not-skin.

The random forest classifier's performance for each short-range camera feature type is shown in Figures 19 to 25. As with the long-range camera, the classifier was able to predict not-skin tiles with high accuracy. As expected, the lowest skin classification accuracy came from using only infrared or only depth feature types. When used alone, these two feature types do not encode significant information that the classifier can use to differentiate between skin and not-skin tiles. However, as shown in Figure 25, using all three feature types (RGB, depth, and infrared) resulted in a high F1-score for both not-skin and skin classification. Thus, when combined with RGB features, both infrared and depth can improve the classifier's ability to differentiate between skin and not-skin.

Future Work

Our data set could be improved to include a larger variety of scenes. The RealSense cameras require USB 3.0, which was only available on our desktop PCs. Hence, we were only able to take images in a very limited number of locations. We would like to explore different lighting conditions and different skin pigments to better train our classifier. In addition, instead of focusing solely on binary classification labels, we would like to expand to multiple classification labels. Other materials we considered include carpet and wood, which have colors similar to skin. This way, we can evaluate how effective our classifier would be given materials with similar RGB values. We also want to further explore the effects depth has on classifier performance. Our initial hypothesis was that depth would not be useful, since skin does not have any interesting depth characteristics. However, because skin was in the foreground of our images, depth may have been helpful in more easily identifying skin tiles. We would like to explore capturing images that include skin as the background, although this scene would be hard to create at a practical level.

We would also like to explore other pre-processing steps that were described in the Introduction section. We tried using histogram equalization on the RGB image as described in [1], but found that it generally reduced the performance of our classifier. In the future, we would like to try bilateral-filtered and high-pass-filtered images as used in [4].

Conclusion

This project investigated the ability of a supervised learning classifier to use RGB, depth, and infrared data for classifying image elements as skin or not-skin. Using the Intel RealSense long-range and short-range cameras, we created a database of images with skin, without skin, and with skin in the foreground. For the long-range camera, we found that RGB features produce the most accurate predictions of skin versus not-skin in an image, closely followed by a combination of RGB and depth features. We believe that adding images with more diverse backgrounds to the image database may improve both the recall and precision of predicting skin tiles. For the short-range camera, we found that the combination of RGB, depth, and infrared features produces nearly perfect predictions with the random forest classifier. Thus, for the short-range camera data, the classifier improves when depth and infrared are added as features in addition to RGB.

The results of this project can be extended to advanced real-world applications, especially real-time systems that need to make inferences about a material's properties from an image. Understanding whether an object should be walked on, picked up, or simply interacted with can be done by using state-of-the-art cameras, such as the cameras used in this project, and an image classification pipeline like the one described in this report. We suggest that future projects build on our framework to make these material classification systems a reality.

References

[1] Ojala, T., & Pietikäinen, M. (n.d.). Texture classification. Retrieved December 15, 2016, from http://homepages.inf.ed.ac.uk/rbf/CVonline/LOCAL_COPIES/OJALA1/texclas.htm

[2] Liu, X., & Wang, D. (2003). Texture classification using spectral histograms. IEEE Transactions on Image Processing, 12(6), 661-670. doi:10.1109/tip.2003.812327

[3] Corbett-Davies, S. (2013). Real-World Material Recognition for Scene Understanding. http://cs229.stanford.edu/proj2013/CorbettDavies-RealWorldMaterialRecognitionForSceneUnderstanding.pdf

[4] Sharan, L., Liu, C., Rosenholtz, R., & Adelson, E. H. (2013, February 19). Recognizing Materials Using Perceptually Inspired Features. International Journal of Computer Vision, 103(3), 348-371. doi:10.1007/s11263-013-0609-0

[5] Time-of-Flight Cameras. (n.d.). Retrieved December 15, 2016, from http://www.computervisiononline.com/books/computer-vision/time-flight-cameras

[6] Lau, D. (n.d.). The Science Behind Kinects or Kinect 1.0 versus 2.0. Retrieved December 15, 2016, from http://www.gamasutra.com/blogs/DanielLau/20131127/205820/The_Science_Behind_Kinects_or_Kinect_10_versus_20.php

Appendices

Appendix I: Code, Data, and Results

All of the code, data, RealSense SDK modifications, heatmaps for all images, and results can be found in the following GitHub repository: [1]

Appendix II: Group Work Partition

- RealSense SDK Modifications: Richard

- Data pre-processing: Francisco and Rahul

- Histogram Equalization: Richard

- Classification algorithms: Francisco, Richard, and Rahul

- Heatmaps: Rahul

- Results: Francisco

- Presentation and Report: Francisco, Richard, and Rahul

Please note that when multiple group members are listed for a single item, they are not listed in any particular contribution order.