Visual Acuity and Simulated Depth of Field Cues on the Oculus Rift DK2

Introduction

Stereoscopic head-mounted displays (HMDs) have recently gained popularity. Most commercial displays, including the Oculus Rift Developer Kit 2 (DK2), consist of an LCD or OLED screen, focusing optics, and head-tracking hardware. In these displays, stereoscopic cues are generated by rendering a slightly different image for each half of the display. The optical configuration, however, stays fixed, so the scene appears most natural when the stereoscopic depth matches the display's virtual focal plane. Deviations from that plane can cause discomfort.

Prior research (Citation) has shown that this effect, known as vergence-accommodation mismatch, causes significant discomfort to users. Our study seeks to reduce the magnitude of these effects by simulating depth cues in the form of depth-of-field blur. Study participants, while wearing the Oculus Rift DK2 HMD, attempted to solve the binocular correspondence problem for interactive scenes with a variety of depths. We recorded and analyzed their performance under randomized application of depth-of-field cues. Recommendations for designers are made in the Conclusions section.

Background

Vergence-Accommodation and Viewer Fatigue

As mentioned above, vergence-accommodation conflict is an active area of research. Vergence refers to the angular rotation of the eyes so that their lines of sight intersect at the object of interest; accommodation refers to the change in the eyes' optical power that brings the correct distance into focus [1]. For many people, vergence and accommodation are reflexively coupled: when the eyes converge on a point, they also reflexively change their optical power to focus at that point. A mismatch between the two in virtual optical systems like the Oculus Rift can cause discomfort in viewers (e.g., imagine your eyes converging on an object far away while being focused as if the object were close).
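To make the two quantities concrete, the sketch below computes the vergence angle for a fixation distance and the mismatch between vergence and accommodation in diopters. The function names are hypothetical, and the 64 mm interpupillary distance and the 1.3 m focal distance in the usage note are illustrative assumptions, not values measured in this study.

```python
import math


def vergence_angle_deg(distance_m: float, ipd_m: float = 0.064) -> float:
    """Angle between the two lines of sight when fixating a point straight ahead.

    ipd_m is an assumed interpupillary distance (64 mm), not a measured value.
    """
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def accommodation_diopters(distance_m: float) -> float:
    """Optical power (in diopters) needed to focus at the given distance."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def conflict_diopters(vergence_distance_m: float, focal_distance_m: float) -> float:
    """Vergence-accommodation mismatch expressed in diopters."""
    return abs(
        accommodation_diopters(vergence_distance_m)
        - accommodation_diopters(focal_distance_m)
    )
```

For example, `conflict_diopters(10.0, 1.3)` gives the mismatch when the eyes converge on an object rendered 10 m away while the optics hold focus at an assumed 1.3 m.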

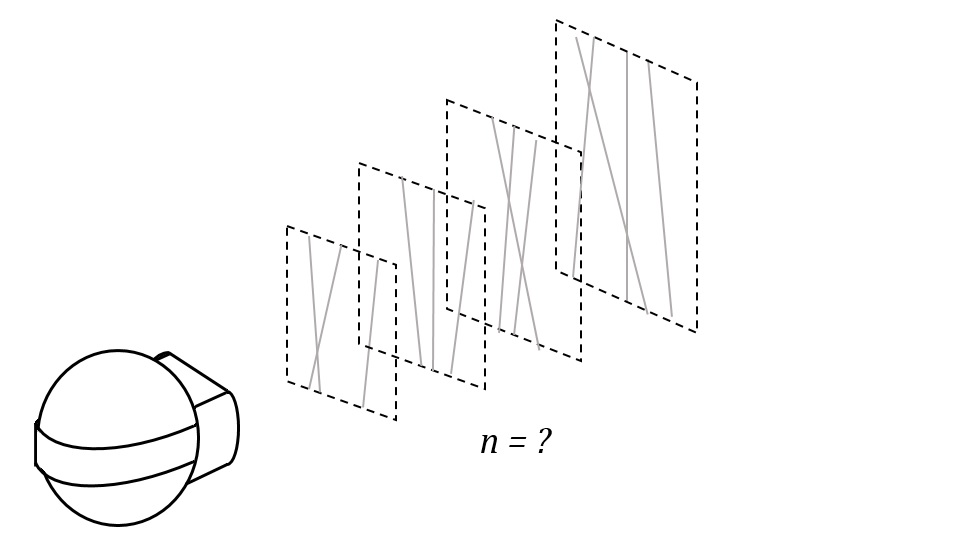

Researchers from UC Berkeley (including Martin Banks and David Hoffman, whom we'll see again) and Microsoft conducted a study using a complex virtual optical system, shown above, that keeps vergence and accommodation synchronized to an acceptable degree. This multi-plane volumetric display uses semi-transparent mirrors and a technique called depth-weighted blending, which displays virtual objects at their appropriate depth in the scene [1]. Half of the trials used this display, and the other half used an adjusted version that kept accommodation distance constant and allowed vergence to vary. In a double-blind study, the researchers asked participants an extensive series of questions to assess their comfort with each system and showed, with statistical significance, that participants using the system that adjusted both vergence and accommodation were more comfortable than those using the fixed-accommodation version [1]. This makes sense: virtual optical systems that introduce as little disparity as possible between vergence and accommodation yield the most natural results. The downsides are that such a system is expensive, difficult to engineer, and likely takes a lot of overhead just to get working. However, we can use this research to devise an alternative method to aid depth perception in the Oculus Rift.

Focus Cues and Depth Perception

In a study from the Journal of Vision in 2005, a group of researchers, including Martin Banks, sought to determine the efficacy of focus cues in discerning depth. The researchers simulated a rotated plane in front of each participant that was textured with some geometry, like a grid or Voronoi pattern [6]. The researchers then projected that virtual plane onto an independently rotated physical monitor. Thus, when both the simulated angle and physical angle of the monitor are the same, this corresponds to a situation we would see in reality wherein focus cues align with geometric cues. When the simulated angle and the real angle of the monitor are different, the brain receives conflicting depth information. For example, with regard to focus, the monitor could look not slanted at all (i.e. everything is in focus), but the geometry of the scene clearly shows a slant. The participants were asked to identify the perceived slant of the scene in degrees both when using binocular and monocular vision. The binocular setup was one such that the brain receives vergence cues as well as focus cues and geometric cues. Interestingly, when using binocular vision, introducing a physical slant to the monitor (i.e. introducing focus cues) had no statistically significant effect; however, this effect was statistically significant when using monocular vision [6].

Focus Cues are Useful

Building on previous research, Martin Banks and David Hoffman conducted another experiment to determine the effect of focus cues on depth perception, this time using the multi-plane volumetric display from the first experiment [4]. A visual of this device is above and to the right, and a visual of the experimental concept is above and to the left. In the experiment, the plane closer to the viewer is frontoparallel and the plane farther from the viewer is slightly rotated about its mid-vertical axis by a variable angle. Both planes are textured with thin lines on a differently colored background, as shown in the figure (but with a higher density of black lines than depicted). The trials were split into two halves. In one half, both planes were displayed on the same depth plane of the volumetric display, so there were no focus cues and everything was in focus. In the other half, the planes were displayed at different focal distances, so that if a participant focused on the far plane, the near plane would be out of focus. The participants' task was to determine whether the far plane was slanted clockwise or counterclockwise with respect to the front plane. Through several iterations of this experimental design, Hoffman and Banks showed, with statistical significance, that participants were better able to determine the far plane's rotation when given focus cues [4].

Given that the Oculus Rift is not a multi-plane, volumetric display like in the mentioned experiments, we wanted to assess whether or not focus cues significantly affect a person’s judgment of distance on a less sophisticated, but more accessible device.

Methods

Setup

Hardware

The experiment was carried out with an Oculus Rift Developer Kit 2 and a 2012 MacBook Pro.

Software

- Windows 7

- Unity 4.6 https://unity3d.com/get-unity/download/archive

- Oculus Software https://developer.oculus.com/downloads/#sdk=pc

  - Oculus SDK for Windows

  - Oculus Runtime for Windows

  - Oculus Unity 4 Integration

Configuration

The correct settings are key to a relatively smooth session with the Oculus DK2. The choice of game engine and programming environment, the firmware version of the device, and even the display refresh rate have a marked effect on the performance of the experiment.

Game Engine

We chose Unity 4.6 as our game engine. We initially investigated using the Oculus SDK directly, but our group members' experience with Unity, combined with its already-implemented shaders for depth-of-field blur, made a compelling argument for Unity. The Unity workflow requires a number of dependencies, including the SDK, the runtime, and the Unity integration package, all of which could be downloaded from Oculus as of March 2015.

Computer Settings

At the time of this project, there are two supported display modes. In "Extended" mode, the HMD appears as a separate display. In "Direct" mode, the game engine can send frames directly to the HMD without the overhead of display configuration. While the second mode will surely be preferable in the future, Unity did not support it at the time of this project. We used the Rift DK2 in Extended mode with the refresh rate set to 60 Hz.

Refresh rate is another important setting. Oculus recommends that the DK2 be operated at 75 Hz in "Low Persistence Mode." At that frequency, the OLED display duty cycle is set very low to reduce smearing. However, running at this frequency is more computationally expensive. Furthermore, on some computers, conflicts between displays cause a screen commanded to refresh at 75 Hz to refresh at 60 Hz instead. To avoid these issues, we used the DK2 in 60 Hz mode, despite the higher persistence and greater long-term discomfort.
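The computational cost of the higher refresh rate can be read as a per-frame time budget. The short sketch below is only that arithmetic, assuming every frame must be finished within one refresh interval; the function names are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available to render one frame, in milliseconds."""
    if refresh_hz <= 0:
        raise ValueError("refresh rate must be positive")
    return 1000.0 / refresh_hz


def extra_budget_ms(slow_hz: float, fast_hz: float) -> float:
    """Additional render time per frame gained by running at the slower rate."""
    return frame_budget_ms(slow_hz) - frame_budget_ms(fast_hz)
```

Under this assumption, 75 Hz leaves roughly 13.3 ms per frame and 60 Hz roughly 16.7 ms, so dropping to 60 Hz gives a post-processing effect such as depth-of-field blur about 3.3 ms more headroom per frame.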

Experimental Hypothesis

After surveying the background literature, we noted that increased fatigue from vergence-accommodation mismatch is an accepted issue with Oculus-type HMDs [2][3]. Because eye fatigue is subjective and measuring it objectively requires careful experiment design, we instead tested the hypothesis that depth-of-field cues in a complex virtual reality scene can improve a user's ability to solve the correspondence problem for that scene.

Experimental Design

We asked our participants, recruited from friends, family, and strangers, to perform a difficult visual correspondence task. Each participant was asked to determine the number of depth planes present in a virtual environment. In designing a repeatable experiment, we sought an objective metric of participants' acuity. Therefore, we measured the deviation from the correct number of depth planes.

The virtual environment was designed to remove normally predominant depth cues. Even though the virtual features were at dramatically different depths (tens of meters apart in virtual space), the overall dimensions and texture mapping of the features were scaled so that all objects appeared identical when viewed monocularly (see Appendix I for examples).

The test stimulus was a variant of the stimulus used by Hoffman and Banks [4] in their work on depth perception. We modified the stimulus to increase the difficulty. In early experimentation, we found that test subjects needed at least five seconds to adjust to each new scene and were not comfortable determining the layer count under a time limit.

Early volunteers were able to use the six-degree-of-freedom head tracking of the system to gain an advantage from motion parallax. To limit this effect on the experiment, we increased the absolute depth of the scene to 100 m. This poses a problem: at such a distance the eye would naturally focus at infinity, and the resulting depth-of-field blur would be very small. To compensate, we artificially increased the magnitude of the blur by enlarging the virtual aperture.
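Why a larger virtual aperture is needed at these distances can be illustrated with the standard thin-lens circle-of-confusion relation. This is a sketch under that textbook model, not the formula used by the Unity shader; the function name and any parameter values passed to it are hypothetical.

```python
def blur_circle_diameter(
    aperture_m: float,
    focal_length_m: float,
    focus_dist_m: float,
    object_dist_m: float,
) -> float:
    """Thin-lens circle of confusion on the image plane, in meters.

    aperture_m     -- diameter of the lens opening
    focal_length_m -- focal length of the lens
    focus_dist_m   -- distance the lens is focused at
    object_dist_m  -- distance of the object being imaged
    """
    if focus_dist_m <= focal_length_m:
        raise ValueError("focus distance must exceed the focal length")
    if object_dist_m <= 0:
        raise ValueError("object distance must be positive")
    magnification = focal_length_m / (focus_dist_m - focal_length_m)
    defocus = abs(object_dist_m - focus_dist_m) / object_dist_m
    return aperture_m * magnification * defocus
```

In this model the blur diameter is proportional to the aperture and shrinks as the focus distance grows, so with a scene around 100 m deep a realistically sized aperture produces almost no blur, and scaling the aperture up is the direct way to restore a visible effect.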

Experiment Implementation

We initially tried to implement a custom shader to blur out-of-focus objects and searched the Unity Asset Store for suitable blur effects, but later discovered that the Unity engine has a built-in, high-quality depth-of-field shader. We applied this shader to each of the two cameras that correspond to the two lenses of the Oculus Rift. We wrote a custom script in JavaScript to update the focal length of the cameras in real time. This script communicates with a second script, which communicates directly with the shader. We update the focal length by casting a ray from the camera with Raycast (a method of the built-in Physics class). The Raycast returns the first object it hits, and we set the focal length to that object's distance from the camera, which in turn updates the shader to adjust what is in and out of focus. Because we did not want the left and right eyes to be out of sync, we assume the user is right-eye dominant (80% of the population is) and update the focal length of both cameras based on what the right camera's Raycast returns.
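The focus-update logic described above can be sketched outside Unity as follows. This is a plain-Python stand-in, not the project's script and not Unity's API: the `Box` class, the `collidable` flag, and both function names are hypothetical, and the ray direction is assumed to be a unit vector so that the returned value is a distance.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Box:
    """Axis-aligned box standing in for a pillar (hypothetical type)."""
    lo: Vec3
    hi: Vec3
    collidable: bool = True  # a hidden pillar should not attract focus


def ray_box_distance(origin: Vec3, direction: Vec3, box: Box) -> Optional[float]:
    """Distance along a unit-length ray to the box, or None if it misses (slab test)."""
    t_near, t_far = 0.0, float("inf")
    for o, d, lo, hi in zip(origin, direction, box.lo, box.hi):
        if abs(d) < 1e-12:
            # Ray is parallel to this pair of faces: it must start between them.
            if o < lo or o > hi:
                return None
            continue
        t0, t1 = (lo - o) / d, (hi - o) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_near, t_far = max(t_near, t0), min(t_far, t1)
        if t_near > t_far:
            return None
    return t_near


def focus_distance(
    origin: Vec3, direction: Vec3, boxes: Sequence[Box], fallback: float
) -> float:
    """Distance to the nearest collidable box along the gaze ray, else fallback."""
    nearest = None
    for box in boxes:
        if not box.collidable:
            continue
        t = ray_box_distance(origin, direction, box)
        if t is not None and (nearest is None or t < nearest):
            nearest = t
    return fallback if nearest is None else nearest
```

Calling `focus_distance` once per frame with the right camera's position and forward vector, and applying the result to both cameras, mirrors the single-eye update described in the text.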

In addition, we had to decide between the Gaussian blur effect and the Bokeh blur effect for our depth-of-field shader. With the Gaussian effect, blur increases linearly with an object's distance from the focal plane, whereas the Bokeh effect follows a curve that more accurately replicates the human eye. Though the following images are from Unreal Engine 4 [5], they accurately show how this works and the end result.

Though the Bokeh may look slightly better in some cases, we decided to go with Gaussian because it gave us considerably better performance and allowed us to maintain a solid frame rate with nearly the same visual fidelity.

Once we finished writing our scripts to update the focal length, we created a scene consisting of multiple layers of pillars and a room for the player to stand in. We built it by adding cubes, applying textures, and adjusting their sizes manually in the Unity editor. To isolate whether our blurring effect helped depth perception, we attempted to remove other depth cues, such as objects of the same size appearing smaller as they get farther away. To do so, we made the pillars progressively bigger with distance, so that each pillar took up approximately the same number of pixels regardless of its location in the scene.

We also wrote a script to switch layers of pillars on and off during the test. This was trickier than we expected. We first tried deleting objects to turn them off, but then had no way to turn them back on. We then discovered that we could turn off the Renderer of each object to make it invisible. The problem with this approach, however, was that the objects still had physics bodies, so the Physics Raycast method would update the focal length to focus on objects that were no longer visible in our scene. Our solution was to iterate through each pillar in the layer that we wanted to turn off and to turn off both its Renderer and its physics body. This solved our problem and produced the desired effect.
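The size compensation described above amounts to scaling each pillar in proportion to its distance so that its angular size stays constant. A minimal sketch of that relationship, with hypothetical function names and no values taken from the actual scene:

```python
import math


def scaled_size(reference_size: float, reference_distance: float, distance: float) -> float:
    """Size an object needs at `distance` to look as large as the reference object."""
    if reference_distance <= 0 or distance <= 0:
        raise ValueError("distances must be positive")
    return reference_size * distance / reference_distance


def angular_size_deg(size: float, distance: float) -> float:
    """Visual angle subtended by an object of the given size, viewed head-on."""
    if distance <= 0:
        raise ValueError("distance must be positive")
    return math.degrees(2.0 * math.atan(size / (2.0 * distance)))
```

A pillar four times farther away than the reference must be four times larger in every dimension; `angular_size_deg` can be used to check that the scaled pillar and the reference subtend the same angle.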

Results

Test Overview

In all, we tested 20 people aged 9 to 86. Each participant wore either no corrective lenses, contact lenses, or glasses. For each participant we recorded age, corrective lenses worn, scores on the scenes, and responses to a short survey. For most participants, the test took around five minutes. We did not impose a time limit per scene, since all users converged on a confident answer within 30 seconds.

Test Results

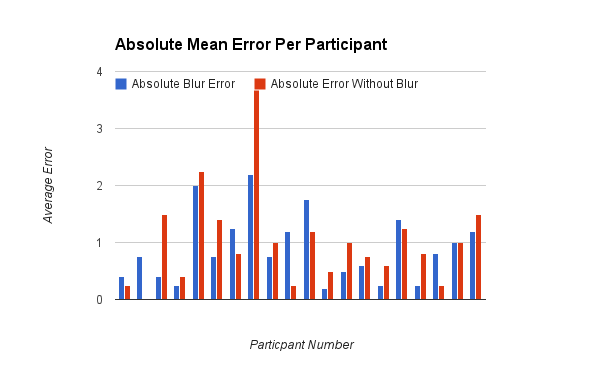

We computed a score for each participant based on their average deviation from the correct value for the scene. The score was computed for both blurred and non-blurred tests:

$$S_{\text{blur}} = \frac{1}{N} \sum_{i=1}^{N} \left| a_i - c_i \right|$$

where $a_i$ is the participant's answer for blurred test $i$, $c_i$ is the correct answer, and $N$ is the number of blurred tests. The non-blurred test score was computed similarly.
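The score can be sketched in a few lines, reading "average deviation" as the mean absolute difference between a participant's answers and the correct answers. That reading is an assumption, and the function name and the example values in the usage note are hypothetical.

```python
from typing import Sequence


def mean_abs_deviation(answers: Sequence[float], correct: Sequence[float]) -> float:
    """Average absolute difference between a participant's answers and the truth."""
    if len(answers) != len(correct):
        raise ValueError("answers and correct values must have the same length")
    if not answers:
        raise ValueError("at least one test is required")
    return sum(abs(a - c) for a, c in zip(answers, correct)) / len(answers)
```

For instance, `mean_abs_deviation([3, 5, 4], [4, 5, 2])` scores a participant who was off by one layer, zero layers, and two layers on three scenes. The same function applies to the blurred and the non-blurred tests separately.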

The data is attached in .xlsx format [REF].

| Statistic | Blur On | Blur Off |
|---|---|---|
| Mean score (lower is better) | 0.895 | 0.997 |
| Standard deviation | 0.596 | 0.842 |

Significance of Results

Using a paired T-test, we found that the results were not statistically significant at the level. Our experiment's p value was .
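For readers who want to repeat the analysis on the raw data, a paired t-test compares each participant's blur-on score with their own blur-off score. The sketch below computes only the test statistic and degrees of freedom using the standard library; the function name is hypothetical, and no values from this study are reproduced.

```python
import math
import statistics
from typing import Sequence, Tuple


def paired_t_statistic(
    blur_on: Sequence[float], blur_off: Sequence[float]
) -> Tuple[float, int]:
    """Return (t, degrees of freedom) for paired per-participant scores."""
    if len(blur_on) != len(blur_off):
        raise ValueError("each participant needs one score per condition")
    diffs = [a - b for a, b in zip(blur_on, blur_off)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two participants are required")
    sd = statistics.stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have zero variance")
    t = statistics.mean(diffs) / (sd / math.sqrt(n))
    return t, n - 1
```

The resulting t value would then be compared against the t distribution with the returned degrees of freedom to obtain a p value, for example with a statistics package or a printed table.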

Additional Notes

After the test, we asked our test participants to answer two questions:

"Did you find the test more or less comfortable with the blur?" and "Did you find the test more or less realistic with the blur?"

Most subjects found the test less comfortable with the blur, but they were evenly divided on whether it was more realistic.

While we collected age data, after analysis we did not find a correlation between age and test performance. A plot of the data can be found in Appendix I.

Conclusions

Our real-time depth-of-field blur effect did not have a statistically significant effect on our test participants' performance on the correspondence problem.

Furthermore, 90% of test participants found the effect uncomfortable, leading us to a number of possible conclusions requiring further study:

- The depth-of-field effect requires further tuning and user testing until it achieves a certain comfort threshold, at which point the experiment should be repeated.

- Our test participants would be better off without the effect, given the lack of performance improvement observed.

- The computation required for the depth-of-field effect caused jitter and frame dragging, which adversely affected the effectiveness of the system (suggested by our advisor at Oculus).

Nevertheless, as test subjects were divided on the realism of the effect, it seems plausible that, if tuned properly, the effect might promote realism.

References

[1] Hoffman et al. (2008). Vergence-accommodation conflicts hinder visual performance and cause visual fatigue. Journal of Vision, 8(3):33, 1-30, http://journalofvision.org/content/8/3/33, doi: 10.1167/8.3.33.

[2] http://static.oculus.com/sdk-downloads/documents/Oculus_Best_Practices_Guide.pdf

[3] Banks, M. S., Kim, J., & Shibata, T. (2013). Insight into vergence/accommodation mismatch. Proc. SPIE 8735, Head- and Helmet-Mounted Displays XVIII: Design and Applications, 873509, http://dx.doi.org/10.1117/12.2019866, doi:10.1117/12.2019866.

[4] Hoffman, D. M., & Banks, M. S. (2010). Focus information is used to interpret binocular images. Journal of Vision, 10(5):13, 1–17, http://journalofvision.org/content/10/5/13, doi:10.1167/10.5.13.

[5] https://docs.unrealengine.com/latest/INT/Engine/Rendering/PostProcessEffects/DepthOfField/index.html

[6] Watt et al. (2005). Focus cues affect perceived depth. Journal of Vision, 5(10):7, 1-29, http://journalofvision.org/content/5/10/7, doi: 10.1167/5.10.7.

Appendix I

End Result

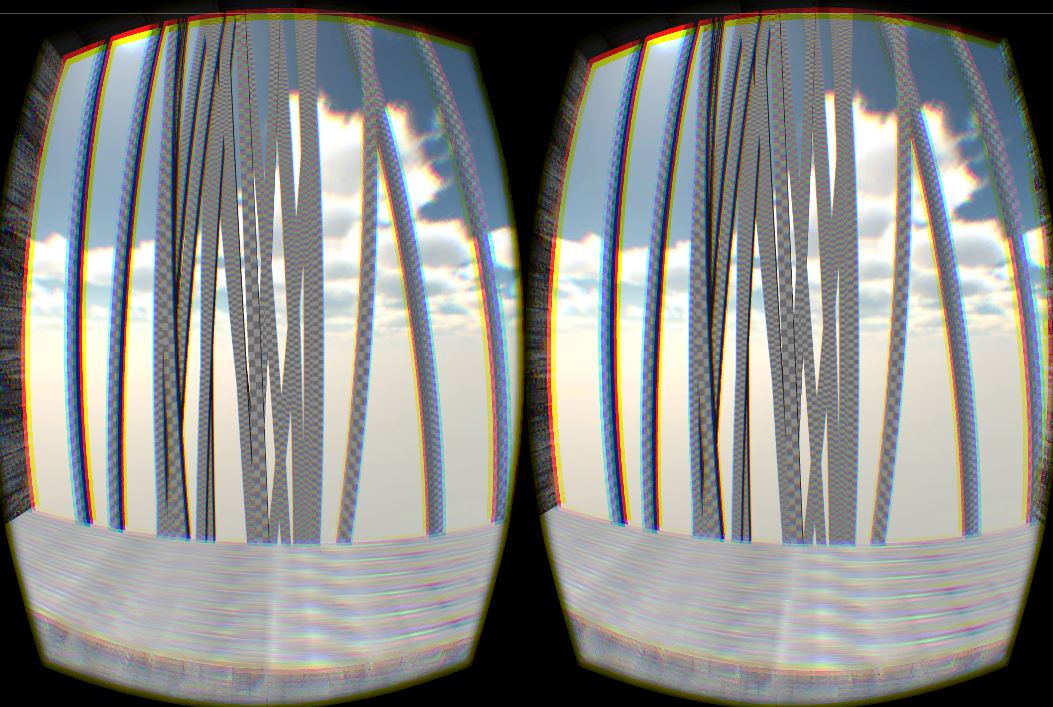

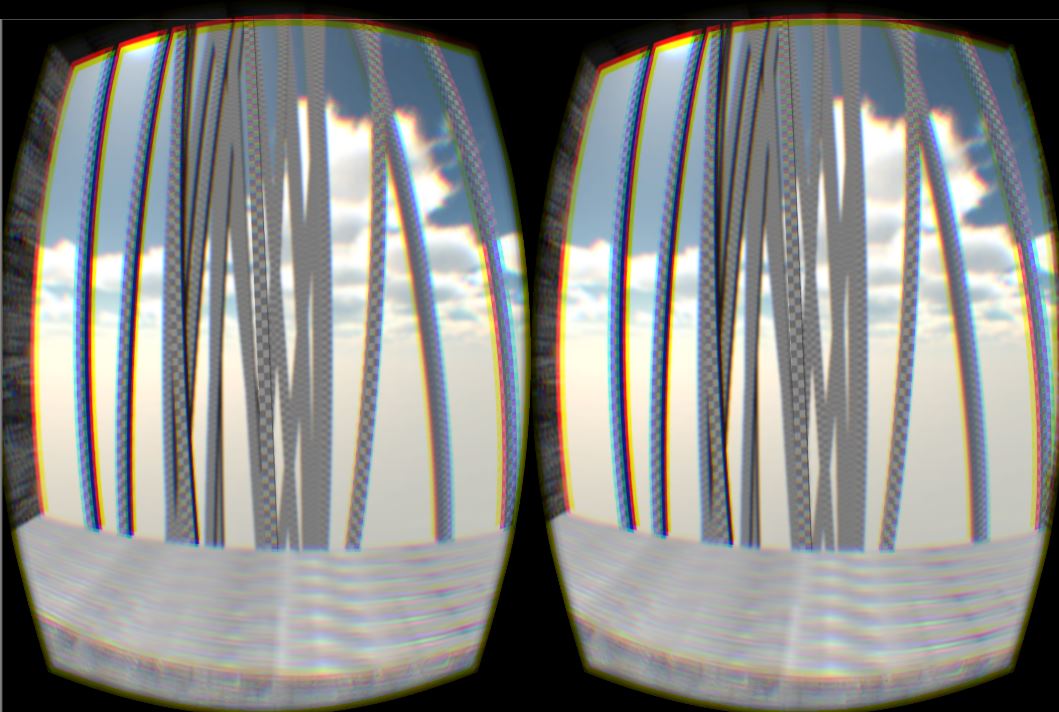

Though it is difficult to judge whether this effect would help depth perception without actually wearing an Oculus Rift, these images give a rough idea of what our experiment looks like with blur off and with blur on. Everything is in focus in the first image, whereas the second image contains some objects that are in focus and some that are not. To simulate the 3D effect of viewing in an Oculus Rift, try crossing your eyes to fuse the two images into one, as you would with a Magic Eye picture.

This file contains the demo, scene, and source code: File:Psych221FinalProject.zip. The implementation is described above in the Experiment Implementation section of Methods.

This file contains all of the raw data in the form of an Excel file: File:Psych221 Oculus Depth project Win 2015 Data.zip

Appendix II

Ian Proulx - Oculus development, code, data collection, report

John Reyna - background, data collection, data analysis, report

Nathan Hall-Snyder - experiment design, data collection, data analysis, report