Yue, Yawen, Xianzhe

Introduction

Depth map estimation is important for understanding the 3D geometry of a scene. The goal of our project is to predict depth from stereo image pairs. In a simple stereo system, two images are taken by a camera at two different locations related by a translation $t = [T, 0, 0]$. For each pixel in the left image, we can find the corresponding pixel in the right image, as shown in Fig.1.

Disparity is defined as $d = x_l - x_r$, where $x_l$ is the horizontal location of the pixel in the left image and $x_r$ is the horizontal location of the corresponding pixel in the right image. The depth of the object then follows from similar triangles: $Z = \frac{fT}{d}$, where $f$ is the focal length and $T$ is the translation (baseline) of the camera, as shown in Fig.2. Intuitively, for a distant object the disparity $x_l - x_r$ stays small as the camera moves, whereas for a nearby object it is larger. This matches the equation above: depth is inversely proportional to the disparity of a pixel.
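A minimal NumPy sketch of the relation $Z = fT/d$ follows, assuming disparity and focal length are both in pixels and the baseline $T$ is in meters, so that depth comes out in meters. The function name `depth_from_disparity` is hypothetical, and mapping non-positive disparities to infinite depth is an assumption of this sketch, not something the report specifies.

```python
import numpy as np

def depth_from_disparity(disparity, focal_px, baseline):
    """Z = f * T / d, applied element-wise.

    Assumes disparity and focal length in pixels and baseline in meters.
    Pixels with non-positive disparity (no match) are given infinite depth.
    """
    d = np.asarray(disparity, dtype=float)
    # divide only where d > 0; everywhere else keep the pre-filled inf
    return np.divide(focal_px * baseline, d, out=np.full(d.shape, np.inf), where=d > 0)
```

Because depth is $fT/d$, halving the disparity doubles the depth, which is the inverse proportionality described above.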

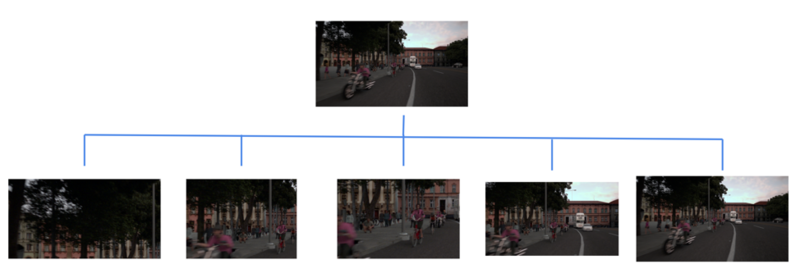

In this project, we train a convolutional neural network to predict the depth map from stereo image pairs. The architecture consists of two deep networks: the first makes a prediction based on global information, and the second takes the original stereo images together with this global prediction as input and refines the output using local information. We use a dataset generated by our Course Assistant, Zheng Lyu, with ISET3D; it contains 238 stereo image pairs, mostly of street scenes. Fig.3 shows an example from our dataset: an image pair and its ground-truth disparity map.

Background

A popular four-step pipeline has been developed for stereo matching: matching cost calculation, matching cost aggregation, disparity calculation, and disparity refinement [1]. Each step of the pipeline affects the overall stereo matching performance. We use the traditional sum-of-squared-differences (SSD) algorithm to illustrate these four steps. First, we calculate the matching cost as the squared difference of intensity values at a given disparity. Then, we sum the matching cost over a square window $W(x, y)$ centered at the pixel, with the disparity held constant. Steps 1 and 2 of the SSD algorithm can be written as:

$$C(x, y, d) = \sum_{(u, v) \in W(x, y)} \left( I_L(u, v) - I_R(u - d, v) \right)^2$$

where $I_L$ and $I_R$ are the left and right image intensities and $d$ is the candidate disparity.
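Steps 1 and 2 can be sketched in NumPy as an SSD cost volume. This is an illustrative implementation, not the code used in this project; the function name `ssd_cost_volume`, zero padding at the image border, and marking as infinite any cost that needs pixels outside the right image are all assumptions. Inputs are taken to be grayscale float images of equal shape.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def ssd_cost_volume(left, right, max_disp, radius=2):
    """Return cost[d, y, x]: squared differences summed over a (2r+1) x (2r+1) window."""
    h, w = left.shape
    k = 2 * radius + 1
    cost = np.empty((max_disp + 1, h, w))
    for d in range(max_disp + 1):
        # Step 1: squared intensity difference between I_L(x, y) and I_R(x - d, y);
        # columns x < d have no counterpart in the right image, so their cost is infinite
        sq = np.full((h, w), np.inf)
        sq[:, d:] = (left[:, d:] - right[:, :w - d]) ** 2
        # Step 2: sum over the square window with d held constant (zero padding at borders)
        padded = np.pad(sq, radius, mode="constant", constant_values=0.0)
        cost[d] = sliding_window_view(padded, (k, k)).sum(axis=(-2, -1))
    return cost
```

Each slice `cost[d]` holds the aggregated SSD cost for one candidate disparity, which is the input to step 3.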

In step 3, we calculate the disparity at each pixel by selecting the disparity with the minimum aggregated cost. However, the resulting image contains many black pixels, as shown in Fig.4. These pixels have no valid match: as the camera moves, some pixels fall outside the other image (the left part of the resulting image), and others are black because they are occluded by objects in front.
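Step 3 (winner-take-all) can be sketched as an argmin over the disparity axis of a cost volume shaped `(D, H, W)`. The name `winner_take_all`, the convention that impossible matches have infinite cost, and returning a validity mask are assumptions of this sketch. It only flags pixels with no usable candidate; detecting occluded pixels would need an extra test, such as a left-right consistency check, which is not shown.

```python
import numpy as np

def winner_take_all(cost):
    """cost: (D, H, W) aggregated costs; returns (disparity map, validity mask)."""
    valid = np.isfinite(cost).any(axis=0)      # at least one usable disparity
    disp = cost.argmin(axis=0).astype(float)   # index of the minimum cost = disparity
    disp[~valid] = 0.0                         # no match: shown as a black pixel
    return disp, valid
```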

To solve this problem, a disparity refinement algorithm can be applied. One simple strategy is to fill the black pixels with the nearest valid value, which gives the result shown in Fig.5.
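The nearest-value filling can be sketched as follows, assuming "nearest" means the closest valid pixel in the same image row; the report does not specify the neighborhood. The name `fill_nearest` and the row-wise search are illustrative choices. Rows with no valid pixel are left unchanged.

```python
import numpy as np

def fill_nearest(disp, valid):
    """Replace each invalid pixel with the value of the nearest valid pixel in its row."""
    filled = disp.copy()
    cols = np.arange(disp.shape[1])
    for r in range(disp.shape[0]):
        good = np.flatnonzero(valid[r])          # columns holding valid disparities
        if good.size == 0:
            continue
        # closest valid column on each side of every column (clipped at the ends)
        pos = np.searchsorted(good, cols)
        left = good[np.clip(pos - 1, 0, good.size - 1)]
        right = good[np.clip(pos, 0, good.size - 1)]
        nearest = np.where(np.abs(cols - left) <= np.abs(right - cols), left, right)
        filled[r] = disp[r, nearest]             # valid pixels map to themselves
    return filled
```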

Because designing traditional methods is complex, researchers have turned to machine learning techniques to improve stereo matching, and have found that they outperform traditional methods. Machine learning algorithms can be applied to any of the four steps above. Zbontar [2] first introduced CNNs for matching cost calculation. They trained a CNN classifier to measure the similarity of two pixels from the two images, and found that the CNN learns more robust and discriminative features and produces an improved stereo matching cost, as shown in Fig.6.

Kendall [3] integrated matching cost calculation, matching cost aggregation, and disparity calculation into a single neural network, achieving higher accuracy and computational efficiency, as shown in Fig.7. Jiahao [3] incorporates disparity refinement into a CNN; the architecture is shown in Fig.8.

Dataset

Our dataset was generated by Zheng Lyu using ISET3D, a set of tools for rendering the spectral irradiance of realistic three-dimensional scenes. Most of the scenes in this dataset are city street scenes.

The dataset contains 238 synthesized image pairs simulating a stereo camera. Each sample contains two image sets, one for the left camera and one for the right camera. Each image set contains an irradiance image, a depth map image, a mesh image, and a metadata file.

We use the irradiance images as input and the depth map images as ground truth. We divide the dataset into three sets: 200 image pairs for training, 15 for validation, and 23 for testing.
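A sketch of the 200/15/23 split, assuming the 238 pairs are shuffled once with a fixed seed and split at the pair level, so the left and right views of a pair always land in the same set. The report does not say how pairs were assigned; `split_indices` and the seed are hypothetical.

```python
import numpy as np

def split_indices(n_pairs=238, n_train=200, n_val=15, seed=0):
    """Shuffle pair indices once and cut them into train / validation / test."""
    idx = np.random.default_rng(seed).permutation(n_pairs)
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]
```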

Methods

Model Architecture

Inspired by the model in [4], we designed the architecture shown in the diagram. Our model is divided into two phases: CoarseNet and RefineNet.

The top part is the CoarseNet. We replaced the fully convolutional layer in [4] with a residual convolutional (ResConv) block, which contains a residual convolutional layer and a fully convolutional layer. We feed the left and right images of the stereo camera into the model separately. After two ResConv blocks, we sum the two results. After several more ResConv blocks, we flatten the 2D feature map into a vector. Two fully connected layers then transform this vector into a coarse depth map.

The bottom part is the RefineNet. We process the concatenated stereo images with one ResConv block and concatenate its output with the coarse depth map. Two more ResConv blocks then produce the final refined depth map.
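The data flow through CoarseNet and RefineNet can be sketched in plain NumPy with random, untrained weights, only to show how tensors are combined and reshaped. Much of this is assumed rather than taken from the report: the internals of the ResConv block (two 3x3 convolutions with ReLU plus a 1x1 projection on the skip path), weight sharing between the left and right branches, a single extra ResConv block standing in for "several", and all channel and layer sizes. The function names are hypothetical; the actual model is in the GitHub repository listed in Appendix I.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv3x3(x, w, b):
    """Zero-padded 3x3 convolution (cross-correlation). x: (C_in, H, W), w: (C_out, C_in, 3, 3)."""
    _, h, wd = x.shape
    xp = np.pad(x, ((0, 0), (1, 1), (1, 1)))
    out = np.zeros((w.shape[0], h, wd))
    for dy in range(3):
        for dx in range(3):
            out += np.einsum("oc,chw->ohw", w[:, :, dy, dx], xp[:, dy:dy + h, dx:dx + wd])
    return out + b[:, None, None]

def make_res_conv(c_in, c_out, scale=0.1):
    """Hypothetical ResConv parameters: two 3x3 convs and a 1x1 projection on the skip path."""
    return {
        "w1": rng.normal(0, scale, (c_out, c_in, 3, 3)), "b1": np.zeros(c_out),
        "w2": rng.normal(0, scale, (c_out, c_out, 3, 3)), "b2": np.zeros(c_out),
        "proj": rng.normal(0, scale, (c_out, c_in)),
    }

def res_conv(x, p):
    hidden = np.maximum(conv3x3(x, p["w1"], p["b1"]), 0.0)   # conv + ReLU
    skip = np.einsum("oc,chw->ohw", p["proj"], x)             # 1x1 projection to match channels
    return np.maximum(skip + conv3x3(hidden, p["w2"], p["b2"]), 0.0)

def coarse_net(left, right, P):
    # each view passes through the same two ResConv blocks (weight sharing is assumed)
    f_left = res_conv(res_conv(left, P["enc1"]), P["enc2"])
    f_right = res_conv(res_conv(right, P["enc1"]), P["enc2"])
    f = res_conv(f_left + f_right, P["mid"])        # sum the branches, then another ResConv
    v = np.maximum(P["fc1"] @ f.reshape(-1), 0.0)   # flatten + first fully connected layer
    return (P["fc2"] @ v).reshape(left.shape[1:])   # second FC layer, reshaped to H x W

def refine_net(left, right, coarse, P):
    x = res_conv(np.concatenate([left, right]), P["ref1"])   # stereo pair stacked on channels
    x = np.concatenate([x, coarse[None]])                    # append coarse depth as a channel
    x = res_conv(res_conv(x, P["ref2"]), P["ref3"])          # two more ResConv blocks
    return x[0]

H, W, F = 8, 12, 4   # toy image size and feature width
P = {
    "enc1": make_res_conv(3, F), "enc2": make_res_conv(F, F), "mid": make_res_conv(F, F),
    "fc1": rng.normal(0, 0.1, (32, F * H * W)), "fc2": rng.normal(0, 0.1, (H * W, 32)),
    "ref1": make_res_conv(6, F), "ref2": make_res_conv(F + 1, F), "ref3": make_res_conv(F, 1),
}
left, right = rng.random((3, H, W)), rng.random((3, H, W))
coarse = coarse_net(left, right, P)
refined = refine_net(left, right, coarse, P)
```

The fully connected layers are what give CoarseNet a global view of the image, while RefineNet stays convolutional and therefore works on local neighborhoods, which matches the global/local split described in the introduction.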

Data Augmentation

We performed data augmentation on the original dataset, mainly by cropping the images. One strategy crops the image around its center; another performs random cropping on the original image. Both strategies aim to preserve more of the detail in the original dataset.
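Both cropping strategies can be sketched with one function, assuming the crop window is applied identically to the left image, the right image, and the ground-truth depth map. Using the same window for both views shifts their column coordinates by the same amount, so the disparity between corresponding pixels that remain in the crop is unchanged. The name `crop_sample` and its signature are hypothetical.

```python
import numpy as np

def crop_sample(left, right, depth, size, rng=None):
    """Crop left, right and depth with one shared window of shape size = (height, width).

    rng=None gives a center crop; otherwise the top-left corner is drawn uniformly.
    Works for (H, W) or (H, W, C) images because only the first two axes are sliced.
    """
    h, w = depth.shape[:2]
    ch, cw = size
    if rng is None:
        y0, x0 = (h - ch) // 2, (w - cw) // 2
    else:
        y0 = int(rng.integers(0, h - ch + 1))
        x0 = int(rng.integers(0, w - cw + 1))
    win = (slice(y0, y0 + ch), slice(x0, x0 + cw))
    return left[win], right[win], depth[win]
```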

Loss Function

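We use the loss function defined as follows:

$$L(y, y^*) = \frac{1}{n} \sum_{i} d_i^2 - \frac{\lambda}{n^2} \left( \sum_{i} d_i \right)^2$$

where $d_i = \log y_i - \log y_i^*$ is the log difference between the predicted depth $y_i$ and the ground-truth depth $y_i^*$ at pixel $i$, $n$ is the number of pixels, and $\lambda \in [0, 1]$.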
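A NumPy sketch of this loss, assuming it is the scale-invariant log loss of Eigen et al. [4], with $d_i = \log y_i - \log y_i^*$ and $\lambda \in [0, 1]$. Depths must be strictly positive; the name `scale_invariant_loss` and the default `lam=0.5` are illustrative choices.

```python
import numpy as np

def scale_invariant_loss(pred, target, lam=0.5):
    """(1/n) sum d_i^2 - (lam / n^2) (sum d_i)^2 with d_i = log(pred_i) - log(target_i)."""
    d = np.log(pred) - np.log(target)
    n = d.size
    return np.mean(d ** 2) - lam * d.sum() ** 2 / n ** 2
```

With `lam=1` the loss ignores a global scale factor: multiplying every prediction by the same constant leaves it at zero. With `lam=0` it reduces to the mean squared log error.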

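Evaluation Metrics

We computed several evaluation metrics, defined as follows, where $y_i$ and $y_i^*$ are the predicted and ground-truth depths at pixel $i$ and $n$ is the number of pixels:

Abs Relative Difference

$$\text{Abs Rel} = \frac{1}{n} \sum_{i} \frac{|y_i - y_i^*|}{y_i^*}$$

Squared Relative Difference

$$\text{Sq Rel} = \frac{1}{n} \sum_{i} \frac{(y_i - y_i^*)^2}{y_i^*}$$

RMSE (linear)

$$\text{RMSE}_{\text{linear}} = \sqrt{\frac{1}{n} \sum_{i} (y_i - y_i^*)^2}$$

RMSE (log)

$$\text{RMSE}_{\log} = \sqrt{\frac{1}{n} \sum_{i} (\log y_i - \log y_i^*)^2}$$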
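The four metrics can be computed together as below, assuming the usual definitions from [4] with predicted depths $y_i$ and ground-truth depths $y_i^*$ over all $n$ pixels. The function name `depth_metrics` is hypothetical, and inputs must be strictly positive.

```python
import numpy as np

def depth_metrics(pred, gt):
    """Abs Rel, Sq Rel, linear RMSE and log RMSE over all pixels of positive depth maps."""
    pred = np.asarray(pred, dtype=float).ravel()
    gt = np.asarray(gt, dtype=float).ravel()
    diff = pred - gt
    return {
        "abs_rel": np.mean(np.abs(diff) / gt),
        "sq_rel": np.mean(diff ** 2 / gt),
        "rmse": np.sqrt(np.mean(diff ** 2)),
        "rmse_log": np.sqrt(np.mean((np.log(pred) - np.log(gt)) ** 2)),
    }
```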

Results

Evaluation

| Evaluation Metric | Coarse Model (no data augmentation) | Refine Model (no data augmentation) | Coarse Model (with data augmentation) | Refine Model (with data augmentation) |
|---|---|---|---|---|
| Abs Relative Difference | 0.3190 | 0.1952 | 0.2053 | 0.1576 |
| Squared Relative Difference | 0.2618 | 0.1104 | 0.1501 | 0.0667 |
| RMSE (linear) | 0.8477 | 0.6430 | 0.6798 | 0.5776 |
| RMSE (log) | 0.1524 | 0.0732 | 0.1108 | 0.0461 |

Analysis

From the results above, we observe that data augmentation improves every metric for both models, and that our model gives acceptable depth map prediction precision. For linear RMSE, data augmentation yields an improvement of 19.8% for the CoarseNet prediction and 10.2% for the RefineNet prediction. The predicted depth maps capture the rough depth structure of the scene, although the error images show that some details are lost.
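The percentages above are relative reductions in linear RMSE, (before - after) / before, computed from the table. A quick check:

```python
rmse_no_aug = {"CoarseNet": 0.8477, "RefineNet": 0.6430}
rmse_aug = {"CoarseNet": 0.6798, "RefineNet": 0.5776}

def relative_improvement(before, after):
    """Percentage reduction of an error metric."""
    return 100.0 * (before - after) / before

for name in rmse_no_aug:
    print(f"{name}: {relative_improvement(rmse_no_aug[name], rmse_aug[name]):.1f}%")
# CoarseNet: 19.8%
# RefineNet: 10.2%
```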

However, there are some issues that need attention. Our model learned limited information from the input data, and there is room for improvement. In particular, the model seems to learn the intensity of the input image rather than the disparity information. Its output has fewer errors on test images with a bright sky. However, since our data contains many street images with a sky, the model tends to predict brighter inputs as having larger depth, just like the sky. This suggests that, with a dataset of relatively similar scenes, our model learns characteristics of the objects themselves, such as intensity, instead of their disparity. To improve our work, we can continue to refine the model architecture, perform more data augmentation, tune hyperparameters, and test on other datasets for more precise depth estimation.

Conclusion

The evaluation and visualization show promising results and a clear improvement from data augmentation. The RefineNet greatly improves the precision of the CoarseNet result. Currently, the RefineNet design is relatively simple compared to the CoarseNet, so further tuning and modification of the RefineNet are expected.

There are still many aspects we could improve in the future. We might first pretrain our model on a larger dataset and then fine-tune it on this synthesized dataset. We also want to train our model on datasets from different domains to make it more robust and avoid errors specific to this domain.

References

[1] D. Scharstein and R. Szeliski. A taxonomy and evaluation of dense two-frame stereo correspondence algorithms. International Journal of Computer Vision, 47:7–42, 2002.

[2] J. Zbontar and Y. LeCun. Stereo matching by training a convolutional neural network to compare image patches. Journal of Machine Learning Research, 17:1–32, 2016.

[3] A. Kendall, H. Martirosyan, S. Dasgupta, P. Henry, R. Kennedy, A. Bachrach, and A. Bry. End-to-end learning of geometry and context for deep stereo regression. In IEEE Conference on Computer Vision and Pattern Recognition, 2017.

[4] D. Eigen, C. Puhrsch, and R. Fergus. Depth map prediction from a single image using a multi-scale deep network. In Advances in Neural Information Processing Systems, pp. 2366–2374, 2014.

Appendix I: Code

GitHub page: https://github.com/xianzhez/stereo_depth_map

Appendix II: Work Breakdown

The three of us worked together on implementing the model and solving the problems we encountered.

Yawen worked on data preprocessing, data augmentation, and the result analysis.

Yue worked on data preprocessing, data augmentation, and result visualization.

Xianzhe worked on designing and training the model, tuning hyperparameters, and testing performance.

Appendix III: Acknowledgements

Brian and Joyce gave us excellent lectures and helpful advice on this project.

Zheng helped us with project topic selection and generated the dataset for us with ISET3D.
