Moqian


Background

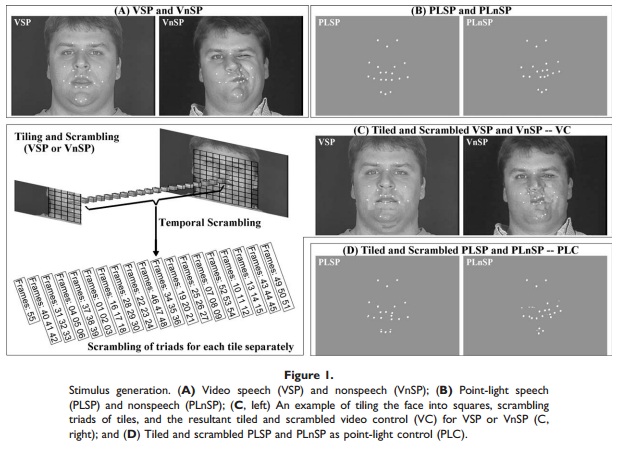

How the brain processes visual speech information is an important research question. A traditional view holds that visual speech information is integrated in unisensory cortex, namely the visual areas. Bernstein et al. (2010) found more anterior activation in the STS for speech than for nonspeech stimuli. Here we use dynamic stimuli to test whether their finding can be replicated.

In their study, the speech stimuli were videos of people reading nonsense syllables, and the nonspeech gestures were puff, kiss, raspberry, growl, yawn, smirk, fishface, chew, furn, nose wiggle, and frown-to-smile.

Methods

Stimuli

There are six dynamic stimulus categories: emotional faces, communicative faces, emotional bodies, hand gestures, scenes and vehicles. All stimuli are shown as 2 s movie clips.

Emotional faces are faces with 6 different emotions: disgust, happiness, anger, fear, sadness and surprise.

Communicative faces are faces nodding, shaking, talking without sound, etc. Actors include both adults and kids.

Hand gestures are hands giving a thumbs up or thumbs down, punching, pointing or clapping.

Emotional bodies are all filmed from the back, so no face appears in the video. Emotions include anger, sadness, happiness and fear. All actors are adults.

All the stimuli are self-moving, with the camera staying still.

Within each category block, the identities and movement types differ from clip to clip. Identities may repeat across blocks.

Design

This is a block-design experiment: each stimulus category is shown in blocks. Each block contains 6 video clips from the same stimulus category, each lasting 2 s, for a block duration of 12 s.

Each scan consists of 21 blocks (4 faces, 4 bodies, 4 vehicles, 4 scenes, 5 blanks).
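The timing implied by this design can be worked out in a few lines. This is only a sketch: it assumes that blank blocks also last 12 s, that blocks run back to back with no gaps, and that the frame count is taken before any frames are discarded. The constant and function names are ours, not part of the experiment code.

```python
# Timing constants taken from the Design and MR acquisition sections.
CLIP_S = 2            # each clip lasts 2 s
CLIPS_PER_BLOCK = 6   # clips per block
BLOCKS_PER_SCAN = 21  # blocks per scan, including blanks
TR_S = 2              # TR = 2000 ms

# Derived durations (assumption: blank blocks are as long as movie blocks).
block_s = CLIP_S * CLIPS_PER_BLOCK      # seconds per block
scan_s = block_s * BLOCKS_PER_SCAN      # seconds per scan
frames_per_scan = scan_s // TR_S        # acquired frames per scan


def block_onsets(n_blocks=BLOCKS_PER_SCAN, block_len=block_s):
    """Onset of each block in seconds, assuming no gaps between blocks."""
    return [i * block_len for i in range(n_blocks)]
```

Under these assumptions a scan lasts 252 s, which corresponds to 126 frames at a TR of 2 s.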

Subjects perform a one-back task by pressing a button when two successive images are the same.

Subjects view the videos freely during the movie blocks and fixate during the blank blocks.

Subjects

Subjects were 4 adults and 4 kids. Written consent was obtained from each subject, and the procedures were approved by the Stanford Institutional Review Board on Human Subject Research.

MR acquisition

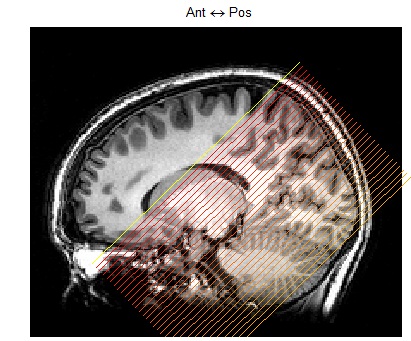

We collected 32 slices at a resolution of 3.125 × 3.125 × 3 mm using a two-shot T2* spiral acquisition sequence (Glover, 1999). FOV = 200 mm, TE = 30 ms, flip angle = 76°, bandwidth = 125 kHz, TR = 2000 ms. In-plane anatomicals were acquired with the same prescription using a two-dimensional SPGR sequence.

Scan plane was oblique to cover the occipitotemporal cortex.

MR Analysis

The MR data were analyzed using the mrVista software tools.

Pre-processing

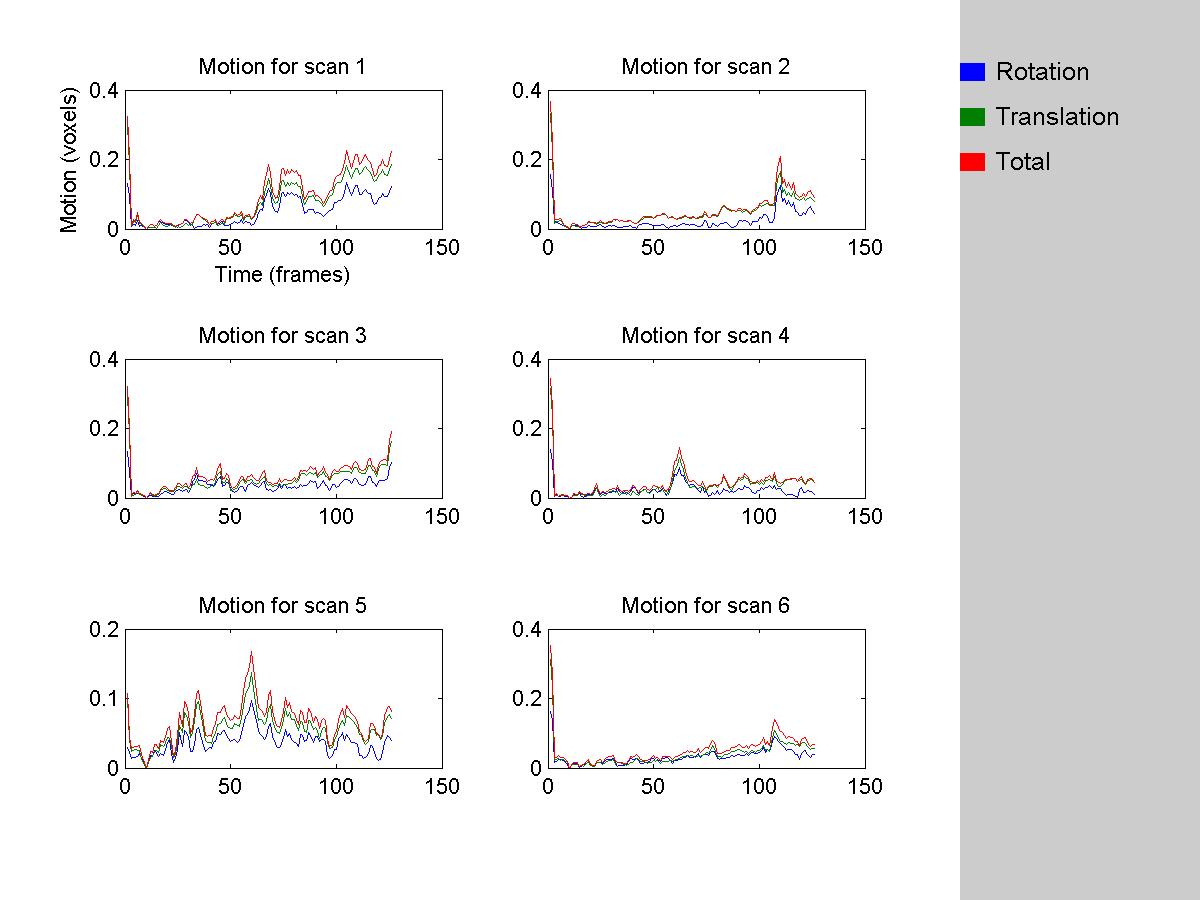

Motion Correction

All data were motion-corrected. Both within-scan and between-scan motion correction were performed for each subject. The subject exclusion criterion was motion of 2 voxels or more.

We discarded the first 2 TRs of each scan and used the 8th frame of the remaining images as the reference frame for within-scan motion correction.

We inspected within-scan motion for each scan of each subject and confirmed that it was less than 2 voxels.
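As a small illustration of the frame bookkeeping, the sketch below drops the first 2 frames and picks the reference frame from what remains. It assumes that "8th frame" is counted from 1 within the remaining frames; `select_frames` is a hypothetical helper, not an mrVista function.

```python
N_DISCARD = 2   # TRs dropped at the start of each scan
REF_FRAME = 8   # 1-based position of the reference among the kept frames


def select_frames(frames):
    """Return (kept_frames, reference_frame) for one scan.

    `frames` is any sequence with one entry per acquired TR.
    """
    kept = frames[N_DISCARD:]
    reference = kept[REF_FRAME - 1]  # convert 1-based position to 0-based index
    return kept, reference


# Example: label frames by their acquisition order (1..126).
acquired = list(range(1, 127))
kept, reference = select_frames(acquired)
```

With this counting, the reference is the 10th acquired frame of the scan.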

HRF model fits

We used the SPM difference-of-gammas function to model the HRF.
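For readers unfamiliar with this model, the sketch below builds a difference-of-gammas HRF and convolves it with a block predictor. The parameter values (a response gamma with shape 6, an undershoot gamma with shape 16, unit scale, and a 1/6 undershoot ratio) are what we understand to be the usual SPM defaults; they are an assumption here, since the analysis itself was run in mrVista and the exact settings are not recorded on this page. All function names are ours.

```python
import math


def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for negative t."""
    if t < 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)


def hrf(t, peak_shape=6.0, undershoot_shape=16.0, ratio=6.0):
    """Difference of two gamma densities (parameter values are assumed)."""
    return gamma_pdf(t, peak_shape) - gamma_pdf(t, undershoot_shape) / ratio


def sampled_hrf(tr=2.0, length_s=32.0):
    """HRF sampled once per TR and scaled so the samples sum to 1."""
    n = int(length_s / tr) + 1
    samples = [hrf(i * tr) for i in range(n)]
    total = sum(samples)
    return [s / total for s in samples]


def convolve(boxcar, kernel):
    """Discrete convolution, truncated to the length of the boxcar."""
    out = [0.0] * len(boxcar)
    for i, b in enumerate(boxcar):
        if b == 0:
            continue
        for j, k in enumerate(kernel):
            if i + j < len(out):
                out[i + j] += b * k
    return out


# Predictor for one 12 s block (6 TRs on) followed by 12 s of blank.
predictor = convolve([1] * 6 + [0] * 6, sampled_hrf())
```

The positive gamma models the main response and the second, smaller gamma models the late undershoot, which is why the function dips below zero well after the peak.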

Inhomogeneity Correction

We converted the time course of each voxel to percentage signal change by dividing it by the mean intensity of that voxel.
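A minimal sketch of this conversion for a single voxel follows. Dividing by the mean is what the text describes; subtracting 1 and scaling by 100, so that the result is centered on zero and expressed in percent, is a common convention that we assume here. The function name is hypothetical.

```python
def percent_signal_change(time_course):
    """Convert one voxel's raw time course to percent signal change.

    Each value is divided by the voxel's mean intensity; the result is
    then centered on zero and scaled to percent (assumed convention).
    """
    mean = sum(time_course) / len(time_course)
    if mean == 0:
        raise ValueError("mean intensity is zero; cannot normalize")
    return [100.0 * (v / mean - 1.0) for v in time_course]


# Example: a voxel fluctuating by about 10% around a mean of 100.
psc = percent_signal_change([90.0, 100.0, 110.0])
```

Because each voxel is scaled by its own mean, voxels with different baseline intensities become comparable.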

High-Pass Filter

We used a high-pass filter to detrend each time course before further analysis.

Results

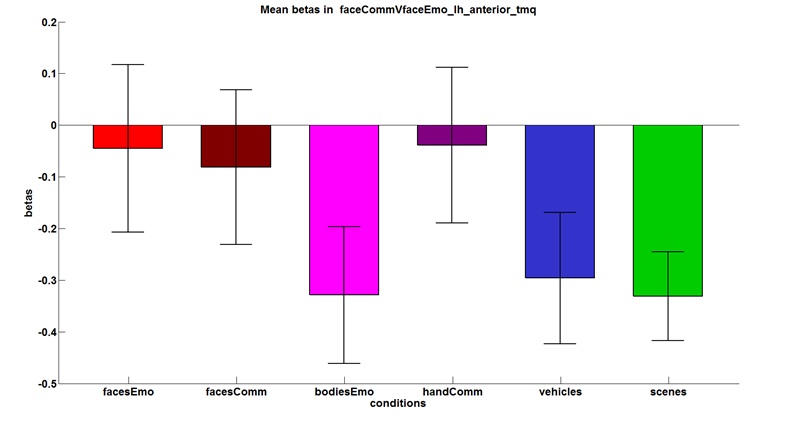

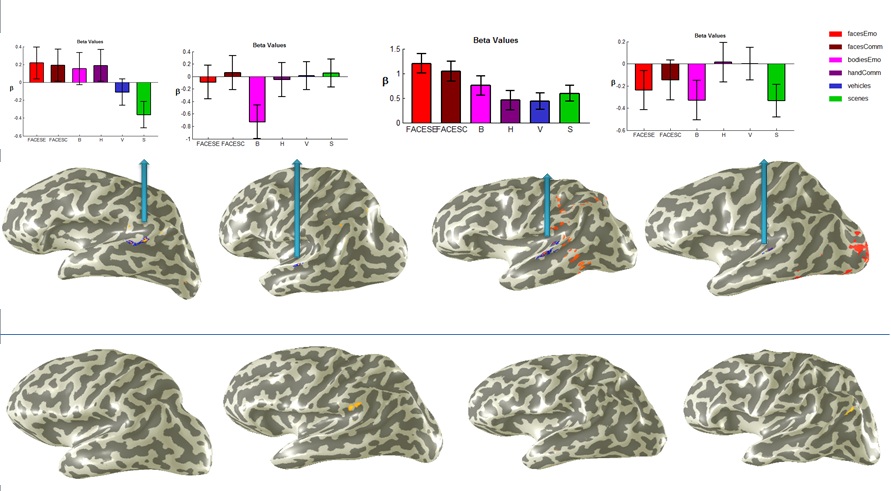

Communicative vs. Emotional Faces Contrast

To get a whole-brain overview of where activation is stronger for communicative faces than for emotional faces, we computed this contrast directly, projected the activation onto the inflated surface of each subject's brain and looked for a consistent pattern of activation.

I then drew an ROI in the anterior STS for each subject, because this was the pattern that appeared consistent across subjects on visual inspection of the activation maps.

After that, I ran a GLM analysis on a run other than the 2 runs used to define the ROI. This keeps the measurement independent of the ROI selection.

From this graph, we found that 4 of the 8 subjects show stronger activation for communicative than for emotional faces in the anterior part of the STS, although the time courses do not seem to be consistent among these ROIs. Only two of them survived the independent measurement by still showing higher activation for communicative versus emotional faces in an independent run.

Averaged time course across ROIs in different subjects

To better quantify the average activation in these ROIs, I extracted the time courses from the ROIs that I selected for the communicative vs. emotional faces contrast and averaged them across subjects.

Averaged time course across more ROIs in different subjects

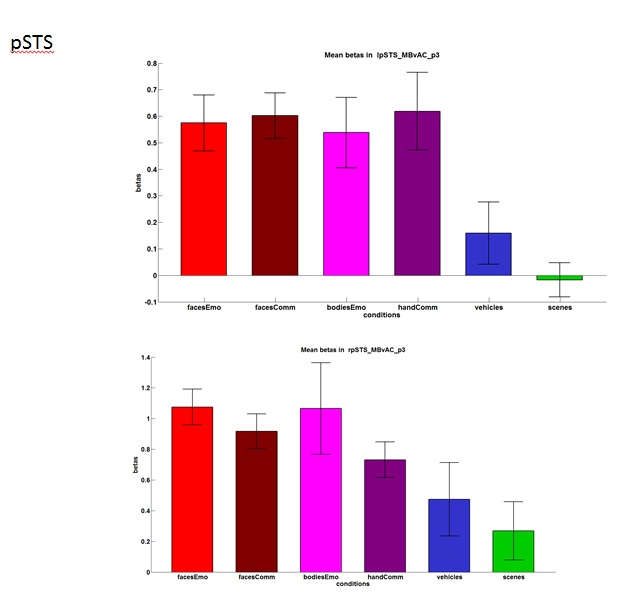

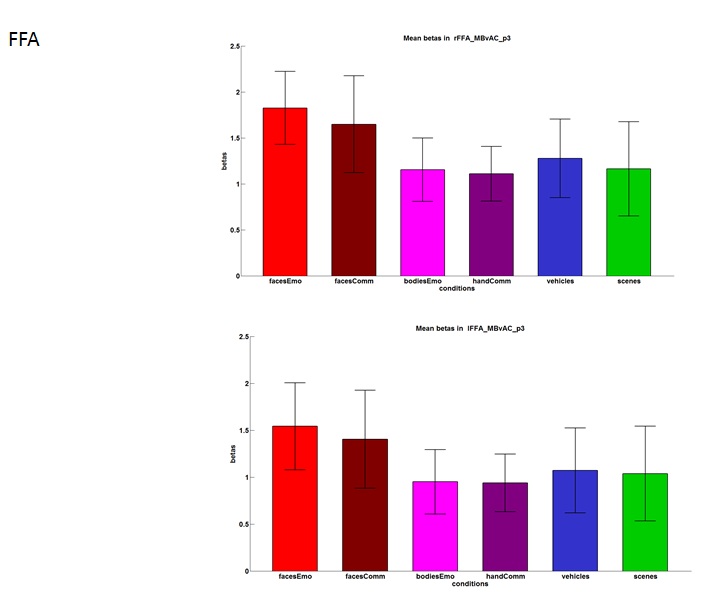

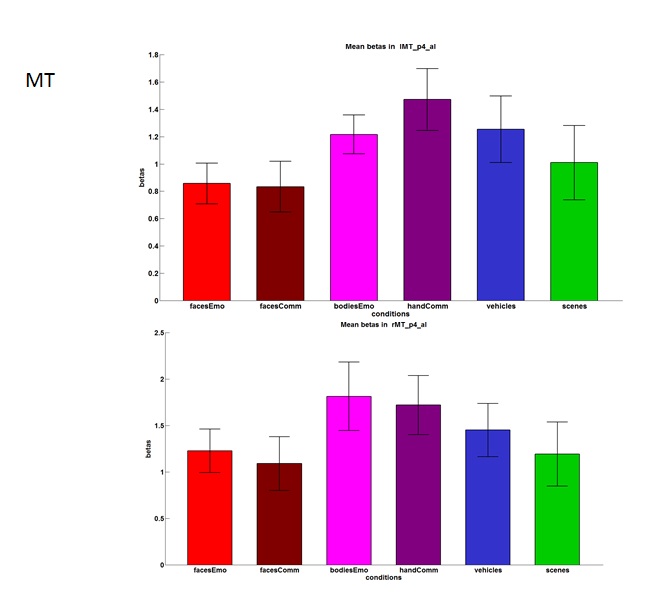

We were also interested in whether communicative and emotional faces differ in other areas that can be localized in a separate experiment.

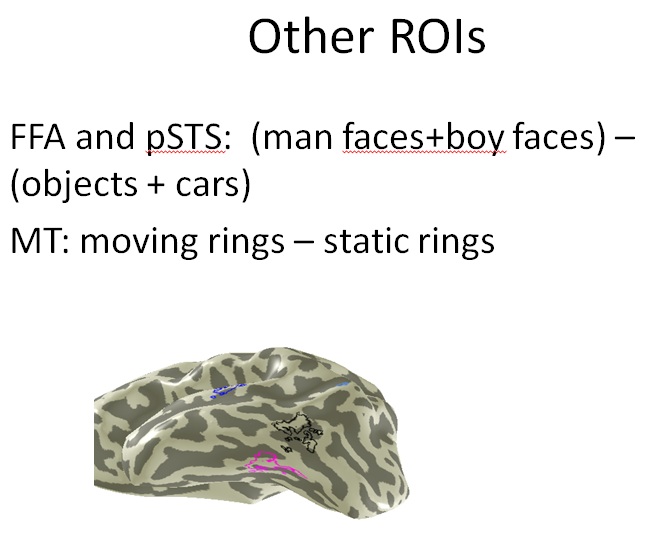

The graph below illustrates the ROIs that we defined in a separate experiment. The black line is MT, magenta is FFA, and light blue is pSTS.

In these pre-defined ROIs, we found no difference in activation between communicative and emotional faces.

Conclusions

We did not find significantly higher activation for communicative versus emotional faces, either in the ROI that I defined or in the pre-defined ROIs. This result does not replicate Bernstein's result, and there are several possible reasons. First, our stimuli differed from theirs: our communicative faces were actually trying to convey information, whereas the speech faces in their experiment were pronouncing nonsense syllables. Second, they may have had more statistical power because they showed subjects more stimuli than we did.

Lynne E. Bernstein, Jintao Jiang, Dimitrios Pantazis, Zhong-Lin Lu, Anand Joshi. 2010. Visual phonetic processing localized using speech and nonspeech face gestures in video and point-light displays. Human Brain Mapping, 32, 1660–1676.